Table of contents

1. Equality constrained optimization (eg. llp)

2. Inequality constraint optimization (eg. svm)

2. Common definitions and properties of traces

synopsis

This blog is used to record various pre-knowledge required for machine learning theory learning and optimization problem derivation.

1. Lagrangian function

In the optimization problem, after the optimization problem and constraints of the method are obtained, the corresponding Lagrangian function is often required and solved. The following is the construction method of the Lagrangian function in several common situations.

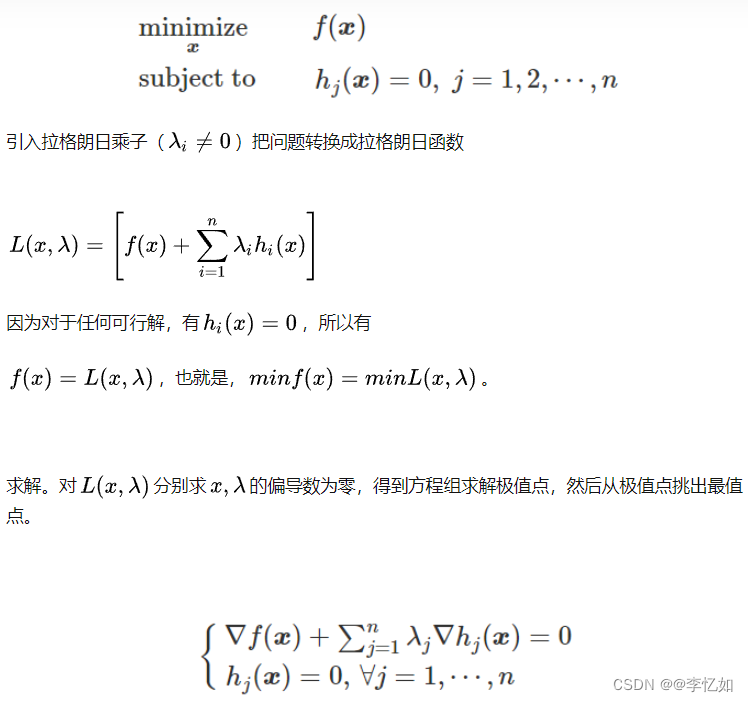

1. Equality constrained optimization (eg. llp)

1.1 No summation

Define its Lagrangian function as:

Find the partial derivative for the corresponding unknown (here α)

Then use eigendecomposition to the above formula to solve the original optimization problem.

1.2 Qualified sums

2. Inequality constraint optimization (eg. svm)

Define its Lagrangian function as:

Find the partial derivative for the corresponding unknown, and set it to 0.

3. Unconstrained (eg. ls)

It directly corresponds to the requirement to solve the unknown to find the partial derivative, and let it be 0.

2. Norm

1. F norm

The F norm is a matrix norm. Assuming that A is an mxn matrix, the corresponding F norm is defined as follows:

2.l2 norm

The l2 norm is the Euclidean distance, which is often used to measure "error". It is defined as follows:

For matrices, the l2 norm is defined as follows:

Tips: The parameter is the absolute value of the corresponding maximum eigenvalue.

3.l1 norm

The l1 norm is the sum of absolute values, defined as follows:

4.l2,1 norm

The l2,1 norm is to first find the l2 norm by column and then find the l1 norm by row, which is defined as follows:

Define the corresponding D matrix, l2, 1 norm can be rewritten as:

where D is a diagonal matrix, and the diagonal is the square of the two-norm of 1/row.

3. Partial guide

The commonly used partial derivative of the trace of the matrix is as follows:

For details, please refer to: The relationship between the Frobenius norm of the matrix and the trace (trace) and its partial derivative rule_Love life's blog

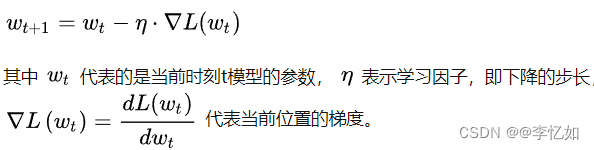

1. Gradient descent method

The gradient descent method is commonly used in iteration and optimization. The calculation formula can be simply understood as finding the corresponding partial derivative, as follows:

The purpose of gradient descent is to continuously update the weight parameter w, so that the value of the loss function L is continuously reduced.

2. Common definitions and properties of traces

1.tr(AB) = tr(BA)

2.tr(A) = tr(A^T)

3.tr(A + B) = tr(A) + tr(B)

4.tr( rA ) = r tr( A ) = tr(rA * I) (i is the identity matrix)

4. Kronecker product

The Kronecker product is an operation between two matrices of any size, and the result is a matrix, denoted by ![]() . The Kronecker product is a special form of the tensor product , as follows:

. The Kronecker product is a special form of the tensor product , as follows: