Transparency is divided into two types: Alpha Test and Alpha Blending. The alpha test only discards pixels whose alpha value falls below a threshold, so the result is not truly transparent. Alpha blending is what produces real transparency.

If you want transparency, you have to talk about draw order. For opaque objects, the Z-test against the depth values recorded in the Z-buffer removes occluded pixels, and with a good draw order there may be no overdraw at all. Transparent objects are different: with Z-write turned off, the Z-test cannot establish any order among them. Ordering has always been a major problem in graphics, especially for truly correct transparent rendering. OIT is required to handle situations such as self-occlusion, self-intersection, cyclic overlap, and A intersecting B, but correctness and performance are currently at odds. How to balance them, and what to give up, differs among the major real-time engines; three.js, for example, does not deal with the problem directly.

Transparent and opaque objects:

For blending to work on multiple objects, we need to draw the furthest object first and the closest object last. Ordinary objects that don't need to be blended can still be drawn normally using the depth buffer, so they don't need to be sorted. But we still have to make sure they are drawn before drawing the (sorted) transparent objects. When drawing a scene with opaque and transparent objects, the general principles are as follows:

- Draw all opaque objects first.

- Sort all transparent objects.

- Draw all transparent objects in sorted order.

Transparent objects get no help from Z-write or early-z among themselves, so only rendering them from back to front gives a correct result. Opaque objects, which can occlude each other, just need to be rendered from front to back.
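The three steps above can be sketched as a single function. This is a minimal illustration, not any engine's real code: the `Drawable` type, its fields, and `buildDrawOrder` are hypothetical names, and the sort uses the distance from the camera to each object's position.

```typescript
type Vec3 = [number, number, number];

// Hypothetical scene item, for illustration only.
interface Drawable {
  name: string;
  transparent: boolean;
  position: Vec3;
}

function distanceSquared(a: Vec3, b: Vec3): number {
  const dx = a[0] - b[0];
  const dy = a[1] - b[1];
  const dz = a[2] - b[2];
  return dx * dx + dy * dy + dz * dz;
}

// Opaque objects first (front to back), then transparent objects (back to front).
function buildDrawOrder(items: Drawable[], camera: Vec3): Drawable[] {
  const opaque = items
    .filter((item) => !item.transparent)
    .sort((a, b) => distanceSquared(a.position, camera) - distanceSquared(b.position, camera));
  const transparent = items
    .filter((item) => item.transparent)
    .sort((a, b) => distanceSquared(b.position, camera) - distanceSquared(a.position, camera));
  return [...opaque, ...transparent];
}
```

Sorting opaque objects is optional for correctness, since the depth buffer resolves them; the front-to-back order is only there to let early-z reject hidden fragments.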

Two types: 1. Transparency test, 2. Transparency blending

Transparency test:

If the alpha value of a fragment does not meet the condition (usually, it is less than some threshold), the fragment is discarded. A discarded fragment is not processed any further and has no effect on the color buffer. For example, in a Unity fragment shader:

// Alpha test

clip (texColor.a - _Cutoff);

// Equivalent to:

// if ((texColor.a - _Cutoff) < 0.0) {

// discard;

// }

Transparency blending:

Enable blending:

glEnable(GL_BLEND);

Blending equation:
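Assuming the standard OpenGL blend equation is what is meant here, it can be written as follows, where the source is the fragment being drawn and the destination is the color already in the color buffer:

```latex
\bar{C}_{result} = \bar{C}_{source} \cdot F_{source} + \bar{C}_{destination} \cdot F_{destination}
```

With the common setting `glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA)`, the factors become the source alpha and its complement:

```latex
\bar{C}_{result} = \bar{C}_{source} \cdot \alpha_{source} + \bar{C}_{destination} \cdot (1 - \alpha_{source})
```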

ThreeJs transparency:

There are two ways to render transparent objects in three.js. One is to set the renderOrder rendering order. The other is to implement the rendering process yourself, which is a sizeable project of its own: you need a clear understanding of the three.js rendering process and then have to customize your own rendering pipeline through its hooks. Adjusting the blending settings is also worth trying, but it treats the symptoms rather than the root cause. Transparent rendering is a problem that three.js has always had.

three.js examples:

http://127.0.0.1:5555/examples/?q=alpha#webgl_materials_blending

http://127.0.0.1:5555/examples/?q=alpha#webgl_materials_blending_custom

http://127.0.0.1:5555/examples/?q=alpha#webgl_materials_physical_transmission

https://threejs.org/manual/#en/transparency

Material properties:

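blending (the blend mode)

depthWrite (whether rendering this material has any effect on the depth buffer)

depthTest (whether depth testing is enabled when rendering this material)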

All objects in three.js are Object3D instances, and the value of Object3D.renderOrder overrides the default rendering order of the **scene graph**, although opaque and transparent objects are still sorted independently of each other. Objects are rendered from the lowest renderOrder to the highest, and the default value is 0. Ray tracing handles transparent objects very well, but here we need real-time rendering. (three.js sorts per object, that is, per mesh in the scene graph, not per pixel.)
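The effect of renderOrder on the draw order can be illustrated with a simplified model. This is a sketch written for this note and not three.js source code: `RenderItem`, `viewDepth`, and `sortRenderList` are hypothetical names, and details such as render groups and material grouping are left out on purpose.

```typescript
// Hypothetical render item; only renderOrder mirrors a real three.js property.
interface RenderItem {
  name: string;
  transparent: boolean;
  renderOrder: number; // mirrors Object3D.renderOrder, default 0
  viewDepth: number; // distance in front of the camera; larger is farther
}

// Opaque and transparent items are sorted as two independent lists.
// Within each list renderOrder wins; depth only breaks ties.
function sortRenderList(items: RenderItem[]): RenderItem[] {
  const compare = (depthSign: number) => (a: RenderItem, b: RenderItem): number =>
    a.renderOrder !== b.renderOrder
      ? a.renderOrder - b.renderOrder
      : depthSign * (a.viewDepth - b.viewDepth);
  const opaque = items.filter((i) => !i.transparent).sort(compare(+1)); // front to back
  const transparent = items.filter((i) => i.transparent).sort(compare(-1)); // back to front
  return [...opaque, ...transparent];
}
```

In this model a transparent object with renderOrder 1 is drawn after every transparent object with renderOrder 0, even if it is the farthest one, which is exactly how renderOrder can be used to force a problematic object to the end of the list.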

Performance issues caused by transparency OverDraw:

What is OverDraw?

Overdraw means that a pixel is drawn more than once within a single frame. In theory, drawing each pixel exactly once per frame is optimal; reducing overdraw mainly addresses bottlenecks in pixel fill rate and computation on the GPU side. Pixel fill rate, as the name implies, is the number of pixels the GPU can write to the frame buffer per second, usually measured in megapixels or gigapixels per second. There is no standard way to calculate it; it is usually obtained by multiplying the number of raster output units by the GPU core clock frequency. Computation is generally measured in FLOPS, the number of floating-point operations that can be performed per second. Every pixel has to go through at least the rasterization, pixel shader, and output-merger stages, and the number of pixels is often far greater than the number of vertices, so reducing the number of pixels that take part in rendering is one of the key points of performance optimization.
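As a rough worked example of the arithmetic described above, the sketch below computes a theoretical fill rate and an average overdraw factor. The function names and all input numbers are made up for illustration; real GPUs rarely reach the theoretical figure.

```typescript
// Theoretical pixel fill rate in gigapixels per second:
// number of raster output units multiplied by the core clock in GHz.
function theoreticalFillRateGPixels(rasterUnits: number, coreClockGHz: number): number {
  return rasterUnits * coreClockGHz;
}

// Average overdraw: fragments written in one frame divided by the pixels on screen.
// A value of 1 means every pixel was written exactly once.
function overdrawFactor(fragmentsWritten: number, width: number, height: number): number {
  return fragmentsWritten / (width * height);
}

// Hypothetical GPU: 64 raster output units at 1.5 GHz.
const fillRate = theoreticalFillRateGPixels(64, 1.5);

// Hypothetical frame: three full-screen translucent layers at 1920x1080.
const overdraw = overdrawFactor(1920 * 1080 * 3, 1920, 1080);
```

With these inputs `fillRate` is 96 gigapixels per second and `overdraw` is 3, meaning each pixel was written three times in that frame.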

Transparency: use fewer transparent and translucent materials. Modern rendering pipelines are generally well optimized, and the main cost I can think of is that translucency leads to overdraw. Post-processing also has very high overdraw, but post-processing performance problems are easier to optimize than translucent special effects, and translucent fragments hidden behind opaque geometry should already be rejected by the early-z pass.

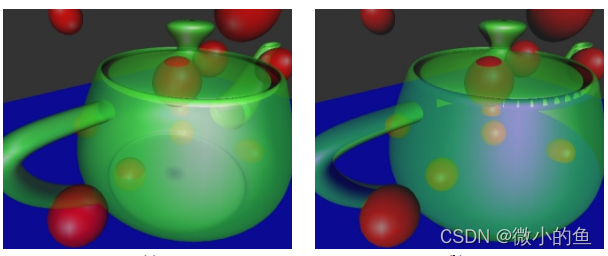

Multi-Layer Transparent Depth Conflict

As shown in the figure:

Common cases of out-of-order rendering:

Order Independent Transparency (OIT)

The usual way to achieve translucent rendering is, once the opaque objects in the scene have been rendered, to sort the semi-transparent objects by their distance to the camera and render them one by one, starting from the object farthest from the camera (the one with the largest Z value). Each object is blended with the color already in the output buffer as it is rendered. For 2D translucent rendering this is sufficient. In 3D scenes, however, the sort is based on the position of each object's pivot point, so layering happens per object, and cases such as cyclic overlap between objects cannot be rendered correctly. (OIT builds a linked list in the shader, then sorts and blends it. There are complete examples in the Red Book.)

If your engine really needs top-notch transparency, here are some techniques worth looking into:

The original 2001 Depth Peeling paper: pixel-perfect, but not fast.

Dual Depth Peeling: a minor improvement.

Several papers related to bucket sorting: store the fragments in an array and do the depth sorting in the shader.

ATI's Mecha Demo: fast, but difficult to implement and requires the latest hardware. Uses a linked list to store fragments.

Cyril Crassin's variation on ATI's technique: even more difficult to implement.

In the old days there was the painter's algorithm: it essentially draws objects from far to near, so that occlusion comes out correct. With the Z-test available, opaque objects are instead sorted from near to far to make full use of the hardware's early-z feature (on mobile this holds for both TBR and TBDR). The far-to-near reasoning still applies to translucent blending, because the blended result depends on the order in which the layers are drawn.

The rest of this note covers graphics library and hardware limitations, the difficulties they create, and why transparency is so tricky. It then looks back at some of the better-known transparency techniques of the past two decades that are relevant to current hardware.

Graphics library and hardware limitations:

The source of the problem is the combination of depth testing and color blending. In the fragment stage there is nothing like the Z-buffer and Z-test used for opaque objects that can tell the graphics library which pixels are fully visible and which are only semi-visible. One of the reasons is that there is no buffer that can store several depth values, or their order, for the same pixel. Because pixels at the same screen position occlude one another, there needs to be a way to store all the pixel layers that fall on the same screen coordinate.

Ordered Transparency:

The most convenient solution is to sort transparent objects so that they are drawn either from farthest to closest or from closest to farthest (relative to the camera's position). That way depth testing does not affect the outcome for pixels that are drawn after or before, but over or under, a farther or closer object. Sorting can be expensive, however, and since most 3D applications render in real time, the cost is even more noticeable when you sort every frame. So we are going to look into order-independent transparency techniques and find one that better suits our purposes and our pipeline, so that we don't have to sort objects before drawing.
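To see why draw order matters for ordinary alpha blending in the first place, the sketch below blends two half-transparent layers over a black background in both orders. The helper `blendOver` is a hypothetical name; it implements the common source-alpha, one-minus-source-alpha blend.

```typescript
type RGB = [number, number, number];

// Standard alpha blend: result = src * alpha + dst * (1 - alpha).
function blendOver(dst: RGB, src: RGB, alpha: number): RGB {
  return [
    src[0] * alpha + dst[0] * (1 - alpha),
    src[1] * alpha + dst[1] * (1 - alpha),
    src[2] * alpha + dst[2] * (1 - alpha),
  ];
}

const background: RGB = [0, 0, 0];
const red: RGB = [1, 0, 0];
const blue: RGB = [0, 0, 1];

// Same two layers, same alpha, different draw order.
const redThenBlue = blendOver(blendOver(background, red, 0.5), blue, 0.5);
const blueThenRed = blendOver(blendOver(background, blue, 0.5), red, 0.5);
// redThenBlue is [0.25, 0, 0.5]; blueThenRed is [0.5, 0, 0.25]
```

The layer drawn last always contributes the most, so the two orders give different colors. Only the order that matches the real depth arrangement is correct.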

The goal of OIT techniques is to eliminate the need to sort transparent objects before drawing. Depending on the technique, some still have to sort fragments to get accurate results, but only at a later stage, once all draw calls have been issued. Others need no sorting at all, but their results are approximate.

Some more advanced techniques, invented to overcome the limitations of rendering transparent surfaces, explicitly use buffers that can hold multiple layers of pixel information (such as linked lists or 3D arrays indexed as [x][y][z]) and sort the pixels on the GPU rather than on the CPU, usually because of its parallel processing capabilities.
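The idea of a buffer that holds several layers per pixel can be modelled on the CPU. The sketch below is only an illustration of the data flow, not a GPU implementation: `Fragment`, `createFragmentBuffer`, `insertFragment`, and `resolvePixel` are hypothetical names, and a plain array per pixel stands in for the linked list a shader would build.

```typescript
type RGB = [number, number, number];

interface Fragment {
  color: RGB;
  alpha: number;
  depth: number; // larger is farther from the camera
}

// One fragment list per pixel, stored row by row.
function createFragmentBuffer(width: number, height: number): Fragment[][] {
  return Array.from({ length: width * height }, () => [] as Fragment[]);
}

// "Geometry pass": fragments arrive in arbitrary draw order and are only stored.
function insertFragment(buffer: Fragment[][], width: number, x: number, y: number, f: Fragment): void {
  buffer[y * width + x].push(f);
}

// "Resolve pass": sort this pixel's fragments far to near, then blend them in that order.
function resolvePixel(buffer: Fragment[][], width: number, x: number, y: number, background: RGB): RGB {
  const sorted = [...buffer[y * width + x]].sort((a, b) => b.depth - a.depth);
  let out: RGB = background;
  for (const f of sorted) {
    out = [
      f.color[0] * f.alpha + out[0] * (1 - f.alpha),
      f.color[1] * f.alpha + out[1] * (1 - f.alpha),
      f.color[2] * f.alpha + out[2] * (1 - f.alpha),
    ];
  }
  return out;
}
```

Because the sort happens per pixel after everything has been submitted, the final color no longer depends on the order of the draw calls. The price is memory that grows with the number of overlapping layers.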

The A-buffer is a computer graphics technique introduced in 1984 that stores per-pixel lists of fragment data (including micropolygon information) in the REYES software rasterizer. It was originally designed for anti-aliasing but also supports transparency. There is also hardware that takes care of this task itself, which is the most convenient way for developers to get transparency out of the box. The SEGA Dreamcast is one of the few consoles with automatic per-pixel translucency sorting implemented in hardware.

Depth Peeling:

Depth peeling comes from NVIDIA. Its core idea is to start from the camera and, over N (configurable) passes, split the translucent objects in the scene into N layers by depth, record the color and depth of each layer separately, and then blend the layer colors together. Later, to improve peeling performance, NVIDIA proposed peeling the front layer and the back layer in the same pass, which is called Dual Depth Peeling. Depth peeling is a very slow OIT method that consumes a lot of video memory (although the amount to allocate is known in advance), but it places no high demands on the hardware.

The overhead is that two depth buffers are required. The rendering process requires N passes, where N is the number of translucent layers. The improved Dual Depth Peeling algorithm requires N/2+1 passes.
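The pass structure of basic (front-to-back) depth peeling can be simulated for a single pixel. This is a CPU sketch under simplifying assumptions, not NVIDIA's implementation: `Fragment` and `peelAndComposite` are hypothetical names, `lastDepth` plays the role of the second depth buffer, and fragments at exactly the same depth are treated as one layer.

```typescript
type RGB = [number, number, number];

interface Fragment {
  color: RGB;
  alpha: number;
  depth: number; // larger is farther from the camera
}

// Peels one layer per pass, nearest first, and composites front to back.
function peelAndComposite(fragments: Fragment[], background: RGB): { color: RGB; layers: number } {
  let color: RGB = [0, 0, 0];
  let coverage = 0; // accumulated opacity of the layers peeled so far
  let lastDepth = -Infinity; // depth of the previously peeled layer
  let layers = 0;
  for (;;) {
    // One pass over all fragments: keep the nearest one strictly behind the last layer.
    let nearest: Fragment | undefined = undefined;
    for (const f of fragments) {
      if (f.depth > lastDepth && (nearest === undefined || f.depth < nearest.depth)) {
        nearest = f;
      }
    }
    if (nearest === undefined) break; // nothing left to peel
    const weight = (1 - coverage) * nearest.alpha;
    color = [
      color[0] + weight * nearest.color[0],
      color[1] + weight * nearest.color[1],
      color[2] + weight * nearest.color[2],
    ];
    coverage += weight;
    lastDepth = nearest.depth;
    layers += 1;
  }
  // Whatever is not covered by the peeled layers shows the background.
  const rest = 1 - coverage;
  return {
    color: [
      color[0] + rest * background[0],
      color[1] + rest * background[1],
      color[2] + rest * background[2],
    ],
    layers,
  };
}
```

Each loop iteration corresponds to one full render pass over the transparent geometry, which is why the cost grows with the number of layers. Front-to-back compositing gives the same color as sorted back-to-front blending.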

Possible problems caused by Depth peeling:

- Reduced efficiency and unstable overhead. (number of passes depends on camera angle, skeletal animation, etc.)

- Complexity introduced by the program itself. (How do you determine how many translucent layers there currently are? With occlusion queries?)

- There are no existing reference products, only technical demos.

Depth peeling is a translucent rendering scheme that has been researched relatively recently, and the DX11 implementation based on linked lists is no small breakthrough. It may become a more practical solution in the future.

NVIDIA's algorithm:

OrderIndependentTransparency

DualDepthPeeling