MPI (Message Passing Interface) is not a language but a library specification: a standard that defines a message-passing function library. The MPI standard defines a set of portable programming interfaces that can be called directly from Fortran and C. C++ programs use the C interface.

There are many implementations of MPI. MPICH is one of the most important. Together with its derivatives it is among the most widely used MPI implementations in the world, including on supercomputers.

The most basic MPI operations include:
- sending a message (send)
- receiving a message (receive)
- synchronizing processes (barrier)
- reduction (reduce)

This article briefly introduces sending and receiving messages. For more on MPI, please refer to other related materials.

1. Basic MPI concepts and related functions

Communicator

A communicator can be understood as a group of processes that are allowed to communicate with each other. All MPI communication must take place within some communicator.

MPI provides a default communicator, MPI_COMM_WORLD. Once MPI_Init() has been called, it contains all the MPI processes started for the program. A process obtains the number of processes in a communicator with MPI_Comm_size().

Every process in a communicator has a unique serial number, its rank, that identifies it. Ranks range from 0 to (number of processes in the communicator - 1).

int MPI_Comm_size(MPI_Comm comm, int* size)

// Get the number of processes in a communicator: comm is the communicator (also called the communication domain), and the process count is stored in size

Process number (rank)

A rank identifies a process within a communicator. The same process can have different ranks in different communicators. A process's rank is assigned when the communicator is created.
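Because ranks run from 0 to size - 1, a common use of rank and size is to give each process its own share of the work. The following is a minimal sketch of one such split, a contiguous block decomposition. The helper `block_range` is hypothetical and is not part of MPI. In a real MPI program, `rank` and `size` would come from MPI_Comm_rank and MPI_Comm_size on MPI_COMM_WORLD.

```cpp
#include <algorithm>
#include <stdexcept>
#include <utility>

// Hypothetical helper (not part of MPI): the half-open index range [begin, end)
// handled by process `rank` when n >= 0 work items are split into `size` contiguous blocks.
// The first n % size ranks get one extra item, so block lengths differ by at most one.
std::pair<int, int> block_range(int rank, int size, int n) {
    if (size <= 0 || rank < 0 || rank >= size) {
        throw std::out_of_range("rank must lie in [0, size - 1]");
    }
    const int base = n / size;  // items every rank gets
    const int rem = n % size;   // leftover items, one each for ranks 0 .. rem-1
    const int begin = rank * base + std::min(rank, rem);
    const int end = begin + base + (rank < rem ? 1 : 0);
    return {begin, end};
}
```

For example, with 10 items and 4 processes, ranks 0 to 3 get [0, 3), [3, 6), [6, 8) and [8, 10).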

int MPI_Comm_rank(MPI_Comm comm, int* rank)

// Get the rank of the calling process in a communicator: comm is the communicator, and the rank is stored in rank

Message

Data is passed between processes through messages, which is why MPI suits distributed-memory systems. The parameters of the send and receive functions fall into two parts: the data and the envelope. The envelope consists of three parts: the process number (rank), the message tag, and the communicator.

int MPI_Send(void *buf, int count, MPI_Datatype datatype, int dest, int tag, MPI_Comm comm)

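// Message send function; its parameters divide into data and envelope

// Data: <address (buf), number of elements (count), datatype>

// Envelope: <destination (dest), tag, communicator (comm)>

// count is the number of elements of type datatype; dest ranges from 0 to (number of processes - 1); tag ranges from 0 to MPI_TAG_UB and is used to distinguish messages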

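int MPI_Recv(void* buf, int count, MPI_Datatype datatype, int source, int tag, MPI_Comm comm, MPI_Status *status)

// The receive buffer must hold at least count elements of the type given by datatype. If the incoming message is larger than the buffer, an overflow error (MPI_ERR_TRUNCATE) occurs.

// Message matching rules:

// 1. The envelopes must match: the receiver's source is the sender's rank, the sender's dest is the receiver's rank, and tag and comm are the same on both sides.

// 2. If source is MPI_ANY_SOURCE, a message from any process is accepted.

// 3. If tag is MPI_ANY_TAG, a message with any tag value is accepted.

// In blocking communication, a process that sends to itself (source == dest) with MPI_Send and then calls MPI_Recv may deadlock, because MPI_Send is allowed to wait until a matching receive has been posted.

// Messages are passed only within a single communicator.

// Each MPI_Send must name exactly one receiver; there is no wildcard for dest.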
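The matching rules above can be modeled in a few lines. The following is a minimal sketch in plain C++, not MPI's implementation. `Envelope`, `RecvRequest`, `matches`, `ANY_SOURCE` and `ANY_TAG` are hypothetical names. The two constants only stand in for MPI_ANY_SOURCE and MPI_ANY_TAG, whose real values are defined in mpi.h, and the communicator is reduced to an integer id.

```cpp
// Hypothetical stand-ins for MPI_ANY_SOURCE / MPI_ANY_TAG (the real constants come from mpi.h)
constexpr int ANY_SOURCE = -1;
constexpr int ANY_TAG = -1;

// Envelope of a message that has been sent
struct Envelope {
    int source;  // rank of the sender
    int dest;    // rank of the intended receiver
    int tag;     // message tag
    int comm;    // hypothetical integer id standing in for an MPI_Comm
};

// What a posted receive asks for
struct RecvRequest {
    int self;    // rank of the receiving process
    int source;  // expected sender rank, or ANY_SOURCE
    int tag;     // expected tag, or ANY_TAG
    int comm;    // communicator id; must be equal, there is no wildcard
};

// A message matches a receive when the communicators agree, the message is addressed
// to the receiver, and source and tag agree unless the receive uses a wildcard.
bool matches(const Envelope& msg, const RecvRequest& req) {
    return msg.comm == req.comm
        && msg.dest == req.self
        && (req.source == ANY_SOURCE || req.source == msg.source)
        && (req.tag == ANY_TAG || req.tag == msg.tag);
}
```

For instance, a message from rank 2 to rank 0 with tag 7 matches a receive on rank 0 that asks for source 2 and tag 7, or for any source and any tag. It does not match a receive that asks for tag 8 or uses another communicator.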

int MPI_Get_count(const MPI_Status *status, MPI_Datatype datatype, int *count)

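// Query the length of a received message: count returns the number of elements of type datatype that were received. This count is stored in a field of the MPI_Status structure that users cannot access directly.

Points to note: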

The sending process must specify a valid destination process

The receiving process must specify a valid source process (or MPI_ANY_SOURCE)

The sending and receiving processes must belong to the same communicator

The send and receive tags must match (or the receive must use MPI_ANY_TAG)

The receive buffer must be large enough to hold the incoming message
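The length query and the buffer-size rule can be sketched arithmetically. The model below is plain C++, not MPI. It follows the MPI standard's behavior for MPI_Get_count: the count is the received size divided by the datatype size, a zero-size datatype gives 0, and a size that is not a whole number of elements gives MPI_UNDEFINED. `get_count`, `fits_in_buffer` and `UNDEFINED_COUNT` are hypothetical names, and `UNDEFINED_COUNT` only stands in for MPI_UNDEFINED.

```cpp
#include <cstddef>

// Hypothetical stand-in for MPI_UNDEFINED (the real constant comes from mpi.h)
constexpr int UNDEFINED_COUNT = -1;

// Model of MPI_Get_count: how many whole elements of elem_size bytes
// a received message of received_bytes bytes contains.
int get_count(std::size_t received_bytes, std::size_t elem_size) {
    if (elem_size == 0) return 0;                                   // zero-size datatype
    if (received_bytes % elem_size != 0) return UNDEFINED_COUNT;   // not a whole number of elements
    return static_cast<int>(received_bytes / elem_size);
}

// Model of the receive-side rule: a message with more elements than the
// receive count allows would be an overflow (MPI_ERR_TRUNCATE) error.
bool fits_in_buffer(int message_count, int recv_count) {
    return message_count <= recv_count;
}
```

In a real program, after `MPI_Recv(buf, 100, MPI_INT, MPI_ANY_SOURCE, MPI_ANY_TAG, MPI_COMM_WORLD, &status)` the actual length would be read with `MPI_Get_count(&status, MPI_INT, &count)`.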

2. MPI environment configuration

2.1 gcc/g++ environment configuration

Ubuntu does not install gcc/g++ or other build tools by default. The simplest way to set them up is the build-essential package, which pulls in the gcc and g++ compilers, make, and the C library development headers.

sudo apt install build-essential

2.2 MPICH environment configuration

You can install MPICH directly with the following command:

sudo apt install mpich

2.3 View version information

Check the installed versions by entering the following commands at the command line:

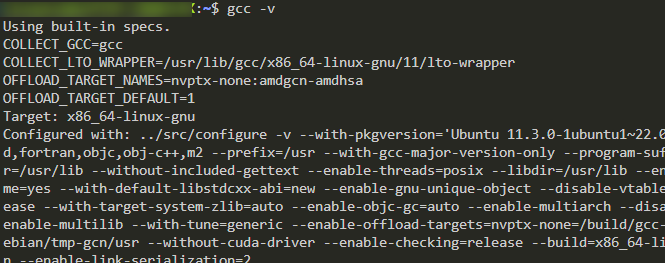

Check the gcc version (C compiler)

gcc -v

Check the g++ version (C++ compiler)

g++ -v

Check the mpicc version (MPI programs written in C are compiled with mpicc)

mpicc -v

Check the mpic++ version (MPI programs written in C++ are compiled with mpic++)

mpic++ -v

3. Compiling and running MPI programs

3.1 Compilation of the program

An MPI program can be compiled with the following commands.

MPI programs written in C:

mpicc -o XXX XXX.c

MPI programs written in C++:

mpic++ -o XXX XXX.cpp

3.2 Running the program

An MPI program is run with:

mpirun -np Y ./XXX

// Y is a number: the number of processes to run in parallel.

// XXX is the compiled executable (the name given after -o above).

3.3 HelloWorld sample program

#include <iostream>
#include "mpi.h"   // every MPI program must include the mpi.h header
using namespace std;
int main(int argc, char** argv)
{
    MPI_Init(&argc, &argv);
    cout << "Hello world!" << endl;
    MPI_Finalize();
    return 0;
}

The program simply makes each process print "Hello world!". The output is as follows:

4. MPI data types (and the corresponding C types)

| MPI data type | C data type |
|---|---|
| MPI_CHAR | char |
| MPI_SHORT | short int |
| MPI_INT | int |
| MPI_LONG | long |
| MPI_FLOAT | float |
| MPI_DOUBLE | double |
| MPI_LONG_DOUBLE | long double |
| MPI_BYTE | (no C equivalent) |
| MPI_PACKED | (no C equivalent) |
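In C++ code the table above is often encoded once as a compile-time lookup, so the datatype argument cannot drift out of sync with the buffer's C type. The following is a minimal sketch of that pattern. `mpi_type_name` and `mpi_type_of` are hypothetical names. Real code would return the MPI_Datatype handle itself, such as MPI_INT. This sketch returns the handle's name as a string only so it compiles without mpi.h.

```cpp
#include <string>

// Hypothetical compile-time lookup from a C type to the matching MPI datatype name.
// The primary template has no definition, so using an unsupported type is a compile error.
template <typename T>
struct mpi_type_name;

template <> struct mpi_type_name<char>        { static const char* get() { return "MPI_CHAR"; } };
template <> struct mpi_type_name<short>       { static const char* get() { return "MPI_SHORT"; } };
template <> struct mpi_type_name<int>         { static const char* get() { return "MPI_INT"; } };
template <> struct mpi_type_name<long>        { static const char* get() { return "MPI_LONG"; } };
template <> struct mpi_type_name<float>       { static const char* get() { return "MPI_FLOAT"; } };
template <> struct mpi_type_name<double>      { static const char* get() { return "MPI_DOUBLE"; } };
template <> struct mpi_type_name<long double> { static const char* get() { return "MPI_LONG_DOUBLE"; } };

// Convenience wrapper that deduces T from a value
template <typename T>
std::string mpi_type_of(const T&) {
    return mpi_type_name<T>::get();
}
```

For example, `mpi_type_of(3.0)` yields "MPI_DOUBLE", because 3.0 is a double literal.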

5. Basic MPI functions

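int MPI_Init(int *argc, char ***argv)

// Initialization function. Apart from a few query functions such as MPI_Initialized, MPI_Init must be the first MPI call in the program. It sets up the MPI environment and marks the start of the parallel code. This is why main is usually declared with argc and argv: their addresses are passed to MPI_Init. MPI-2 and later also accept MPI_Init(NULL, NULL).

int MPI_Finalize(void)

// Finalization function. It must be the last MPI call in the program: it shuts down the MPI environment and marks the end of the parallel code. Apart from a few query functions such as MPI_Finalized, no MPI function may be called after it. The standard only guarantees that rank 0 of MPI_COMM_WORLD returns from it, so very little work should follow it.

int MPI_Initialized(int* flag)

// One of the few functions that may be called before MPI_Init: it sets flag to true if MPI has already been initialized and to false otherwise.

int MPI_Get_processor_name(char* name, int* resultlen)

// Get the name of the processor (host) the process runs on. The name is stored in name and its length in resultlen. The caller must provide a name buffer of at least MPI_MAX_PROCESSOR_NAME characters.

double MPI_Wtime(void)

// Return the wall-clock time in seconds, as a double, measured from some arbitrary point in the past. Wall-clock time is the real time that passes while the process runs, including time it spends blocked or waiting. The difference between two calls therefore gives the elapsed run time of a code section.

6. Several simple sample programs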

6.1 Print

Print each process's information and running time

#include <iostream>
#include <cstdio>
#include "mpi.h"
using namespace std;
int main(int argc, char** argv)
{
    int pid, pnum, namelen;
    double starttime;
    char processor_name[MPI_MAX_PROCESSOR_NAME];
    MPI_Init(&argc, &argv);                            // initialize MPI
    starttime = MPI_Wtime();                           // start time
    MPI_Comm_size(MPI_COMM_WORLD, &pnum);              // number of processes in the communicator
    MPI_Comm_rank(MPI_COMM_WORLD, &pid);               // rank of this process
    MPI_Get_processor_name(processor_name, &namelen);  // name of the processor (host)
    // print information
    cout << "this is " << pid << " of " << pnum << " and my name is " << processor_name << endl;
    cout << "this is " << pid << " and spend ";
    printf("%.6lf s\n", MPI_Wtime() - starttime);
    MPI_Finalize();                                    // shut down MPI and exit
    return 0;
}

Command line:

mpic++ -o print.o print.cpp

mpirun -np 4 ./print.o
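The example above times each process separately with MPI_Wtime. A parallel run finishes only when its slowest process does, so the figure usually reported is the maximum over all ranks. In MPI that value could be collected on rank 0 with `MPI_Reduce(&local, &slowest, 1, MPI_DOUBLE, MPI_MAX, 0, MPI_COMM_WORLD)`. The following is a minimal serial sketch of the same idea in standard C++. `WallTimer` and `parallel_elapsed` are hypothetical names: WallTimer plays the role of a pair of MPI_Wtime calls using std::chrono::steady_clock, and parallel_elapsed takes the maximum of per-rank times.

```cpp
#include <algorithm>
#include <chrono>
#include <vector>

// Hypothetical serial stand-in for "t0 = MPI_Wtime(); ... MPI_Wtime() - t0":
// measures elapsed wall-clock seconds with a monotonic clock.
class WallTimer {
public:
    WallTimer() : start_(std::chrono::steady_clock::now()) {}
    double elapsed_seconds() const {
        std::chrono::duration<double> d = std::chrono::steady_clock::now() - start_;
        return d.count();
    }
private:
    std::chrono::steady_clock::time_point start_;
};

// The wall time of a parallel run is set by its slowest process (an MPI_MAX reduction).
double parallel_elapsed(const std::vector<double>& per_rank_seconds) {
    if (per_rank_seconds.empty()) return 0.0;
    return *std::max_element(per_rank_seconds.begin(), per_rank_seconds.end());
}
```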

6.2 Calculation of π
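The program below approximates π with the Leibniz series π/4 = 1 - 1/3 + 1/5 - ..., giving rank r the terms i = r, r + size, r + 2·size, and so on. This cyclic decomposition can be checked serially before running under MPI. The following is a minimal plain C++ sketch. `partial_sum`, `pi_estimate` and `abs_error` are hypothetical helpers, and the loop over ranks stands in for the MPI_Send/MPI_Recv collection on rank 0.

```cpp
#include <cmath>

// Partial Leibniz sum computed by one rank: terms i = rank, rank + size, ... below n
long double partial_sum(int rank, int size, int n) {
    long double sum = 0.0L;
    for (int i = rank; i < n; i += size) {
        long double term = 1.0L / (2.0L * i + 1.0L);
        sum += (i % 2 == 0) ? term : -term;  // even i adds, odd i subtracts
    }
    return sum;
}

// Serial model of rank 0's job: add every rank's partial sum, then multiply by 4
long double pi_estimate(int size, int n) {
    long double total = 0.0L;
    for (int rank = 0; rank < size; ++rank) total += partial_sum(rank, size, n);
    return 4.0L * total;
}

// Absolute error against a reference value of pi
long double abs_error(long double estimate) {
    const long double reference_pi = 3.141592653589793238462643383279L;
    return std::fabs(reference_pi - estimate);
}
```

The partial sums only regroup the same n terms, so `pi_estimate(1, n)` and `pi_estimate(4, n)` agree up to rounding. Because the series alternates, the error is below 4/(2n + 1).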

#include <iostream>
#include <cstdio>
#include <cmath>
#include "mpi.h"
using namespace std;
int main(int argc, char** argv)
{
    // Usage: mpirun -np 4 ./calculatePI.o 800  (800 is read from argv[1] as the number of terms n)
    long double pi = 0, answer = 0;
    const long double PI = 3.141592653589793238462643383279L;  // reference value; the L suffix keeps long double precision
    int size, id, n = 1000;
    MPI_Status status;
    MPI_Init(&argc, &argv);
    double starttime = MPI_Wtime();  // start timing (MPI_Wtime may only be called after MPI_Init)
    // if n is given on the command line, use it
    if (argc == 2) sscanf(argv[1], "%d", &n);
    // get process information
    MPI_Comm_size(MPI_COMM_WORLD, &size);
    MPI_Comm_rank(MPI_COMM_WORLD, &id);
    // if n is smaller than the number of processes, exit
    if (n < size)
    {
        if (id == 0) cout << "n is too small, please enter a larger value" << endl;
        MPI_Finalize();
        return 0;
    }
    // each process sums terms i = id, id + size, ... of pi/4 = 1 - 1/3 + 1/5 - ... (n terms: i = 0 .. n-1)
    for (int i = id; i < n; i += size)
    {
        long double tempans = 1.0L / (2.0L * i + 1.0L);
        if (i % 2 == 0) answer += tempans;
        else answer -= tempans;
    }
    if (id == 0)  // master process: collect the partial sums from all other ranks
    {
        long double recvbuf;
        pi = answer;
        for (int i = 1; i < size; i++)
        {
            MPI_Recv(&recvbuf, 1, MPI_LONG_DOUBLE, MPI_ANY_SOURCE, 0, MPI_COMM_WORLD, &status);
            pi += recvbuf;
        }
    }
    else  // worker process: send the partial sum to rank 0 and exit
    {
        MPI_Send(&answer, 1, MPI_LONG_DOUBLE, 0, 0, MPI_COMM_WORLD);
        MPI_Finalize();
        return 0;
    }
    pi *= 4;
    double elapsed = MPI_Wtime() - starttime;
    cout << "The master process took " << elapsed << " s; the computed value of PI is: ";
    printf("%.20Lf\n", pi);
    cout << "n = " << n << ", time = " << elapsed << " s, pi = ";
    printf("%.20Lf, error = %.20Lf\n", pi, std::fabs(PI - pi));
    MPI_Finalize();
    return 0;
}

Command line:

mpic++ -o calculatePI.o calculatePI.cpp

mpirun -np 1 ./calculatePI.o 200000000

mpirun -np 4 ./calculatePI.o 200000000

The output is:

If anything here is inappropriate or wrong, please correct me. Thank you!