| 1. Background knowledge |

As the name implies, a process is a program that is being executed. A process is an abstraction of a running program.

The concept of a process originates from the operating system. It is the operating system's core concept, and one of the oldest and most important abstractions the operating system provides. Everything else in the operating system revolves around it.

So to really understand processes, you first need to understand the operating system.

PS: Thanks to the process abstraction, (pseudo) concurrency is possible even when only one CPU is available (as on early computers). A single CPU is turned into multiple virtual CPUs through multiprogramming (multi-channel technology), that is, time multiplexing and space multiplexing plus hardware-supported isolation. Without the process abstraction, modern computing would not exist.

| 2. What is a process |

Process: a task that is in progress. The CPU is responsible for executing the task.

Example (single core + multiprogramming, achieving concurrent execution of multiple processes):

Suppose there are many tasks to do over a period of time: preparing a Python lesson, writing a book, dating, and climbing the ranks in Honor of Kings. Only one task can be done at any given moment (a CPU can only do one task at a time), so how can the tasks appear to run concurrently? Prepare the lesson for a while, chat with the girlfriend for a while, play Honor of Kings for a while, and so on. This ensures that every task keeps making progress.

| 3. The difference between a process and a program |

A program is just a pile of code; a process is a running instance of that program.

Take cake as an example:

A cake recipe is the program (an algorithm described in a suitable form).

The baker is the processor.

The ingredients for the cake are the input data.

The process is the sum of the baker's actions: reading the recipe, fetching the ingredients, and baking the cake.

Note: if the same program is executed twice, that makes two processes. For example, you can open the Baofengyingyin media player twice, with one instance playing one movie and the other playing a different video.
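The note above can be checked with a small sketch. It assumes Python's standard subprocess module and a one-line child program invented for illustration; it launches the same program twice and collects the process ID each run reports.

```python
import subprocess
import sys

# Hypothetical one-line "program": it only prints its own process ID.
CHILD_CODE = "import os; print(os.getpid())"

def run_same_program_twice():
    """Start two instances of the same program and return their PIDs."""
    procs = [
        subprocess.Popen([sys.executable, "-c", CHILD_CODE],
                         stdout=subprocess.PIPE, text=True)
        for _ in range(2)
    ]
    # Both children are started before either one is waited for.
    return [int(p.communicate()[0]) for p in procs]

if __name__ == "__main__":
    print(run_same_program_twice())  # two different PIDs
```

The code is identical for both runs, yet the operating system treats them as two separate processes with two separate IDs.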

| 4. Concurrency and Parallelism |

Whether it is parallelism or concurrency, to the user the tasks appear to run 'simultaneously'. Whether it is a process or a thread, it is just a task; the CPU is what actually does the work, and a CPU can execute only one task at any given instant.

1. Concurrency: pseudo-parallelism, that is, multiple processes only appear to run at the same time. A single CPU plus multiprogramming is enough to achieve concurrency.

2. Parallelism: multiple processes really run at the same time, which is only possible with multiple CPUs.

With a single core, multiprogramming can be used; with multiple cores, each core can also use multiprogramming (multiprogramming applies to a single core). Suppose there are four cores and six tasks. At any one moment four tasks are executing, say on cpu1, cpu2, cpu3, and cpu4 respectively. Once task 1 encounters I/O, it is forced to stop executing, and task 5 gets cpu1's time slice. This is multiprogramming on a single core.

Once task 1's I/O finishes, the operating system schedules it again (process scheduling, including which CPU a process runs on, is decided by the operating system), and it may be assigned to any of the four CPUs.

Modern computers routinely do many things at the same time: a user's PC (whether single-CPU or multi-CPU) can run multiple tasks at once (a task can be understood as a process).

Review of multiprogramming (multi-channel technology): multiple programs are kept in memory at the same time, and the CPU switches quickly from one process to another, letting each run for tens or hundreds of milliseconds. Although a CPU can execute only one task at any given instant, within one second it can run many processes, which gives the illusion of parallelism. This is called pseudo-parallelism, to distinguish it from the true hardware parallelism of multiprocessor systems (multiple CPUs sharing the same physical memory).
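The switching described above can be imitated with a toy model. This is only a sketch: generators stand in for processes, one `next()` call stands in for one time slice, and the names `task` and `round_robin` are invented for illustration. A real operating system preempts processes with hardware timers rather than relying on them to yield.

```python
from collections import deque

def task(name, steps):
    """A toy 'process': each yield marks the end of one time slice."""
    for i in range(1, steps + 1):
        yield f"{name}:{i}"

def round_robin(tasks):
    """Give every ready task one slice in turn until all have finished."""
    ready = deque(tasks)
    trace = []
    while ready:
        current = ready.popleft()
        try:
            trace.append(next(current))  # run one time slice
        except StopIteration:
            continue                     # task finished, drop it
        ready.append(current)            # back to the end of the queue
    return trace

if __name__ == "__main__":
    print(round_robin([task("A", 2), task("B", 3)]))
    # ['A:1', 'B:1', 'A:2', 'B:2', 'B:3']
```

Only one task runs at any instant, but the trace shows both tasks making progress over the same period, which is the illusion of parallelism on a single CPU.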

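| 5. Synchronous/asynchronous and blocking/non-blocking |

A synchronous call is one that, once issued, does not return until its result is available. By this definition, the vast majority of function calls are synchronous. In practice, though, when we talk about synchronous and asynchronous we mean specifically tasks that need the cooperation of other components or take a noticeable amount of time to complete.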

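Examples:

1. apply in multiprocessing.Pool: after issuing the synchronous call, the caller waits in place until the task ends, without caring whether the task is computing or blocked on I/O. It simply waits for the task to finish.

2. concurrent.futures.ProcessPoolExecutor().submit(func,).result()

3. concurrent.futures.ThreadPoolExecutor().submit(func,).result()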

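Asynchronous is the opposite of synchronous. When an asynchronous call is issued, the caller does not get the result right away. When the asynchronous operation completes, the caller is informed through a status, a notification, or a callback. If a status is used, the caller has to check it at regular intervals, which is inefficient (some people new to multithreaded programming like to check a variable's value in a loop, which is actually a serious mistake). With notifications, efficiency is high, because the asynchronous operation needs almost no extra work. Callbacks are not much different from notifications.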

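Examples:

1. multiprocessing.Pool().apply_async(): after issuing the asynchronous call, the caller does not wait for the task to finish. Instead it immediately gets a temporary result (not the final result, but typically an object that wraps it).

2. concurrent.futures.ProcessPoolExecutor(3).submit(func,)

3. concurrent.futures.ThreadPoolExecutor(3).submit(func,)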
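To make the two calling styles concrete, here is a minimal sketch using concurrent.futures.ThreadPoolExecutor from the examples above. The task `slow_square` and the two wrapper functions are hypothetical names chosen for illustration.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def slow_square(x, delay=0.2):
    """Hypothetical task that takes a while to finish."""
    time.sleep(delay)
    return x * x

def call_synchronously(pool, x):
    # submit(...).result() waits in place until the task has finished.
    return pool.submit(slow_square, x).result()

def call_asynchronously(pool, x, on_done):
    # submit(...) returns a Future right away; the callback is invoked
    # with the final value once the task completes.
    future = pool.submit(slow_square, x)
    future.add_done_callback(lambda f: on_done(f.result()))
    return future

if __name__ == "__main__":
    with ThreadPoolExecutor(max_workers=2) as pool:
        print(call_synchronously(pool, 3))   # 9, after waiting
        call_asynchronously(pool, 4, print)  # returns immediately
        print("the caller keeps going while the task runs")
```

The Future returned by submit is the "temporary result": it is a handle to a value that does not exist yet, and the callback plays the role of the notification.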

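A blocking call is one where the current thread is suspended until the result of the call returns (for example, when it hits an I/O operation). The blocked thread is only woken once the function has its result. Some people equate blocking calls with synchronous calls, but they are different: with a synchronous call, the current thread is often still active; it is only that, logically, the current function has not returned yet.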

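Examples:

1. Synchronous call: use apply to run a task that counts up 100 million times. The call waits until the task returns its result, but nothing is blocked (even if the task loses the CPU, it is in the ready state).

2. Blocking call: when a socket works in blocking mode and recv is called while no data is available, the current thread is suspended until data arrives.

Non-blocking is the counterpart of blocking: the call returns immediately even when the result cannot be obtained right away, and the function does not block the current thread.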
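The socket case can be sketched as follows. This assumes a connected pair created with socket.socketpair() purely for demonstration; the two helper names are invented here.

```python
import socket

def try_recv_nonblocking(sock, size=1024):
    """Return whatever data is available, or None if there is none yet."""
    sock.setblocking(False)
    try:
        return sock.recv(size)
    except BlockingIOError:
        # Nothing to read: the call returned at once instead of waiting.
        return None

def recv_blocking(sock, size=1024):
    """Suspend the calling thread until some data has arrived."""
    sock.setblocking(True)
    return sock.recv(size)

if __name__ == "__main__":
    a, b = socket.socketpair()
    print(try_recv_nonblocking(a))  # None, nothing has been sent yet
    b.sendall(b"hi")
    print(recv_blocking(a))         # b'hi'
    a.close()
    b.close()
```

In non-blocking mode the caller has to decide what to do when no data is ready; in blocking mode the operating system parks the thread until there is something to return.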

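Summary:

1. Synchronous and asynchronous describe how a function or task is called. Synchronous means that when a process issues a function (task) call, it waits until the function (task) completes, while the process itself remains active. Asynchronous means that when a process issues a function (task) call, it does not wait for the function to return but carries on executing; when the function returns, the process is told that the task is complete through a status, a notification, an event, or similar.

2. Blocking and non-blocking describe the process or thread. Blocking means the process is suspended when a request cannot be satisfied; non-blocking means the current process is not blocked.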

| 6. Process creation |

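All hardware needs an operating system to manage it. Where there is an operating system there are processes, so there must be a way to create them.

(1) An operating system designed for a single application: in a microwave oven, for example, all processes already exist as soon as it starts.

(2) A general-purpose system needs the ability to create and destroy processes while it is running. Processes are created in four main situations:

1. System initialization.

2. A running process starts a child process (for example, with the subprocess module). (Concurrency.)

3. An interactive user request to create a new process.

4. Initiation of a batch job.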

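In every case, a new process is created by an existing process executing a process-creation system call:

1. In UNIX, this system call is fork. (Processes are managed by the operating system.)

2. In Windows, this system call is CreateProcess.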

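Child processes in UNIX and Windows compared:

1. The same: after creation, the parent and the child have separate address spaces (multiprogramming requires memory isolation between processes at the physical level), so a change that either process makes in its own address space does not affect the other.

2. Different: in UNIX, the child's initial address space is a copy of the parent's. Note that the child and the parent can have read-only shared memory regions. In Windows, the address spaces of the parent and the child are different from the very start.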
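The UNIX behaviour can be observed with a short sketch. It assumes a UNIX-like system, since os.fork is not available on Windows, and the function name `fork_and_modify` is invented for illustration. The child changes its copy of a variable and reports the value through a pipe; the parent's copy stays as it was.

```python
import os

def fork_and_modify():
    """Fork, change a variable in the child, and return
    (value seen by the parent, value seen by the child)."""
    data = {"value": 1}
    read_fd, write_fd = os.pipe()
    pid = os.fork()
    if pid == 0:
        # Child: works on its own copy of the parent's address space.
        try:
            os.close(read_fd)
            data["value"] = 100
            os.write(write_fd, str(data["value"]).encode())
        finally:
            os._exit(0)  # leave the child without running parent cleanup
    # Parent: read what the child reported, then reap the child.
    os.close(write_fd)
    child_value = int(os.read(read_fd, 16))
    os.close(read_fd)
    os.waitpid(pid, 0)
    return data["value"], child_value

if __name__ == "__main__":
    print(fork_and_modify())  # (1, 100)
```

The child starts with the same contents as the parent, but its later write is invisible to the parent, which is why the two processes need a pipe (or another IPC mechanism) to exchange the value.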

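| 7. Process termination |

1. Normal exit (voluntary: for example, the user clicks the close button of an interactive window, or the program finishes and issues a system call to exit normally, which is exit on Linux and ExitProcess on Windows).

2. Error exit (voluntary: for example, running python a.py when a.py does not exist).

3. Fatal error (involuntary: executing an illegal instruction, such as referencing nonexistent memory or computing 1/0; in Python such exceptions can be caught with try...except...).

4. Killed by another process (involuntary: for example, kill -9).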
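A parent process can tell these cases apart by the child's exit status. The sketch below assumes Python's subprocess module and small throwaway child programs; `exit_code` and `killed_exit_code` are hypothetical helper names.

```python
import subprocess
import sys

def exit_code(code):
    """Run a Python snippet in a child process and return its exit status."""
    return subprocess.run([sys.executable, "-c", code],
                          stderr=subprocess.DEVNULL).returncode

def killed_exit_code():
    """Start a long-running child, kill it, and return its exit status."""
    proc = subprocess.Popen([sys.executable, "-c",
                             "import time; time.sleep(60)"])
    proc.kill()         # sends SIGKILL on POSIX, like kill -9
    return proc.wait()  # on POSIX this is the negative signal number

if __name__ == "__main__":
    print(exit_code("pass"))                     # normal exit: 0
    print(exit_code("import sys; sys.exit(3)"))  # error exit chosen by the program: 3
    print(exit_code("1/0"))                      # uncaught exception: 1
    print(killed_exit_code())                    # killed from outside: nonzero
```

By convention, 0 means success and any other value signals a problem; the exact nonzero value for a killed process depends on the platform.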

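| 8. Process hierarchy |

The same: in both UNIX and Windows, a process has exactly one parent.

Different:

1. In UNIX, all processes form a tree rooted at the init process. A parent and its children together form a process group; when a signal is sent from the keyboard, it is delivered to every member of the process group currently associated with the keyboard.

2. Windows has no concept of a process hierarchy; all processes are equal. When a process is created, the parent receives a handle that it can use to control the child, but the handle can be passed to other processes, so there is no hierarchy.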
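The parent link can be inspected from Python. In this small sketch, which assumes the interpreter launches the child directly (no intermediate launcher process), a child process reports its parent's ID with os.getppid(), and that ID matches the ID of the process that created it. The function name is invented for illustration.

```python
import os
import subprocess
import sys

def parent_pid_seen_by_child():
    """Start a child process and return the parent PID it reports."""
    result = subprocess.run(
        [sys.executable, "-c", "import os; print(os.getppid())"],
        capture_output=True, text=True, check=True,
    )
    return int(result.stdout)

if __name__ == "__main__":
    print(os.getpid(), parent_pid_seen_by_child())  # the two numbers match
```

Following getppid() upward from any process on a UNIX system eventually leads to the root of the tree.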

| 9. Process states |

tail -f access.log |grep '404'

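Running the program tail starts one child process, and running the program grep starts another. The two processes communicate through the pipe '|', with the output of tail used as the input of grep.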
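The same kind of pipeline can be built by hand. The sketch below assumes Python's subprocess module and uses two tiny stand-in programs instead of tail and grep so that it runs anywhere; `PRODUCER`, `FILTER`, and `run_pipeline` are hypothetical names, and the log lines are made up.

```python
import subprocess
import sys

# Stand-in for tail: prints a few made-up log lines.
PRODUCER = "print('GET /a 200'); print('GET /b 404'); print('GET /c 404')"

# Stand-in for grep '404': keeps only the lines that contain 404.
FILTER = (
    "import sys\n"
    "for line in sys.stdin:\n"
    "    if '404' in line:\n"
    "        sys.stdout.write(line)\n"
)

def run_pipeline():
    """Connect the producer's stdout to the filter's stdin with a pipe."""
    producer = subprocess.Popen([sys.executable, "-c", PRODUCER],
                                stdout=subprocess.PIPE)
    consumer = subprocess.Popen([sys.executable, "-c", FILTER],
                                stdin=producer.stdout,
                                stdout=subprocess.PIPE, text=True)
    producer.stdout.close()  # the filter sees EOF once the producer exits
    output, _ = consumer.communicate()
    producer.wait()
    return output.splitlines()

if __name__ == "__main__":
    print(run_pipeline())  # ['GET /b 404', 'GET /c 404']
```

While the filter waits for its next line of input, it has nothing to do and gives up the CPU.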

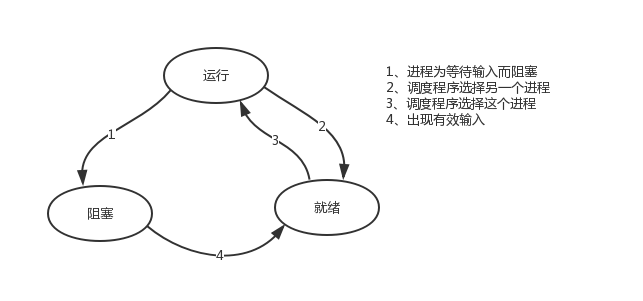

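While the grep process is waiting for input (that is, I/O), its state is called blocked; during this time grep cannot run.

In fact, there are two situations in which a process logically cannot run:

- The cause lies in the process itself: it hits blocking I/O and has to give up the CPU so that other processes can run, which keeps the CPU busy.

- The cause has nothing to do with the process and lies at the operating-system level: because a process has used too much CPU time, or because of priorities, the operating system may schedule another process onto the CPU.

Thus a process has three states: running, ready, and blocked.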
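The three states and the moves between them can be written down as a small state machine. This is a sketch under the usual textbook model; the set of four allowed transitions is an assumption drawn from that model, and the names `State`, `ALLOWED`, and `transition` are invented here.

```python
from enum import Enum

class State(Enum):
    RUNNING = "running"
    READY = "ready"
    BLOCKED = "blocked"

# Assumed transitions of the classic three-state model.
ALLOWED = {
    (State.RUNNING, State.BLOCKED),  # the process has to wait for I/O
    (State.RUNNING, State.READY),    # the scheduler picks another process
    (State.READY, State.RUNNING),    # the scheduler picks this process
    (State.BLOCKED, State.READY),    # the awaited input has arrived
}

def transition(current, new):
    """Return the new state, or raise ValueError if the move is not allowed."""
    if (current, new) not in ALLOWED:
        raise ValueError(f"illegal transition: {current.value} -> {new.value}")
    return new

if __name__ == "__main__":
    state = State.RUNNING
    state = transition(state, State.BLOCKED)  # grep waits for input
    state = transition(state, State.READY)    # input arrives
    state = transition(state, State.RUNNING)  # the scheduler runs it again
    print(state)
```

Note that a blocked process does not go straight back to running: once its input arrives it becomes ready and waits for the scheduler like any other process.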

| 10. How process concurrency is implemented |

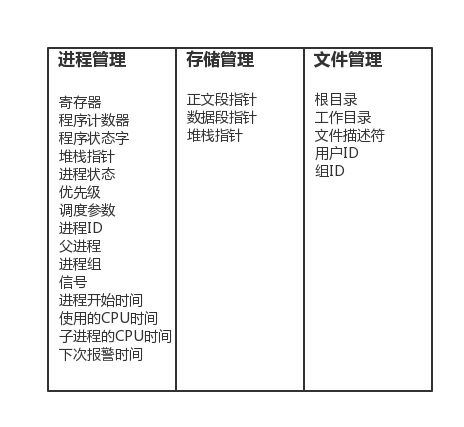

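When the hardware interrupts a running process, the entire state of that process at that moment has to be saved. For this purpose the operating system maintains a table, the process table, with one entry per process (these entries are also called process control blocks).

The table holds the important information about each process's state: the program counter, the stack pointer, memory allocation, the status of all open files, accounting and scheduling information, and everything else that must be saved when the process switches from running to ready or blocked, so that when the process is started again later it is as if it had never been interrupted.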
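A toy version of the table makes the save-and-restore step easier to picture. This sketch keeps only a few of the fields listed above; `ProcessControlBlock`, `ToyCPU`, and `context_switch` are invented names, and a real kernel stores far more and does this work in privileged, architecture-specific code.

```python
from dataclasses import dataclass, field

@dataclass
class ProcessControlBlock:
    """One process table entry (only a few fields, for illustration)."""
    pid: int
    state: str = "ready"
    program_counter: int = 0
    stack_pointer: int = 0
    open_files: list = field(default_factory=list)

class ToyCPU:
    """Holds the registers of whichever process is currently running."""
    def __init__(self):
        self.program_counter = 0
        self.stack_pointer = 0

def context_switch(cpu, process_table, old_pid, new_pid):
    """Save the running process's registers and load the next process's."""
    old = process_table[old_pid]
    old.program_counter = cpu.program_counter
    old.stack_pointer = cpu.stack_pointer
    old.state = "ready"
    new = process_table[new_pid]
    cpu.program_counter = new.program_counter
    cpu.stack_pointer = new.stack_pointer
    new.state = "running"

if __name__ == "__main__":
    table = {
        1: ProcessControlBlock(pid=1, state="running"),
        2: ProcessControlBlock(pid=2, program_counter=40, stack_pointer=900),
    }
    cpu = ToyCPU()
    cpu.program_counter, cpu.stack_pointer = 17, 512  # process 1 is mid-run
    context_switch(cpu, table, old_pid=1, new_pid=2)
    print(table[1], cpu.program_counter)
```

Because process 1's registers are stored in its entry, a later switch back to it reloads exactly the values it had when it was interrupted.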