As functionality grows, the complexity of prompt engineering inevitably grows with it. Here, I explain how complexity can be introduced into the process of prompt engineering.

Static prompts

Experimenting with prompts and prompt engineering is commonplace these days. By creating and running prompts, users experience the generative power of LLMs.

Text generation is a meta-capability of large language models, and prompt engineering is the key to unlocking it.

One of the first principles gleaned while experimenting with Prompt Engineering was that a generative model cannot be explicitly asked to do something.

Instead, users need to understand what they want to achieve and mimic the beginning of the output they want the model to produce. This process of imitation is called prompt design, prompt engineering or casting.

Prompt Engineering is the way to provide guidance and reference data to LLMs.

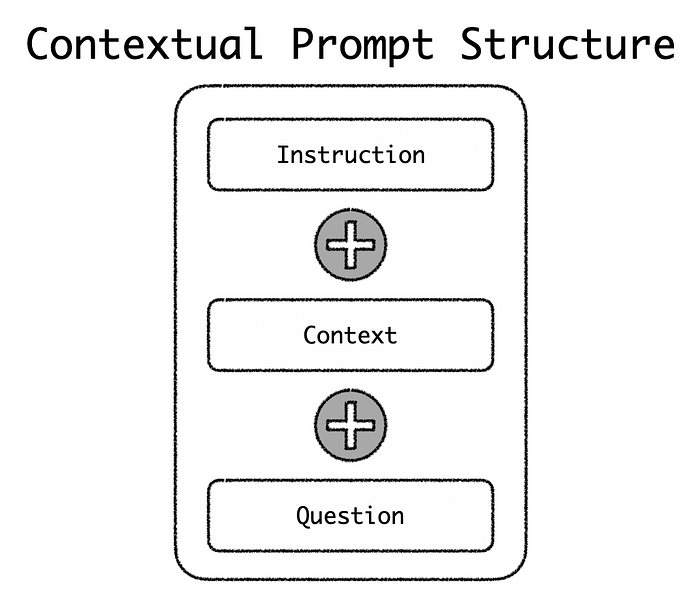

Introducing a set structure into the prompt can yield more accurate responses from the LLM. For example, as shown below, prompts can be contextually engineered to give the LLM a contextual reference.

A contextually designed prompt usually consists of three parts: instruction, context and question.

Here's a practical example of a contextual prompt:
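The three-part structure can be sketched in a few lines of Python. This is only an illustration: the function name `build_contextual_prompt` and the `Context:` / `Q:` / `A:` labels are assumptions chosen for this sketch, not a required format.

```python
def build_contextual_prompt(instruction: str, context: str, question: str) -> str:
    """Join instruction, context and question into a single prompt string."""
    return (
        f"{instruction.strip()}\n\n"
        f"Context:\n{context.strip()}\n\n"
        f"Q: {question.strip()}\n"
        "A:"  # ending on "A:" invites the model to continue with the answer
    )


prompt = build_contextual_prompt(
    instruction='Answer using only the text below. If the answer is not there, say "I don\'t know".',
    context="The men's high jump final ended in a tie at 2.37m.",
    question="At what height did the final end in a tie?",
)
print(prompt)
```

Keeping the three parts separate until the last moment makes it easier to see which part of the prompt is responsible when a response goes wrong.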

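import openai

# COMPLETIONS_MODEL is assumed to be defined elsewhere as the name of a completions-capable model.

prompt = """Answer the question as truthfully as possible using the provided text, and if the answer is not contained within the text below, say "I don't know"

Context:
The men's high jump event at the 2020 Summer Olympics took place between 30 July and 1 August 2021 at the Olympic Stadium.
33 athletes from 24 nations competed; the total possible number depended on how many nations would use universality places
to enter athletes in addition to the 32 qualifying through mark or ranking (no universality places were used in 2021).
Italian athlete Gianmarco Tamberi along with Qatari athlete Mutaz Essa Barshim emerged as joint winners of the event following
a tie between both of them as they cleared 2.37m. Both Tamberi and Barshim agreed to share the gold medal in a rare instance
where the athletes of different nations had agreed to share the same medal in the history of the Olympics.
Barshim in particular was heard to ask a competition official "Can we have two golds?" in response to being offered a
"jump off". Maksim Nedasekau of Belarus took bronze. The medals were the first ever in the men's high jump for Italy and
Belarus, the first gold in the men's high jump for Italy and Qatar, and the third consecutive medal in the men's high jump
for Qatar (all by Barshim). Barshim became only the second man to earn three medals in high jump, joining Patrik Sjöberg
of Sweden (1984 to 1992).

Q: Who won the 2020 Summer Olympics men's high jump?
A:"""

# Legacy (pre-1.0) openai-python completions call.
# temperature=0 keeps the answer as deterministic as possible.
openai.Completion.create(
    prompt=prompt,
    temperature=0,
    max_tokens=300,
    top_p=1,
    frequency_penalty=0,
    presence_penalty=0,
    model=COMPLETIONS_MODEL
)["choices"][0]["text"].strip(" \n")  # take the first completion and trim surrounding whitespace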

At this stage, prompts are static in nature and do not form part of a larger application.

Prompt templates

The next step after static prompts is prompt templating.

Static prompts are converted to templates where key values are replaced by placeholders. Placeholders are replaced with application values/variables at runtime.

Some people refer to templating as entity injection or prompt injection.

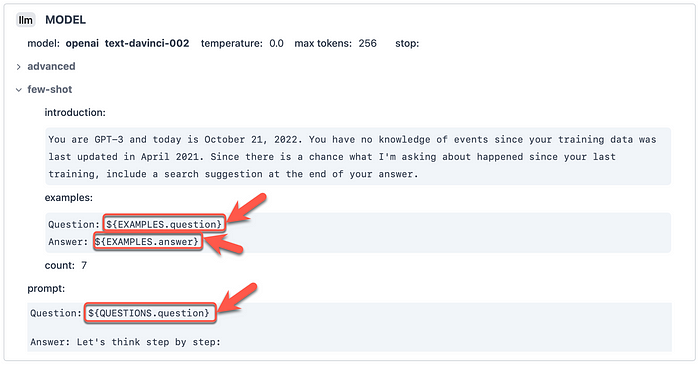

In the template example below from DUST, you can see the placeholders ${EXAMPlES:question}, ${EXAMPlES:answer} and ${QUESTIONS:question}; these are replaced with values at runtime.

Prompt templates allow prompts to be stored, reused, shared and programmed. Generative prompts can be incorporated into programs for programming, storage and reuse.

Templates are text files with placeholders into which variables and expressions can be inserted at runtime.
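As a minimal sketch of templating, Python's standard `string.Template` class can replace `${...}` placeholders at runtime. The template text and the placeholder names (`example_question`, `example_answer`, `question`) are hypothetical and chosen only for illustration; they are not the DUST syntax.

```python
from string import Template

# Hypothetical template; the placeholder names are illustrative only.
QA_TEMPLATE = Template(
    "Answer the question in the same style as the example.\n\n"
    "Q: ${example_question}\n"
    "A: ${example_answer}\n\n"
    "Q: ${question}\n"
    "A:"
)


def render_prompt(example_question: str, example_answer: str, question: str) -> str:
    """Replace each placeholder with a runtime value."""
    # substitute() raises KeyError if a placeholder is left unfilled,
    # which catches incomplete prompts before they reach the model.
    return QA_TEMPLATE.substitute(
        example_question=example_question,
        example_answer=example_answer,
        question=question,
    )


prompt = render_prompt(
    example_question="What is the capital of France?",
    example_answer="Paris",
    question="What is the capital of Italy?",
)
print(prompt)
```

Because the template is an ordinary string, it can live in a text file or a database and be shared between applications, while the values are supplied by whichever program renders it.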

Prompt pipelines

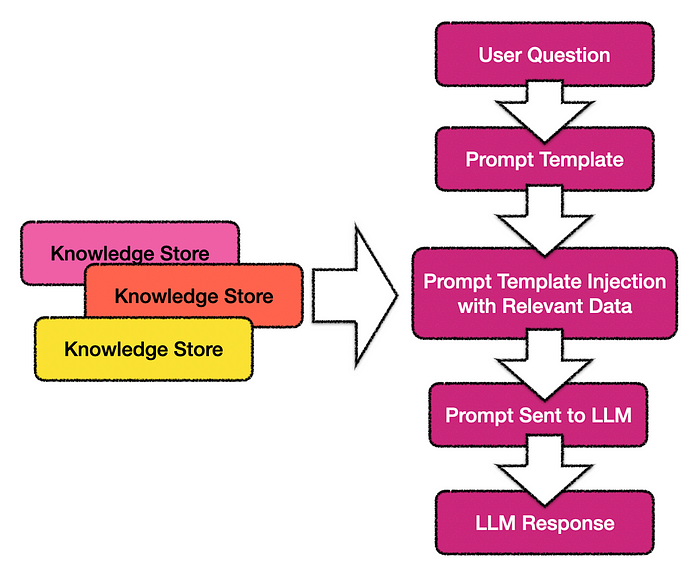

In a prompt pipeline, predefined prompt templates are populated with questions or requests from users. The context or reference material included in the prompt to guide the LLM is data retrieved from a knowledge base.

Prompt Pipelines can also be described as an intelligent extension to prompt templates.

Thus, the variables or placeholders in the predefined prompt template are filled (also known as prompt injection) with the question from the user and with the knowledge retrieved from the knowledge base.

Data from the knowledge store acts as a contextual reference for the question to be answered. Having this information available helps prevent hallucination in the LLM. It also helps prevent the LLM from relying on outdated data in the model that is no longer accurate.

Subsequently, the combined prompt is sent to the LLM, and the LLM response is returned to the user.
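The flow described above (retrieve, fill the template, send, return) can be sketched as follows. Everything here is an assumption made for illustration: the in-memory `KNOWLEDGE_BASE`, the naive keyword-overlap `retrieve` function and the `echo_llm` stand-in are hypothetical, and a real pipeline would typically use a search index or vector store and an actual model call.

```python
from string import Template
from typing import Callable, List

# Hypothetical in-memory knowledge base for illustration only.
KNOWLEDGE_BASE = [
    "Gianmarco Tamberi and Mutaz Essa Barshim shared the gold medal in the men's high jump.",
    "Maksim Nedasekau of Belarus took bronze in the men's high jump.",
    "Prompt templates replace key values with placeholders.",
]

PIPELINE_TEMPLATE = Template(
    'Answer using only the context below. If the answer is not there, say "I don\'t know".\n\n'
    "Context:\n${context}\n\n"
    "Q: ${question}\n"
    "A:"
)


def retrieve(question: str, documents: List[str], top_k: int = 2) -> List[str]:
    """Rank documents by the number of words they share with the question."""
    question_words = set(question.lower().split())
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & set(doc.lower().split())),
        reverse=True,
    )
    return ranked[:top_k]


def run_pipeline(question: str, llm: Callable[[str], str]) -> str:
    """Retrieve context, fill the template, send the prompt and return the response."""
    context = "\n".join(retrieve(question, KNOWLEDGE_BASE))
    prompt = PIPELINE_TEMPLATE.substitute(context=context, question=question)
    return llm(prompt)


def echo_llm(prompt: str) -> str:
    """Stand-in for a real model call; it simply returns the prompt it received."""
    return prompt


print(run_pipeline("Who took bronze in the men's high jump?", echo_llm))
```

Passing the model in as a plain callable keeps the sketch runnable offline and makes the retrieval and templating steps easy to test on their own.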

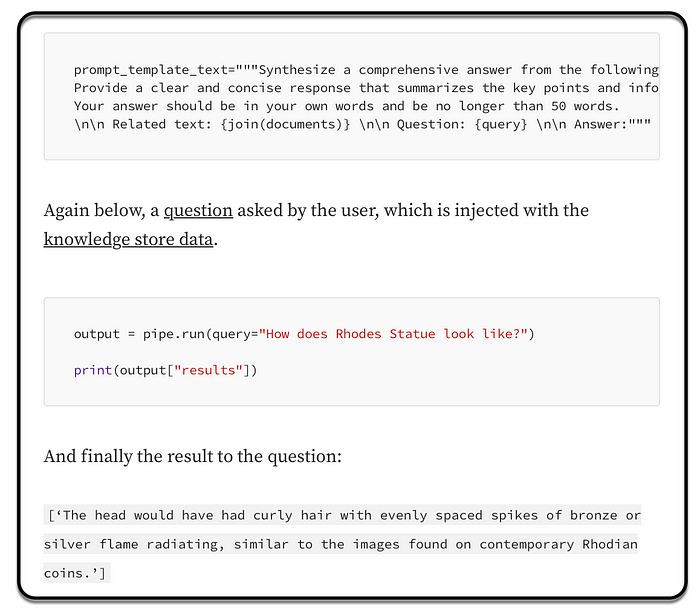

Below is an example prompt template before document and question data is injected.

Prompt chaining

Prompt chaining is the process of chaining or sequencing multiple prompts to form larger applications. Prompt sequences can be arranged in series or in parallel.

When prompts are arranged in series, a prompt (also called a node) in a chain usually depends on the output of the previous node in the chain. In some cases, data processing and decision-making steps are implemented between prompts/nodes.
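A two-node chain in series, with a processing and decision step between the nodes, might look like the sketch below. The prompts, the `QUESTION`/`COMPLAINT` labels and the `fake_llm` stand-in are hypothetical and exist only so the example runs without a model.

```python
from typing import Callable


def run_chain(user_message: str, llm: Callable[[str], str]) -> str:
    """Run two prompts in series with a decision step in between."""
    # Node 1: classify the request.
    label = llm(
        "Classify the message as QUESTION or COMPLAINT. Reply with one word.\n\n"
        f"Message: {user_message}\nLabel:"
    )

    # Data processing between nodes: normalise the raw model output.
    label = label.strip().upper()

    # Decision step: choose the next prompt based on the output of node 1.
    if label == "COMPLAINT":
        next_prompt = f"Write a short apology in reply to this complaint:\n{user_message}\nReply:"
    else:
        next_prompt = f"Answer this question briefly:\n{user_message}\nAnswer:"

    # Node 2: its prompt depends on what node 1 returned.
    return llm(next_prompt)


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call, so the sketch runs offline."""
    if prompt.startswith("Classify"):
        return " complaint\n" if "broken" in prompt else " question\n"
    return f"[model reply to: {prompt.splitlines()[0]}]"


print(run_chain("My order arrived broken.", fake_llm))
```

The normalisation step matters in practice because model output is free text; the decision logic should not assume the label arrives in exactly the expected form.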

LLMs are versatile and have open-ended functionality.

In some cases, processes need to run in parallel; for example, several requests can be initiated in parallel while the user is talking to a chatbot.
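For prompts that do not depend on each other, a parallel arrangement can be sketched with a thread pool from the standard library. The `run_parallel` helper, the prompt names and the `fake_llm` stand-in are assumptions for illustration. Threads are a reasonable fit here because a model call is usually a network request that spends most of its time waiting.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict


def run_parallel(prompts: Dict[str, str], llm: Callable[[str], str]) -> Dict[str, str]:
    """Send independent prompts to the LLM concurrently and collect results by name."""
    # max(1, ...) avoids an invalid pool size when no prompts are given.
    with ThreadPoolExecutor(max_workers=max(1, len(prompts))) as pool:
        futures = {name: pool.submit(llm, prompt) for name, prompt in prompts.items()}
        # result() blocks until that prompt's response is ready.
        return {name: future.result() for name, future in futures.items()}


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call; it upper-cases the prompt."""
    return prompt.upper()


results = run_parallel(
    {"summary": "summarise the chat", "sentiment": "rate the sentiment"},
    fake_llm,
)
print(results)
```

The results of the parallel branches can then feed a later node in the chain, for example a final prompt that combines the summary and the sentiment.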

Prompt chaining applications will primarily use a conversational UI for input, and the output will also be mostly unstructured conversational output. The result is, in effect, a digital assistant or chatbot. Prompt chaining can also be used in RPA (robotic process automation) scenarios where processes and pipelines are initiated and users are notified of the results.

When large language model prompts are chained through a visual programming UI, the largest part of the functionality will be the GUI that facilitates the authoring process.

Below is an image of such a GUI for prompt engineering and prompt chain authoring. This design stems from research conducted by the University of Washington and Google.

In summary

The term "last mile" is often used in the context of production implementations of generative AI and large language models (LLMs). It refers to ensuring that AI implementations actually solve enterprise problems and deliver measurable business value.

Production implementations need to withstand customer rigor and scrutiny, and they need continuous expansion, updates and improvements.

Production implementation requirements for LLMs:

- Curated and structured data for fine-tuning LLMs

- Supervised approaches to generative AI

- A scalable and manageable ecosystem of LLM-based applications