1. The oneAPI industry initiative

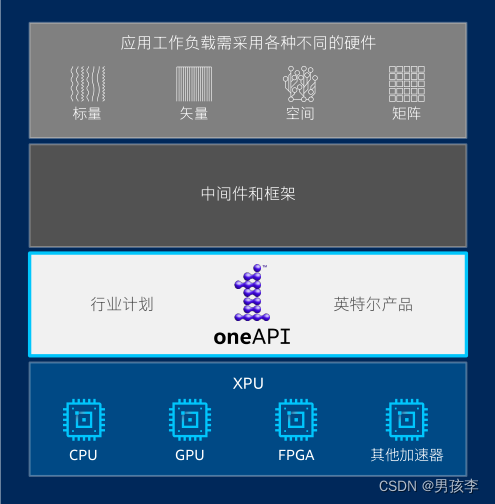

1.1 Programming Challenges for Multiple Architectures

Increasingly specialized workloads require a variety of data-centric hardware. Today, each architecture requires a separate programming model and toolchain. The complexity of software development limits freedom of architecture choice.

1.2 oneAPI: a programming model for multiple architectures and vendors

Freedom of choice

Choose the best acceleration technology for your needs; the software does not decide for you

Realize all hardware value

Performance across CPUs, GPUs, FPGAs, and other accelerators

Develop and deploy software with confidence

Open industry standards provide a safe, clear path to the future

▪ Compatible with existing languages and programming models, including C++, Python, SYCL, OpenMP, Fortran and MPI

1.3 Powerful oneAPI libraries

Realize all hardware value

Designed to accelerate key domain-specific functions

Freedom of choice

Pre-optimized for each target platform for maximum performance

2. Intel oneAPI products

Built on Intel's rich heritage of CPU tools and extended to XPU architectures, Intel oneAPI products provide a complete set of advanced compilers, libraries, and porting, analysis, and debugging tools.

▪ Accelerate computing by exploiting state-of-the-art hardware capabilities

▪ Interoperate with existing programming models and code bases (C++, Fortran, Python, OpenMP, etc.), so developers can be confident that existing applications work seamlessly with oneAPI

▪ Ease the transition to new systems and accelerators: a single code base frees developers to spend more time innovating

2.1 Intel® DPC++ Compatibility Tool

Assists developers with a one-time migration of CUDA code to DPC++, generating human-readable code wherever possible

Typically, about 90-95% of the code is migrated automatically

Provides inline comments that help developers finish porting the application
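Much of what changes in a migrated kernel is index arithmetic. A CUDA kernel typically computes its global index as `blockIdx.x * blockDim.x + threadIdx.x`; the SYCL counterparts on an `nd_item` are `get_group(0)`, `get_local_range(0)`, and `get_local_id(0)`, and for a 1-D range without an offset `get_global_id(0)` returns the same combined value. The sketch below is a serial Python emulation of that mapping for a SAXPY kernel, assuming a 1-D launch whose size is rounded up to a multiple of the work-group size. It is not SYCL code and not the tool's actual output; `launch_1d` and `saxpy` are hypothetical helper names.

```python
def launch_1d(num_groups, group_size, body):
    """Hypothetical serial emulation of a 1-D CUDA grid / SYCL nd-range launch."""
    for group in range(num_groups):            # CUDA blockIdx.x  ~ SYCL get_group(0)
        for local in range(group_size):        # CUDA threadIdx.x ~ SYCL get_local_id(0)
            # CUDA: blockIdx.x * blockDim.x + threadIdx.x  ~ SYCL get_global_id(0)
            global_id = group * group_size + local
            body(global_id)


def saxpy(a, x, y, group_size):
    """Compute y[i] = a * x[i] + y[i] in place using the emulated launch."""
    n = len(x)
    num_groups = (n + group_size - 1) // group_size   # round the range up

    def body(i):
        if i < n:                                      # guard the padded tail
            y[i] = a * x[i] + y[i]

    launch_1d(num_groups, group_size, body)
    return y


print(saxpy(2.0, [1.0, 2.0, 3.0, 4.0, 5.0], [10.0] * 5, group_size=4))
# prints [12.0, 14.0, 16.0, 18.0, 20.0]
```

The bounds guard `if i < n` is the part that usually survives migration unchanged: both models launch whole groups, so the last group may contain work-items past the end of the data.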

2.2 Analysis and debugging tools

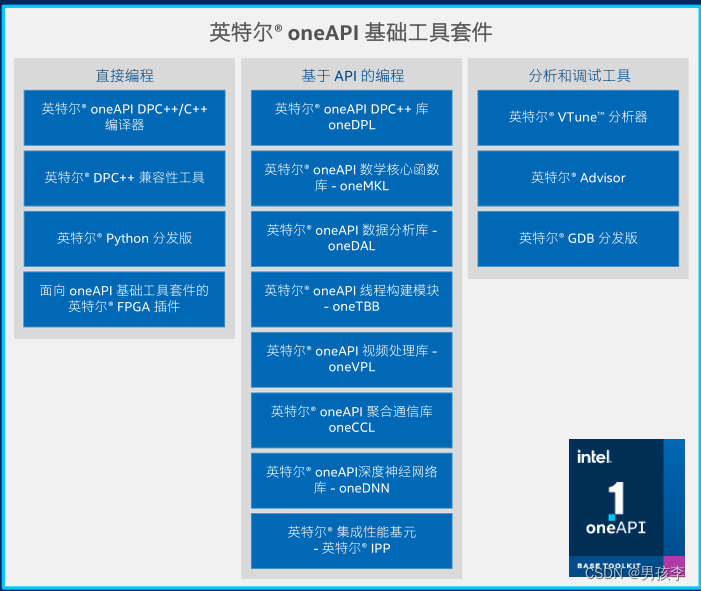

2.3 Intel® oneAPI Toolkit

A suite of proven developer tools that scales from CPUs to other accelerators

3. Details of the Intel® oneAPI toolkits

3.1 Intel® oneAPI Base Toolkit

A core set of tools and libraries for developing high-performance applications on Intel® CPUs, GPUs, and FPGAs.

Who is it for?

▪ Developers across a wide range of industries

▪ Users of the add-on toolkits, since the Base Toolkit is the foundation for all of them

Key features/benefits

▪ Data-parallel C++ compiler, library and analysis tools

▪ DPC++ Compatibility Tool to help migrate existing CUDA code

▪ A Python distribution that includes accelerated scikit-learn, NumPy, and SciPy libraries

▪ Optimized performance libraries for threading, math, data analytics, deep learning, and video/image/signal processing

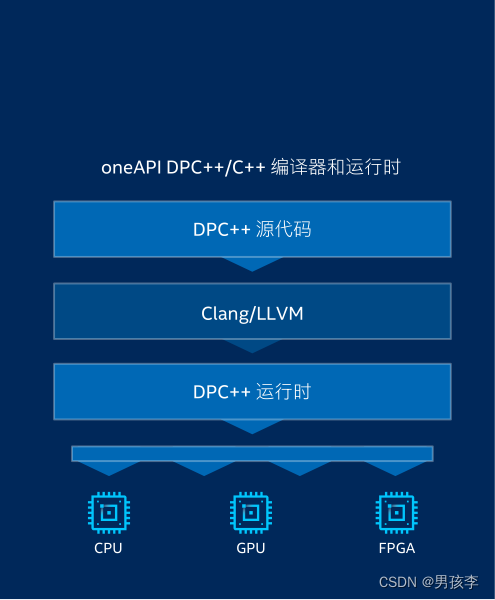

3.2 Intel® oneAPI DPC++/C++ Compiler

The compiler delivers exceptional parallel programming productivity and performance across CPUs and accelerators

▪ Allows code reuse across different target hardware while still permitting custom tuning for specific accelerators

▪ Open, cross-industry alternative to proprietary languages

DPC++ is based on ISO C++ and Khronos SYCL

▪ Delivers the productivity benefits of C++ using common, familiar C and C++ constructs

▪ Incorporates SYCL from the Khronos Group to support data parallelism and heterogeneous programming

3.3 Intel® oneAPI Collective Communications Library: optimized communication patterns

- Provides optimized communication patterns for high performance on Intel CPUs and GPUs, for distributing model training across multiple nodes

- Transparently supports multiple interconnects such as Intel® Omni-Path Architecture, InfiniBand, and Ethernet

- Built on low-level communication middleware (MPI and libfabric)

- Enables efficient implementations of the collectives used in deep learning training: all-gather, all-reduce, and reduce-scatter
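The three collectives differ only in what each rank ends up holding. The sketch below models each rank's buffer as a Python list and returns what every rank would see afterwards, assuming sum as the reduction operation and, for reduce-scatter, a buffer length divisible by the number of ranks. It models semantics only: it is not the oneCCL API, says nothing about how the library schedules traffic over the interconnect, and the function names are hypothetical.

```python
# Semantics-only model of three collectives; not the oneCCL API.
# buffers[r] is the list held by rank r before the collective runs.

def all_reduce(buffers):
    """Every rank receives the element-wise sum of all ranks' buffers."""
    total = [sum(values) for values in zip(*buffers)]
    return [list(total) for _ in buffers]


def all_gather(buffers):
    """Every rank receives the concatenation of all ranks' buffers, in rank order."""
    gathered = [x for buf in buffers for x in buf]
    return [list(gathered) for _ in buffers]


def reduce_scatter(buffers):
    """The element-wise sum is split into equal chunks; rank r keeps chunk r."""
    num_ranks = len(buffers)
    total = [sum(values) for values in zip(*buffers)]
    if len(total) % num_ranks != 0:
        raise ValueError("buffer length must be divisible by the number of ranks")
    chunk = len(total) // num_ranks
    return [total[r * chunk:(r + 1) * chunk] for r in range(num_ranks)]


# Two ranks, each holding a four-element gradient buffer.
grads = [[1, 2, 3, 4], [10, 20, 30, 40]]
print(all_reduce(grads))      # [[11, 22, 33, 44], [11, 22, 33, 44]]
print(all_gather(grads))      # [[1, 2, 3, 4, 10, 20, 30, 40], [1, 2, 3, 4, 10, 20, 30, 40]]
print(reduce_scatter(grads))  # [[11, 22], [33, 44]]
```

In data-parallel training, all-reduce is the usual way to combine gradients across nodes (dividing the sum by the number of ranks gives the average). Running reduce-scatter and then all-gather produces the same result as a single all-reduce, a decomposition that ring-based all-reduce implementations commonly use.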

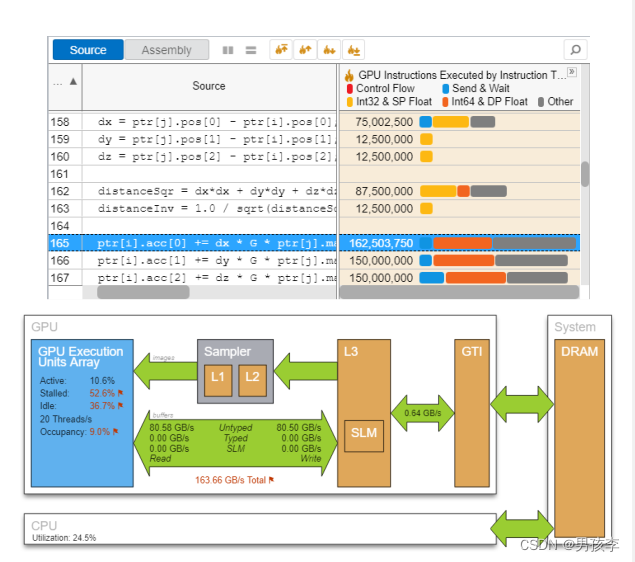

3.4 Intel® VTune™ Profiler

DPC++ profiling: tune for CPUs, GPUs, and FPGAs

▪ Profile Data Parallel C++ (DPC++): see which lines of DPC++ code consume the most time

▪ Tune for Intel CPUs, GPUs, and FPGAs: optimize for any supported hardware accelerator

▪ Optimize offload: tune OpenMP offload performance

▪ Wide range of performance profiles: CPU, GPU, FPGA, threading, memory, cache, storage…

▪ Support for popular languages: DPC++, C, C++, Fortran, Python, Go, Java, or a mix of languages

3.5 Intel® Advisor

Design assistance tailored to modern hardware

▪ Offload Advisor: estimates the performance of offloading code to accelerators

▪ Roofline analysis: optimizes CPU/GPU code for memory and compute

▪ Vectorization Advisor: adds and optimizes vectorization

▪ Threading Advisor: adds efficient threading to non-threaded applications

▪ Flow Graph Analyzer: creates and analyzes efficient flow graphs
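Roofline analysis is based on the roofline model: a kernel's attainable performance is capped either by peak compute throughput or by memory bandwidth multiplied by its arithmetic intensity (FLOPs per byte moved). The sketch below evaluates that formula for one kernel. The peak and bandwidth figures are made-up placeholders, not measurements of any Intel device, and the function names are hypothetical; Intel Advisor derives these quantities from measurements on the target hardware, whereas this sketch only applies the formula.

```python
def arithmetic_intensity(flops, bytes_moved):
    """Floating-point operations performed per byte of memory traffic."""
    return flops / bytes_moved


def attainable_gflops(intensity, peak_gflops, bandwidth_gbs):
    """Roofline model: min(peak compute, intensity * memory bandwidth)."""
    return min(peak_gflops, intensity * bandwidth_gbs)


# Hypothetical machine: 1000 GFLOP/s peak compute, 100 GB/s memory bandwidth.
PEAK_GFLOPS = 1000.0
BANDWIDTH_GBS = 100.0
ridge_point = PEAK_GFLOPS / BANDWIDTH_GBS   # intensity where the two roofs meet: 10 FLOP/byte

# Single-precision SAXPY, y[i] = a * x[i] + y[i]: 2 FLOPs per element and,
# assuming no cache reuse and ignoring write-allocate traffic, 12 bytes per
# element (read x[i], read y[i], write y[i], 4 bytes each).
n = 1_000_000
ai = arithmetic_intensity(2 * n, 12 * n)
bound = attainable_gflops(ai, PEAK_GFLOPS, BANDWIDTH_GBS)
print(f"AI = {ai:.3f} FLOP/byte, bound = {bound:.1f} GFLOP/s, "
      f"{'memory' if ai < ridge_point else 'compute'}-bound")
# prints "AI = 0.167 FLOP/byte, bound = 16.7 GFLOP/s, memory-bound"
```

A kernel that sits left of the ridge point, like this SAXPY, gains more from reducing memory traffic than from additional arithmetic throughput.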