Bilateral Filtering

1. Basic ideas

The basic idea of bilateral filtering is to take both the spatial-domain information and the range (pixel-value) information of each pixel into account at the same time. In effect, the neighborhood used for filtering is first partitioned according to pixel value, the class that the center pixel belongs to is given a relatively high weight, and a weighted sum over the neighborhood then yields the final result.

2. Implementation principle

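In bilateral filtering, the two components, the spatial domain and the range domain, have similar mathematical expressions:

$$h(x) = k_d^{-1}(x) \iint f(\xi)\, c(\xi, x)\, d\xi \qquad \text{(spatial domain)}$$

$$h(x) = k_r^{-1}(x) \iint f(\xi)\, s(f(\xi), f(x))\, d\xi \qquad \text{(range domain)}$$

Here the factor k in front of the integral is a normalization factor, namely the integral of the weights over all pixels; c and s are the closeness and similarity functions; x is the point being computed and ξ is a neighboring point; f(x) is the pixel value at x. f(x) → h(x) denotes the image before and after filtering. The final filter combines both weights:

$$h(x) = k^{-1}(x) \iint f(\xi)\, c(\xi, x)\, s(f(\xi), f(x))\, d\xi, \qquad k(x) = \iint c(\xi, x)\, s(f(\xi), f(x))\, d\xi$$

The spatial part gives the filter its smoothing behavior, and the range part gives it its edge-preserving behavior.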

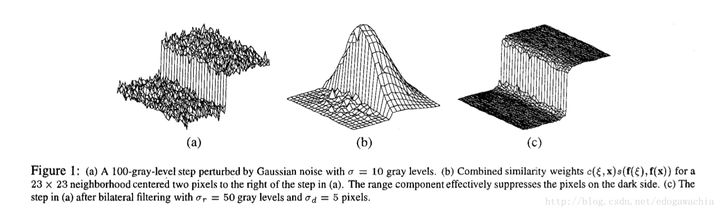

The figure shows the weights and the final filtering result near an edge. The weights drop off sharply at the edge, so almost only the pixels on the same side of the edge as the center pixel contribute to the weighted sum.

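One way to realize the two functions c and s is with Gaussian kernels, whose behavior is determined by their respective sigma parameters, σd and σr. For a neighboring point ξ and the center point x:

$$c(\xi, x) = \exp\left(-\frac{1}{2}\left(\frac{d(\xi, x)}{\sigma_d}\right)^2\right), \quad \text{where } d(\xi, x) = \lVert \xi - x \rVert$$

$$s(f(\xi), f(x)) = \exp\left(-\frac{1}{2}\left(\frac{\delta(f(\xi), f(x))}{\sigma_r}\right)^2\right), \quad \text{where } \delta(\phi, f) = \lVert \phi - f \rVert$$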
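The two Gaussian kernels can be sketched in a few lines of standard C++. This is only an illustration: the function names `closeness`, `similarity`, and `bilateralWeight` are made up for this example and are not part of any library, and the distance and value difference are assumed to be computed by the caller.

```cpp
#include <cassert>
#include <cmath>

// Closeness kernel c: Gaussian of the spatial distance between two positions.
// sigmaD plays the role of sigma_d in the text (hypothetical helper).
double closeness(double distance, double sigmaD) {
    assert(sigmaD > 0.0);
    const double t = distance / sigmaD;
    return std::exp(-0.5 * t * t);
}

// Similarity kernel s: Gaussian of the difference between two pixel values.
// sigmaR plays the role of sigma_r in the text (hypothetical helper).
double similarity(double valueDiff, double sigmaR) {
    assert(sigmaR > 0.0);
    const double t = valueDiff / sigmaR;
    return std::exp(-0.5 * t * t);
}

// Unnormalized bilateral weight of one neighbor: the product of both kernels.
double bilateralWeight(double distance, double valueDiff,
                       double sigmaD, double sigmaR) {
    return closeness(distance, sigmaD) * similarity(valueDiff, sigmaR);
}
```

With, say, σd = 3 and σr = 10, a neighbor one pixel away whose value differs by 100 gray levels gets a weight on the order of 1e-22, which is why pixels on the far side of a strong edge contribute almost nothing.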

3. Parameter discussion

Gaussian filtering in the spatial domain needs little introduction. Range filtering on its own, that is, weighting by pixel similarity without regard to spatial position, is merely a transformation of the histogram of the image being filtered. For a unimodal histogram, range filtering compresses the range of values toward the middle of the peak, that is, toward the mean.
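The compression toward the mean can be checked with a small sketch of range-only filtering. It assumes a Gaussian similarity kernel and treats the image as a plain list of values with no positions; `rangeFilter` is a hypothetical name chosen for this example.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Range-only filtering: each sample is replaced by a similarity-weighted
// mean of ALL samples, ignoring where they sit in the image.
std::vector<double> rangeFilter(const std::vector<double>& values, double sigmaR) {
    assert(sigmaR > 0.0);
    std::vector<double> out(values.size());
    for (std::size_t i = 0; i < values.size(); ++i) {
        double sum = 0.0, wsum = 0.0;
        for (double v : values) {
            const double t = (v - values[i]) / sigmaR;
            const double w = std::exp(-0.5 * t * t);  // Gaussian similarity weight
            sum += w * v;
            wsum += w;
        }
        out[i] = sum / wsum;  // wsum >= 1: the sample itself has weight 1
    }
    return out;
}
```

For the values 90, 100, 110 with σr = 20, the outer values move inward toward 100 while the middle value stays where it is, so the spread of the values shrinks.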

The selection of parameters is discussed as follows:

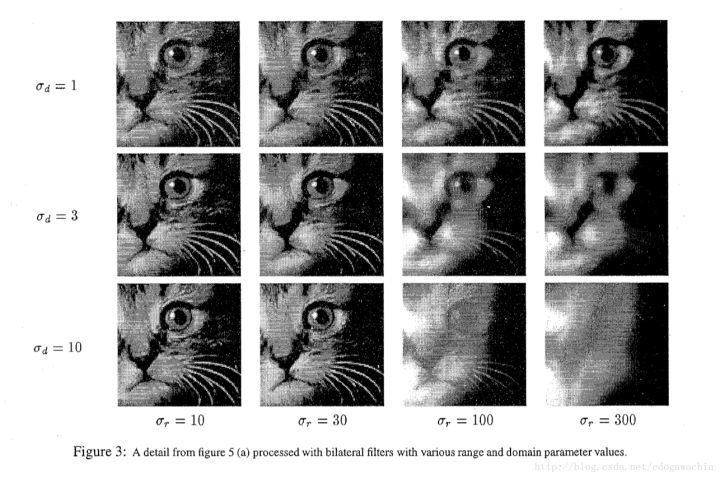

First, the two sigma values are the standard deviations of the Gaussian kernels. The larger the sigma, the smaller the differences between the weights, which means that the corresponding factor has less influence. Conversely, a smaller sigma means that the weight differences caused by that factor are emphasized more. Therefore:

- Whichever of the two sigmas is relatively smaller marks the aspect that is emphasized. If sigma_d becomes smaller, only the nearest neighbors contribute noticeably to the smoothing, so spatial closeness matters more. If sigma_r becomes smaller, the condition for a neighbor to count as belonging to the same class as the center pixel becomes stricter, so similarity in the range domain is emphasized.

Secondly, sigma_d controls the amount of smoothing in the spatial domain, so it matters most in slowly varying regions without edges. sigma_r controls the sensitivity to differences in pixel value, so reducing sigma_r emphasizes those differences and keeps edges sharp.

- When sigma_d becomes larger, the weights in each region of the image come mostly from the range kernel, so the filter is not very sensitive to spatial neighborhood information. When sigma_r becomes larger, the range is hardly considered and the weights come mostly from spatial distance, so the filter approaches ordinary Gaussian filtering and its edge-preserving ability is reduced. So to remove more noise in smooth areas, increase sigma_d; to preserve edges, reduce sigma_r.

- In the extreme cases, if sigma_d is infinite the filter is equivalent to range filtering, and if sigma_r is infinite it is equivalent to spatial Gaussian filtering.
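The effect of the parameters is easiest to see on a one-dimensional step edge. The following is a minimal sketch under stated assumptions: Gaussian kernels for both weights, a window that is simply truncated at the signal borders, and a hypothetical function name `bilateral1D`.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// 1-D bilateral filter with Gaussian kernels.
// Samples outside the signal are skipped (the window is truncated).
std::vector<double> bilateral1D(const std::vector<double>& f, int radius,
                                double sigmaD, double sigmaR) {
    assert(radius >= 0 && sigmaD > 0.0 && sigmaR > 0.0);
    const int n = static_cast<int>(f.size());
    std::vector<double> h(f.size());
    for (int x = 0; x < n; ++x) {
        double sum = 0.0, wsum = 0.0;
        for (int k = -radius; k <= radius; ++k) {
            const int xi = x + k;
            if (xi < 0 || xi >= n) continue;
            const double td = k / sigmaD;                // spatial term
            const double tr = (f[xi] - f[x]) / sigmaR;   // range term
            const double w = std::exp(-0.5 * td * td) * std::exp(-0.5 * tr * tr);
            sum += w * f[xi];
            wsum += w;
        }
        h[x] = sum / wsum;  // wsum >= 1: the center sample has weight 1
    }
    return h;
}
```

On the step signal 0, 0, 0, 0, 100, 100, 100, 100 with radius 2 and σd = 2, a small σr = 10 leaves the two samples next to the edge essentially unchanged, whereas a very large σr (for example 1e9) makes every range weight close to 1, so the result is the same blur that a plain Gaussian filter would produce and the edge is smeared.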

4. Discrete mathematical formula model

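$$BF[I]_p = \frac{1}{W_p} \sum_{q \in S} G_{\sigma_d}(\lVert p - q \rVert)\, G_{\sigma_r}(\lvert I_p - I_q \rvert)\, I_q, \qquad G_{\sigma}(t) = \exp\left(-\frac{t^2}{2\sigma^2}\right)$$

Here $\sigma_d$ and $\sigma_r$ are the filter parameters (standard deviations) of the spatial domain and the range domain, respectively, $I_p$ and $I_q$ are the pixel values of the pixels $p$ and $q$, respectively, and $S$ is the neighborhood window around $p$. The normalized weight coefficient is:

$$W_p = \sum_{q \in S} G_{\sigma_d}(\lVert p - q \rVert)\, G_{\sigma_r}(\lvert I_p - I_q \rvert)$$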

The kernel of the bilateral filter is the combined result of the spatial-domain kernel and the range (pixel-value) kernel. In flat areas of the image, pixel values change very little and the corresponding range weights are close to 1, so the spatial weights dominate and the filter behaves like a Gaussian blur. In edge areas, pixel values change sharply, so the range weights of pixels on the other side of the edge become very small and those pixels contribute almost nothing, which preserves the edge information.
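The flat-area and edge-area behavior can be checked by evaluating the discrete weighted sum at a single pixel. This is a sketch under assumptions: the image is a hypothetical `Image` alias over nested `std::vector<double>` rows, the window is square, neighbors outside the image are skipped, and `bilateralAt` is a name invented for this example.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

using Image = std::vector<std::vector<double>>;  // hypothetical grayscale image, img[row][col]

// Evaluate the normalized bilateral sum at pixel p = (py, px) over a
// (2*radius+1) x (2*radius+1) window; out-of-image neighbors are skipped.
double bilateralAt(const Image& img, int py, int px, int radius,
                   double sigmaD, double sigmaR) {
    assert(!img.empty() && radius >= 0 && sigmaD > 0.0 && sigmaR > 0.0);
    const int rows = static_cast<int>(img.size());
    const int cols = static_cast<int>(img[0].size());
    assert(py >= 0 && py < rows && px >= 0 && px < cols);
    const double ip = img[py][px];
    double sum = 0.0, wp = 0.0;
    for (int dy = -radius; dy <= radius; ++dy) {
        for (int dx = -radius; dx <= radius; ++dx) {
            const int qy = py + dy, qx = px + dx;
            if (qy < 0 || qy >= rows || qx < 0 || qx >= cols) continue;
            const double iq = img[qy][qx];
            const double spatial = std::exp(-(dx * dx + dy * dy) / (2.0 * sigmaD * sigmaD));
            const double range = std::exp(-(iq - ip) * (iq - ip) / (2.0 * sigmaR * sigmaR));
            sum += spatial * range * iq;
            wp += spatial * range;  // normalization coefficient
        }
    }
    return sum / wp;
}
```

On a constant image every range weight is exactly 1 and the result is the ordinary Gaussian-weighted mean, which equals the constant. On an image split into a dark half (10) and a bright half (200), a pixel next to the boundary keeps its own side's value with σr = 15, because the range weight across the edge is vanishingly small.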

5. Implementation of bilateral filtering code

    #include <opencv2/opencv.hpp>
    #include <cassert>
    #include <cmath>
    #include <cstdlib>
    #include <vector>

    using namespace cv;

    void BilateralFilter( const Mat& src, Mat& dst, int d, double sigma_color, double sigma_space, int borderType )
    {
        int cn = src.channels();
        int i, j, k, maxk, radius;
        Size size = src.size();

        // dst must already have the same type and size as src and must not share its data
        CV_Assert( (src.type() == CV_8UC1 || src.type() == CV_8UC3) &&
                   src.type() == dst.type() && src.size() == dst.size() &&
                   src.data != dst.data );

        if( sigma_color <= 0 )
        {
            sigma_color = 1;
        }
        if( sigma_space <= 0 )
        {
            sigma_space = 1;
        }

        // Gaussian coefficients for the color (range) and spatial weights, mean 0:
        // weight = exp(x^2 * coeff) with coeff = -1/(2*sigma^2)
        double gauss_color_coeff = -0.5/(sigma_color*sigma_color);
        double gauss_space_coeff = -0.5/(sigma_space*sigma_space);

        // radius is the size of the spatial neighborhood: half of the window size
        if( d <= 0 )
        {
            radius = cvRound(sigma_space*1.5);
        }
        else
        {
            radius = d/2;
        }
        radius = MAX(radius, 1);
        d = radius*2 + 1;

        // Pad the source so that the window never reads outside the image
        Mat temp;
        copyMakeBorder( src, temp, radius, radius, radius, radius, borderType );

        std::vector<float> _color_weight(cn*256);
        std::vector<float> _space_weight(d*d);
        std::vector<int> _space_ofs(d*d);
        float* color_weight = &_color_weight[0];
        float* space_weight = &_space_weight[0];
        int* space_ofs = &_space_ofs[0];

        // Initialize the color-related filter coefficients: exp(-x^2/(2*sigma^2)),
        // indexed by the (summed) absolute channel difference
        for( i = 0; i < 256*cn; i++ )
        {
            color_weight[i] = (float)std::exp(i*i*gauss_color_coeff);
        }

        // Initialize the spatial filter coefficients and byte offsets (circular window)
        for( i = -radius, maxk = 0; i <= radius; i++ )
        {
            for( j = -radius; j <= radius; j++ )
            {
                double r = std::sqrt((double)i*i + (double)j*j);
                if( r > radius )
                {
                    continue;
                }
                space_weight[maxk] = (float)std::exp(r*r*gauss_space_coeff);
                space_ofs[maxk++] = i*(int)temp.step + j*cn;
            }
        }

        // Compute the filtered pixel values
        for( i = 0; i < size.height; i++ )
        {
            const uchar* sptr = temp.ptr(i+radius) + radius*cn; // target pixel row in the padded image
            uchar* dptr = dst.ptr(i);

            if( cn == 1 )
            {
                // Traverse the row
                for( j = 0; j < size.width; j++ )
                {
                    float sum = 0, wsum = 0;
                    int val0 = sptr[j];
                    // Traverse the spatial neighborhood of the current center pixel
                    for( k = 0; k < maxk; k++ )
                    {
                        int val = sptr[j + space_ofs[k]];
                        float w = space_weight[k] * color_weight[std::abs(val - val0)];
                        sum += val*w;
                        wsum += w;
                    }
                    // Overflow is impossible here, so CV_CAST_8U is not needed.
                    dptr[j] = (uchar)cvRound(sum/wsum);
                }
            }
            else
            {
                assert( cn == 3 );
                for( j = 0; j < size.width*3; j += 3 )
                {
                    float sum_b = 0, sum_g = 0, sum_r = 0, wsum = 0;
                    int b0 = sptr[j], g0 = sptr[j+1], r0 = sptr[j+2];
                    for( k = 0; k < maxk; k++ )
                    {
                        const uchar* sptr_k = sptr + j + space_ofs[k];
                        int b = sptr_k[0], g = sptr_k[1], r = sptr_k[2];
                        // The range weight uses the L1 distance between the two colors
                        float w = space_weight[k] * color_weight[std::abs(b - b0) + std::abs(g - g0) + std::abs(r - r0)];
                        sum_b += b*w;
                        sum_g += g*w;
                        sum_r += r*w;
                        wsum += w;
                    }
                    wsum = 1.f/wsum;
                    b0 = cvRound(sum_b*wsum);
                    g0 = cvRound(sum_g*wsum);
                    r0 = cvRound(sum_r*wsum);
                    dptr[j] = (uchar)b0;
                    dptr[j+1] = (uchar)g0;
                    dptr[j+2] = (uchar)r0;
                }
            }
        }
    }
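The two precomputation steps in the listing, the color-weight lookup table and the circular spatial window, can be tried out without OpenCV. The sketch below is a standard-library approximation of those steps, not the listing itself: `buildColorWeights`, `SpaceTap`, and `buildSpaceTaps` are hypothetical names, and each tap stores a (dy, dx) offset where the listing stores a byte offset into the padded image.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Range-weight table indexed by the summed absolute channel difference,
// which lies in [0, 256*channels) for 8-bit images.
std::vector<float> buildColorWeights(int channels, double sigmaColor) {
    assert(channels > 0 && sigmaColor > 0.0);
    const double coeff = -0.5 / (sigmaColor * sigmaColor);
    std::vector<float> table(static_cast<std::size_t>(channels) * 256);
    for (std::size_t i = 0; i < table.size(); ++i) {
        const double diff = static_cast<double>(i);
        table[i] = static_cast<float>(std::exp(diff * diff * coeff));
    }
    return table;
}

// One entry of the spatial window (hypothetical helper type).
struct SpaceTap {
    int dy, dx;
    float weight;
};

// Spatial weights for all offsets inside a circle of the given radius.
std::vector<SpaceTap> buildSpaceTaps(int radius, double sigmaSpace) {
    assert(radius >= 1 && sigmaSpace > 0.0);
    const double coeff = -0.5 / (sigmaSpace * sigmaSpace);
    std::vector<SpaceTap> taps;
    for (int dy = -radius; dy <= radius; ++dy) {
        for (int dx = -radius; dx <= radius; ++dx) {
            const double r2 = static_cast<double>(dy) * dy + static_cast<double>(dx) * dx;
            if (r2 > static_cast<double>(radius) * radius) continue;  // outside the circle
            taps.push_back({dy, dx, static_cast<float>(std::exp(r2 * coeff))});
        }
    }
    return taps;
}
```

Because the difference between two 8-bit values is an integer below 256 per channel, the exponential only has to be evaluated once per possible difference, and the inner loop reduces to a table lookup and a multiplication. The circular test also means that a radius of 1 yields 5 taps instead of 9, and a radius of 2 yields 13 instead of 25.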