Table of contents

2. Learn word embedding in the embedding layer of keras

2.3 Learning Embedding Examples

3. Use pre-trained word vectors for embedding learning

1. Keras embedding layer

Keras' embedding layer is initialized with random weights and will learn embeddings for all words in the dataset.

It is a flexible layer that can be used in various ways such as:

1. It can be used alone to learn a word embedding which can later be used in another model.

2. It can be used as part of a deep learning model where the embedding is learned along with the model itself.

3. It can be used to load the pre-trained word embedding model, which is a kind of migration learning, converting the word embedding model into a weight matrix and inputting it into the Embedding layer of keras

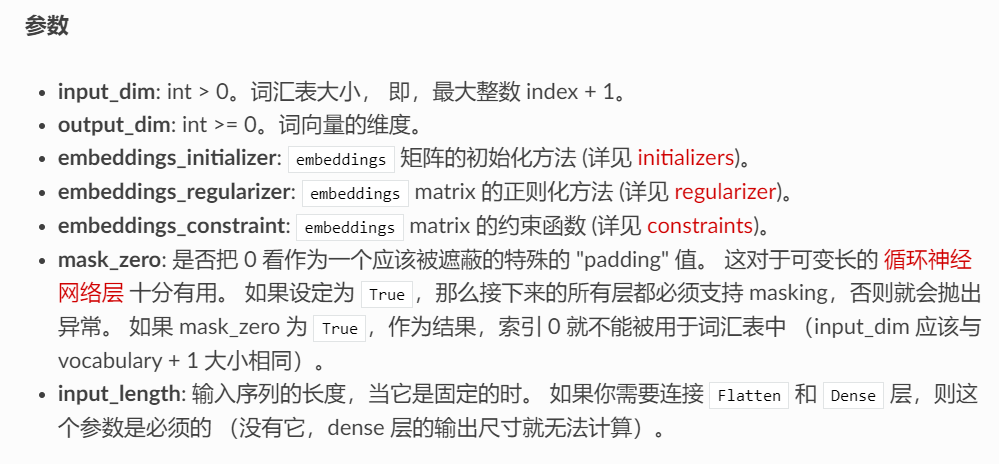

keras.layers.Embedding(input_dim, output_dim, embeddings_initializer='uniform', embeddings_regularizer=None, activity_regularizer=None, embeddings_constraint=None, mask_zero=False, input_length=None)

input size

(样本数, sequence_length(句子长度,句子的词长度需要人为统一)) A 2D tensor of size .

output size

A 3D tensor whose dimensions are the dimensions of (样本数, sequence_length, output_dim(the set vector after embedding .))

If you want to connect the dense layer directly to the embedding layer, you must first compress y and z into a row, forming a 2D moment of (x, yz)

2. Learn word embedding in the embedding layer of keras

There are two ways to set the initial value of keras embedding: randomly initialize Embedding, and use the weights parameter to specify the initial value of embedding

Here is random initialization of Embedding, and word embedding learning for words in the data set

2.1、one_hot

Encode text as a list of word indices of size n, mapping text words to numbers.

2.2、pad_sequences

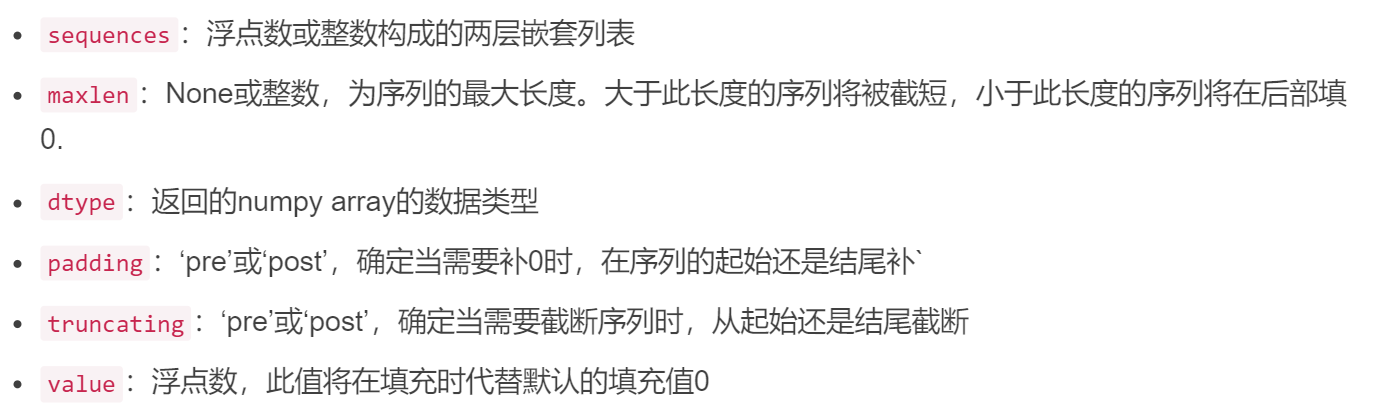

Keras requires the inputs to have the same length. keras can only accept sequence inputs of the same length. Therefore sentences have different lengths, and pad_sequences() is needed. This function is to convert the sequence into a new sequence of the same length after filling.

keras.preprocessing.sequence.pad_sequences(sequences,

maxlen=None,

dtype='int32',

padding='pre',

truncating='pre',

value=0.)

Parameter Description

return value

Returns a 2D tensor with a length of maxlen

example

>>>list_1 = [[2,3,4]]

>>>keras.preprocessing.sequence.pad_sequences(list_1, maxlen=10)

array([[0, 0, 0, 0, 0, 0, 0, 2, 3, 4]], dtype=int32)

>>>list_2 = [[1,2,3,4,5]]

>>>keras.preprocessing.sequence.pad_sequences(list_2, maxlen=10)

array([[0, 0, 0, 0, 0, 1, 2, 3, 4, 5]], dtype=int32)

2.3 Learning Embedding Examples

from keras.preprocessing.text import one_hot

from keras.preprocessing.sequence import pad_sequences

from keras.models import Sequential

from keras.layers import Dense

from keras.layers import Flatten

from keras.layers.embeddings import Embedding

# define documents

docs = ['Well done!',

'Good work',

'Great effort',

'nice work',

'Excellent!',

'Weak',

'Poor effort!',

'not good',

'poor work',

'Could have done better.']

# define class labels

labels = [1,1,1,1,1,0,0,0,0,0]

# integer encode the documents

vocab_size = 50

encoded_docs = [one_hot(d, vocab_size) for d in docs]

print(encoded_docs)

# pad documents to a max length of 4 words

'''该嵌入具有50词汇及输入长度为4,我们将选择8尺寸的小嵌入空间。

该模型是一个简单的二进制分类模型。重要的是,嵌入层的输出将是4个向量,每个向量8个维度,每个单词一个。将其平坦化为一个32维度的向量,以传递到Dense输出层。'''

max_length = 4

padded_docs = pad_sequences(encoded_docs, maxlen=max_length, padding='post')

print(padded_docs)

# define the model

model = Sequential()

model.add(Embedding(vocab_size, 8, input_length=max_length))

model.add(Flatten())

model.add(Dense(1, activation='sigmoid'))

# compile the model

model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['acc'])

# summarize the model

print(model.summary())

# fit the model

model.fit(padded_docs, labels, epochs=50, verbose=0)

# evaluate the model

loss, accuracy = model.evaluate(padded_docs, labels, verbose=0)

print('Accuracy: %f' % (accuracy*100))3. Use pre-trained word vectors for embedding learning

Use the weights parameter to specify the initial value of embedding

1. Load the entire GloVe word embedding file into memory as a dictionary of word embedding arrays.

Map words to integers and integers to words. Keras provides a tokenizer class that can fit the training data, can convert text to sequences by calling the texts_to_sequences() method of the tokenizer class, and provides access to a dictionary of words mapped to integers in the word_index attribute.

2. Create an embedding matrix for each word in the training dataset.

By enumerating all unique words in Tokenizer.word_index and locating the embedding weight vector from the loaded GloVe embedding.

3. Define the model, train and evaluate.

The key difference is that the embedding layer can be transferred with GloVe word embedding weights. We chose the 100-dimensional version, so the embedding layer must be defined with output_dim as 100. Finally, we do not update the word weights learned in this model, so we will set the model's trainable property to False.

Trying to learn with fixed pretrained embeddings is encouraged

from numpy import asarray

from numpy import zeros

from keras.preprocessing.text import Tokenizer

from keras.preprocessing.sequence import pad_sequences

from keras.models import Sequential

from keras.layers import Dense

from keras.layers import Flatten

from keras.layers import Embedding

# define documents

docs = ['Well done!',

'Good work',

'Great effort',

'nice work',

'Excellent!',

'Weak',

'Poor effort!',

'not good',

'poor work',

'Could have done better.']

# define class labels

labels = [1,1,1,1,1,0,0,0,0,0]

# prepare tokenizer

t = Tokenizer()

t.fit_on_texts(docs)

vocab_size = len(t.word_index) + 1

# integer encode the documents

encoded_docs = t.texts_to_sequences(docs)

print(encoded_docs)

# pad documents to a max length of 4 words

max_length = 4

padded_docs = pad_sequences(encoded_docs, maxlen=max_length, padding='post')

print(padded_docs)

# load the whole embedding into memory

embeddings_index = dict()

f = open('../glove_data/glove.6B/glove.6B.100d.txt')

for line in f:

values = line.split()

word = values[0]

coefs = asarray(values[1:], dtype='float32')

embeddings_index[word] = coefs

f.close()

print('Loaded %s word vectors.' % len(embeddings_index))

# create a weight matrix for words in training docs

embedding_matrix = zeros((vocab_size, 100))

for word, i in t.word_index.items():

embedding_vector = embeddings_index.get(word)

if embedding_vector is not None:

embedding_matrix[i] = embedding_vector

# define model

model = Sequential()

e = Embedding(vocab_size, 100, weights=[embedding_matrix], input_length=4, trainable=False)

model.add(e)

model.add(Flatten())

model.add(Dense(1, activation='sigmoid'))

# compile the model

model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['acc'])

# summarize the model

print(model.summary())

# fit the model

model.fit(padded_docs, labels, epochs=50, verbose=0)

# evaluate the model

loss, accuracy = model.evaluate(padded_docs, labels, verbose=0)

print('Accuracy: %f' % (accuracy*100))Two small examples take you to learn the word embedding layer