Paddle Lite is an on-device inference engine, a major upgrade of Paddle Mobile. It supports multiple hardware targets, multiple platforms, and hybrid scheduling across hardware. It provides efficient, lightweight inference for on-device AI applications, including those on mobile phones, works around the limited compute and memory of such devices, and aims to promote the wider deployment of AI applications.

The deployment process generally has four steps:

- 1) Convert a model trained in another framework (PyTorch/TensorFlow) to a Paddle model

- 2) Optimize the Paddle model into a lightweight model

- 3) Run inference on the lightweight model through the Paddle-Lite API

- 4) Integrate the result into the business code to complete the deployment

1. X2Paddle model conversion tool

X2Paddle currently supports converting inference models from four major frameworks (Caffe, TensorFlow, ONNX, and PyTorch), as well as converting PyTorch training projects, which covers the mainstream deep learning frameworks. A single command line or API call completes the conversion. For details, refer to the link below:

https://github.com/PaddlePaddle/X2Paddle
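The command-line route can be sketched as follows. This is a minimal sketch, assuming the `x2paddle` command is installed and accepts the `--framework`, `--model`, `--prototxt`, `--weight`, and `--save_dir` flags described in the X2Paddle README. The helper names `x2paddle_command` and `convert` are hypothetical, and the file names in the usage note are placeholders.

```python
import subprocess

# Frameworks this sketch handles on the command line. PyTorch is left out on
# purpose: it is converted through the Python API, from a model loaded in memory.
CLI_FRAMEWORKS = ("tensorflow", "onnx", "caffe")


def x2paddle_command(framework, save_dir, model=None, prototxt=None, weight=None):
    """Build the argument list for one x2paddle command-line conversion."""
    if framework not in CLI_FRAMEWORKS:
        raise ValueError(f"unsupported framework for the CLI sketch: {framework}")
    cmd = ["x2paddle", f"--framework={framework}", f"--save_dir={save_dir}"]
    if framework == "caffe":
        # Caffe models come as a structure file plus a weight file.
        cmd += [f"--prototxt={prototxt}", f"--weight={weight}"]
    else:
        # TensorFlow (.pb) and ONNX (.onnx) models come as a single file.
        cmd.append(f"--model={model}")
    return cmd


def convert(framework, save_dir, **files):
    """Run the conversion; raises CalledProcessError if x2paddle fails."""
    subprocess.run(x2paddle_command(framework, save_dir, **files), check=True)
```

For example, `convert("onnx", "pd_model", model="model.onnx")` would run `x2paddle --framework=onnx --save_dir=pd_model --model=model.onnx`.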

Note that the conversion happens in memory; it is not a simple file-to-file conversion. You must first build the PyTorch model with torch, load its weights so that the complete model is in memory, and only then convert it into a Paddle model and save it.

1.1 Convert a PyTorch model to a Paddle model

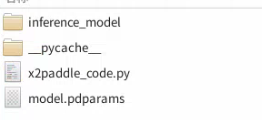

For example, given a PyTorch model model.pt, the final result is as follows:

The Paddle model is saved in the inference_model directory. Inside it, model.pdmodel is the model structure file and model.pdiparams is the model weight file. For the specific conversion process, refer to the link below:

X2Paddle/pytorch2paddle.ipynb at develop · PaddlePaddle/X2Paddle · GitHub
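The in-memory conversion can be sketched in Python as below. This is a minimal sketch, not the notebook's exact code: it assumes that model.pt holds a state dict (not a pickled module), that `x2paddle.convert.pytorch2paddle` is available with the `module`, `save_dir`, `jit_type`, and `input_examples` parameters, and that tracing with one random example input is adequate for the model. The names `convert_torch_to_paddle`, `expected_outputs`, and `build_model` are hypothetical.

```python
from pathlib import Path


def expected_outputs(save_dir):
    """Paths of the structure and weight files inside inference_model."""
    inference_dir = Path(save_dir) / "inference_model"
    return inference_dir / "model.pdmodel", inference_dir / "model.pdiparams"


def convert_torch_to_paddle(weights_path, build_model, example_shape, save_dir="pd_model"):
    """Load a PyTorch model into memory, then convert and save it as a Paddle model.

    build_model is a caller-supplied function returning the torch.nn.Module
    whose architecture matches the saved weights (an assumption of this sketch).
    """
    # Imported lazily so the path helper above works without torch installed.
    import torch
    from x2paddle.convert import pytorch2paddle

    model = build_model()                                  # 1. build the network
    state = torch.load(weights_path, map_location="cpu")   # 2. read the weights
    model.load_state_dict(state)                           # 3. load them into the model
    model.eval()                                           # inference mode before tracing

    example = torch.randn(*example_shape)                  # dummy input used for tracing
    pytorch2paddle(module=model,
                   save_dir=save_dir,
                   jit_type="trace",
                   input_examples=[example])
    return expected_outputs(save_dir)
```

A call such as `convert_torch_to_paddle("model.pt", build_model, (1, 3, 224, 224))` would then be expected to produce `pd_model/inference_model/model.pdmodel` and `model.pdiparams`; the input shape here is only a placeholder.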

2. The opt tool optimizes the model

Paddle-Lite provides a variety of strategies to automatically optimize the original trained model, including quantization, subgraph fusion, hybrid scheduling, and kernel optimization. To make the optimization process more convenient, the opt tool runs these steps automatically and outputs a lightweight, optimized executable model.

A Paddle model comes in one of two forms, combined or non-combined. In the combined form, the model consists of one structure file and one weight file that holds all parameters; in the non-combined form, the structure file sits in a directory alongside a separate file for each parameter. opt converts the Paddle model into a .nb file in naive_buffer format (recommended) or into a protobuf file. Set the --valid_targets parameter to the platform the model will run on, arm or x86; if it does not match the hardware architecture, the results will be wrong. Read the parameter descriptions carefully when using the opt tool. For details, refer to the following link:

Python calls opt to convert the model — Paddle-Lite documentation
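Calling opt from Python can be sketched as follows. This is a minimal sketch, assuming the `paddlelite` package is installed and exposes `Opt` with `set_model_file`, `set_param_file`, `set_model_dir`, `set_valid_places`, `set_model_type`, `set_optimize_out`, and `run`. It also assumes a combined model uses the file names model.pdmodel and model.pdiparams, and it restricts targets to the two named above as a simplification. The helpers `describe_model` and `optimize` are hypothetical.

```python
from pathlib import Path

# Only the two targets discussed in the text; a simplification of this sketch.
SUPPORTED_TARGETS = ("arm", "x86")


def describe_model(model_dir):
    """Guess the model form from the directory contents.

    Returns ("combined", structure_file, weight_file) when both
    model.pdmodel and model.pdiparams exist, else ("non-combined", model_dir).
    """
    d = Path(model_dir)
    structure, weights = d / "model.pdmodel", d / "model.pdiparams"
    if structure.is_file() and weights.is_file():
        return ("combined", str(structure), str(weights))
    return ("non-combined", str(d))


def optimize(model_dir, target, out_prefix):
    """Convert a Paddle model to naive_buffer format and return the .nb path."""
    if target not in SUPPORTED_TARGETS:
        raise ValueError(f"target must be one of {SUPPORTED_TARGETS}, got {target!r}")

    # Imported lazily so the helpers above work without paddlelite installed.
    from paddlelite.lite import Opt

    opt = Opt()
    layout = describe_model(model_dir)
    if layout[0] == "combined":
        opt.set_model_file(layout[1])
        opt.set_param_file(layout[2])
    else:
        opt.set_model_dir(layout[1])
    opt.set_valid_places(target)          # must match the deployment hardware
    opt.set_model_type("naive_buffer")    # the recommended output format
    opt.set_optimize_out(out_prefix)
    opt.run()
    return out_prefix + ".nb"
```

For instance, `optimize("pd_model/inference_model", "arm", "model_opt")` would be expected to write `model_opt.nb` for an Arm device.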

3. Paddle-Lite deployment and inference

Deployment and inference with Paddle-Lite depend on the hardware platform; it can be deployed on x86, Arm (Android), and Arm (Linux). Paddle-Lite can read a Paddle model directly or read a model optimized by opt. The steps for using Paddle-Lite are as follows:

- Compile the Paddle-Lite source code to generate library files for the target platform.

- From another language (such as C++), call the Paddle-Lite API to load the model converted by opt and run inference to get the result. This requires writing the preprocessing that runs before inference and the postprocessing that runs after it in C++, and both must be consistent with what was used for the trained model.

- Generally, once the C++ code is written, it is compiled and packaged into an interface for the business code to call.
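The load, preprocess, run, and postprocess flow above can be sketched with Paddle-Lite's Python API, used here only to keep the examples in one language; a C++ implementation would follow the same sequence. This is a minimal sketch, assuming `paddlelite.lite` provides `MobileConfig` and `create_paddle_predictor`, that the model has one input and one output, and that it is a classifier. The mean/std normalization and the top-1 postprocessing are placeholders that must be replaced by whatever the training pipeline used. The function names are hypothetical.

```python
def normalize(pixels, mean, std):
    """Preprocessing: scale 0-255 values to [0, 1], then standardize.

    The constants must match those used during training.
    """
    return [(p / 255.0 - mean) / std for p in pixels]


def top1(scores):
    """Postprocessing: index of the highest score (assumes a classifier)."""
    return max(range(len(scores)), key=scores.__getitem__)


def run_inference(nb_path, pixels, shape, mean=0.5, std=0.5):
    """Run one inference on an opt-optimized .nb model and return the top-1 class."""
    # Imported lazily so the pure-Python helpers work without these packages.
    import numpy as np
    from paddlelite.lite import MobileConfig, create_paddle_predictor

    config = MobileConfig()
    config.set_model_from_file(nb_path)        # load the model produced by opt
    predictor = create_paddle_predictor(config)

    data = np.array(normalize(pixels, mean, std), dtype="float32").reshape(shape)
    predictor.get_input(0).from_numpy(data)    # copy the input into the first input tensor
    predictor.run()
    scores = predictor.get_output(0).numpy().flatten().tolist()
    return top1(scores)
```

Keeping preprocessing and postprocessing in small separate functions makes it easier to check them against the training code before wrapping the whole flow in a business-facing interface.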

In practice, refer to the official Paddle-Lite documentation ("Welcome to Paddle-Lite's documentation!").