1. Introduction to TFLite

( 1 ) TFLite concept

- TFLite (TensorFlow Lite) is Google's lightweight inference library, used mainly on mobile devices.

- The core idea of TFLite is to convert a pre-trained model into a .tflite model file and deploy it on the mobile device.

- The source model for TFLite can be a TensorFlow SavedModel or frozen graph, or a Keras model.

( 2 ) Advantages of TFLite

- Model files are serialized with FlatBuffers; this format takes up less disk space and loads faster.

- The model can be quantized: float parameters can be quantized to uint8, which makes the model file smaller and computation faster.

- Models can be pruned, structurally merged, and distilled.

- NNAPI is supported, so the underlying Android interface can be called to make use of heterogeneous computing capabilities.

( 3 ) TFlite quantization

a. Benefits of quantization

- Smaller storage size : Small models take up less storage space on the user's device. For example, an Android application that uses a small model will take up less storage space on the user's mobile device.

- Smaller download size : Small models take less time and less bandwidth to download to the user's device.

- Less memory usage : Small models use less memory at runtime, freeing up memory for other parts of the app, which can translate into better performance and stability.
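To make the size difference concrete, here is a small back-of-the-envelope sketch. The parameter count is hypothetical, and the calculation covers only the stored weights, ignoring the graph structure and metadata that a model file also contains.

```python
# Bytes needed to store one parameter in each numeric format.
BYTES_PER_VALUE = {"float32": 4, "float16": 2, "uint8": 1}


def weights_size_mb(num_params: int, dtype: str) -> float:
    """Approximate size of the stored weights in megabytes (10**6 bytes)."""
    return num_params * BYTES_PER_VALUE[dtype] / 1e6


num_params = 4_000_000  # hypothetical parameter count, for illustration only
for dtype in BYTES_PER_VALUE:
    print(f"{dtype}: {weights_size_mb(num_params, dtype):.1f} MB")
```

Under these assumptions, storing the weights as uint8 takes a quarter of the space that float32 does.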

b. The quantization process

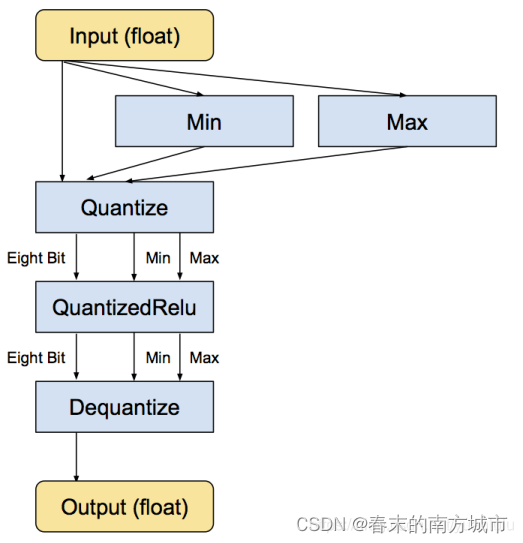

TFLite quantization does not compute in uint8 from end to end. Instead, it stores the minimum and maximum values of each layer and divides this interval linearly into 256 discrete values, so each floating-point number in the range can be represented by an eight-bit integer, namely the nearest discrete value. For example, with a minimum of -3 and a maximum of 6, the byte value 0 represents -3, 255 represents 6, and 128 represents approximately 1.5. Each operation is first computed with integers and converted back to floating point on output. The figure below is a schematic diagram of quantized ReLU.

[Figure: schematic diagram of quantized ReLU]
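The linear mapping described above can be sketched in a few lines of plain Python. This only illustrates the arithmetic for the range [-3, 6] and is not TFLite's actual implementation; the names `make_quantizer`, `quantize` and `dequantize` are made up for this example.

```python
def make_quantizer(min_val: float, max_val: float, levels: int = 256):
    """Return (quantize, dequantize) for a linear mapping of [min_val, max_val]."""
    span = max_val - min_val
    steps = levels - 1  # 256 levels give 255 equal steps

    def quantize(x: float) -> int:
        # Clamp to the stored range, then snap to the nearest level (ties round up).
        x = min(max(x, min_val), max_val)
        return int((x - min_val) * steps / span + 0.5)

    def dequantize(q: int) -> float:
        # Map an integer level back to the real value it stands for.
        return min_val + q * span / steps

    return quantize, dequantize


quantize, dequantize = make_quantizer(-3.0, 6.0)
print(quantize(-3.0), quantize(6.0), quantize(1.5))
print(dequantize(0), dequantize(255), round(dequantize(128), 3))
```

The value 1.5 falls exactly halfway between levels 127 and 128, so level 128 really stands for about 1.518, which is why the 1.5 in the example is only approximate.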

TensorFlow official quantization documentation

c. Implementing quantization

Post-training dynamic range quantization

| import tensorflow as tf |
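A minimal sketch of post-training dynamic range quantization with the `tf.lite.TFLiteConverter` API, assuming TensorFlow 2.x and a model exported as a SavedModel. The function name and both paths are hypothetical.

```python
def convert_dynamic_range(saved_model_dir: str, output_path: str) -> int:
    """Convert a SavedModel to a dynamic-range-quantized .tflite file.

    Returns the size of the written file in bytes.
    """
    import tensorflow as tf  # imported on use; TensorFlow 2.x assumed

    converter = tf.lite.TFLiteConverter.from_saved_model(saved_model_dir)
    # With Optimize.DEFAULT and no representative dataset, the converter
    # applies dynamic range quantization: weights are stored as 8-bit integers.
    converter.optimizations = [tf.lite.Optimize.DEFAULT]
    tflite_model = converter.convert()  # serialized model as bytes

    with open(output_path, "wb") as f:
        f.write(tflite_model)
    return len(tflite_model)
```

It would be called as, for example, `convert_dynamic_range("saved_model/", "model_dynamic.tflite")`.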

Post-training float16 quantization

| import tensorflow as tf |
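For float16, the converter additionally needs to be told that float16 is an allowed type. A minimal sketch under the same assumptions (TensorFlow 2.x, a SavedModel as input, hypothetical function name and paths):

```python
def convert_float16(saved_model_dir: str, output_path: str) -> int:
    """Convert a SavedModel to a .tflite file with float16 weights.

    Returns the size of the written file in bytes.
    """
    import tensorflow as tf  # imported on use; TensorFlow 2.x assumed

    converter = tf.lite.TFLiteConverter.from_saved_model(saved_model_dir)
    converter.optimizations = [tf.lite.Optimize.DEFAULT]
    # Restricting the supported types to float16 stores the weights as float16.
    converter.target_spec.supported_types = [tf.float16]
    tflite_model = converter.convert()

    with open(output_path, "wb") as f:
        f.write(tflite_model)
    return len(tflite_model)
```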

Post-training int8 quantization

| import tensorflow as tf |
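Full integer quantization also quantizes activations, so the converter needs sample inputs to calibrate their ranges. The sketch below assumes TensorFlow 2.x, a single-input SavedModel, and a hypothetical `calibration_batches` iterable of float32 NumPy arrays shaped like the model input. Choosing uint8 for the input and output types is likewise an assumption made for this example.

```python
def convert_int8(saved_model_dir: str, output_path: str, calibration_batches) -> int:
    """Convert a SavedModel to a fully integer-quantized .tflite file.

    Returns the size of the written file in bytes.
    """
    import tensorflow as tf  # imported on use; TensorFlow 2.x assumed

    def representative_dataset():
        # The converter runs these samples through the model to record
        # the value range of every activation tensor.
        for batch in calibration_batches:
            yield [batch]

    converter = tf.lite.TFLiteConverter.from_saved_model(saved_model_dir)
    converter.optimizations = [tf.lite.Optimize.DEFAULT]
    converter.representative_dataset = representative_dataset
    # Fail the conversion if any op cannot be expressed with int8 kernels.
    converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]
    converter.inference_input_type = tf.uint8
    converter.inference_output_type = tf.uint8
    tflite_model = converter.convert()

    with open(output_path, "wb") as f:
        f.write(tflite_model)
    return len(tflite_model)
```

A few hundred representative samples are usually enough for calibration; they do not need labels.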

Note: float32 and float16 models can run on the GPU, whereas int8-quantized models can only run on the CPU.

2. TFLite model conversion

( 1 ) Save the TFLite model during training

| import tensorflow as tf |
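One way to write out a .tflite file straight from a training script, sketched under the assumption that the model is a `tf.keras` model in TensorFlow 2.x; other training setups need a different converter entry point. `export_keras_to_tflite` and the output path are hypothetical names.

```python
def export_keras_to_tflite(model, output_path: str) -> int:
    """Convert an in-memory Keras model and write it as a .tflite file.

    Returns the size of the written file in bytes.
    """
    import tensorflow as tf  # imported on use; TensorFlow 2.x assumed

    converter = tf.lite.TFLiteConverter.from_keras_model(model)
    tflite_model = converter.convert()  # serialized model as bytes

    with open(output_path, "wb") as f:
        f.write(tflite_model)
    return len(tflite_model)
```

It can be called right after `model.fit(...)`, for example `export_keras_to_tflite(model, "model.tflite")`.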

( 2 ) Convert TensorFlow models in other formats to TFLite models

First, install Bazel; see https://docs.bazel.build/versions/master/install-ubuntu.html. Only the "Installing using binary installer" part needs to be completed. Then clone the TensorFlow source code:

| git clone https://github.com/tensorflow/tensorflow.git |

Then build the conversion tools, which may take a long time:

| cd tensorflow/ |

Once the conversion tools are built, start converting the model. The following step freezes the graph:

- input_graph corresponds to the .pb file;

- input_checkpoint corresponds to mobilenet_v1_1.0_224.ckpt.data-00000-of-00001; drop the .data-00000-of-00001 suffix when passing it in;

- output_node_names can be obtained from mobilenet_v1_1.0_224_info.txt.

| ./freeze_graph --input_graph=/mobilenet_v1_1.0_224/mobilenet_v1_1.0_224_frozen.pb \ |

Convert the frozen graph to a TFLite model:

- input_file is the graph that has been frozen;

- output_file is the output path of the converted model;

- output_arrays can be obtained from mobilenet_v1_1.0_224_info.txt;

- input_shapes is the shape of the input data used for prediction.

| ./toco --input_file=/tmp/mobilenet_v1_1.0_224_frozen.pb \ |
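The same conversion can also be driven from Python instead of the toco binary. This is a minimal sketch, assuming TensorFlow 2.x with the `tf.compat.v1` converter API. The function name and all arguments are placeholders, and the array names still have to be taken from mobilenet_v1_1.0_224_info.txt.

```python
def frozen_graph_to_tflite(graph_def_file, input_arrays, output_arrays,
                           input_shapes, output_path):
    """Convert a frozen .pb graph to a .tflite file.

    input_arrays / output_arrays: lists of tensor names in the frozen graph.
    input_shapes: dict mapping each input name to its shape, e.g. {"name": [1, H, W, C]}.
    Returns the size of the written file in bytes.
    """
    import tensorflow as tf  # imported on use; TensorFlow 2.x assumed

    converter = tf.compat.v1.lite.TFLiteConverter.from_frozen_graph(
        graph_def_file,
        input_arrays,
        output_arrays,
        input_shapes=input_shapes,
    )
    tflite_model = converter.convert()

    with open(output_path, "wb") as f:
        f.write(tflite_model)
    return len(tflite_model)
```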

( 3 ) Convert the model using checkpoints

- Save the TensorFlow model as a .pb file

| import tensorflow as tf |

- Read the model file

- Convert the .pb file to TFLite

3. Call the TFLite model file on the Android side

( 1 ) The process of calling the TFLite model in Android Studio to run inference

- Define an interpreter

- Initialize the interpreter (load the TFLite model)

- Load the image into a buffer in Android

- Execute the graph with the interpreter (inference)

- Display the inference results in the app

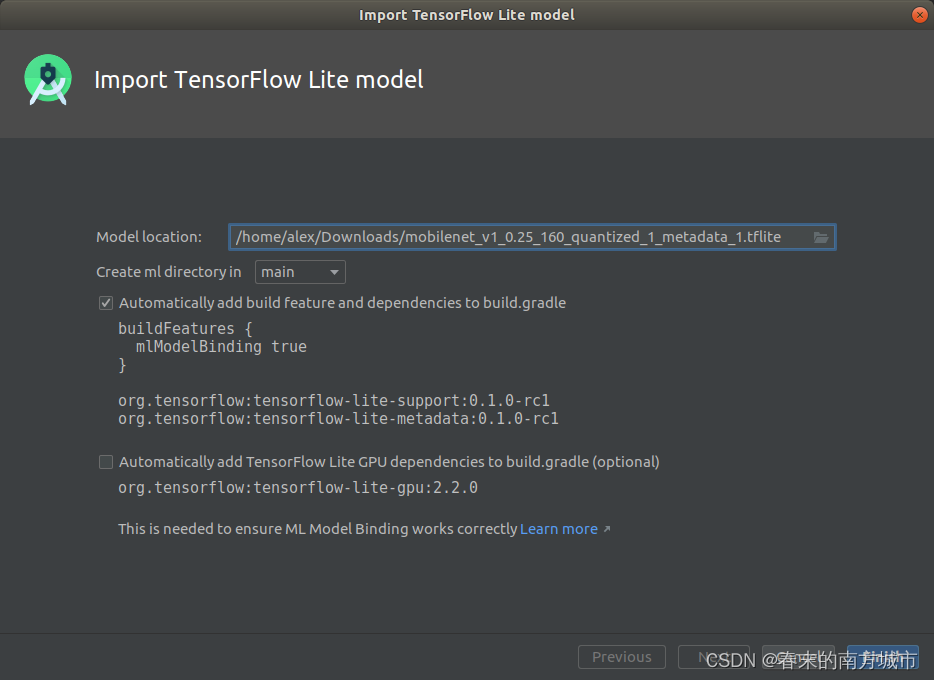
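The steps above follow the same pattern in the Python binding. As a rough sketch, they are shown here with the Python `tf.lite.Interpreter` rather than the Android API, so this illustrates the flow only. `run_inference` and its arguments are hypothetical names, and a single-input, single-output model is assumed.

```python
def run_inference(model_path: str, image):
    """Run one inference pass of a .tflite model on an already preprocessed image."""
    import numpy as np
    import tensorflow as tf  # imported on use; TensorFlow 2.x assumed

    # Steps 1 and 2: define the interpreter and initialize it with the model.
    interpreter = tf.lite.Interpreter(model_path=model_path)
    interpreter.allocate_tensors()
    input_detail = interpreter.get_input_details()[0]
    output_detail = interpreter.get_output_details()[0]

    # Step 3: load the image into a buffer with the dtype and shape the model expects.
    buffer = np.asarray(image, dtype=input_detail["dtype"])
    buffer = buffer.reshape(tuple(input_detail["shape"]))
    interpreter.set_tensor(input_detail["index"], buffer)

    # Step 4: execute the graph.
    interpreter.invoke()

    # Step 5: hand the result back to the caller for display.
    return interpreter.get_tensor(output_detail["index"])
```

On Android, the corresponding class is `org.tensorflow.lite.Interpreter`.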

( 2 ) Steps to import a TFLite model in Android Studio

- Create a new Android project or open an existing one.

- Open the TFLite model import dialog via the menu item File > New > Other > TensorFlow Lite Model.

- Select the model file with the extension .tflite. Model files can be downloaded from the Internet or trained yourself.

- The imported .tflite model file is located under the ml/ folder of the project.

The model mainly includes the following three kinds of information:

- Model: includes the model name, description, version, author, etc.

- Tensors: the input and output tensors. For example, images need to be pre-processed to a suitable size before inference can be performed.