Fixing a missing ResourceManager after starting a Hadoop cluster

Problem

I installed Hadoop 3.3.4 and ran it on Java 17. When I started Hadoop, the following problems occurred:

1. After startup completed, running the jps command did not list ResourceManager.

2. The ResourceManager log showed a java.lang.reflect.InaccessibleObjectException:

2023-03-18 11:14:47,513 ERROR org.apache.hadoop.yarn.server.resourcemanager.ResourceManager: Error starting ResourceManager

java.lang.ExceptionInInitializerError

at com.google.inject.internal.cglib.reflect.$FastClassEmitter.<init>(FastClassEmitter.java:67)

at com.google.inject.internal.cglib.reflect.$FastClass$Generator.generateClass(FastClass.java:72)

at com.google.inject.internal.cglib.core.$DefaultGeneratorStrategy.generate(DefaultGeneratorStrategy.java:25)

at com.google.inject.internal.cglib.core.$AbstractClassGenerator.create(AbstractClassGenerator.java:216)

at com.google.inject.internal.cglib.reflect.$FastClass$Generator.create(FastClass.java:64)

at com.google.inject.internal.BytecodeGen.newFastClass(BytecodeGen.java:204)

at com.google.inject.internal.ProviderMethod$FastClassProviderMethod.<init>(ProviderMethod.java:256)

at com.google.inject.internal.ProviderMethod.create(ProviderMethod.java:71)

at com.google.inject.internal.ProviderMethodsModule.createProviderMethod(ProviderMethodsModule.java:275)

at com.google.inject.internal.ProviderMethodsModule.getProviderMethods(ProviderMethodsModule.java:144)

at com.google.inject.internal.ProviderMethodsModule.configure(ProviderMethodsModule.java:123)

at com.google.inject.spi.Elements$RecordingBinder.install(Elements.java:340)

at com.google.inject.spi.Elements$RecordingBinder.install(Elements.java:349)

at com.google.inject.AbstractModule.install(AbstractModule.java:122)

at com.google.inject.servlet.ServletModule.configure(ServletModule.java:52)

at com.google.inject.AbstractModule.configure(AbstractModule.java:62)

at com.google.inject.spi.Elements$RecordingBinder.install(Elements.java:340)

at com.google.inject.spi.Elements.getElements(Elements.java:110)

at com.google.inject.internal.InjectorShell$Builder.build(InjectorShell.java:138)

at com.google.inject.internal.InternalInjectorCreator.build(InternalInjectorCreator.java:104)

at com.google.inject.Guice.createInjector(Guice.java:96)

at com.google.inject.Guice.createInjector(Guice.java:73)

at com.google.inject.Guice.createInjector(Guice.java:62)

at org.apache.hadoop.yarn.webapp.WebApps$Builder.build(WebApps.java:417)

at org.apache.hadoop.yarn.webapp.WebApps$Builder.start(WebApps.java:465)

at org.apache.hadoop.yarn.server.resourcemanager.ResourceManager.startWepApp(ResourceManager.java:1389)

at org.apache.hadoop.yarn.server.resourcemanager.ResourceManager.serviceStart(ResourceManager.java:1498)

at org.apache.hadoop.service.AbstractService.start(AbstractService.java:194)

at org.apache.hadoop.yarn.server.resourcemanager.ResourceManager.main(ResourceManager.java:1699)

Caused by: java.lang.reflect.InaccessibleObjectException: Unable to make protected final java.lang.Class java.lang.ClassLoader.defineClass(java.lang.String,byte[],int,int,java.security.ProtectionDomain) throws java.lang.ClassFormatError accessible: module java.base does not "opens java.lang" to unnamed module @5e922278

at java.base/java.lang.reflect.AccessibleObject.checkCanSetAccessible(AccessibleObject.java:354)

at java.base/java.lang.reflect.AccessibleObject.checkCanSetAccessible(AccessibleObject.java:297)

at java.base/java.lang.reflect.Method.checkCanSetAccessible(Method.java:199)

at java.base/java.lang.reflect.Method.setAccessible(Method.java:193)

at com.google.inject.internal.cglib.core.$ReflectUtils$2.run(ReflectUtils.java:56)

at java.base/java.security.AccessController.doPrivileged(AccessController.java:318)

at com.google.inject.internal.cglib.core.$ReflectUtils.<clinit>(ReflectUtils.java:46)

... 29 more
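In a trace this long the useful part is the "Caused by:" line at the bottom. A minimal sketch for pulling those lines out of the log is shown here. The function name find_root_cause is hypothetical, and the log file pattern in the example comment is an assumption about a default install, so adjust it to your own layout.

```shell
# Print the root-cause lines ("Caused by: ...") from one or more log files,
# collapsed so that each distinct cause is shown once with a count.
# Usage: find_root_cause LOGFILE...
find_root_cause() {
    grep -h '^Caused by:' "$@" | sort | uniq -c
}

# Example (assumed log location and file name pattern):
# find_root_cause /opt/hadoop-3.3.4/logs/hadoop-*-resourcemanager-*.log
```

If the output mentions module java.base not opening java.lang, the steps in the Solution section apply.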

Solution

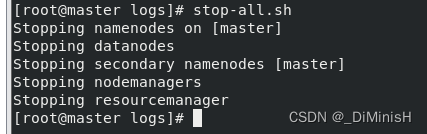

1. Stop Hadoop

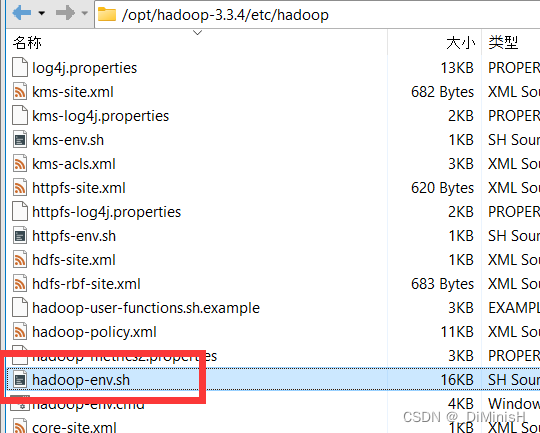

2. Modify hadoop-env.sh on each machine

I found the file under /opt/hadoop-3.3.4/etc/hadoop and added the following line:

export HADOOP_OPTS="--add-opens java.base/java.lang=ALL-UNNAMED"

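This adds a Java runtime option that opens the java.lang package to reflection from unnamed modules, which is exactly the access the exception reports as missing. As noted above, this approach is not recommended.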
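Editing the file by hand is fine, but if you repeat this on several machines a small helper avoids adding the line twice. The sketch below is a variation on the line above, not what I originally typed: it appends to any existing HADOOP_OPTS value instead of overwriting it. The function name add_opens_option is hypothetical.

```shell
# Append the --add-opens option to a hadoop-env.sh file unless it is already there.
# Usage: add_opens_option /path/to/hadoop-env.sh
add_opens_option() {
    env_file=$1
    opt='--add-opens java.base/java.lang=ALL-UNNAMED'
    # -F treats the pattern as a fixed string; -e is needed because it starts with a dash.
    if grep -qF -e "$opt" "$env_file"; then
        echo "already present in $env_file"
    else
        # Keep whatever HADOOP_OPTS already holds and add the new option after it.
        printf 'export HADOOP_OPTS="${HADOOP_OPTS} %s"\n' "$opt" >> "$env_file"
        echo "added to $env_file"
    fi
}

# Example: add_opens_option /opt/hadoop-3.3.4/etc/hadoop/hadoop-env.sh
```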

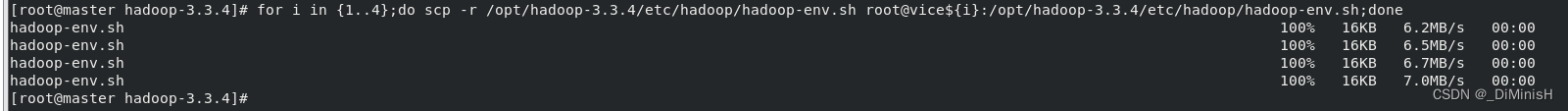

Then I distributed the file to the other machines:

for i in {1..4}; do scp -r /opt/hadoop-3.3.4/etc/hadoop/hadoop-env.sh root@vice${i}:/opt/hadoop-3.3.4/etc/hadoop/hadoop-env.sh; done
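Before copying to real machines it can help to print the commands first. The following is a rough sketch of the same copy loop with a dry-run switch. The function name push_env_file and the DRY_RUN variable are hypothetical; the host names and paths are the ones used in this post.

```shell
# Copy hadoop-env.sh to each listed host; with DRY_RUN=1 only print the commands.
# Usage: push_env_file HOST...
push_env_file() {
    conf=/opt/hadoop-3.3.4/etc/hadoop/hadoop-env.sh
    for host in "$@"; do
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "scp $conf root@$host:$conf"
        else
            scp "$conf" "root@$host:$conf"
        fi
    done
}

# Example: DRY_RUN=1 push_env_file vice1 vice2 vice3 vice4
```

Once the printed commands look right, run it again without DRY_RUN=1 to do the actual copy.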

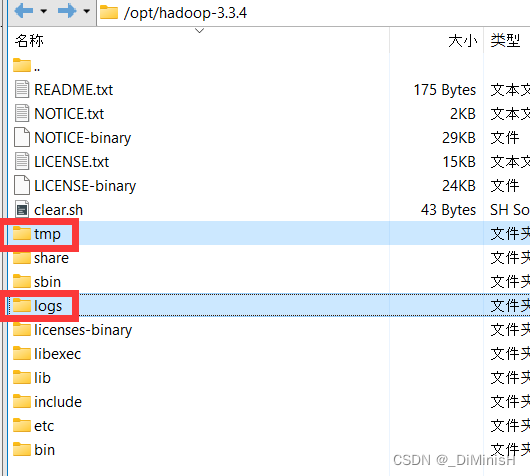

3. Delete the contents of the tmp and logs folders on all machines
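A small sketch of how the folders can be emptied without removing the folders themselves. The function name clear_dir is hypothetical, and the two paths in the example comment are assumptions: the tmp location depends on how hadoop.tmp.dir is configured on your cluster.

```shell
# Remove everything inside a directory while keeping the directory itself.
# Usage: clear_dir DIR
clear_dir() {
    dir=${1:?directory required}
    [ -d "$dir" ] || { echo "skip: $dir is not a directory" >&2; return 0; }
    # -mindepth 1 leaves $dir in place; hidden files are removed too.
    find "$dir" -mindepth 1 -delete
}

# Example (assumed locations):
# for d in /opt/hadoop-3.3.4/tmp /opt/hadoop-3.3.4/logs; do clear_dir "$d"; done
```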

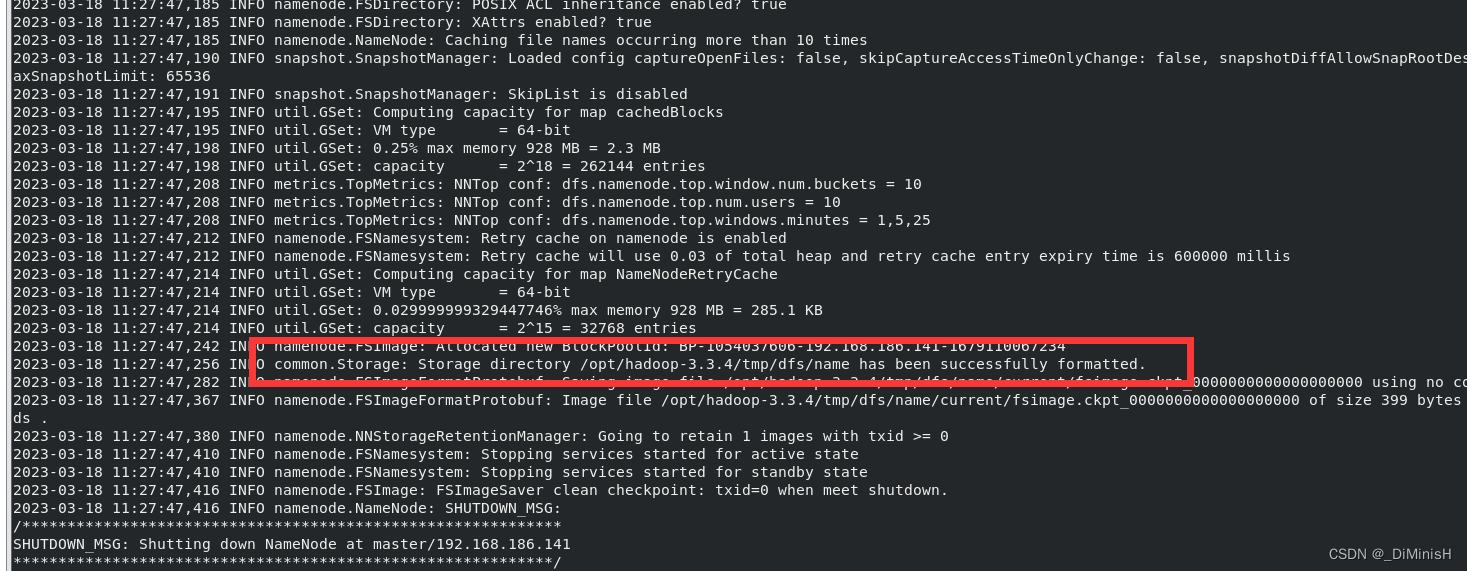

4. Reformat the NameNode on the master (this wipes the existing HDFS metadata)

hdfs namenode -format

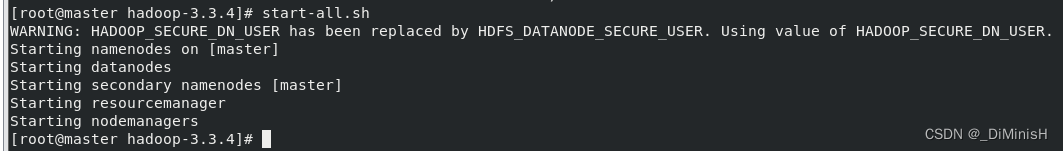

5. Start Hadoop

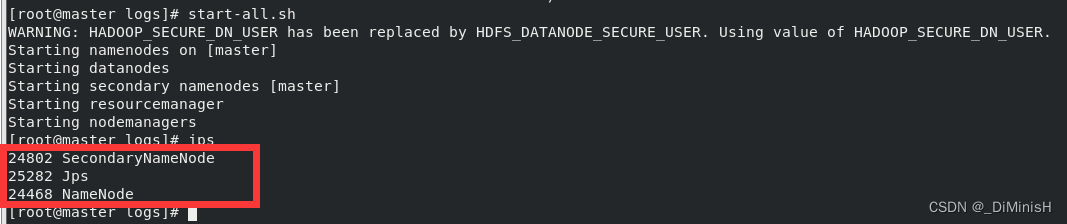

start-all.sh

6. Check if the problem is solved

(1) Run the jps command on the master server

ResourceManager now appears in the output.

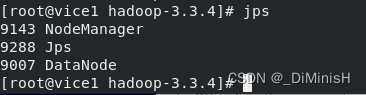

(2) Run the jps command on each slave node

Everything is running normally.

(3) Visit the YARN ResourceManager web UI at http://192.168.186.141:8088/

The page loads normally.

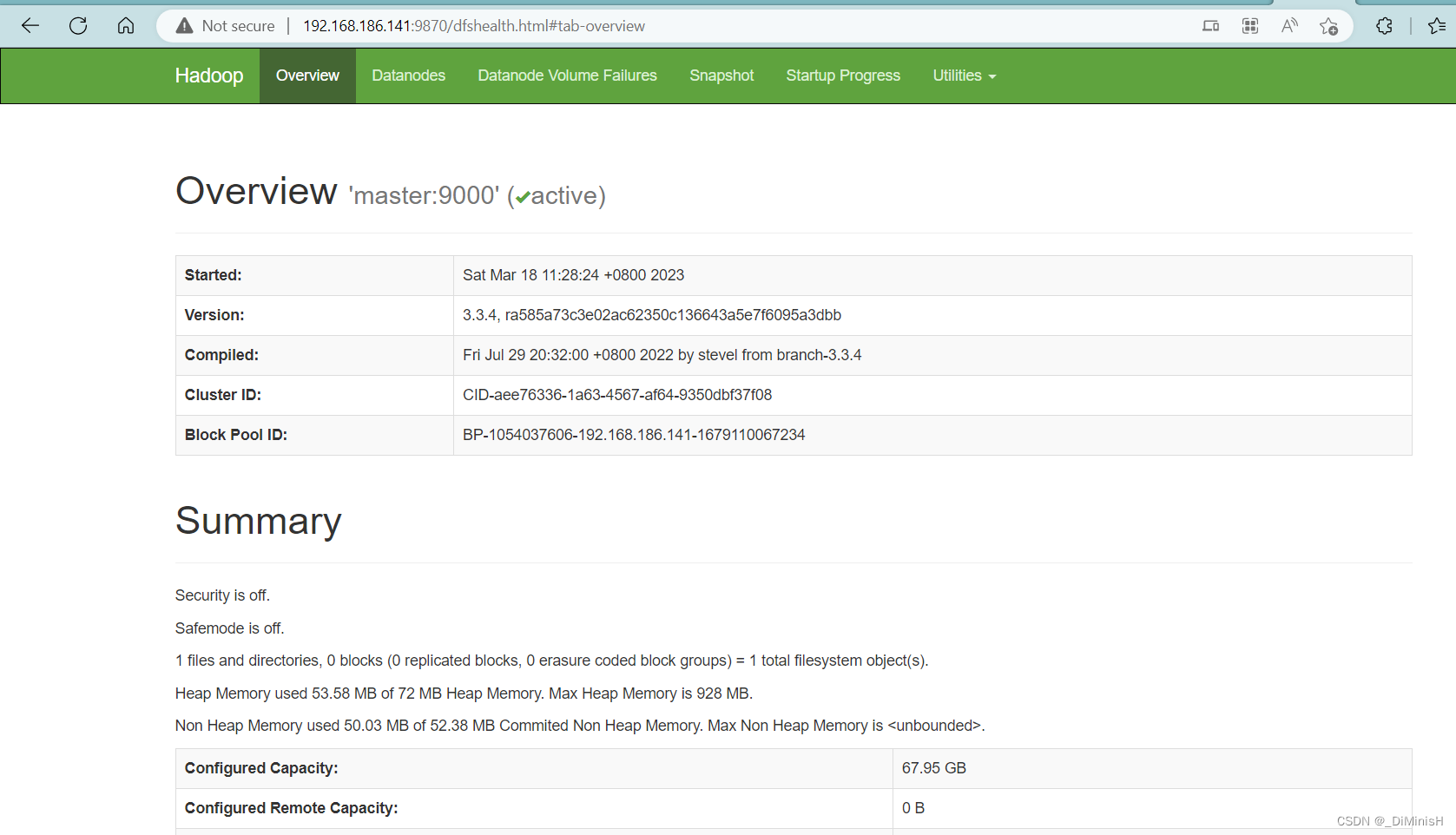

(4) Visit the NameNode web UI at http://192.168.186.141:9870/

Everything works; the problem is solved.
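The jps checks above can also be scripted. Here is a minimal sketch that reads jps output and reports which expected daemons are not listed. The function name missing_daemons is hypothetical, and which daemons you expect on which machine depends on your own cluster layout.

```shell
# Read `jps` output on stdin and report which of the named daemons are missing.
# Returns 0 when all are present, 1 otherwise.
# Usage: jps | missing_daemons NameNode ResourceManager
missing_daemons() {
    # jps prints "<pid> <name>" per line; keep only the names.
    running=$(awk '{print $2}')
    status=0
    for name in "$@"; do
        # -x matches whole lines, so NameNode does not match SecondaryNameNode.
        if ! printf '%s\n' "$running" | grep -qx "$name"; then
            echo "missing: $name"
            status=1
        fi
    done
    return $status
}
```

On the master I would run it as `jps | missing_daemons NameNode ResourceManager`; an empty result means both are up.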