When it comes to cloud computing, we usually see such a description, "realized by virtualization technology". It is not difficult to see that in the concept of cloud computing, virtualization is a very basic but very important part, and it is also the realization of cloud computing. The key to many issues such as computing isolation, scalability, and security.

The basis of cloud computing is virtualization, but virtualization is only a part of cloud computing. Cloud computing is an application after virtualizing several resource pools. Many people think that virtualization is just a small boost behind cloud computing, but it is not. Regarding virtualization itself, it has long been widely used in the IT field, and there are completely different virtual technologies for different resources.

Currently, there are four types of virtualization: server virtualization, storage virtualization, memory virtualization, and network virtualization.

There are many applications of server virtualization in the industry. Using server virtualization, we can centrally manage the server's CPU, memory, disk and other hardware, and improve resource utilization efficiency through centralized dynamic on-demand allocation.

Storage virtualization separates the logical view of storage resources from physical storage, thereby providing seamless resource management for the system. However, due to the low degree of storage standardization, if the storage virtualization technology used comes from different vendors, compatibility must be considered.

Memory virtualization refers to the use of virtualization technology to realize the management of memory by the computer memory system. The memory virtualization system enables upper-layer applications to have continuously available memory, and divides multiple fragments on the physical layer to satisfy memory allocation and necessary data exchange.

Network virtualization, the use of software to separate network power from physical network elements, has something in common with other forms of virtualization. However, if network devices and servers are different, they usually face technical challenges, such as the need to perform high I/O tasks and require proprietary hardware modules for data processing.

At present, cloud computing uses server virtualization technology more, but virtualization technology itself does not only serve cloud computing.

1. Virtualization of information resources and cloud resources

1. Information resources

1. Introduction to Information Resources

Information resources can be defined as a general term for all kinds of text, numbers, audio-visual, charts and languages that can be used and generate benefits, as well as all kinds of information related to social production and life. It includes structured, semi-structured and non-institutionalized data, such as databases, document files, images, audio and video, and html, which are all documents, materials, charts, and data involved in people's production and management processes. the general term for. Management guru John Naisbitt argues that out-of-control and disorganized information is no longer a resource, but rather the enemy of the information worker.

2. Characteristics of information resources

In the network environment, information resources are quite different from traditional local information in terms of capacity, carrier, structure, distribution, mode of transmission and scope of transmission, and its new features include the following aspects.

1) Large information capacity and rapid changes

There are countless information distributed throughout the Internet, and manual work alone cannot obtain the required information in a comprehensive and timely manner. Facing the vast ocean of information, it is often difficult to get started. Or want to see the information resource you last viewed, but don't know where it is. The network has a relatively fast update speed, and managers can modify or delete existing access entries at will, making information acquisition more difficult to control.

2) Information is widely distributed and structured

In the Internet environment, information is stored in different servers and uses different data description methods; at the same time, the operating systems, databases, and character sets of each server are different, which makes network information resources complex and diverse. in a disordered state.

3) Highly integrated information lacks unified standards

The information of each information website is collected and sorted out by information administrators, which has the characteristics of cross-industry and cross-time and space, and covers a wide range. And each information management website has its own information representation and organization method, for example, a picture can be directly read by File Transport Protocol (FTP) and stored on the hard disk or stored in a database with a certain character code.

4) Various ways of storage and transmission

Most of the information storage carriers are disks and tapes, and network storage can also be used. Through the Internet, people can obtain information in various ways, such as e-mail, network download, mobile terminal, online chat and video, etc. Users can roam the world without leaving home.

5) Strong real-time information exchange

Binary information spreads at high speed on the Internet. By browsing the Internet, people can obtain real-time news and the latest changes around them. E-mail reduces the time consumption of people's communication.

3. Organization of information resources

The organization of information resources can be broadly described as the following.

1) File method

A simple way to organize and manage local and network information through the file system, which uses the idea of topic organization. Each file is given a name, which is used to identify different information and share information through the network.

FTP organizes network information in this way. File servers based on FTP and HTTP store some unstructured information, such as pictures, videos, audio and programs, etc. Realize operations such as information browsing and downloading. With the widespread demand for network information and the increase in the amount of information, the use of documents as a carrier to disseminate information has become inadequate. When the information structure is complex and more logic functions are required, the efficiency of the file management method is too low, so this method can be used as the underlying file service of the information management system to provide assistance for other functions.

2) Database mode

A database is a warehouse that organizes, stores and manages data according to the data structure, and can perform massive data storage and management. It maintains data integrity and enables data sharing. Users can flexibly set keywords and combine query conditions, and finally return matching network information. Effective query reduces network load.

A large number of information systems have been established based on database technology, and a complete information management model has been formed, which greatly improves user query efficiency and network service capabilities. The disadvantage of the database method is that it is difficult to deal with unstructured information, and the correlation between information is not intuitive. Moreover, the processing of complex information units is inefficient, and the man-machine interchangeability is poor.

3) Home page method

The information organization in the form of the home page refers to organically organizing the detailed information of relevant departments and individuals and displaying them on a specific interface. It is a more comprehensive introduction to the organization and people. Organizations and individuals are free to create homepages on the Internet, and the specific content is up to them.

4. The significance of virtualization to information resource cloud

Information resource cloud is an information resource management platform and service model built with the concept of cloud computing. It does not need to change the distribution of existing Internet resources, but uses related technologies of virtualization and information resource integration to virtualize and integrate information resources. . And carry out the organization and construction of the knowledge level, and guarantee the quality of service to provide users with safe, reliable and on-demand knowledge services.

It can be said that the more complex the user's storage environment is, the more obvious the benefits of virtualization will be. Specifically, the significance of virtualization to information resource cloud is as follows.

- Virtualization can simplify the representation, access and management of resources, and provide standard interfaces for these resources so that users can access them transparently and on demand.

- Virtualization optimizes user-facing applications, reduces storage-related management burdens, and provides a better model for data center migration, backup, disaster recovery, and load balancing.

- Virtualization can implement different applications by virtualizing several machines to form isolation. Isolation can resolve various conflicts and improve resource processing efficiency.

- Virtualization reduces the degree of coupling between users and resources, so that users do not depend on the specific implementation of resources and can also enhance the dynamic scalability of resources.

- Virtualization can uniformly provide good services on the cloud after integrating many low-cost facilities, which greatly saves the provider's development costs and users' usage costs.

- Virtualization helps to establish an elastically scalable application architecture that users can use on demand to meet different business needs at any time. The host service requested by the user can be quickly provisioned and deployed (real-time online provisioning), and the on-demand expansion or reduction of the cloud server configuration can be quickly realized within a few minutes.

5. Barriers to Information Resource Search

There is a lot of information on the Internet, and not all information obtained is usable. The diversity of network information structures and representation methods, as well as the proficiency of network users in searching the network, all cause many obstacles to the query of network information resources. The main factors are analyzed as follows.

1) Various requirements for display terminal software and hardware configuration

The characteristics of information resources directly determine the diversity of access interfaces of local and network information resources. Local files can be browsed and viewed using a file manager or user terminal; database resources can be viewed through a database client or a third-party database display view. Browsing and operation; file information or webpages in the network can be browsed using a browser, including the use of FTP and HTTP protocols, which lead to poor user experience and cumbersome management.

2) Information organization is not standardized and flooded

Most of the information is displayed in the form of web pages, and each website that publishes information has its own set of rules. Website search engine optimization (Search Engine Optimization, SEO) is not all reasonably implemented, which makes keyword retrieval difficult. The information is chaotic and lacks the necessary constraints, and the information is reproduced in external links, which will cause a large number of repetitions in the search results. Although there are a lot of choices for users, it is difficult to distinguish the essence from the dross.

3) Serious information pollution

The Internet is a kingdom of freedom, filled with all kinds of information, making us deep in the ocean of information. Moreover, it contains a large amount of out-of-control, false, outdated, and even illegal information, which reduces the quality of information resources, so the research, analysis, cleaning and review of information are becoming more and more important.

2. Resource virtualization

1. Three types of cloud computing resources

Cloud computing can divide resources into three types, namely physical resources, virtualized resources and service resources.

1) Physical Resource

Refers to the infrastructure of the cloud computing platform, including servers, storage, networks, and routers. Physical resources are managed in an intuitive and relatively fixed structure. However, due to the relatively fixed scale and structure, it is difficult to support changing service requirements.

2) Virtual Resource (Virtual Resource)

Refers to the logical mapping of physical resources generated by virtualization technology on the infrastructure, which is characterized by flexibility and changeability. Based on the support of virtualization technology, administrators can adjust the scale and quantity of resources, and expand the total amount of virtualized resources of the entire platform by increasing physical resources.

3) Service Resource

Refers to an application service unit with specific functions. By calling the service interface, the user can obtain the specific functions supported by the service without caring about the internal implementation of the service. Service instances can be called by other services, and multiple identical service instances can be combined into a load-balanced resource pool, so service instances can be regarded as a form of resource provision.

In fact, resources are realized by a service that can provide certain functions, and it can realize standardized input and output. The resource here can be hardware, such as disk, CPU, memory, server, network and special equipment, etc. It can also be software, such as mail services and web services.

2. Resource virtualization

Resource virtualization refers to the creation of an abstraction layer that abstracts resources provided by physical hardware (such as power, disk space, CPU computing cycles, memory, and networks) and provides them to logical applications (such as Web Service, email, video, and voice) etc.) in order to use these resources.

The abstraction of resources often has multiple levels. For example, in the resource model proposed by the industry at present, several levels of resource abstraction such as virtual machines, clusters, virtual data centers, and clouds have emerged. Resource abstraction defines the object and granularity of operations for the upper-layer resource management logic, and is the basis for building the infrastructure layer. The advantage of this resource abstraction is that it can provide better redundancy, flexibility, and service isolation, thereby providing users with more reliable and powerful services. How to abstract physical resources of different brands and models, manage them as a global unified resource pool and present them to customers is a core problem that must be solved at the infrastructure layer.

From another perspective, resource virtualization refers to the encapsulation of the internal attributes, structures and function realization mechanisms of real resources, and the performance of their functions in a specific form in the virtual space. Virtualized resources in the virtual space provide users or applications with a common calling interface. This mechanism makes the use of resources not tightly bound to physical resources, but can be dynamically bound in real time according to information such as resource status and capabilities. physical resources.

The difficulty of resource virtualization technology often lies in balancing resource utilization and service reliability and efficiency. Massive service requests require a large number and variety of resources. Using limited resources to serve more user requests requires improving resource utilization as much as possible. efficiency. For example, service QoS and service level agreement (Service Level Agreement, SLA) and other requirements. And must provide efficient, stable and reliable services, while reducing service response delays, service acquisition costs, etc.

In addition, cross-virtualization among various resources and cross-platform portability of the same resource virtualization technology are also problems that resource virtualization needs to face.

3. Mapping between virtualized resources and physical resources

Divide virtualization into virtualizing up (Virtualizing Up) and virtualizing down (Virtualizing Down), the former refers to providing a virtualized resource with higher performance than a single physical resource based on multiple physical resources; the latter refers to dismantling a physical resource Provide multiple virtualized resources with lower performance than this physical resource at the same time.

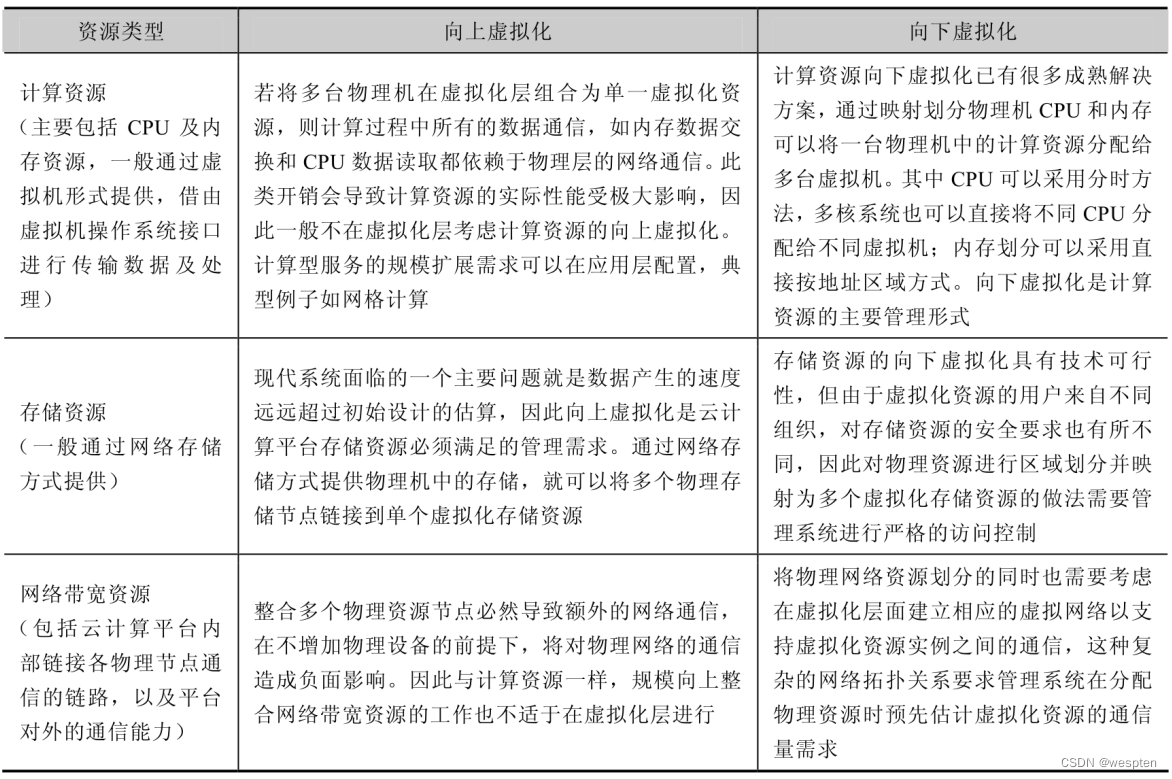

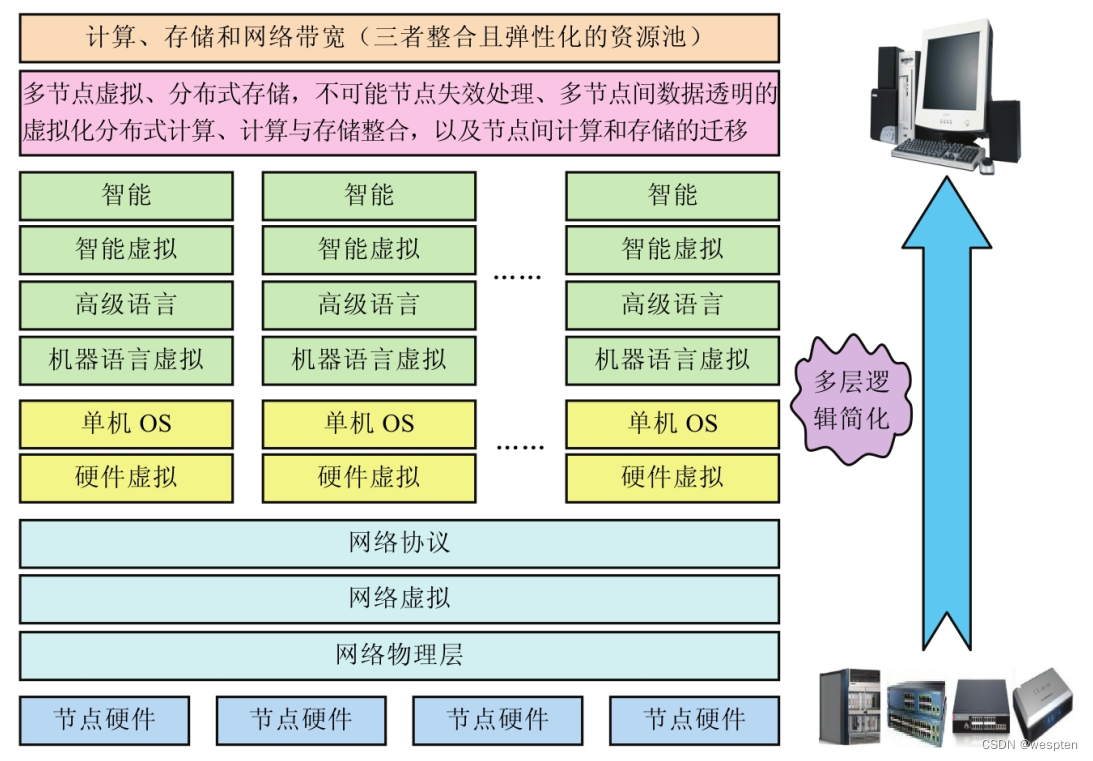

According to resource usage, platform resources can be divided into three types: computing resources, storage resources and network bandwidth resources.

The table below shows a comparison of the virtualization possibilities of the three resources in different forms:

It should be noted that computing resources provide data processing functions, such as CPU and memory resources. It does not include data storage functions, and computing resources are stateless. Storage resources are used to save data and do not include complex logic calculation processing functions. Database applications need to use storage resources to record data and computing resources to analyze and execute program statements.

3. Features of virtual resources

Virtual resources in a network virtualization environment have the following characteristics.

1. Heterogeneity

Virtual resources in a network virtualization environment are of various types and have different functions, and the access configuration methods, local management system operations, and sharing rules are very different; node resources include computers, routers, switches, base stations, and mobile handheld devices.

Link resources include optical fibers, optical wavelengths, microwaves, twisted pairs, and time slots; different forms of network resources lead to differences in virtual resources, and the heterogeneity of virtual resources will inevitably lead to virtual resource management and control. Its management needs to shield the heterogeneity of these physical resources and coordinate the sharing and use of physical resources.

2. Distributed

Virtual resources are distributed geographically and belong to multiple infrastructure providers. In this distributed environment, virtual resource management is not simply to combine distributed heterogeneous virtual resources, but more importantly, to solve the problem of resource allocation and scheduling for virtual network requests, and to achieve multiple virtual networks. Coordination and sharing of resources.

In the distributed heterogeneous virtual resource environment, resource management also needs to implement operations such as resource maintenance and resource configuration, so as to provide cloud users with cloud services with QoS guarantee.

3. Autonomy

The heterogeneous and distributed characteristics of virtual resources also bring autonomy in resource management. Virtual resources first belong to an infrastructure provider, and the infrastructure provider, as the owner of virtual resources, has the highest level of management authority over resources. . That is, it has autonomous management capabilities, so it has autonomy.

On the one hand, in order to make the resources in the system available to other virtual network users, the virtual resources must accept the unified management and configuration of virtual network users according to certain policies and agreements. Therefore, virtual resource management must realize resource sharing and scheduling among various infrastructure providers, and solve issues such as authority, security, and billing management in resource autonomous environments.

On the other hand, the portability of virtual resources provides greater flexibility for infrastructure providers to optimize resource usage. Infrastructure providers can transparently implement resource migration by means of virtual node migration, virtual link migration, virtual path splitting, and virtual path aggregation. For example, infrastructure providers migrate virtual nodes between different physical nodes in their own management area. Optimize the service performance of the virtual network and ensure the connectivity of the virtual network topology.

For reasons of security and ease of management, virtual resource migration should occur within the management domain of the infrastructure provider, without the need to notify other infrastructure providers or the global network of the event.

4. Scalability

The future network is a large global network, and the infrastructure will be provided by different providers. Therefore, the design of network virtualization resource management architecture must consider the problem that the underlying layer is composed of multiple competing infrastructure providers.

Scalability is an important requirement in a network virtualization environment. On the one hand, due to the needs of facility construction, existing infrastructure providers will add new network equipment resources to expand the network scale. On the other hand, due to the needs of business expansion, the virtual network may span new infrastructure providers, and the new infrastructure providers need to be included in the management framework. The expansion of resources and business expansion lead to the expansion of the set of candidate resources, so the resource management system needs to be scalable. Update resource information in time to complete the expansion and maintenance of the virtual network.

5. Dynamic

In a network virtualization environment, virtual resources can dynamically join or leave the system. Especially in a wireless network environment, the location, service provision capability, and load of resources change dynamically over time, and physical failures of equipment may also occur. A condition that renders a resource inaccessible. In the case of dynamic changes in resources, how to maintain the continuous use of resources by the virtual network and the continuous connection relationship of the virtual network topology to ensure that virtual network services do not interrupt are problems that need to be solved in virtual resource management.

To sum up, the characteristics of virtual resources determine the functions and characteristics that the virtual resource management mechanism should have. It must be scalable and hide the heterogeneity of physical network resources, provide a unified access interface for virtual network users to shield the dynamic nature of physical network resources, and respect the local management mechanism and strategy of physical network resources, so that virtual resources Better serve virtual network users.

4. Resource performance indicators

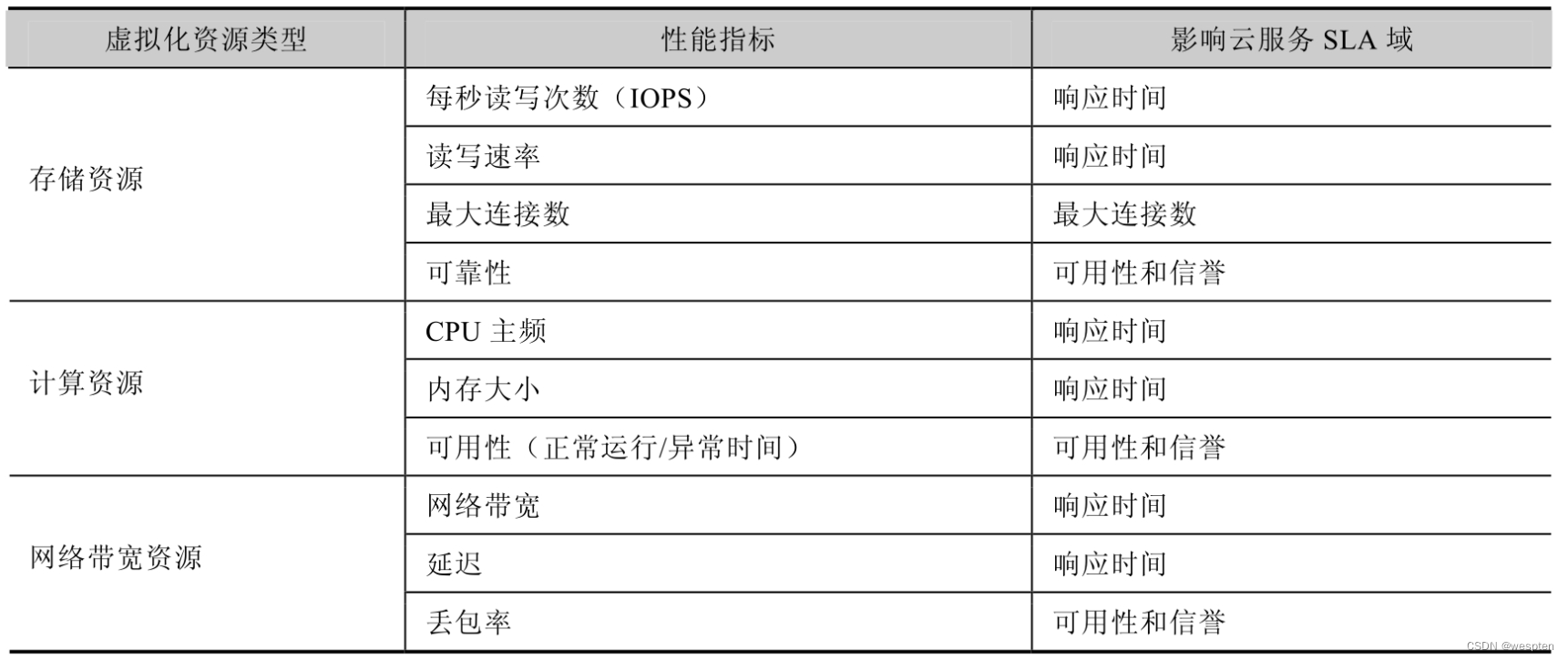

1. Performance indicators of virtualized resources

Virtualized resources are different from cloud services, and their operating processes are quite different depending on the type of resources, so it is impossible to establish a unified indicator system. From the perspective of management system performance indicators, corresponding indicators should be selected according to the actual operation of virtualized resources, such as storage resources, including the number of reads and writes per second, read and write rates, and reliability (backup and recovery mechanisms, etc.).

1) Virtualize computing resources

Computing resources are mainly provided in the form of virtual machines, so the performance indicators of computing resources are similar to those of physical machines. In order to simplify management in the management layer, virtual machines are generally assigned with preset standard configurations.

For example, in the implementation of EC2, virtual machines are divided into different levels such as tiny, small, middle, and large, and their main performance indicators include CPU frequency, number of CPUs, memory size, CPU occupancy, memory occupancy, and data transmission. rate etc.

2) Virtualized storage resources

The main concern of the management system is adjustable performance indicators. The main controllable items of storage resources include increasing the total amount of disk space by increasing storage resources, increasing I/O performance by increasing parallel resources, and improving data reliability through redundant backup between resources. Therefore, the performance indicators of virtualized storage resources include total disk space, used disk space, number of reads and writes per second (Input/Output Per Second, IOPS), I/O rate, maximum number of connections, and data redundancy rate ( recoverable backups), etc.

3) Virtualize network bandwidth resources

The network bandwidth resource of the virtualization layer is a logical concept, which mainly represents the network communication requirements and network topology between virtualized resources. Its performance indicators include the total network bandwidth, network bandwidth occupancy rate, packet delay, and the maximum number of users. When calculating the performance requirements of network bandwidth resources, it needs to be associated with related virtualization resource performance indicators, such as storage resource disk I/O and computing resource data transfer rate. The performance of network bandwidth resources should be at least not lower than these indicators, otherwise it will become a performance bottleneck.

The following table shows some important performance indicators:

It can be seen that the virtualization resource performance indicators do not completely cover the cloud service SLA domain, because some SLA indicators do not completely determine the resource performance. For example, the availability index of computing services is mainly related to the number of computing resources and whether fault tolerance measures such as HA are taken.

On the other hand, the particularity of network bandwidth resources is that they represent the abstract concept of association between resources. It does not map directly to real-world resource entities, so some metrics that are commonly used for physical network resources do not apply to virtualized network bandwidth resources.

2. Physical resource performance indicators

The performance indicators of virtualized resources are logical summaries and split combinations of physical resource performance indicators. Logical summaries refer to physical resources including information such as node functions, hardware configurations, machine names, and motherboard interface attributes. The management layer does not need to pay attention to these contents at the virtualization resource layer, so it logically summarizes them to exclude unnecessary information; splitting and combining refers to the various topological relationships that virtualization resources occupy on physical resources.

The important performance indicators of physical resources concerned by the management system are as follows.

- Computing resources: CPU performance (Hz), number of CPUs, CPU usage, total memory, memory usage, running time, unavailable time, and network I/O rate, etc.

- Storage resources: total storage capacity, available space, read/write rate, total number of available connections, current number of connections, and network I/O rate, etc.

- Network resources: network bandwidth rate and cache size, etc.

It can be seen that, except some of the performance indicators of virtualized resources have complex logic, the rest are easy to map to physical layer performance indicators.

There is complex logic in the mapping of the following two types of performance indicators:

- Indicators such as reliability and availability cannot be directly calculated from physical resource performance indicators through splitting and combining. There are two factors that determine the reliability of virtualized resources. One is the reliability of physical resources themselves, which can be obtained through physical resource nodes. Environmental conditions (such as motherboard temperature, etc.), service failure records, and the ratio of abnormal operation time to normal operation time are calculated; the second is the operation stability of the virtualization layer. In the example of virtualized storage resources that provide support for more than two physical resources, the reliability of the storage resources is also related to the logical relationship between the physical resources. If physical resources back up each other, the reliability of virtualized resources will be improved.

- The performance indicators of virtualized network resources are not based solely on physical network resources, and this indicator determines the performance indicators of all associated physical network resources. When multiple virtualized network resources occupy the same physical network resource, resource contention will occur. In this case, the service capability of the physical resource needs to be considered separately.

5. The relationship between cloud services and virtualized resources

The virtualization layer provides the cloud computing platform application layer with virtualized resources that meet its operating performance requirements, but there is still a certain distance from providing cloud services with SLA guarantees. The conditions that cloud services should meet are to meet the cloud service life cycle, provide cloud service usage interfaces, have a service SLA guarantee mechanism, and make reasonable use of virtualized resources while providing services.

The general idea of supporting cloud services through virtualized resources is to install service applications in the system based on virtual machines, and then provide various services through virtual machine network interfaces. However, it is not an optimal solution to provide different types of cloud services through virtual machines. Program. For example, if virtual machines are used to provide storage services, the additional overhead of virtualization technology on physical resources will be too high. Moreover, the way storage services are provided is relatively stable, and the flexibility obtained by using virtual machines does not actually improve service quality.

The storage service in Amazon Web Service (AWS) uses S3 instead of EC2, which shows that the provision of different services in the cloud service architecture should fully consider its demand characteristics; on the other hand, it is necessary to well manage complex structures Composition of cloud services requires a well-designed hierarchical resource model. That is to maintain the relevance of the cloud service itself, and can fully simplify the management operation.

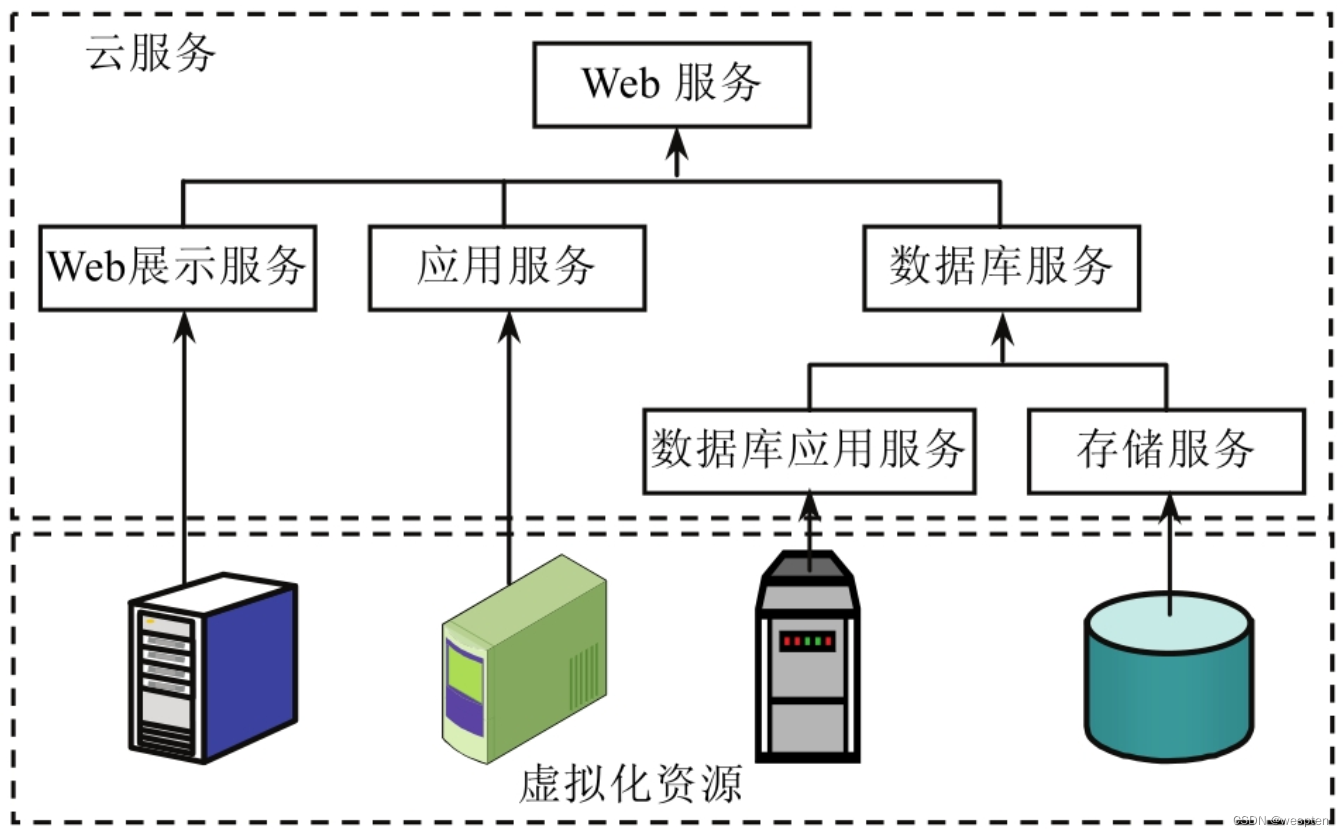

Refer to the existing cloud computing platform system to explain the relationship between cloud services and virtualized resources, as well as related management requirements.

1. Provide different types of cloud services

According to the use of cloud services, it can be divided into computing services, storage services and other auxiliary services. Various types of services beyond this basic classification can be regarded as derivatives or combinations of these three types of services.

For example, Web application services are generally composed of background database storage services, Web applications (computing services) and Web display (computing services), and database storage services are composed of database application services and storage services. If it is necessary to realize the monitoring and authentication of this Web service, the assistance of monitoring service and authentication service is needed.

According to the characteristics of virtualized resources, computing services are provided by computing resources plus network resources, while storage services are provided by storage resources plus network resources. Therefore, computing services are generally provided based on virtual machines at the virtualization layer, and specific computing service functions are provided by customizing virtual machine templates; storage services are generally provided based on network storage at the virtualization layer; platform auxiliary cloud services are specified by the cloud computing platform. The server directly provides the system service interface.

From the perspective of system implementation, if a virtual machine supports multiple computing services, its change reuse cost and development cost will increase. Therefore, a single virtual machine is limited to only support one computing service in the described platform.

2. Cloud service structure and virtualized resource structure

To ensure the cloud service SLA, the cloud computing platform management layer must first ensure the performance indicators of the relevant virtualized resources. Therefore, it is very important for the management layer to understand the relationship between cloud services and virtualized resources.

In the platform, a cloud service may be based on multiple basic services, which means that the cloud service is associated with multiple virtualized resources. In addition, cloud services may also involve storage services, etc. Taking a web service with a single function as an example, the cloud services and virtualized resources involved in it may be quite complex, as shown in the figure below.

In order to provide this web service, the platform needs to combine and configure a group of virtualized resources according to service requirements. The visible composition shown in the diagram above contains multiple hierarchies consisting of basic computing services plus basic storage services and other ancillary services.

This cloud service structure is universal. In most cloud services and virtualized resource structures, the provision of computing resources is the main load that causes platform energy consumption. Therefore, the management of computing resources is the key management requirement of the virtualization layer. In contrast, the management of storage services and other auxiliary services (such as accounting and authentication, etc.) is relatively simple.

1) Cloud service structure

The composition relationship of cloud services can refer to the simple object access protocol (Simple Object Access Protocol, SOAP) architecture, and reflect the different call processes between a group of cloud services by means of a similar composition service model expression. In the hierarchical relationship of cloud services, there are relationships such as sequential invocation, parallel invocation, and cyclic invocation of services.

2) Virtualization resource structure

The virtualization resource structure has the following two meanings.

- Virtualized resources form a resource network structure based on the virtual network topology at the virtualization layer, and the management system can partition resources according to the virtual network and control management operations such as access rights and load balancing.

- The association of virtualized resources based on the mutual invocation relationship between cloud service applications they support. Taking virtualized computing resources as an example, an application may use multiple virtual machines as its computing service support. According to the combined use of these computing services by this application, corresponding logical structures are generated between these virtual machines, and the network communication between virtualized resources in the same logical structure will be significantly higher than that of independent virtualized resources during service operation. node.

Unifying the resource structure of the two levels can maximize the communication performance of the platform, so the management layer obtains the structure description of the two levels at the same time, and implements effective adjustment management is a necessary condition for optimizing the application performance of the platform.

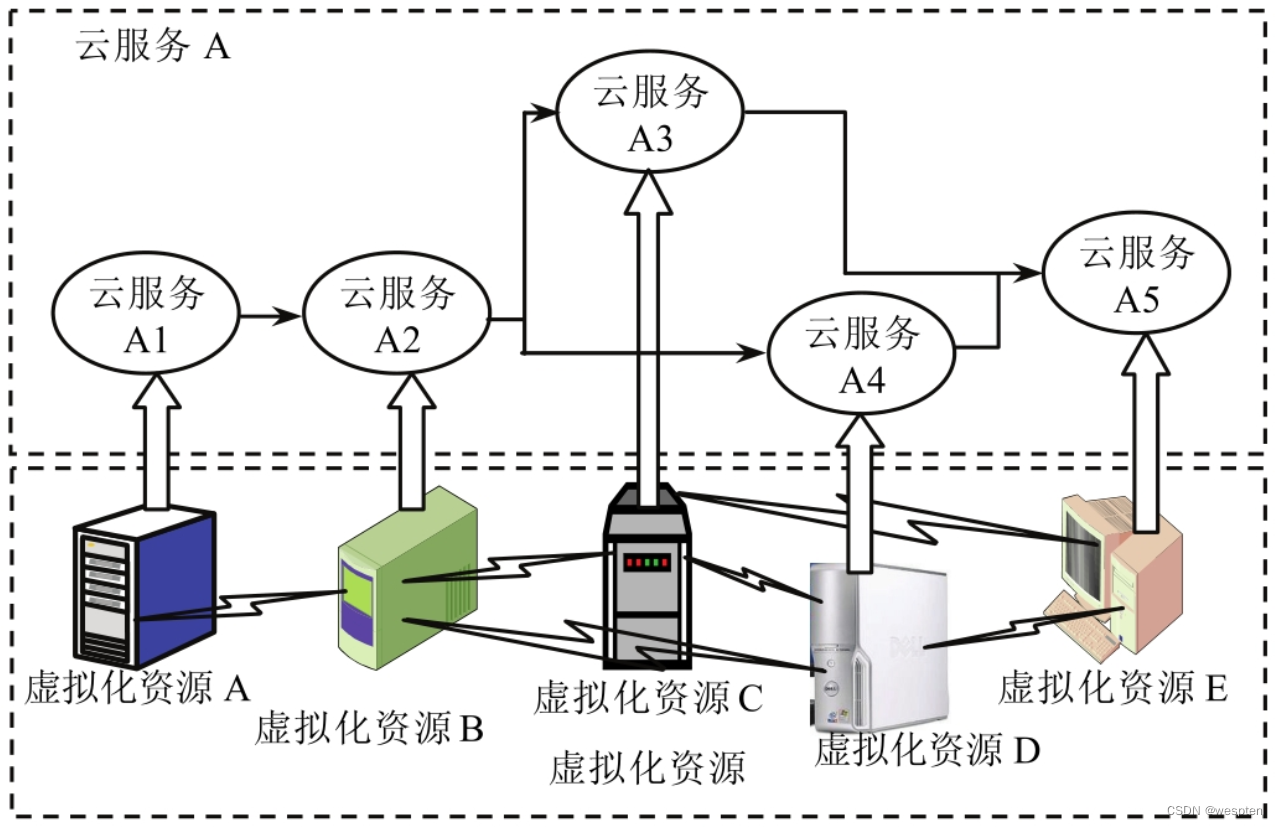

Imagine an application scenario, that is, the cloud computing platform receives a request to publish cloud service application A, and the SLA requirement description about A is sent together with the request message. The platform management layer first analyzes the basic services required by A and their minimum performance indicators according to the service structure and SLA description, and then searches the available atomic services in the platform service resource pool according to the analysis results and deploys the virtual services used by these services through management operations. resources, such as virtual machines and network storage. Publish virtualized resources on the virtualization layer, optimize resource distribution and structural relationship, and finally the platform returns the user interface of the released cloud service application A.

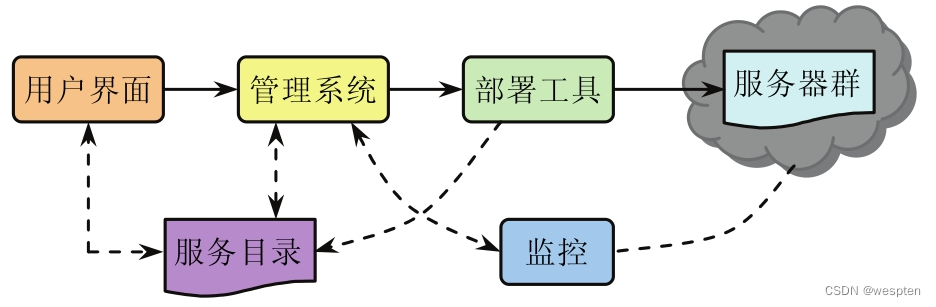

As shown in the figure below, structure A includes five basic services of cloud services A1 to A5.

When deploying resources, the platform needs to allocate virtualized resources according to the performance requirements of basic services. In this example, they are virtualized resources A~E, and each virtualized resource supports the operation of one cloud service. At the same time, the platform also configures the virtual network between virtualized resources according to the call relationship between basic cloud services, so as to reduce the transmission cost between virtualized resources that require data transmission.

2. Virtual resource platform

1. Functions of the virtual resource platform

Platform management of virtualized resources is the process of representing computer resources in a way that both users and applications can easily benefit from, rather than in a proprietary way based on the implementation, geographic location, or physical packaging of those resources. In other words, it provides a logical rather than a physical view of data, computing power, storage resources, and other resources.

Resources provide certain functions, which can receive input and provide output based on standard interfaces. Resources can also be hardware, such as servers, disks, networks, and instruments. Or software, such as web services.

Consumers access resources through standard interfaces supported by virtual resources. Using standard interfaces can minimize consumer losses when IT infrastructure changes. The infrastructure of cloud computing enables virtualized computing resources, storage resources, and network resources to be used and managed by users through the network in the form of infrastructure, that is, services.

Although the infrastructure of different cloud providers differs in the services they provide, as a service that provides underlying basic resources, this layer generally has the following basic functions.

1. Resource abstraction

When building infrastructure, the first thing to face is large-scale hardware resources, such as servers and storage devices connected to each other through the network. In order to implement high-level resource management logic, resources must be abstracted, that is, hardware resources must be virtualized.

On the one hand, the virtualization process needs to shield the differences of hardware products, and on the other hand, it needs to provide a unified management logic and interface for each hardware resource.

It is worth noting that depending on the logic implemented by the infrastructure, different virtualization methods of the same type of resources may have very different methods; in addition, according to the needs of business logic and infrastructure service interfaces, the abstraction of infrastructure resources often has multiple levels , resource abstraction is the basis for building infrastructure.

2. Resource monitoring

Resource monitoring is a key task to ensure the high efficiency of cloud computing infrastructure and a prerequisite for load management. Load management cannot be done without effectively monitoring resources. Infrastructure monitors different types of resources in different ways. For CPU, its usage rate is usually monitored; for memory and storage, in addition to monitoring the usage rate, read and write operations are also monitored as needed; for the network, its real-time input and output need to be monitored , and the routing status.

Infrastructure first needs to establish a resource monitoring model based on the abstract model of resources to describe the content and attributes of resource monitoring; at the same time, resource monitoring also has different granularity and abstraction levels. A typical scenario is a specific solution Resource monitoring is carried out in the overall scheme. A solution often consists of multiple virtual resources, and the overall monitoring result is the integration of the monitoring results of each part of the solution.

By analyzing the results, users can more intuitively monitor resource usage and its impact on performance, and take necessary actions to adjust solutions.

3. Load management

In a large-scale resource cluster environment such as cloud computing infrastructure, the load of all nodes is not uniform at any time. If the resource utilization of the nodes is reasonable, even if their load is uneven to some extent, it will not cause serious problems.

However, when the resource utilization rate of too many nodes is too low or the load difference between nodes is too large, it will cause a series of outstanding problems. On the one hand, if the load of too many nodes is low, it will cause waste of resources. The infrastructure needs to provide an automated load balancing mechanism to consolidate loads, improve resource utilization and shut down idle resources after load consolidation. On the other hand, if the difference in resource utilization is too large, the load on some nodes will be too high, and the performance of upper-layer services will be affected. And the load of other nodes is too low, resources are not fully utilized. At this time, the automatic load balancing mechanism of the infrastructure is required to transfer the load, that is, from the node with high load to the node with low load, so that all resources tend to balance in terms of overall load and overall utilization.

4. Data management

The integrity, reliability and manageability of data in the cloud computing environment are the basic requirements for infrastructure data management. Since the infrastructure consists of large-scale server clusters in data centers, or even server clusters in several different data centers, data integrity, reliability, and manageability are extremely challenging.

Integrity requires that the state of the data is determined at any time, and the data can be restored to a consistent state under normal and abnormal conditions through operations, so the integrity requires that the data can be correct at any time Read and synchronize appropriately on write operations.

Reliability requires minimizing the possibility of data damage and loss, for which redundant backups of data are usually required. Manageability requires data to be managed by administrators and upper-level service providers in a coarse-grained and logically simple manner. For this reason, it usually requires sufficient and reliable automated management processes for data management within the infrastructure layer. For the specific cloud infrastructure layer, there are other data management requirements, such as data reading performance or data processing scale requirements, and how to store massive data in the cloud computing environment.

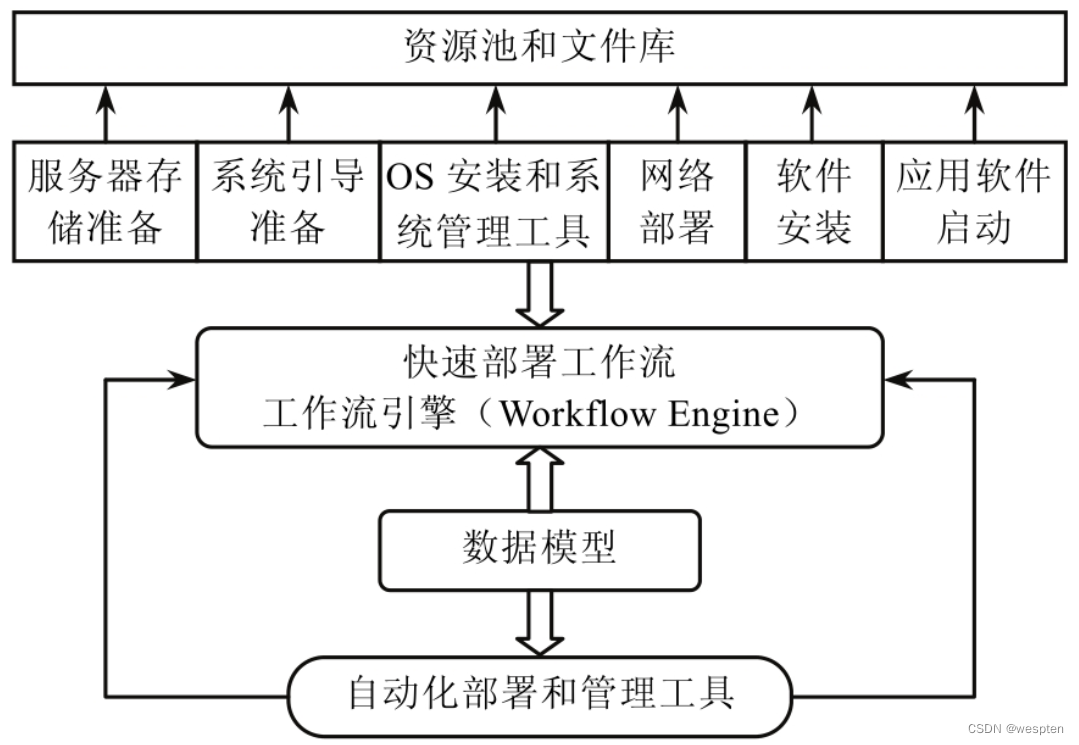

5. Resource deployment

Resource deployment refers to the process of delivering resources to upper-layer applications through automated deployment processes, that is, the process of making infrastructure services available. In the initial stage of application environment construction, when all virtualized hardware resource environments are ready, resource deployment in the initialization process is required; in addition, resource deployment is often performed twice or even multiple times during the application running process. In order to meet the needs of upper-layer services for resources in the infrastructure, that is, dynamic deployment during operation.

There are many application scenarios for dynamic deployment. A typical scenario is to achieve dynamic scalability of infrastructure, that is, cloud applications can be adjusted according to specific user needs and changes in service conditions within an extreme period of time. When the workload of user services is too high, users can easily expand their own service instances from several to thousands, and automatically obtain the required resources.

Usually, this kind of scaling operation must not only be completed in a very short time, but also ensure that the complexity of the operation will not increase with the increase in scale; another typical scenario is fault recovery and hardware maintenance. In cloud computing, thousands of In a large-scale distributed system consisting of tens of thousands of servers, hardware failure is inevitable. Applications also need to be temporarily removed during hardware maintenance. The infrastructure needs to be able to replicate the data and operating environment of the server and establish the same environment on another node through dynamic resource deployment, so as to ensure rapid recovery of services from failures.

6. Security management

The goal of security management is to ensure that infrastructure resources are legally accessed and used. Cloud computing needs to provide a reliable security protection mechanism to ensure data security in the cloud, and provide a security review mechanism to ensure that operations on cloud data are authorized and available. being tracked.

The cloud is a more open environment where user programs can be executed more easily. This means that malicious code and even virus programs can destroy other normal programs from within the cloud. Because programs are quite different from traditional programs in the way they run and use resources, how to better control code behavior or identify malicious code and virus code in a cloud computing environment has become a new challenge for administrators; at the same time , in the cloud computing environment, data is stored in the cloud, how to prevent cloud computing managers from leaking data through security policies is also a problem that needs to be considered emphatically.

7. Billing Management

Cloud computing is also a pay-as-you-go billing model. By monitoring the usage of the upper layer, it is possible to calculate the storage, network and memory resources consumed by the application within a certain period of time, and charge users based on these calculation results. During the specific implementation, the cloud computing provider can adopt some appropriate alternative methods to ensure the smooth completion of the user's business and reduce the fee that the user needs to pay.

The primary function of a virtualized resource platform is to integrate a large number and various types of computer resources, such as storage and network resources. And these resources need to be effectively monitored to achieve load balancing. To this end, the virtualized resource platform needs to have good heterogeneity and be able to effectively integrate different hardware and software resources; in addition, the virtualized resource platform has the function of dynamic resource deployment. That is, two or even multiple resource deployments can be performed during the running of the application. In this way, resources can be used on demand, and resource management and support tools transparent to physical facilities can be provided.

2. Virtual resource platform

In order to break through the key technologies of computing resource virtualization, software as a service, and resource flexible scheduling, it is necessary to build a virtual resource platform layer to provide users with services such as computing resources, virtual machine resources, virtual storage, services, and data resource space, and complete the support " Software as a Service, Location Transparency, and Interaction Pervasiveness" is a networked operating system virtualization platform for new network application models.

1. General framework

A virtualized resource platform (infrastructure layer) can aggregate and manage resources from one or more clusters, a cluster being a group of machines connected to the same LAN. There can be one or more virtual machine management node instances in a cluster, and each instance manages the startup, termination and restart of virtual instances. According to the number of clusters, the virtualization platform layer can be divided into two architectures: single-cluster and multi-cluster.

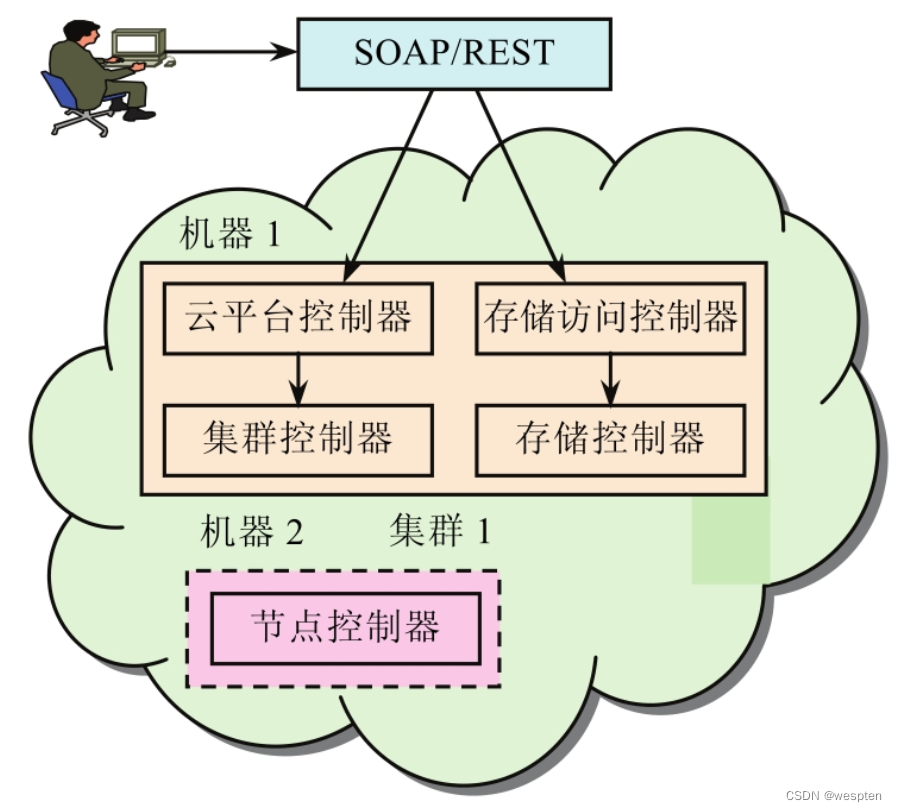

The single-cluster architecture is shown in the following figure:

It contains at least two machines, one machine runs cloud platform controller (CLoud Controller, CLC), cluster controller (Cluster Controller, CC), storage access controller (Walrus) and storage controller (Storage Controller, SC), etc. 4 front-end components; the other runs the Node Controller (NC), this configuration is mainly suitable for the purpose of experimentation and quick configuration. The installation process can be simplified by combining the front-end components into a single machine, but the performance of this machine needs to be very robust.

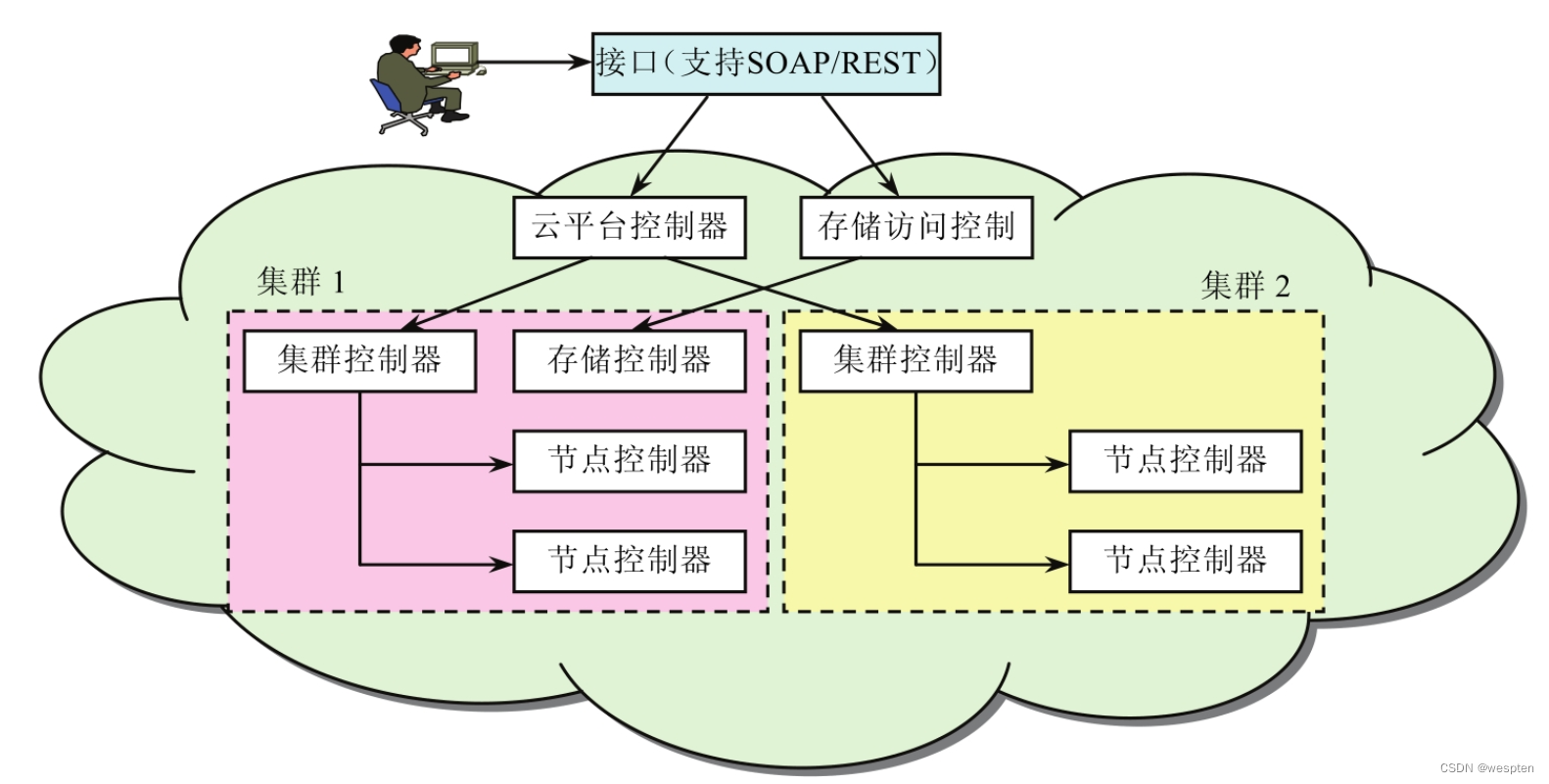

The multi-cluster architecture is shown in the following figure:

Here the individual components (CLC, SC, Walrus, CC and NC) can be placed in separate machines. This is an ideal way to configure the platform layer if you need to use it to perform significant tasks. A multi-cluster installation can also significantly improve performance by choosing the right machine for the type of controller it is running on. For example, you can choose a machine with an ultra-fast CPU to run CLC or choose a machine with large storage space to install Walrus. Multi-cluster The result is increased availability, and distribution of load and resources across the cluster. The concept of a cluster is similar to the concept of availability zones within Amazon EC2, where resources can be allocated across multiple availability zones so that a failure in one zone does not affect the entire application.

2. Components

Specifically, the virtualization platform layer consists of five main components. That is, CLC, CC, Walrus, SC and NC, they cooperate with each other to provide the required virtualization services, and use SOAP messaging with Work Station (Work Station, WS)-Security to communicate with each other securely.

1)CLC

CLC is the main controller component of the virtualization resource platform, responsible for managing the entire system, and is the main entrance for all users and administrators to enter the virtualization resource platform. All clients communicate with the CLC only through APIs based on SOAP or REpresentational State Transfer (REST), and the CLC is responsible for passing requests to the correct components and sending responses from those components back to the client, which is The external window of the virtual resource platform.

Every virtualized resource platform installation includes a single CLC, which acts as the central nervous system of the system, the user's visible entry point and component for making global decisions. It is responsible for processing requests initiated by users or management requests issued by system administrators, making high-level virtual machine instance scheduling decisions, and handling service level agreements and maintaining system metadata related to users. The CLC consists of a set of services that handle user requests, authenticate and maintain the system, user metadata (virtual machine images, key pairs, etc.), and manage and monitor the operation of virtual machine instances. These services are configured and managed by the Enterprise Service Bus (ESB), through which operations such as service publishing can be performed. The design of the virtualization resource platform emphasizes transparency and simplicity to facilitate experimentation and expansion of the platform.

In order to achieve this granular level of expansion, the components of CLC include virtual machine scheduler, SLA engine, user interface and management interface, etc. They are modular and independent components that provide well-defined interfaces to the outside world, and ESB is responsible for controlling and managing the interaction and organic cooperation between them. CLC can work like Amazon's EC2 by using web services and Amazon's EC2 query interface to interoperate with EC2's client tools.

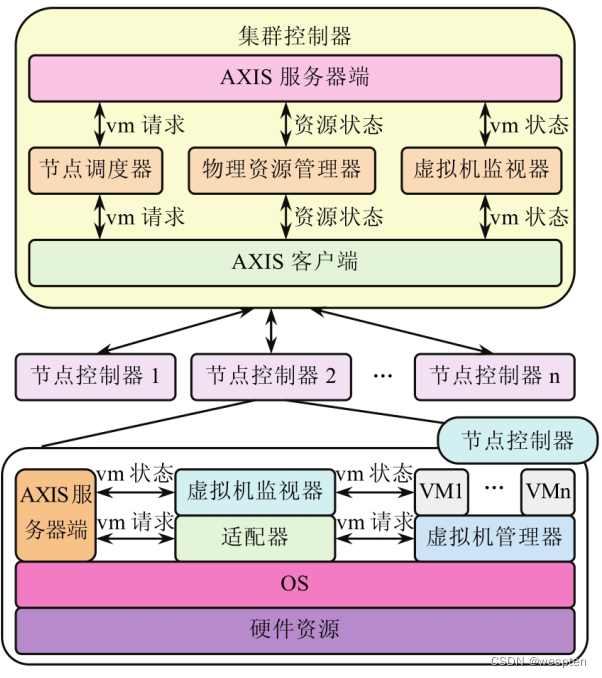

2)CC

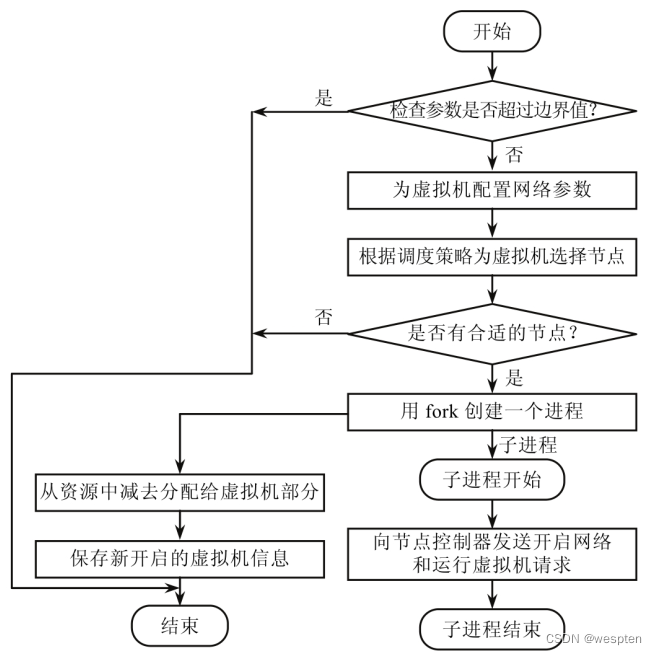

The CC of the virtualization resource platform is responsible for managing the entire virtual instance network, and the request is sent to the CC through the interface based on SOAP or REST. CC maintains all information about NCs running in the system, is responsible for controlling the life cycle of these instances, and routes requests to start virtual instances to NCs with available resources.

A typical CC runs on the head node or server of the cluster, both of which have access to private or public networks. One CC can manage multiple node controllers, and is responsible for collecting node status information from the node controller it belongs to.

According to the resource status information of these nodes, it schedules the requests of the incoming virtual machine instances to be executed on each node controller, and is responsible for managing the configuration of public and private instance networks. Like NC, CC interface is also described by Web Services Description Language (Web Services Description Language, WSDL) document, these operations include runInstances, describeInstances, terminateInatances and describeResources. Describing and terminating an instance is passed directly to the relevant node controller.

When CC receives a runInstances request, it executes a simple scheduling task. The task queries each node controller by calling describeResource, and selects the first NC with enough idle resources to execute the instance running request. CC also implements the describeResources operation, which takes the resources occupied by an instance as input and returns the number of instances that can be executed in its NC at the same time.

3)NC

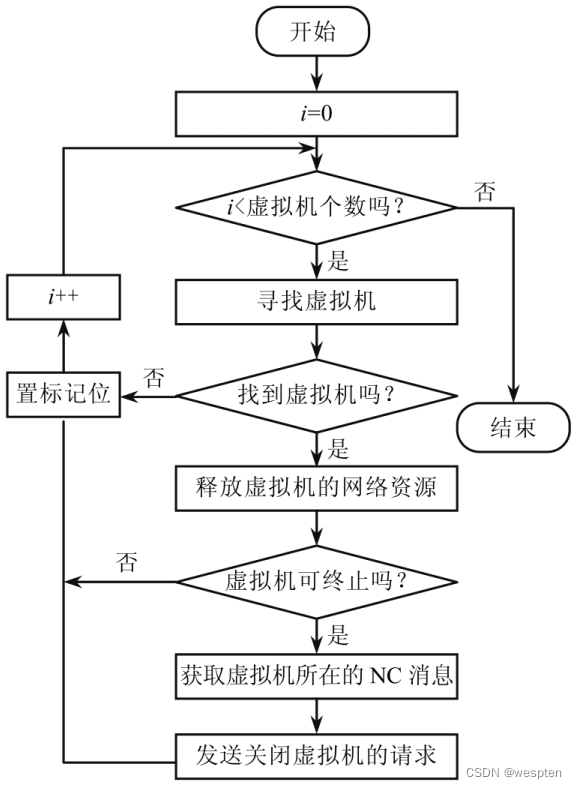

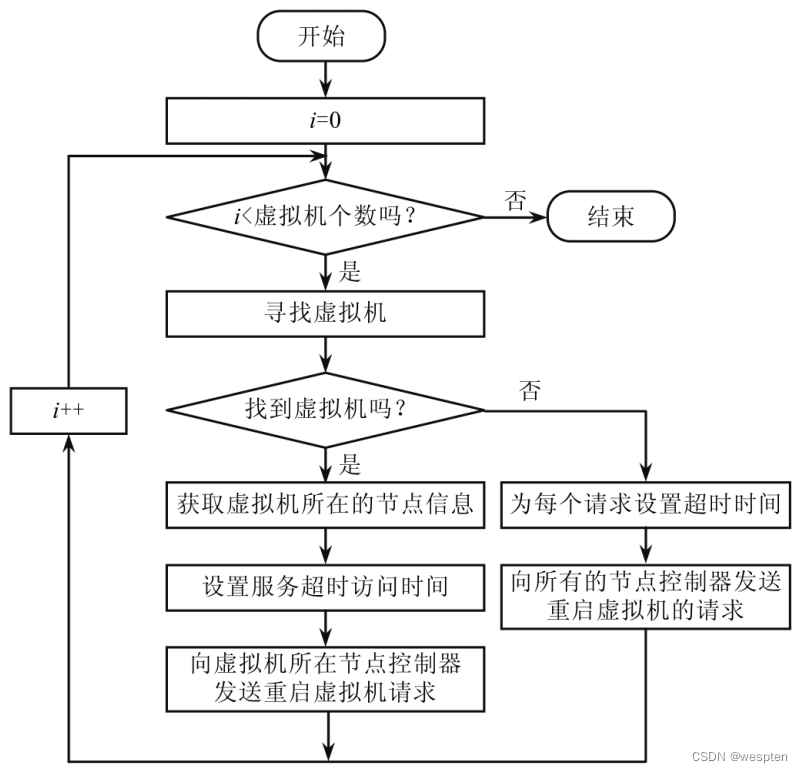

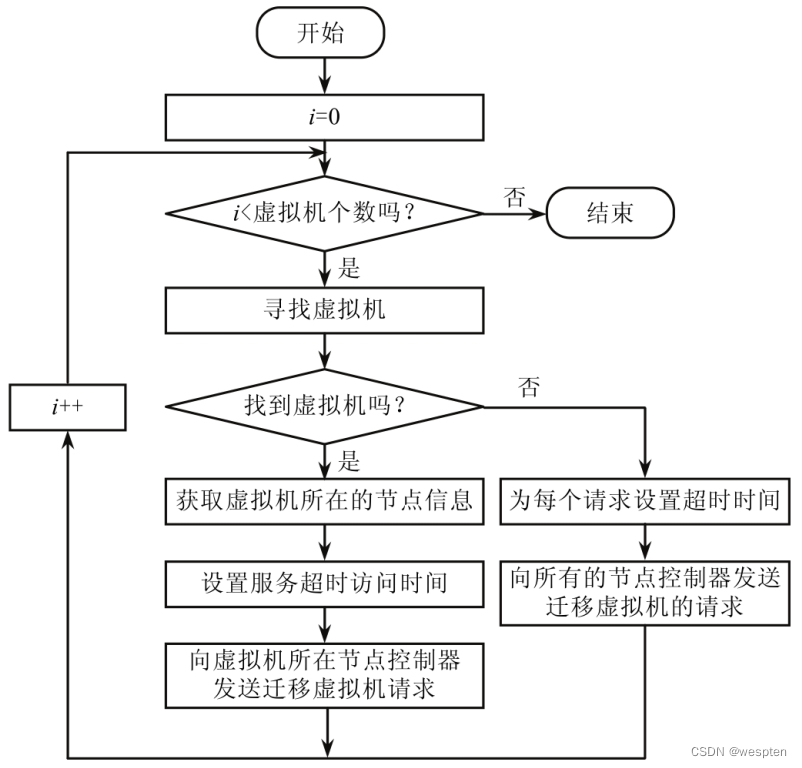

The NC control host operating system and corresponding virtualization platform hypervisor (Xen or KVM) must run an instance of NC in each machine hosting the actual virtual instance (instantiated upon request from CC).

NC is responsible for managing a physical node, which is a component running on the physical resource hosted by the virtual machine, responsible for starting, checking, shutting down and clearing the virtual machine instance, etc. A typical virtualization platform has multiple NCs installed, but only one NC needs to run on a machine, because one NC can manage multiple virtual machine instances running on the node.

NC interface is described by WSDL document, which defines the instance data structure and instance control operations supported by NC, these operations include runInstance, describeInstance, terminateInatance, describeResource and startNetwork. The running, describing, and terminating operations of the instance perform the minimal configuration of the system and invoke the current hypervisor to control and monitor the running instance.

The describeRescource operation returns the characteristics of the current physical resource to the caller, including information such as processor resources, memory and disk capacity; the StartNetwork operation is used to set and configure virtual Ethernet,

4)Walrus

This controller component manages the user's access to the storage service in the virtualization resource platform, and the request is passed to the storage access controller through the interface based on SOAP or REST.

5)SC

SC implements Amazon's S3 interface and is used in conjunction with Walrus to store and access virtual machine images, kernel images, RAM disk images, and user data. Where VM images can be public or private and are initially stored in a compressed and encrypted format, these images are only decrypted when a node needs to start a new instance and requests access to the image.

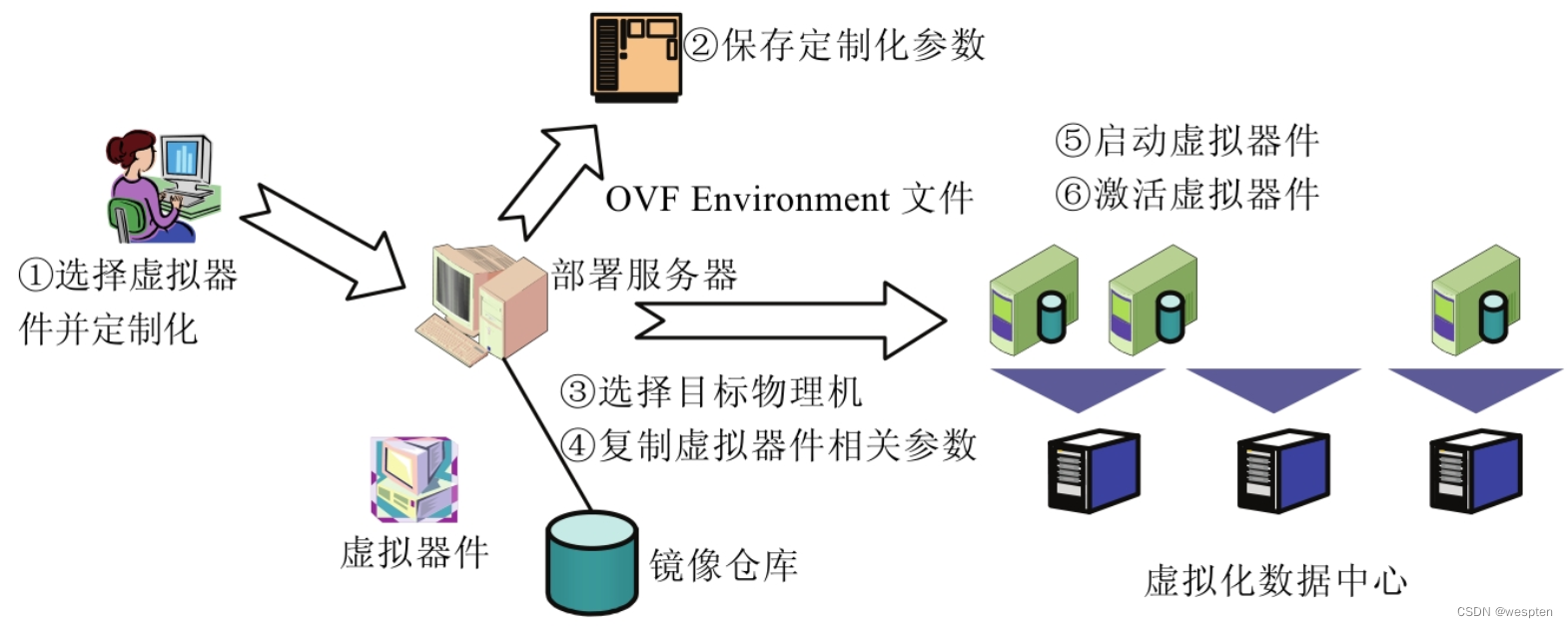

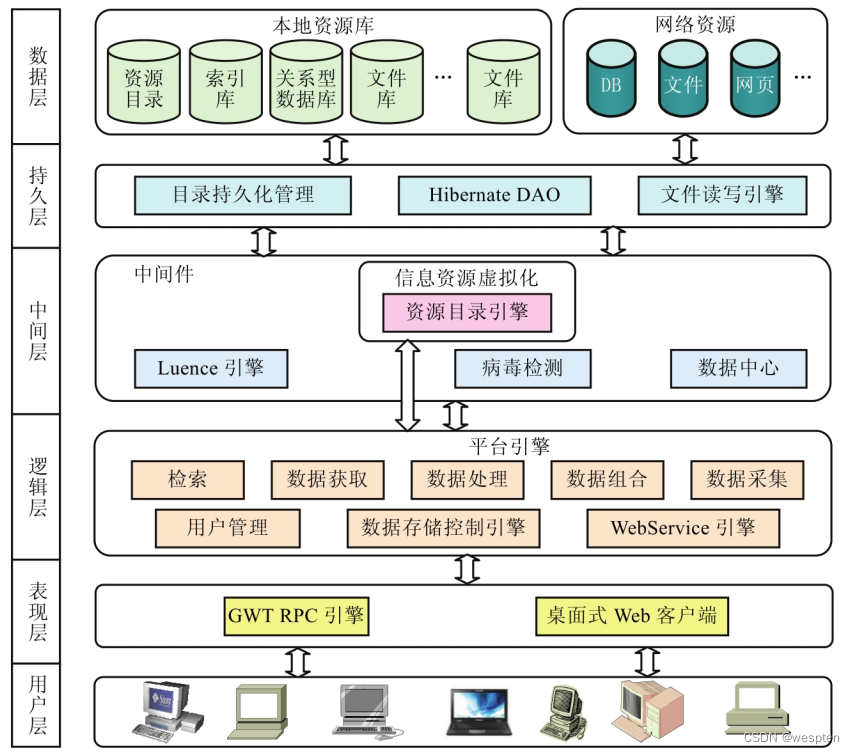

3. Virtual logical architecture of information resource cloud

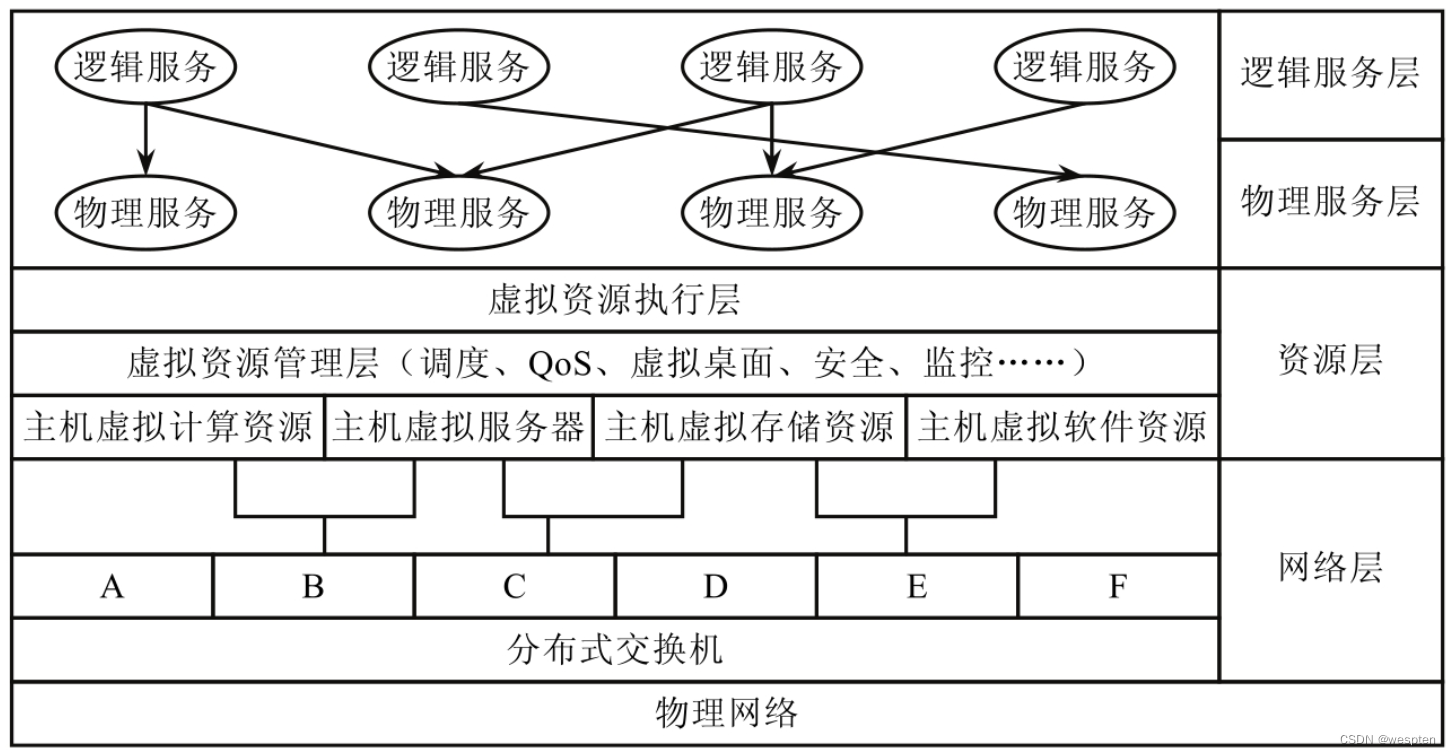

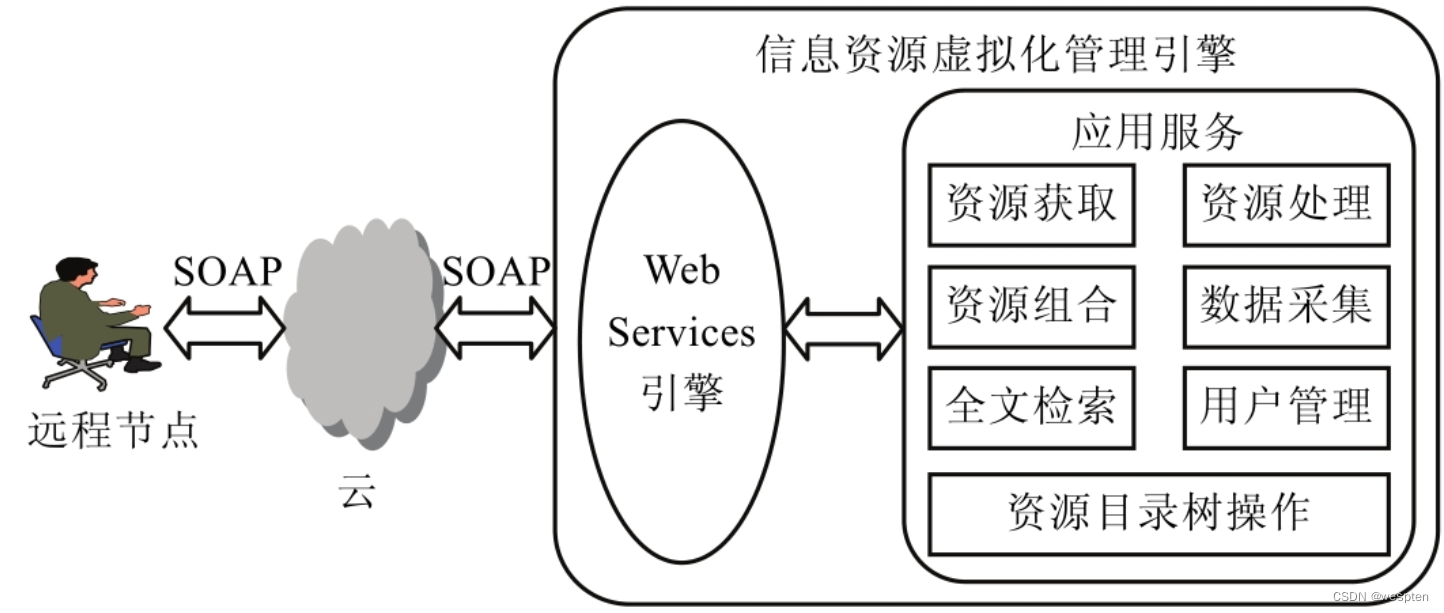

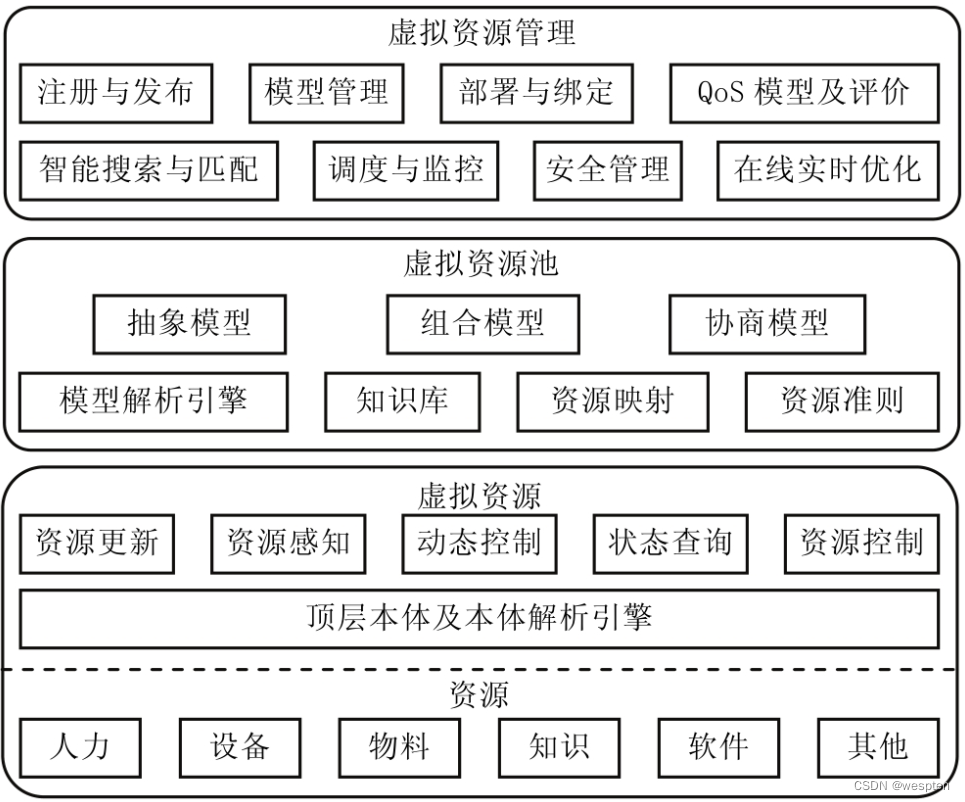

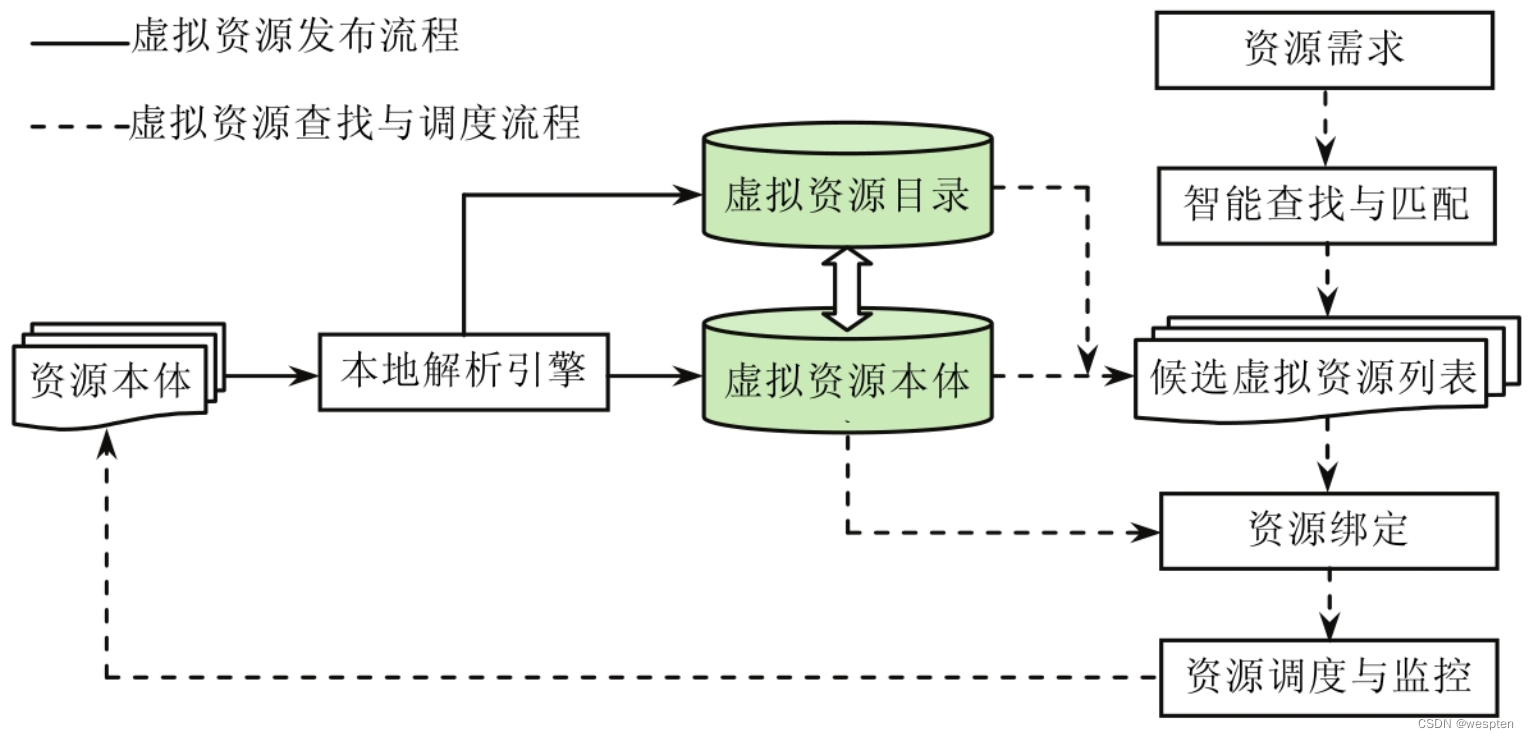

After expanding the specific attributes of information resources in combination with the cloud environment, the virtualization technology is introduced into the abstract layer model of resource virtualization to obtain a logical architecture for realizing cloud virtualization of information resources, as shown in the figure below.

It realizes virtualization from the perspective of user utilization, and can use information resources dynamically, transparently and at low cost.

1. Network layer

The network layer is the most basic layer to realize the virtualization of information resources. The physical host is not only an important carrier of information resources, but also an important part of the information resource cloud. Currently, it is mainly realized through the vNetwork network element in Vmware vSphere.

This layer utilizes distributed switches among virtual machines in a specified physical server to integrate them as a single virtual switch, so that virtual machines can ensure that their network configurations remain consistent when migrating across hosts.

One end of the switch is connected to the port group, and the other end is an uplink, which is connected to the physical Ethernet adapter in the server where the virtual machine resides. A virtual switch can link its uplinks to multiple physical Ethernet adapters to enable NIC teaming. With NIC teaming, two or more physical adapters can share the traffic load or provide passive failover in the event of a physical adapter hardware or network failure. All virtual machines associated with the same port group belong to different physical servers and are on the same network within the virtual environment.

From the perspective of the virtual machine, the communication process in the guest operating system is the same as that of the real physical device; from the outside of the virtual machine, the vNIC (virtual network interface card) has an independent MAC address and one or more IP addresses that comply with the standard Ethernet network protocol. It unifies discrete hardware resources to create a shared dynamic platform with built-in availability, security, and scalability for applications.

Network virtualization can enable virtual machines deployed in physical hosts in the data center to be interconnected in the same way as in the physical environment, regardless of which network they belong to. Thus, the boundary between different networks is eliminated, and the requirement of transparency is met.

2. Resource layer

Network virtualization enables hosts to be used on demand regardless of restrictive factors such as geography and attributes. On this basis, virtualization of storage and servers is required to virtualize the data stored in them.

1) Virtual storage resources

Virtual storage resources can transform the storage medium in the traditional environment into the mode required in the cloud environment. At present, the cloud storage system usually divides the virtual storage into the following three layers.

- Physical device layer: It is mainly used to allocate and manage resources at the data block level, and use the underlying physical device to create a continuous logical address space, that is, a storage pool. According to the attributes of physical devices and user requirements, a storage pool can have multiple data attributes, such as read and write characteristics, performance weight, and reliability level.

- Storage node layer: Allocate and manage resources among multiple storage pools inside the storage node, and integrate one or more storage pools allocated on demand into a unified virtual storage pool within the scope of the storage node. This layer is implemented inside the storage node by the storage node virtual module, which manages the storage devices allocated on demand from the bottom and supports the virtualization layer of the storage area network on the top.

- Storage area network layer: allocate and manage resources between storage nodes, and centrally manage storage pools on all storage devices to form a unified virtual storage pool. This layer is implemented by the virtual storage management module on the virtual storage management server, and manages the resource allocation of the virtual storage system in an out-of-band virtualization manner, and provides services such as address mapping and query for virtual disk management.

A two-layer address space mapping mechanism is introduced in the virtualized storage to construct two logical parts and a mapping component, in which the global extended address space is used to manage all remote free memory mapped to the local extended address space, and the serial extended address space is used to expand local physical address space.

Finally, the mapping component completes the mapping from the global extended address space to the logical extended address space according to certain rules, so as to construct a memory resource abstraction that crosses the boundary of physical server resources. In addition, using balloon drive, page swapping, content-based page sharing and page patching technologies, etc., to dynamically adjust the memory allocation size of virtual machines by releasing free memory and using remote memory, and using remote memory as an additional storage level To mediate memory allocation can achieve maximum optimization of memory resource allocation.

Each piece of data in cloud storage has several backups stored in different nodes. When a node in the cloud fails, the monitor sends a signal to quickly migrate the virtual machine. Nodes can be added and removed dynamically, which is more scalable than the original storage method.

The key to realizing information resource virtualization is storage virtualization. Hadoop distributed file system, vSphere high-performance cluster file system and Google file system are all distributed file systems applicable to cloud environment, which have high fault tolerance and can be deployed on On low-cost hardware equipment.

2) Virtual server

There are two approaches to server virtualization, namely software and hardware virtualization. Properly configured running servers allow multiple virtual machines, servers, or desktops to run on the same physical server, each without requiring its own power supply, generating its own heat, or requiring space. But these virtual machines can all contribute the same service and run in one physical machine at the same time, so that the utilization rate of each data center is greatly improved and a lot of space is saved.

The use of information resources relies on servers, and server virtualization can simplify management and improve efficiency by allocating server resources to workloads that need them most anytime and anywhere by prioritizing resources. This reduces the resources reserved for a single workload peak and plays an important role in resource scheduling.

3) Virtual data center

The result of information resource virtualization is like a huge data center, which can continuously output content on demand, which can also be called "data virtualization" in a narrow sense.

On the basis of storage and server virtualization, cloud computing focuses on providing packaged general services and resources to users. The processing of heterogeneous resources does not rely on middleware, but does different processing for different resources. When responding to the program, it allocates a CPU, memory and software instead of heterogeneous resources. This facilitates management and saves costs. Therefore, the resources in the virtual data center can be grouped together with similar attributes according to isomorphic resources, and then integrated through physical-to-logical mapping.

The virtual data center can import data from different data sources into an abstracted service layer, which helps to reduce the need for physical storage systems and provide data for all applications that use data (especially business intelligence systems, analysis systems and transaction system) provides a unified interface.

4) Virtual resource management layer

To achieve on-demand, dynamic and effective provisioning, various virtualization methods must be organized reasonably, and the management layer is responsible for storage management, scheduling monitoring, desktop instantiation, QoS evaluation and security, etc.

After the virtualization of servers, etc., the scale increases, and its migration characteristics make it difficult to visualize the physical location of virtual servers in the network. The management layer can introduce resource view and virtual topology to manage resources. Through the virtual resource view, you can view the resource affiliation information of physical servers, virtual switches, VMs, and network configuration capabilities; virtual topology data aggregates all nodes into physical server nodes , and can reflect the virtual world inside the physical server.

The monitor is responsible for viewing the availability and resource usage rights of the virtual machine and monitoring the resource storage operation status after receiving the request. When it is found that the storage node has failed, the control node can transfer the workload to the normal storage node to complete.

Desktop instantiation is a virtual desktop, which can integrate the display windows of all software instances to truly present a virtualized experience for users. It enables the fusion of local and virtual desktops through the process of requesting, authenticating, connecting, enabling list, applying, and disconnecting. End-user devices become lightweight computers that only handle keyboard, mouse, monitor, and locally attached scanners and printers, allowing them to truly be used anywhere. Compared with the traditional "fat desktop", its advantages are firstly lower cost, and improved manageability, security, flexibility and resettability; secondly, only the required application windows are pushed to customers; thirdly, dynamic allocation Resources, high performance and load balancing; the fourth is to have a protocol that controls the interaction between the client and the server.

Security management performs the tasks of third-party authentication, authorization, and verification of user identities, and can also dynamically detect changes in user loyalty through file information to protect sensitive data.

5) Virtual resource execution layer

The core function of this layer is to support the execution of virtual resource tasks.

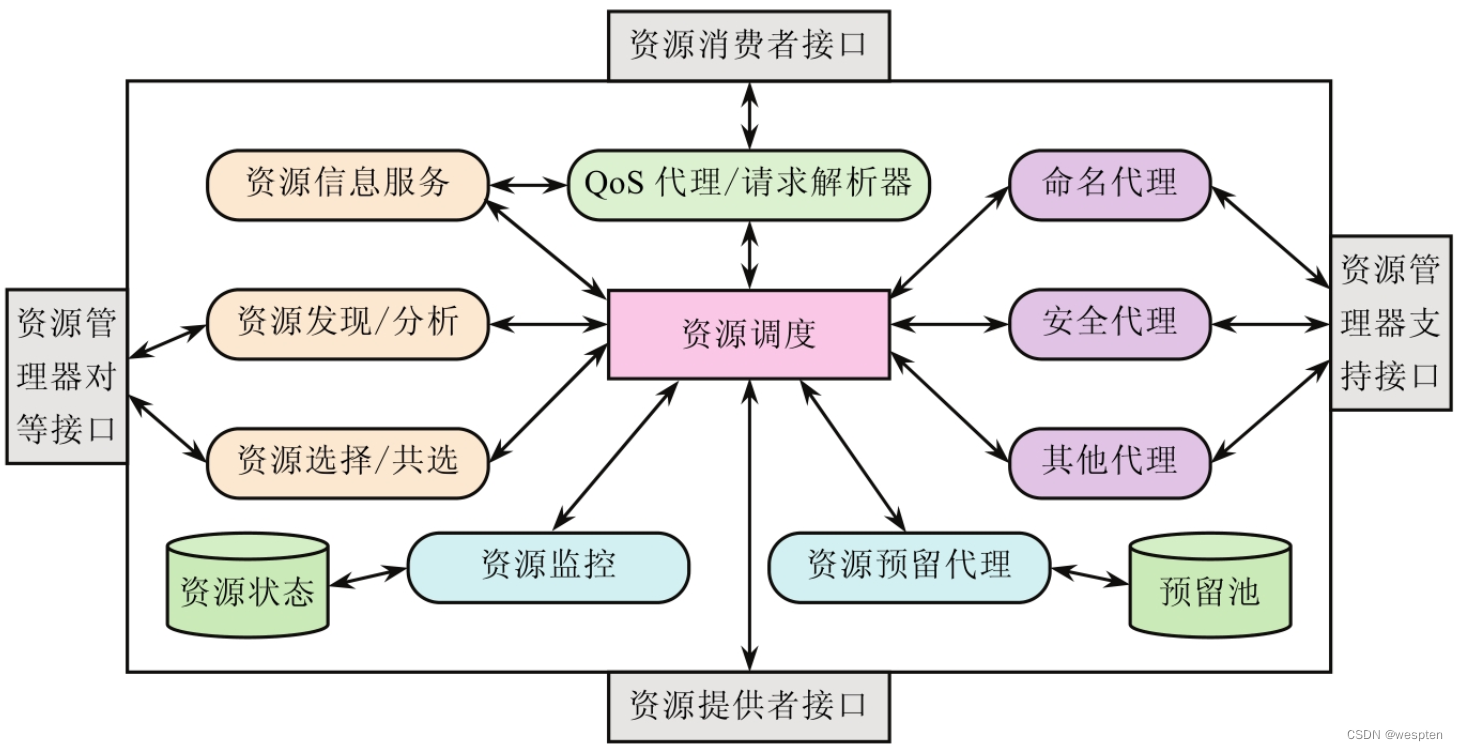

Resource scheduling is an important link to improve resource utilization efficiency in a cloud environment. For each service, real-time request volume is predicted based on load models, historical data analysis, and external event signs to meet resource requirements at a given point in time. Resource scheduling generally includes four steps, namely resource request, resource detection, resource selection and resource monitoring.

Firstly, user needs and resources are detected, and resources are evaluated according to the detected resource indicators and the predefined resource scheduling strategy. That is, select the optimal resource from the list of candidate resources, and perform corresponding actions according to the strategy and evaluation results. Then start the virtual machine to a suitable machine, so that the resources in the resource pool can be used reasonably. The scheduling migration strategy can reasonably shut down idle servers or start multiple virtual machines to balance the load when completing tasks with relatively heavy loads according to user needs.

Resources in the virtual execution environment are not aware of the existence of the virtualization layer, but will run as in the traditional computing environment, providing an independent environment for virtual resources. For software resources, virtual software can be dynamically deployed as long as part of it is not even installed in the system.

3. Physical service layer

The physical service layer mainly solves the problem of unified standardization of resources and unified call interface. Resource service encapsulation is a method of virtualization.

The first step is to describe the resource, that is, to select the corresponding resource description template. And fill in the corresponding resource attribute information as required to form a resource attribute document in XML format; the second is to package and form resource implementation classes as needed; the third is to deploy resources, that is, call the interface of the resource adapter to join the resource adapter. The resource adapter will automatically generate resources and obtain information about resource implementation classes. In this way, the service-oriented encapsulation of resources is completed, and a unified call interface is presented to the outside world.

There are three types of resource-to-service mapping. One is one-to-one. The functions that resources can complete are relatively simple, and they are directly encapsulated in the form of services. The second is many-to-one. Multiple combined resources are expressed as a single logical representation that provides a single interface. form; the third is one-to-many, for relatively powerful resources. Each function is independent of each other, and can be packaged into services that can complete different functions according to the functions.

4. Logical service layer

The logical service layer abstracts service functions from specific services and describes them in the form of logical services, thus forming a logical service layer to meet dynamically changing requirements or specific business needs.

The description of physical services and logical services is mainly described from two aspects: functional and non-functional attributes. The functional attribute description is the internal processing logic of the service, describing what the service can do; the non-functional attribute is also called "service quality" (Qos) , describing the external performance of the service when in use, such as performance, price, reliability, availability, and security.

There are two types of mapping from physical services to the logical layer. One is multiple physical resources with the same function and different non-functional attribute values. These physical services can complete the same business function and are the same kind of service.

Virtualize it into a logical service, and select the appropriate physical resource to use according to the specific non-functional attribute requirements during actual operation. The second is multiple physical resources with the same function and the same non-functional attributes. In order to increase fault tolerance or solve problems such as load balancing, multiple copies of a physical service are deployed on multiple machines. When invoking a physical service, one can be dynamically selected according to the current service operation status.

The above model basically covers the virtualization implementation methods of hardware, software, and data. The layers from top to bottom are not clearly defined and function independently, but complement each other and penetrate each other.

3. Resource virtualization design and implementation

1. How cloud computing realizes the virtualization of resources

According to the service model definition of Infrastructure as a Service (IaaS), service providers need to provide tenants with pay-as-you-go standardized elastic resource services, including elastic computing, elastic storage, elastic broadband, and virtual machines.

Cloud computing converts all hardware resources in the VDC provider's physical computer room into a huge resource pool through virtualization and standardization, and all virtual facilities in it are products that the provider can provide services, and are packaged in a standardized IaaS manner. Standard templates are rented out to users. Tenants can apply online and on-demand for the resources they need, and obtain access and use rights to the resources they apply for in a short period of time.

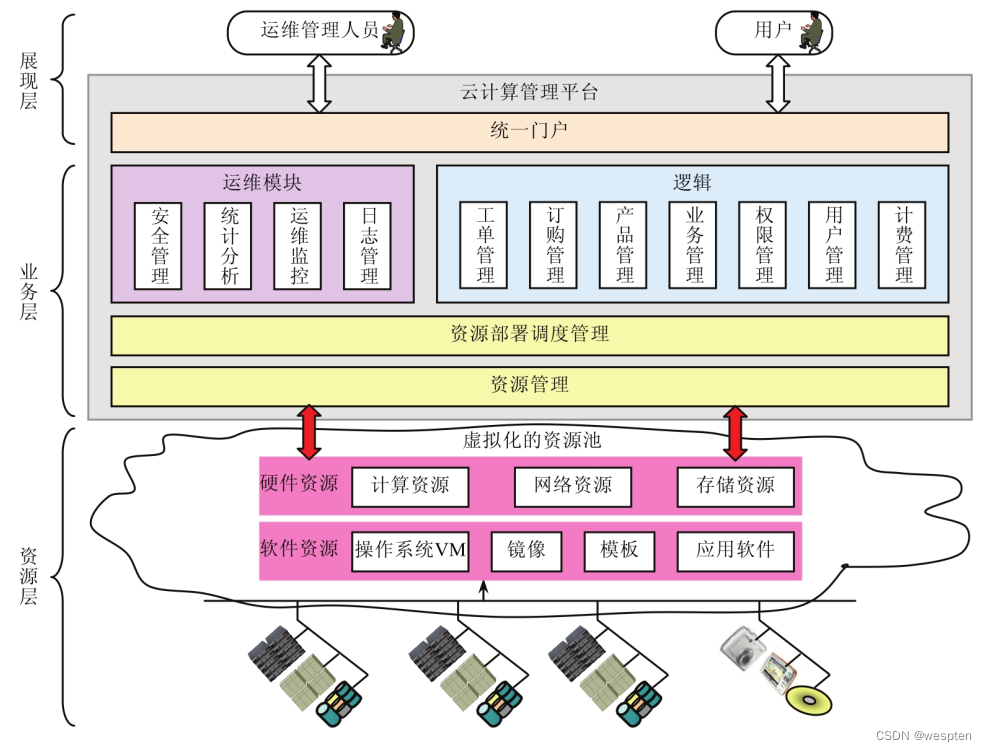

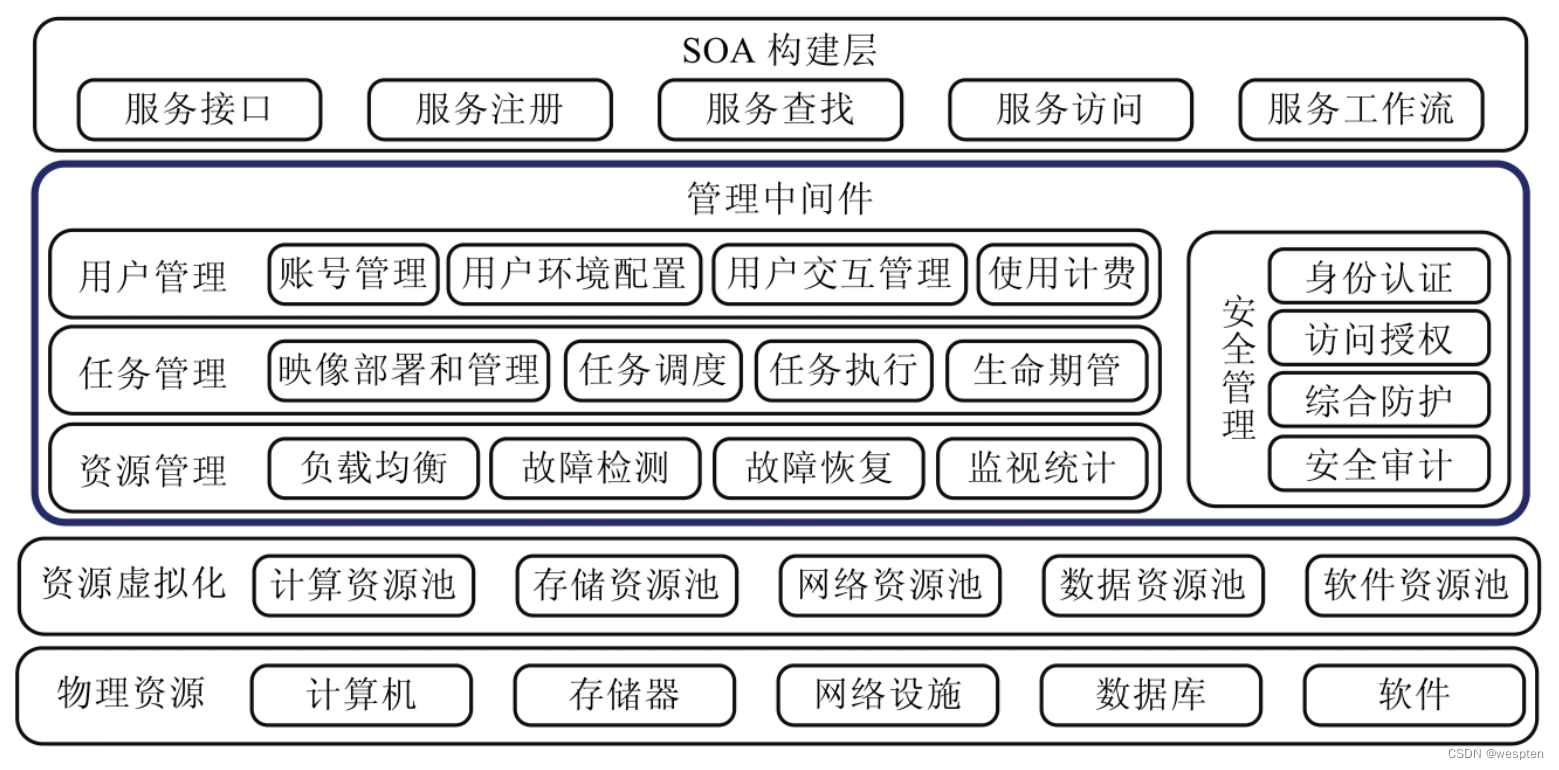

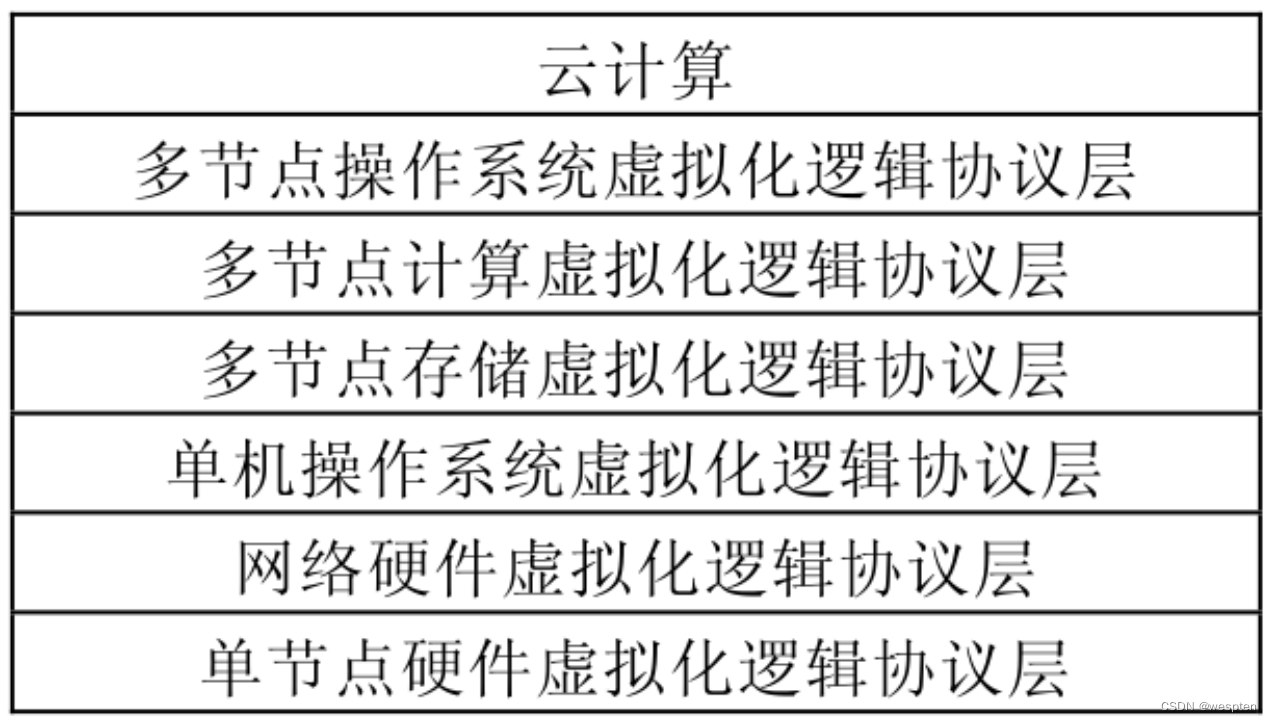

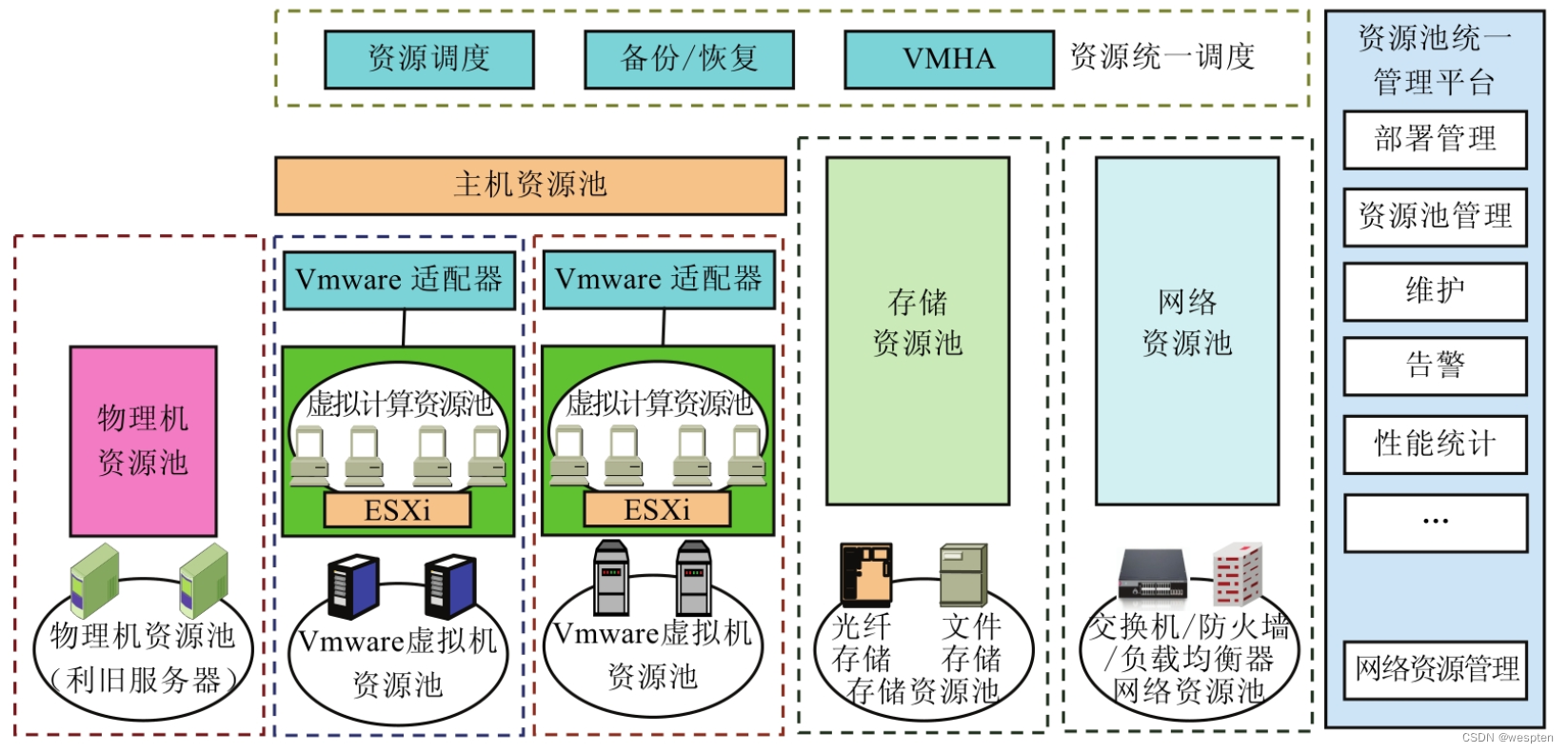

The following figure shows the hierarchical architecture of cloud computing to realize resource virtualization:

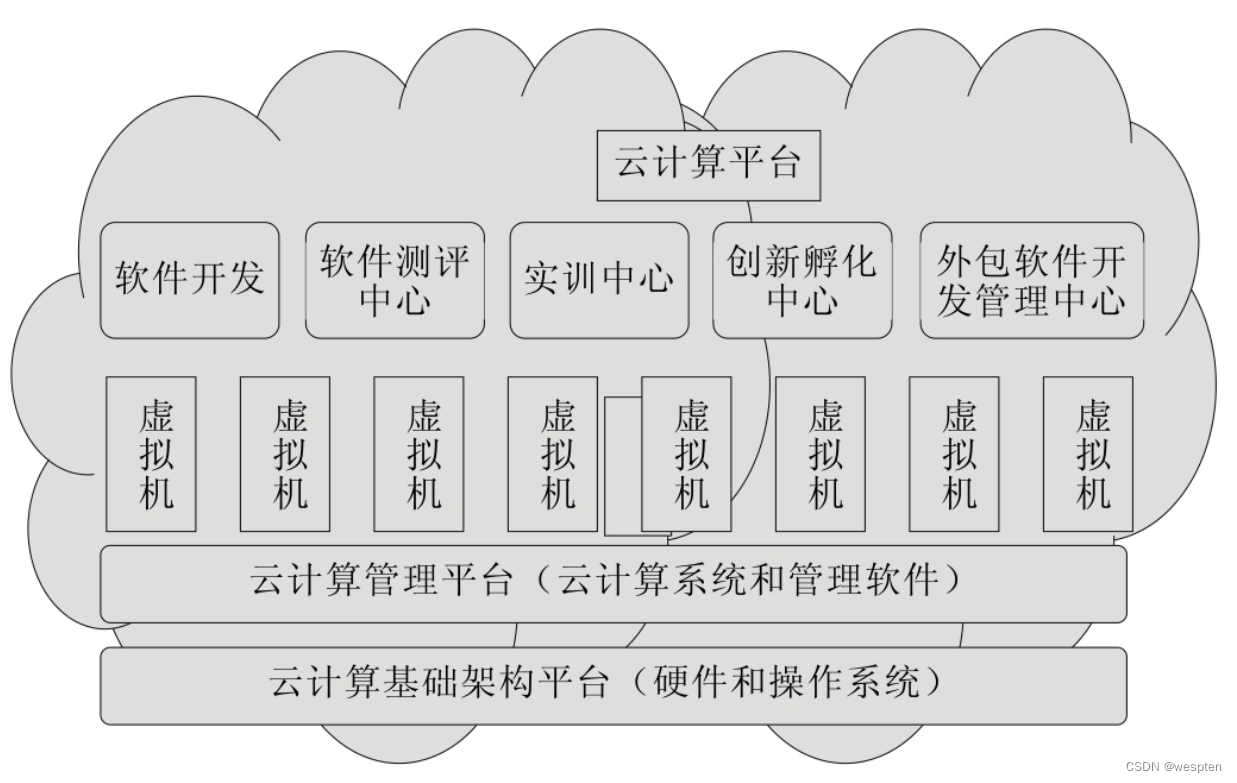

Administrators and ordinary users log in to the cloud computing management platform through the unified portal, and the services provided by the cloud computing management platform that each user can use according to different authorization levels are also different. What VDC tenants care about is how to apply for required resources on the cloud management platform, and view resource usage status, bills, and historical records.

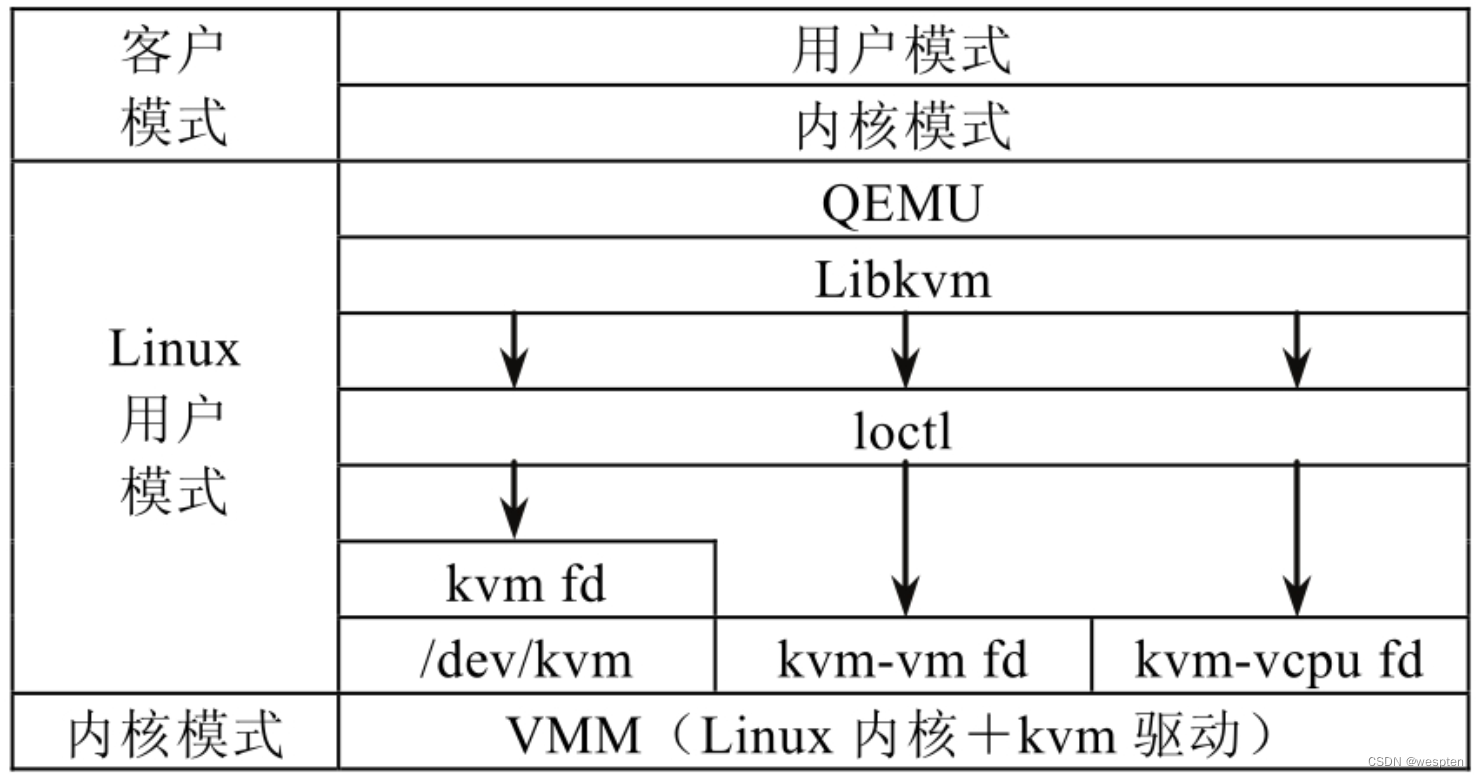

At the resource level, the cloud management platform implements virtualization of hardware resources through a virtual machine monitor (VMM), including CPU, memory, input/output (I/O) and network virtualization. Virtualized hardware resources are collected by different hypervisors and packaged into virtual machines (VMs) according to the instructions of the business layer.

2. Static and dynamic allocation algorithms for virtual resources

The virtualized operating environment in the cloud computing platform adopts an innovative computing model to enable users to obtain almost unlimited computing power through the Internet at any time, so that users can freely use computing and services and charge according to volume. The scalability and flexibility of virtualization technology greatly help the virtualized operating environment to improve resource utilization, and simplify the management and maintenance of resources and services.

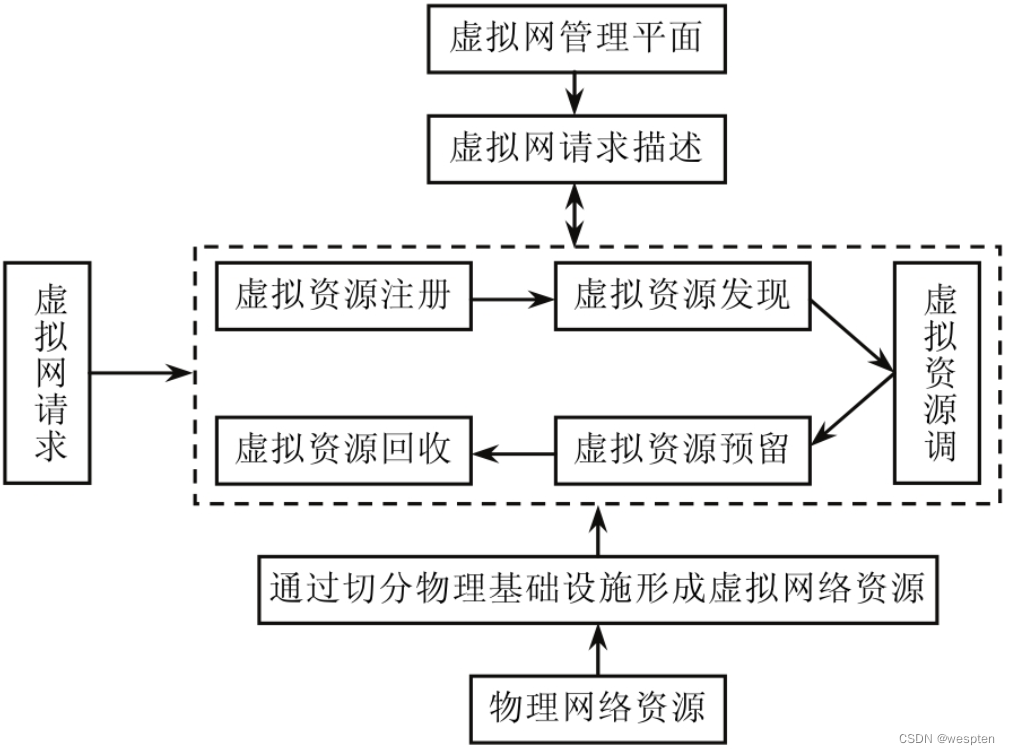

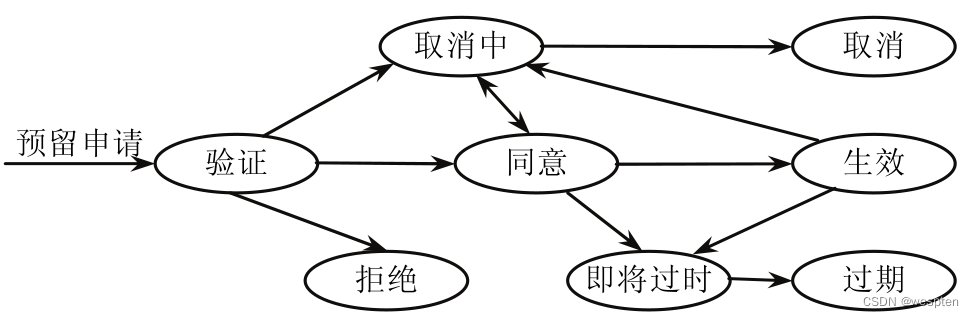

By integrating the physical resources of tens of thousands of servers to build a resource pool, it is finally provided to users on demand in the form of services. And it provides the virtual running environment of mainstream operating systems commonly used in Linux and Windows series, so that users can use the virtual machine in the virtual and running environment just like using a local physical machine. For various topologies and access control mechanisms, dynamic resource allocation of virtual networks requires persistent virtual network monitoring and dynamic update of physical node and link capacity.

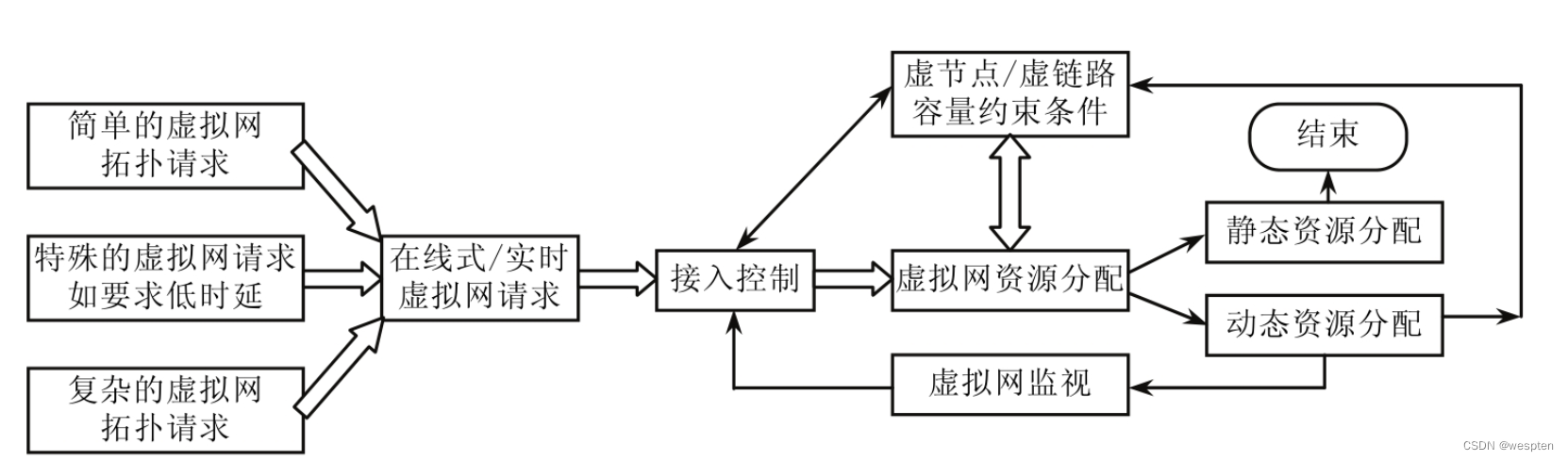

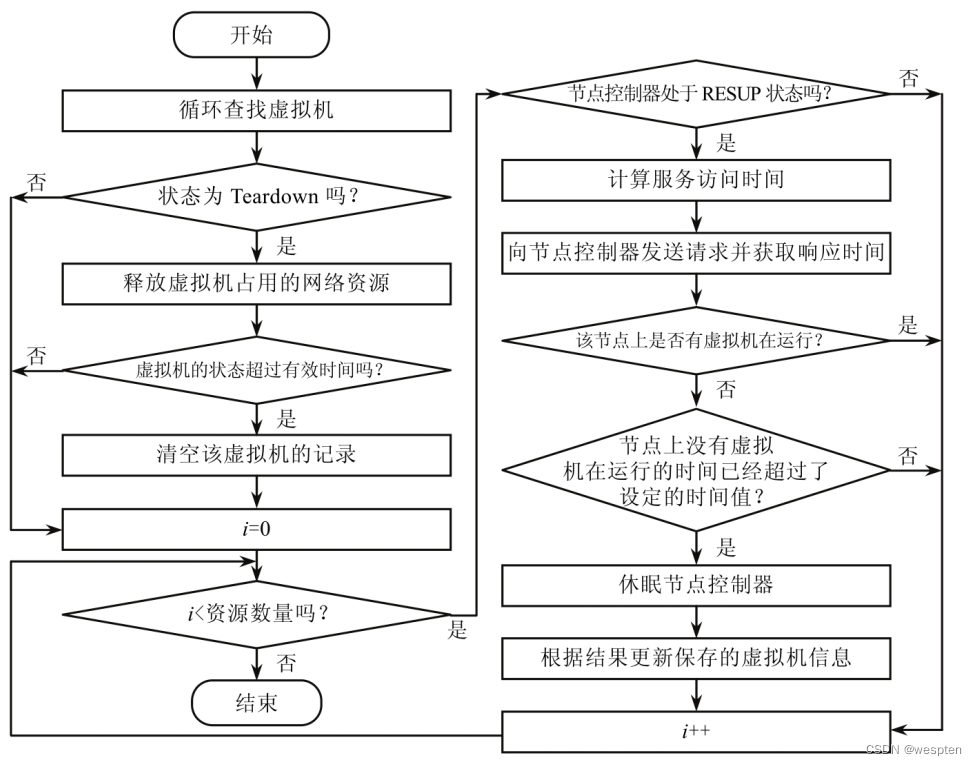

The following figure shows the dynamic and static resource allocation algorithm:

1. Static algorithm

Static virtual network mapping algorithms can be divided into the following two categories.

1) Two-stage virtual network mapping algorithm

The two-stage virtual network mapping algorithm separates node mapping and link mapping and executes them sequentially. Even if the offline virtual network mapping problem can be transformed into a demultiplexer problem, it is still an NP-hard problem. In the case of completed node mapping, virtual link mapping in the case of inseparable flows is also an NP-hard problem.

Therefore, the existing virtual network mapping algorithms all use greedy node mapping combined with a heuristic-based link mapping scheme to map the virtual network. Separate mapping of nodes and links can simplify the processing of the problem.

2) Virtual network mapping algorithm for one-step solution

The one-step virtual network mapping algorithm maps virtual network nodes and links at the same time to obtain the optimal mapping effect. Several common one-step virtual network mapping algorithms are as follows.

- Distributed Cooperative Segmentation Algorithm

In order to reduce the complexity and delay of the virtual network mapping algorithm, the distributed cooperative virtual network mapping algorithm divides the virtual network into several star topologies, that is, the hub-and-spoke topology. The algorithm uses a multi-agent-based scheme to simultaneously map the nodes and links of the star topology. The node mapping scheme also uses a greedy method to allocate the physical nodes with the largest available resources into hub nodes, and uses the shortest path algorithm to select connecting hub nodes. and the physical path of the spoke node. The distributed mapping method can obtain the advantages of no single point of failure and parallel processing of virtual network requests, but compared with the centralized mapping algorithm, its performance in terms of response time and global optimal resource allocation is poor.

Algorithms that aim to simultaneously map nodes and links can get stuck in multiple local optima if they need to recompute costs when network conditions change.

- Mapping algorithm based on business constraints

In order to narrow the search space, the VN topology is restricted to the back-bone Star structure. Some of these nodes are back-bone nodes and others are access nodes. The back-bone node is the center of the star topology, and access nodes are connected to the back-bone nodes.

In order to further reduce the complexity of the problem, the back-bone nodes are limited to a fully interconnected structure, that is, the entire virtual network topology is a complete graph composed of stars and rings. This virtual network mapping algorithm with the optimization goal of minimizing the virtual network mapping cost is only solved for one virtual network request, and the topology generated by the algorithm is not optimal, but the search space is narrowed.

This method transforms the mapping of back-bone nodes into a mixed integer quadratic programming model by limiting the topology of the virtual network, and uses the flow constraints between nodes to find the virtual network mapping scheme with the minimum mapping cost through an iterative method. However, the calculation of the algorithm is complex, and the generality of the virtual network topology design for a specific structure is poor.

- Algorithms that only consider embedded virtual links