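In an earlier post, PyQt5实现深度学习平台Demo(八)- c#调用python方式完成训练和预测 (jiugeshao的专栏, CSDN博客), I mentioned that my main focus going forward would be deep-learning algorithms for classification, detection, and segmentation. I have studied these algorithms before, but I never implemented each of them in code, so I want to spend some time building rough implementations of them.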

Let's start with FCN. For reference, my current environment is:

Python from Anaconda3

tensorflow 2.3.1

cuda 10.1

cudnn 7.6

For setup details, see my post PyQt5实现深度学习平台Demo(三)- Anaconda3配置tensorflow2.3.1及如何转化tensorflow1.x系列代码 (jiugeshao的专栏, CSDN博客).

For an overview of the theory behind FCN, see my post 深度学习之检测、分类及分割(二) (jiugeshao的专栏, CSDN博客).

During upsampling, transposed convolutions (deconvolutions) restore the small feature maps to the resolution of the input image.
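To make the sizes concrete, here is a minimal sketch of how the feature map shrinks through the five pooling stages for a 288 x 512 input, and how the three upsampling steps undo that. It assumes every pooling layer is 2x2 with stride 2 and "SAME" padding (so each stage halves the size, rounding up); the function name `fcn8_feature_sizes` is made up for this illustration.

```python
import math


def fcn8_feature_sizes(height, width, num_pools=5):
    """Return {"pool1": (h, w), ...} assuming 2x2, stride-2, SAME-padded pooling."""
    sizes = {}
    h, w = height, width
    for stage in range(1, num_pools + 1):
        # SAME padding with stride 2 gives ceil(size / 2)
        h, w = math.ceil(h / 2), math.ceil(w / 2)
        sizes["pool%d" % stage] = (h, w)
    return sizes


sizes = fcn8_feature_sizes(288, 512)
for name, hw in sizes.items():
    print(name, hw)

# Upsampling path in an FCN-8s style network:
#   scores at pool5 size --x2--> pool4 size --x2--> pool3 size --x8--> input size
pool3_h, pool3_w = sizes["pool3"]
print("pool3 x 8 =", (pool3_h * 8, pool3_w * 8))
```

With these assumptions, the last step (stride 8) lands exactly on the input size because 288 and 512 are both divisible by 32.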

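The code implementation of FCN follows.

This post experiments on top of the code at https://github.com/shekkizh/FCN.tensorflow. The project structure in PyCharm is as follows:

The original images in the trainning and validation folders under images come from a zebra-crossing detection dataset that I downloaded from the internet (I kept only part of the data and resized the originals to 512*288).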
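The resizing step can be scripted. The snippet below is only a sketch using Pillow: the 1920 x 1080 source size and the helper name `resize_to_target` are assumptions for illustration, since the post does not state the original resolution. Note that Pillow takes sizes as (width, height).

```python
from PIL import Image

TARGET_SIZE = (512, 288)  # (width, height), the size used in this post


def resize_to_target(img, size=TARGET_SIZE):
    """Resize a PIL image to the target size with bilinear interpolation."""
    return img.resize(size, Image.BILINEAR)


# Stand-in for a downloaded photo; a real script would use Image.open(path).
demo = Image.new("RGB", (1920, 1080), color=(128, 128, 128))
small = resize_to_target(demo)
print(small.size)
```

Bilinear interpolation is fine for the photos themselves; label images should not be resized this way because interpolation would blend class ids.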

Link: https://pan.baidu.com/s/1KRJCqnq8SbLT9dY5Ls3nbg

Extraction code: vki8

Download location for imagenet-vgg-verydeep-19.mat:

Link: https://pan.baidu.com/s/1yjbiXkevQH7ukcd0f-9x1Q

Extraction code: 9goj

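1. With the above prepared, first annotate the images with labelme. For an introduction to labelme and how to use it, see the post 数据标注软件labelme详解 (黑暗星球, CSDN博客).

Annotate the images in the trainning folder (24 images) and the validation folder (8 images) one by one.

After annotation, each image has a corresponding json file. What we need is the label image, not the json file, so a conversion step is required.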
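To show what this conversion amounts to, here is a minimal sketch that rasterizes one annotated polygon into a label image with Pillow. It is not labelme's own code: the helper `polygon_to_mask`, the example label name, and the example points are all made up, and only the general idea (polygon vertices in, class-id image out) carries over.

```python
import numpy as np
from PIL import Image, ImageDraw


def polygon_to_mask(points, height, width, class_id=1):
    """Fill the polygon given by [[x, y], ...] with class_id; background stays 0."""
    mask = Image.new("L", (width, height), 0)
    xy = [tuple(p) for p in points]
    ImageDraw.Draw(mask).polygon(xy, outline=class_id, fill=class_id)
    return np.array(mask, dtype=np.uint8)


# Hypothetical shape entry, in the spirit of the "shapes" list in a labelme json file.
shape = {"label": "zebra_crossing",
         "points": [[100, 200], [400, 200], [450, 280], [60, 280]]}

label = polygon_to_mask(shape["points"], height=288, width=512)
print(label.shape, np.unique(label))
```

The resulting array holds small integers (0 for background, 1 for the object), which is why such label images look black in an ordinary image viewer.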

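I modified labelme's json_to_dataset.py as shown below. The modified version converts json files in batch and also gathers all the resulting label images into a single folder.

The modified json_to_dataset.py: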

import argparse
import json
import os
import os.path as osp
import warnings

import numpy as np
import PIL.Image
import yaml
from labelme import utils


def main():
    '''
    usage: python json2png.py json_file
    (json_file is the folder that contains the labelme json files)
    '''
    parser = argparse.ArgumentParser()
    parser.add_argument('json_file')
    parser.add_argument('-o', '--out', default=None)
    args = parser.parse_args()

    json_file = args.json_file
    file_list = os.listdir(json_file)

    # all label images are additionally gathered in <json_file>/gt
    gtFolder = os.path.join(json_file, "gt")
    if not osp.exists(gtFolder):
        os.mkdir(gtFolder)

    for json_name in file_list:
        # skip everything that is not a json file
        if not json_name.endswith(".json"):
            continue
        path = os.path.join(json_file, json_name)
        filename = json_name[:-5]  # strip the ".json" suffix
        if os.path.isfile(path):
            with open(path) as f:
                data = json.load(f)
            img = utils.img_b64_to_arr(data['imageData'])
            lbl, lbl_names = utils.labelme_shapes_to_label(img.shape, data['shapes'])
            captions = ['%d: %s' % (l, name) for l, name in enumerate(lbl_names)]
            lbl_viz = utils.draw_label(lbl, img, captions)

            # one output folder per json file, e.g. 1.json -> 1_json,
            # created relative to the current working directory
            out_dir = osp.basename(json_name).replace('.', '_')
            out_dir = osp.join(osp.dirname(json_name), out_dir)
            # out_dir = osp.join('./png', out_dir)
            if not osp.exists(out_dir):
                os.mkdir(out_dir)

            PIL.Image.fromarray(img).save(osp.join(out_dir, '{}.png'.format(filename)))

            # save the label image both in its own folder and in the shared gt folder
            lbl = PIL.Image.fromarray(np.uint8(lbl))
            lbl.save(osp.join(out_dir, '{}_gt.png'.format(filename)))
            lbl.save(osp.join(gtFolder, '{}_gt.png'.format(filename)))
            # PIL.Image.fromarray(lbl).save(osp.join(out_dir, 'label.png'))

            PIL.Image.fromarray(lbl_viz).save(osp.join(out_dir, '{}_viz.png'.format(filename)))
            PIL.Image.fromarray(lbl_viz).save(osp.join(gtFolder, '{}_viz.png'.format(filename)))

            with open(osp.join(out_dir, 'label_names.txt'), 'w') as f:
                for lbl_name in lbl_names:
                    f.write(lbl_name + '\n')

            warnings.warn('info.yaml is being replaced by label_names.txt')
            info = dict(label_names=lbl_names)
            with open(osp.join(out_dir, 'info.yaml'), 'w') as f:
                yaml.safe_dump(info, f, default_flow_style=False)

            print('Saved to: %s' % out_dir)


if __name__ == '__main__':
    main()

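First cd into the trainning directory and activate the labelme environment there.

Then run labelme_json_to_dataset [path to the folder containing the json files]. This creates one folder per json file:

Opening one of them, 1_json, shows its contents, including the label image we want. Thanks to the changes above, there is no need to open the folders one by one to pick out the label images.

The label images are all gathered in the gt folder.

To make comparison easier, I also copied the corresponding viz images into this folder.

Then copy these label images into the trainning folder under annotations.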

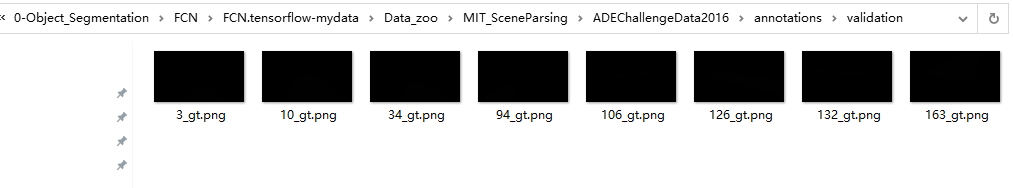

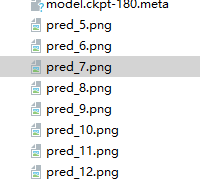

Convert the json files under validation in the same batch manner to obtain their label images.
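Copying the label images by hand is easy to get wrong, so the step can be scripted. The following is only a sketch: the helper `collect_label_images` is hypothetical, the `*_gt.png` pattern matches the file names written by the modified json_to_dataset.py, and the demo runs inside a temporary directory with placeholder files rather than on the real dataset.

```python
import shutil
import tempfile
from pathlib import Path


def collect_label_images(gt_dir, dest_dir, pattern="*_gt.png"):
    """Copy label images matching pattern from gt_dir into dest_dir; return their names."""
    gt_dir, dest_dir = Path(gt_dir), Path(dest_dir)
    dest_dir.mkdir(parents=True, exist_ok=True)
    copied = []
    for src in sorted(gt_dir.glob(pattern)):
        shutil.copy2(src, dest_dir / src.name)
        copied.append(src.name)
    return copied


# Demo with placeholder files (folder names below are illustrative only).
with tempfile.TemporaryDirectory() as tmp:
    gt = Path(tmp) / "gt"
    gt.mkdir()
    (gt / "1_gt.png").write_bytes(b"")
    (gt / "1_viz.png").write_bytes(b"")  # viz images are left behind
    copied = collect_label_images(gt, Path(tmp) / "annotations" / "trainning")

print(copied)
```

Filtering on the `_gt` suffix keeps the viz images, which are only for visual comparison, out of the annotation folder.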

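Next, modify the code. I will also upload my own project later.

The main changes are:

1. In read_MITSceneParsingData.py, comment out

# utils.maybe_download_and_extract(data_dir, DATA_URL, is_zipfile=True)

2. Because I use tensorflow 2.x, the following statements are needed for compatibility with 1.x code

import tensorflow.compat.v1 as tf
tf.disable_v2_behavior()

3. The image height and width are not equal, so the original settings cannot be kept. The original code uses a single IMAGE_SIZE; here it is replaced by IMAGE_SIZE1 = 288 (height) and IMAGE_SIZE2 = 512 (width).

4. In read_MITSceneParsingData.py, change the image extension to bmp

file_glob = os.path.join(image_dir, "images", directory, '*.' + 'bmp')

See my uploaded code for the remaining changes.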
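Regarding change 3, the main pitfall with non-square images is mixing up the axis order: the network input is laid out as [batch, height, width, channels]. The sketch below resizes a label image to 288 x 512 with nearest-neighbour sampling in plain NumPy, so that class ids are never blended. The helper `resize_nearest` and the 576 x 1024 example label are assumptions for illustration and are not taken from the uploaded project.

```python
import numpy as np

IMAGE_SIZE1 = 288  # target height
IMAGE_SIZE2 = 512  # target width


def resize_nearest(array, out_h, out_w):
    """Nearest-neighbour resize of an (H, W) or (H, W, C) array."""
    in_h, in_w = array.shape[:2]
    rows = np.arange(out_h) * in_h // out_h  # source row for each output row
    cols = np.arange(out_w) * in_w // out_w  # source column for each output column
    return array[rows[:, None], cols]


# Hypothetical label image: class 1 inside a rectangle, 0 elsewhere.
label = np.zeros((576, 1024), dtype=np.uint8)
label[300:500, 200:800] = 1

resized = resize_nearest(label, IMAGE_SIZE1, IMAGE_SIZE2)
# Add batch and channel axes to match an annotation input of shape [None, H, W, 1].
batch = resized[np.newaxis, :, :, np.newaxis]
print(batch.shape)  # (1, 288, 512, 1)
```

Keeping height first and width second everywhere (reader options, placeholders, reshapes) avoids shape errors that a single square IMAGE_SIZE would hide.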

The FCN code as configured for training:

from __future__ import print_function

# import tensorflow as tf
import tensorflow.compat.v1 as tf
tf.disable_v2_behavior()

import numpy as np
import TensorflowUtils as utils
import read_MITSceneParsingData as scene_parsing
import datetime
import BatchDatsetReader as dataset
from six.moves import xrange

FLAGS = tf.flags.FLAGS
tf.flags.DEFINE_integer("batch_size", 2, "batch size for training")
tf.flags.DEFINE_string("logs_dir", "logs/", "path to logs directory")
tf.flags.DEFINE_string("data_dir", "Data_zoo/MIT_SceneParsing/", "path to dataset")
tf.flags.DEFINE_float("learning_rate", 1e-4, "Learning rate for Adam Optimizer")
tf.flags.DEFINE_string("model_dir", "Model_zoo/", "Path to vgg model mat")
tf.flags.DEFINE_bool('debug', False, "Debug mode: True/ False")
tf.flags.DEFINE_string('mode', "train", "Mode train/ test/ visualize")

MODEL_URL = 'http://www.vlfeat.org/matconvnet/models/beta16/imagenet-vgg-verydeep-19.mat'

MAX_ITERATION = int(200)
print("max_iteration: " + str(MAX_ITERATION))
NUM_OF_CLASSESS = 2
IMAGE_SIZE1 = 288  # image height
IMAGE_SIZE2 = 512  # image width

# let TensorFlow grow GPU memory on demand instead of reserving all of it
config = tf.ConfigProto(gpu_options=tf.GPUOptions(allow_growth=True))

def vgg_net(weights, image):
    layers = (
        'conv1_1', 'relu1_1', 'conv1_2', 'relu1_2', 'pool1',

        'conv2_1', 'relu2_1', 'conv2_2', 'relu2_2', 'pool2',

        'conv3_1', 'relu3_1', 'conv3_2', 'relu3_2', 'conv3_3',
        'relu3_3', 'conv3_4', 'relu3_4', 'pool3',

        'conv4_1', 'relu4_1', 'conv4_2', 'relu4_2', 'conv4_3',
        'relu4_3', 'conv4_4', 'relu4_4', 'pool4',

        'conv5_1', 'relu5_1', 'conv5_2', 'relu5_2', 'conv5_3',
        'relu5_3', 'conv5_4', 'relu5_4'
    )

    net = {}
    current = image
    for i, name in enumerate(layers):
        kind = name[:4]
        if kind == 'conv':
            kernels, bias = weights[i][0][0][0][0]
            # matconvnet: weights are [width, height, in_channels, out_channels]
            # tensorflow: weights are [height, width, in_channels, out_channels]
            kernels = utils.get_variable(np.transpose(kernels, (1, 0, 2, 3)), name=name + "_w")
            bias = utils.get_variable(bias.reshape(-1), name=name + "_b")
            current = utils.conv2d_basic(current, kernels, bias)
        elif kind == 'relu':
            current = tf.nn.relu(current, name=name)
            if FLAGS.debug:
                utils.add_activation_summary(current)
        elif kind == 'pool':
            current = utils.avg_pool_2x2(current)
        net[name] = current

    return net

def inference(image, keep_prob):
    """
    Semantic segmentation network definition
    :param image: input image. Should have values in range 0-255
    :param keep_prob: keep probability used by the dropout layers
    :return: (predicted class map with shape [N, H, W, 1], per-pixel class logits)
    """
    print("setting up vgg initialized conv layers ...")
    # load the pretrained VGG-19 model
    model_data = utils.get_model_data(FLAGS.model_dir, MODEL_URL)

    mean = model_data['normalization'][0][0][0]
    mean_pixel = np.mean(mean, axis=(0, 1))

    weights = np.squeeze(model_data['layers'])

    processed_image = utils.process_image(image, mean_pixel)

    with tf.variable_scope("inference"):
        image_net = vgg_net(weights, processed_image)
        conv_final_layer = image_net["conv5_3"]

        pool5 = utils.max_pool_2x2(conv_final_layer)

        W6 = utils.weight_variable([7, 7, 512, 4096], name="W6")
        b6 = utils.bias_variable([4096], name="b6")
        conv6 = utils.conv2d_basic(pool5, W6, b6)
        relu6 = tf.nn.relu(conv6, name="relu6")
        if FLAGS.debug:
            utils.add_activation_summary(relu6)
        relu_dropout6 = tf.nn.dropout(relu6, keep_prob=keep_prob)

        W7 = utils.weight_variable([1, 1, 4096, 4096], name="W7")
        b7 = utils.bias_variable([4096], name="b7")
        conv7 = utils.conv2d_basic(relu_dropout6, W7, b7)
        relu7 = tf.nn.relu(conv7, name="relu7")
        if FLAGS.debug:
            utils.add_activation_summary(relu7)
        relu_dropout7 = tf.nn.dropout(relu7, keep_prob=keep_prob)

        W8 = utils.weight_variable([1, 1, 4096, NUM_OF_CLASSESS], name="W8")
        b8 = utils.bias_variable([NUM_OF_CLASSESS], name="b8")
        conv8 = utils.conv2d_basic(relu_dropout7, W8, b8)
        # annotation_pred1 = tf.argmax(conv8, dimension=3, name="prediction1")

        # now to upscale to actual image size
        # step 1: upsample x2 to the size of pool4 and fuse with pool4
        deconv_shape1 = image_net["pool4"].get_shape()
        W_t1 = utils.weight_variable([4, 4, deconv_shape1[3].value, NUM_OF_CLASSESS], name="W_t1")
        b_t1 = utils.bias_variable([deconv_shape1[3].value], name="b_t1")
        conv_t1 = utils.conv2d_transpose_strided(conv8, W_t1, b_t1, output_shape=tf.shape(image_net["pool4"]))
        fuse_1 = tf.add(conv_t1, image_net["pool4"], name="fuse_1")

        # step 2: upsample x2 to the size of pool3 and fuse with pool3
        deconv_shape2 = image_net["pool3"].get_shape()
        W_t2 = utils.weight_variable([4, 4, deconv_shape2[3].value, deconv_shape1[3].value], name="W_t2")
        b_t2 = utils.bias_variable([deconv_shape2[3].value], name="b_t2")
        conv_t2 = utils.conv2d_transpose_strided(fuse_1, W_t2, b_t2, output_shape=tf.shape(image_net["pool3"]))
        fuse_2 = tf.add(conv_t2, image_net["pool3"], name="fuse_2")

        # step 3: upsample x8 back to the input image size
        shape = tf.shape(image)
        deconv_shape3 = tf.stack([shape[0], shape[1], shape[2], NUM_OF_CLASSESS])
        W_t3 = utils.weight_variable([16, 16, NUM_OF_CLASSESS, deconv_shape2[3].value], name="W_t3")
        b_t3 = utils.bias_variable([NUM_OF_CLASSESS], name="b_t3")
        conv_t3 = utils.conv2d_transpose_strided(fuse_2, W_t3, b_t3, output_shape=deconv_shape3, stride=8)

        annotation_pred = tf.argmax(conv_t3, dimension=3, name="prediction")

    return tf.expand_dims(annotation_pred, dim=3), conv_t3

def train(loss_val, var_list):
    optimizer = tf.train.AdamOptimizer(FLAGS.learning_rate)
    grads = optimizer.compute_gradients(loss_val, var_list=var_list)
    if FLAGS.debug:
        # print(len(var_list))
        for grad, var in grads:
            utils.add_gradient_summary(grad, var)
    return optimizer.apply_gradients(grads)

def main(argv=None):
    keep_probability = tf.placeholder(tf.float32, name="keep_probabilty")
    image = tf.placeholder(tf.float32, shape=[None, IMAGE_SIZE1, IMAGE_SIZE2, 3], name="input_image")
    annotation = tf.placeholder(tf.int32, shape=[None, IMAGE_SIZE1, IMAGE_SIZE2, 1], name="annotation")

    pred_annotation, logits = inference(image, keep_probability)
    tf.summary.image("input_image", image, max_outputs=2)
    tf.summary.image("ground_truth", tf.cast(annotation, tf.uint8), max_outputs=2)
    tf.summary.image("pred_annotation", tf.cast(pred_annotation, tf.uint8), max_outputs=2)
    loss = tf.reduce_mean((tf.nn.sparse_softmax_cross_entropy_with_logits(logits=logits,
                                                                          labels=tf.squeeze(annotation, squeeze_dims=[3]),
                                                                          name="entropy")))
    loss_summary = tf.summary.scalar("entropy", loss)

    trainable_var = tf.trainable_variables()
    if FLAGS.debug:
        for var in trainable_var:
            utils.add_to_regularization_and_summary(var)
    train_op = train(loss, trainable_var)

    print("Setting up summary op...")
    summary_op = tf.summary.merge_all()

    print("Setting up image reader...")
    # data_dir is the extracted dataset folder. read_dataset looks for a pickle file there;
    # in the original code, if it is missing, the zip is downloaded and extracted and the
    # records are then pickled
    train_records, valid_records = scene_parsing.read_dataset(FLAGS.data_dir)
    print(len(train_records))
    print(len(valid_records))

    print("Setting up dataset reader")
    image_options = {'resize': True, 'resize_size1': IMAGE_SIZE1, 'resize_size2': IMAGE_SIZE2}
    if FLAGS.mode == 'train':
        train_dataset_reader = dataset.BatchDatset(train_records, image_options)
    # the validation reader is needed in both train and visualize mode
    validation_dataset_reader = dataset.BatchDatset(valid_records, image_options)

    sess = tf.Session(config=config)

    print("Setting up Saver...")
    saver = tf.train.Saver()

    # create two summary writers to show training loss and validation loss in the same graph
    # need to create two folders 'train' and 'validation' inside FLAGS.logs_dir
    train_writer = tf.summary.FileWriter(FLAGS.logs_dir + '/train', sess.graph)
    validation_writer = tf.summary.FileWriter(FLAGS.logs_dir + '/validation')

    sess.run(tf.global_variables_initializer())
    ckpt = tf.train.get_checkpoint_state(FLAGS.logs_dir)
    if ckpt and ckpt.model_checkpoint_path:
        saver.restore(sess, ckpt.model_checkpoint_path)
        print("Model restored...")

    if FLAGS.mode == "train":
        for itr in xrange(MAX_ITERATION):
            train_images, train_annotations = train_dataset_reader.next_batch(FLAGS.batch_size)
            feed_dict = {image: train_images, annotation: train_annotations, keep_probability: 0.85}

            sess.run(train_op, feed_dict=feed_dict)

            if itr % 10 == 0:
                train_loss, summary_str = sess.run([loss, loss_summary], feed_dict=feed_dict)
                print("Step: %d, Train_loss:%g" % (itr, train_loss))
                train_writer.add_summary(summary_str, itr)

            if itr % 20 == 0:
                valid_images, valid_annotations = validation_dataset_reader.next_batch(FLAGS.batch_size)
                valid_loss, summary_sva = sess.run([loss, loss_summary], feed_dict={image: valid_images, annotation: valid_annotations,
                                                                                    keep_probability: 1.0})
                print("%s ---> Validation_loss: %g" % (datetime.datetime.now(), valid_loss))

                # add validation loss to TensorBoard
                validation_writer.add_summary(summary_sva, itr)
                saver.save(sess, FLAGS.logs_dir + "model.ckpt", itr)

    elif FLAGS.mode == "visualize":
        valid_images, valid_annotations = validation_dataset_reader.get_random_batch(8)
        pred = sess.run(pred_annotation, feed_dict={image: valid_images, annotation: valid_annotations,
                                                    keep_probability: 1.0})
        valid_annotations = np.squeeze(valid_annotations, axis=3)
        pred = np.squeeze(pred, axis=3)

        for itr in range(8):
            utils.save_image(valid_images[itr].astype(np.uint8), FLAGS.logs_dir, name="inp_" + str(5+itr))
            utils.save_image(valid_annotations[itr].astype(np.uint8), FLAGS.logs_dir, name="gt_" + str(5+itr))
            utils.save_image(pred[itr].astype(np.uint8), FLAGS.logs_dir, name="pred_" + str(5+itr))
            print("Saved image: %d" % itr)


if __name__ == "__main__":
    tf.app.run()

Run the script and training begins.
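The loss printed during training is the mean per-pixel sparse softmax cross-entropy between the network's class scores and the label image. As a rough NumPy sketch of that quantity (the function name `pixelwise_cross_entropy` and the tiny 2 x 2 example are made up for illustration; the training script itself relies on TensorFlow's built-in op):

```python
import numpy as np


def pixelwise_cross_entropy(logits, labels):
    """Mean cross-entropy; logits has shape (H, W, C), labels has shape (H, W) with int class ids."""
    # subtract the per-pixel maximum for numerical stability
    shifted = logits - logits.max(axis=-1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))
    # pick the log-probability of the true class at every pixel
    picked = np.take_along_axis(log_probs, labels[..., None], axis=-1)
    return float(-picked.mean())


# With all-zero logits both classes get probability 0.5 at every pixel.
logits = np.zeros((2, 2, 2))
labels = np.array([[0, 1], [1, 0]])
loss = pixelwise_cross_entropy(logits, labels)
print(loss)
```

For two classes, a model that predicts both classes as equally likely has a loss of ln 2 (about 0.693), which is a useful reference point when reading the first few training steps.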

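After training finishes, we can run prediction on some images to check the results:

To predict, only the following statement needs to change:

tf.flags.DEFINE_string('mode', "visualize", "Mode train/ test/ visualize")

The predictions for the 8 validation images then appear under logs.

However, the pixel values in these images are at most 1 (the class indices 0 and 1), so the images look completely black. The following code can be used to view them: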

#!/usr/bin/env python
# -*- coding: utf-8 -*-
# author:Icecream.Shao
from skimage import io, color
import cv2 as cv
import numpy as np

# img_name='Data_zoo/MIT_SceneParsing/ADEChallengeData2016/images/validation/gt/img_000495_bad_gt.png'
# img=io.imread(img_name,as_grey=False)
img_name = 'logs/pred_8.png'
img = io.imread(img_name)

# rgb2gray expects an RGB image; a single-channel prediction is used as-is
img_gray = color.rgb2gray(img) if img.ndim == 3 else img

# binarize: values above 0.5 become 1, everything else becomes 0
img_gray = np.where(img_gray <= 0.5, 0, 1).astype(img_gray.dtype)
io.imshow(img_gray)
io.show()

cv.imshow("original", img)
cv.waitKey(0)

# Otsu's method picks a global threshold automatically; with THRESH_OTSU the
# threshold argument (0 here) can be any number and has no effect
ret, binary = cv.threshold(img, 0, 255, cv.THRESH_BINARY | cv.THRESH_OTSU)
print("threshold: %s" % ret)
cv.imshow("OTSU", binary)
cv.waitKey(0)

# ret, binary = cv.threshold(gray, 150, 255, cv.THRESH_BINARY)  # custom threshold of 150: above is white, below is black
# print("threshold: %s" % ret)
# cv.imshow("custom", binary)

The prediction result looks like this:

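The original image:

The annotation made earlier:

The prediction is fairly close to the annotation, and further training should improve it.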
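To put a number on "fairly close", one common choice is the intersection over union (IoU) between the predicted mask and the label image. The snippet below is a small sketch with made-up 2 x 3 arrays; the helper `binary_iou` is not part of the project, and no IoU was measured in this post.

```python
import numpy as np


def binary_iou(pred, target):
    """IoU of the foreground (non-zero) pixels of two masks with the same shape."""
    pred = np.asarray(pred).astype(bool)
    target = np.asarray(target).astype(bool)
    union = np.logical_or(pred, target).sum()
    if union == 0:
        return 1.0  # both masks are empty, so they agree completely
    return float(np.logical_and(pred, target).sum() / union)


pred = np.array([[0, 1, 1],
                 [0, 1, 0]])
gt = np.array([[0, 1, 0],
               [0, 1, 1]])
score = binary_iou(pred, gt)
print(score)  # 0.5
```

In practice the two arrays would be the saved pred_ and gt_ images loaded as arrays, compared image by image over the validation set.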

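Finally, here is the whole project. I removed the models and prediction images from the logs folder, so if you are interested, download it and train it yourself.

Link: https://pan.baidu.com/s/1VZfEupbQK5O1mfBkGXQbQw

Extraction code: xr9k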