Robot SLAM and Autonomous Navigation (1): Theoretical Basis

Overview

SLAM (simultaneous localization and mapping) can be described as follows: a robot starts moving from an unknown location in an unknown environment. As it moves, it localizes itself using its position estimate and the map, and at the same time builds the map incrementally, which makes autonomous localization and navigation possible.

Imagine a blind person in an unknown environment. To perceive his surroundings, he stretches out his hands as "sensors" and keeps probing for obstacles around him. These "sensors" have a limited range, so he has to keep moving while integrating what he has already perceived into a map in his mind. When a newly explored area seems to be a place he has visited before, he corrects the map in his mind and, at the same time, his estimate of his current location. Because his perception is limited, the information he gathers contains errors, so he assigns each detected obstacle a probability value according to how certain he is: the greater the value, the more likely it is that an obstacle is really there. This scene of a blind person exploring an unknown environment captures the main process of a SLAM algorithm.
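The probability bookkeeping in this analogy is often implemented as an occupancy grid in which each cell stores the log-odds of being occupied. The following is a minimal sketch of that idea for a single cell, not the method of any particular SLAM package. The function names and the sensor model values `p_hit=0.7` and `p_miss=0.4` are assumptions chosen for illustration.

```python
import math


def logit(p):
    """Convert a probability to log-odds."""
    return math.log(p / (1.0 - p))


def update_cell(log_odds, hit, p_hit=0.7, p_miss=0.4):
    """Fold one observation into a cell's log-odds.

    hit=True means the sensor reported an obstacle in this cell.
    p_hit and p_miss are assumed inverse sensor model values.
    """
    return log_odds + logit(p_hit if hit else p_miss)


def probability(log_odds):
    """Convert log-odds back to an occupancy probability."""
    return 1.0 - 1.0 / (1.0 + math.exp(log_odds))


# Start from an uninformed prior (probability 0.5, log-odds 0.0).
cell = 0.0
for observation in [True, True, False, True]:
    cell = update_cell(cell, observation)

print(round(probability(cell), 3))
```

Each consistent "hit" pushes the cell's probability toward 1, while a contradicting "miss" pulls it back, which mirrors how the explorer's confidence in an obstacle grows with repeated contact.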

Homes, shopping malls, stations, and similar places are the main application scenarios for indoor robots. In these applications, users need robots to complete tasks by moving around, which requires the robots to move and localize themselves autonomously. Such functions are collectively referred to as autonomous navigation. Autonomous navigation is inseparable from SLAM, because the map generated by SLAM is the main blueprint for the robot's autonomous movement. The problem can be summarized as follows: within the working space of a service robot, use the robot's own localization and navigation system to find an optimal, obstacle-free path from the start state to the target state.
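To make the path-finding statement concrete, here is a small sketch that searches a grid map for a shortest obstacle-free path. The text does not name a specific planner; A* with a Manhattan-distance heuristic on a 4-connected grid is used here purely as an illustration, and the `astar` function and the example grid are hypothetical.

```python
import heapq


def astar(grid, start, goal):
    """Return a shortest 4-connected path as a list of (row, col), or None.

    grid is a list of rows; 0 marks a free cell and 1 marks an obstacle.
    """
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance never overestimates on a 4-connected grid.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]
    came_from = {start: None}
    cost = {start: 0}

    while open_heap:
        _, g, current = heapq.heappop(open_heap)
        if current == goal:
            # Walk the parent links back to the start.
            path = []
            while current is not None:
                path.append(current)
                current = came_from[current]
            return path[::-1]
        if g > cost[current]:
            continue  # Stale heap entry; a cheaper route was already found.
        r, c = current
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_g = g + 1
                if new_g < cost.get(nxt, float("inf")):
                    cost[nxt] = new_g
                    came_from[nxt] = current
                    heapq.heappush(open_heap, (new_g + heuristic(nxt), new_g, nxt))
    return None


grid = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
    [0, 1, 1, 0],
]
path = astar(grid, (0, 0), (3, 0))
print(path)
```

In a real navigation stack the grid would come from the SLAM map, and "optimal" may also account for clearance from obstacles or robot kinematics rather than step count alone.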

To complete the robot's SLAM and autonomous navigation, the robot must first have the ability to perceive the surrounding environment, especially the ability to perceive the depth information of the surrounding environment, because this is the key data for detecting obstacles. The sensors used to obtain depth information mainly include the following types.

1. Lidar

Lidar is the most thoroughly researched and most mature depth sensor. It provides the distance between the robot body and obstacles in the environment. Its advantages are high accuracy, fast response, and a small data volume, which allow it to handle real-time SLAM tasks; its disadvantage is high cost: an imported high-precision lidar costs more than 10,000 yuan.
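As a rough illustration of why lidar data is compact, a 2D scan is essentially a list of ranges at evenly spaced angles, and turning it into obstacle points in the sensor frame takes only a polar-to-Cartesian conversion. The sketch below assumes such a scan layout; the function and parameter names (`scan_to_points`, `angle_min`, `angle_increment`, `range_max`) are illustrative and not taken from the text.

```python
import math


def scan_to_points(ranges, angle_min, angle_increment, range_max):
    """Convert a 2D range scan to (x, y) points in the sensor frame.

    ranges are distances in meters; beam i points at
    angle_min + i * angle_increment (radians). Readings that are
    non-positive, NaN, infinite, or beyond range_max are skipped.
    """
    points = []
    for i, r in enumerate(ranges):
        if not (0.0 < r <= range_max):
            continue  # Also rejects NaN and inf, since the comparison fails.
        angle = angle_min + i * angle_increment
        points.append((r * math.cos(angle), r * math.sin(angle)))
    return points


# Three beams at 0, 90, and 180 degrees; the middle one saw nothing.
points = scan_to_points([1.0, float("inf"), 2.0], 0.0, math.pi / 2, 10.0)
print(points)
```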

2. Camera

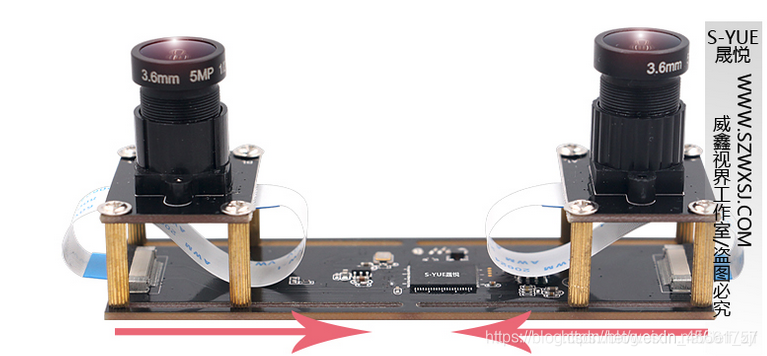

The cameras used in SLAM fall into two types. The first is the monocular camera, where a single camera is used to complete SLAM. The sensor in this scheme is simple and widely applicable, but the implementation is more complex: a monocular camera cannot measure distance while stationary and can only infer distance by triangulation while it is moving. The second is the binocular (stereo) camera. Compared with a monocular camera, this scheme can perceive distance whether it is moving or stationary. However, calibrating the two cameras is more complicated, and the large amount of image data leads to a heavy computational load.

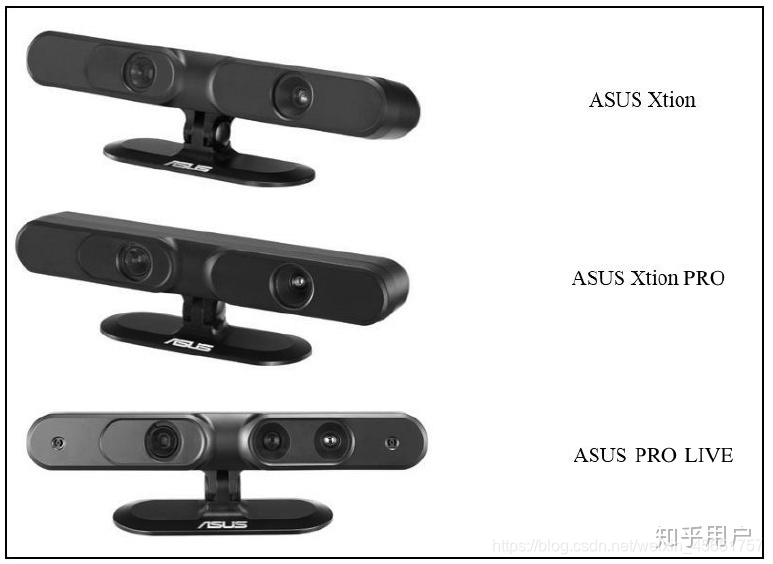
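For the binocular case, distance follows from the standard triangulation relation for a rectified stereo pair: depth equals focal length times baseline divided by disparity. A minimal sketch, assuming an ideal rectified pair; the function name and the example focal length, baseline, and disparity are hypothetical values for illustration.

```python
def stereo_depth(disparity_px, focal_px, baseline_m):
    """Depth in meters of a point seen by a rectified stereo pair.

    disparity_px: horizontal pixel shift of the point between the two images.
    focal_px: focal length in pixels.
    baseline_m: distance between the two camera centers in meters.
    Returns None when the disparity is not positive (no valid match).
    """
    if disparity_px <= 0:
        return None
    return focal_px * baseline_m / disparity_px


# Assumed example values: 700 px focal length, 12 cm baseline.
near = stereo_depth(70.0, 700.0, 0.12)
far = stereo_depth(35.0, 700.0, 0.12)
print(near, far)
```

Halving the disparity doubles the estimated depth, which is why stereo accuracy degrades for distant objects. A moving monocular camera relies on the same geometry, with the baseline supplied by its own motion between frames.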

3. RGB-D camera

The RGB-D camera is a new type of sensor that has emerged in recent years. Like an ordinary camera, it captures RGB images of the environment, and it also obtains the depth of each pixel through principles such as infrared structured light and Time-of-Flight. This rich data allows RGB-D cameras to be used not only for SLAM but also for image processing, object recognition, and other applications. Most importantly, RGB-D cameras are low in cost, which has made them the mainstream sensor solution for indoor service robots. Common RGB-D cameras include the Kinect v1/v2 and the ASUS Xtion Pro. RGB-D cameras also have shortcomings, such as a narrow measurement field of view, large blind areas, and high noise.
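Because an RGB-D camera reports a depth for every pixel, each pixel can be turned into a 3D point with the pinhole camera model. The sketch below assumes an undistorted image with depth already in meters; the `deproject` function and the intrinsic values (`fx`, `fy`, `cx`, `cy`) are hypothetical and do not describe any specific device.

```python
def deproject(u, v, depth_m, fx, fy, cx, cy):
    """Back-project pixel (u, v) with known depth into the camera frame.

    fx, fy: focal lengths in pixels; cx, cy: principal point in pixels.
    Returns (x, y, z) in meters, with z along the optical axis.
    """
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


# Assumed intrinsics for a 640x480 image.
point = deproject(420, 240, 2.0, fx=500.0, fy=500.0, cx=320.0, cy=240.0)
print(point)
```

Applying this to every valid depth pixel yields a point cloud, which is the form in which RGB-D data is typically passed on to mapping or obstacle detection.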