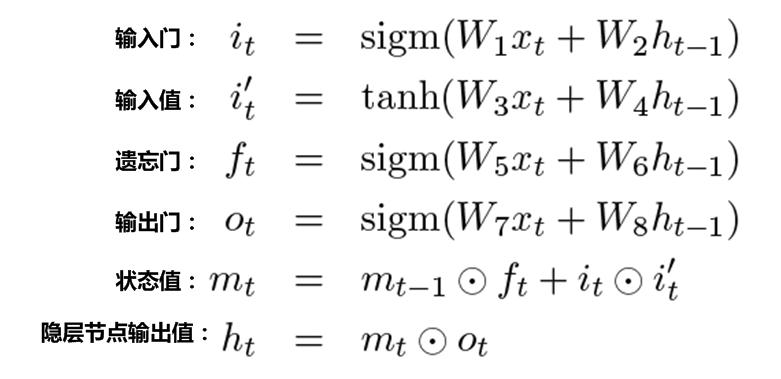

The image above shows the LSTM update equations. The first step is to locate the gates, and that is easy: the three sigmoid functions are the three gates. A sigmoid outputs values between 0 and 1, which maps naturally onto the physical meaning of a gate, from fully closed (0) to fully open (1).
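For readers who cannot see the image, the standard LSTM equations can be written in this post's notation as follows. This is a reconstruction of the common formulation and may not match the image exactly: the parameter names $W_*$, $U_*$, $b_*$ are assumptions, and some variants (for example, with peephole connections) add extra terms to the gates.

$$
\begin{aligned}
i(t) &= \sigma\left(W_i\,x(t) + U_i\,h(t-1) + b_i\right) \\
f(t) &= \sigma\left(W_f\,x(t) + U_f\,h(t-1) + b_f\right) \\
o(t) &= \sigma\left(W_o\,x(t) + U_o\,h(t-1) + b_o\right) \\
i'(t) &= \tanh\left(W_c\,x(t) + U_c\,h(t-1) + b_c\right) \\
m(t) &= f(t) \odot m(t-1) + i(t) \odot i'(t) \\
h(t) &= o(t) \odot \tanh\left(m(t)\right)
\end{aligned}
$$

Here $\sigma$ is the sigmoid function and $\odot$ is elementwise multiplication; the three $\sigma(\cdot)$ lines are the three gates.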

The meaning of each gate is then clear:

The input gate controls how much of the current input i'(t) is allowed to flow in, or whether it may enter at all;

The output gate controls how much of the state value m(t) at time t is visible to the outside;

The forget gate controls how much of the historical state m(t-1), carried forward through the RNN, is allowed to flow into time t.
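All three gates work the same way: a sigmoid squashes a score into (0, 1), and the result multiplies the signal elementwise. The sketch below is a minimal illustration in Python with NumPy; `apply_gate` is a hypothetical helper, and the logits are hand-picked to show a nearly closed, a half-open, and a nearly open gate.

```python
import numpy as np

def sigmoid(z):
    """Logistic sigmoid: maps any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def apply_gate(gate_logits, signal):
    """Hypothetical helper: scale `signal` elementwise by a sigmoid gate.

    A gate value near 0 blocks the corresponding component ("closed"),
    near 1 lets it through unchanged ("open"), and values in between
    let a fraction of it through.
    """
    gate = sigmoid(gate_logits)
    return gate * signal, gate

signal = np.array([2.0, 2.0, 2.0])
# Very negative logit -> nearly closed, zero -> half open, very positive -> nearly open.
logits = np.array([-10.0, 0.0, 10.0])
passed, gate = apply_gate(logits, signal)
print(np.round(gate, 4))    # gate openness per component
print(np.round(passed, 4))  # how much of the signal got through
```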

The figures below show how each of the three gates acts in the common LSTM diagram. Note that m(t) in the formulas above corresponds to C(t), the cell state, in that diagram.

*Figures: the forget gate, the input gate, and the output gate.*

LSTM is an RNN model: the node at time t is determined not only by the current input x(t) but also by the hidden-layer output h(t-1) from time t-1, which carries the influence of history on the present. Accordingly, how far each gate opens is determined by both the current input x(t) and h(t-1). Compared with a gate that looks only at the current input, this dependence on h(t-1) is the only difference.
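To make the dependence on both x(t) and h(t-1) concrete, here is a minimal NumPy sketch of the three gate computations. It assumes the standard form σ(W·x(t) + U·h(t-1) + b); the sizes, the random weights, and the `params`/`gates` names are illustrative assumptions, not trained values.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.default_rng(0)
input_size, hidden_size = 4, 3  # illustrative sizes, not from the post

# Hypothetical parameters: one (W, U, b) triple per gate.
params = {
    name: (
        rng.normal(scale=0.5, size=(hidden_size, input_size)),   # W: acts on x(t)
        rng.normal(scale=0.5, size=(hidden_size, hidden_size)),  # U: acts on h(t-1)
        np.zeros(hidden_size),                                   # b: bias
    )
    for name in ("input", "forget", "output")
}

def gates(x_t, h_prev):
    """Compute i(t), f(t), o(t); each depends on BOTH x(t) and h(t-1)."""
    out = {}
    for name, (W, U, b) in params.items():
        out[name] = sigmoid(W @ x_t + U @ h_prev + b)
    return out

x_t = rng.normal(size=input_size)
g_no_history = gates(x_t, np.zeros(hidden_size))
g_with_history = gates(x_t, np.ones(hidden_size))
# Same x(t), different h(t-1) -> different gate openings.
for name in params:
    print(name, np.round(g_no_history[name], 3), np.round(g_with_history[name], 3))
```

Feeding the same x(t) with two different h(t-1) values generally yields different gate openings, which is exactly the effect of history on the gates.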

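The key, then, lies in two functions: the LSTM's state update function and its hidden-layer output function. The state update function is:

$$m(t) = f(t) \odot m(t-1) + i(t) \odot i'(t)$$

where $\odot$ denotes elementwise multiplication.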

f(t) is the forget gate and m(t-1) is the historical state. Their product determines how much historical information is allowed into time t to help determine the current state m(t). If the forget gate is fully closed (value 0), history has no effect on the current state. If it is fully open (value 1), the historical information is passed to time t without any loss. In practice the value usually lies between 0 and 1, meaning that only part of the historical information flows in;

i(t) is the input gate and i'(t) is the input value at the current time t. Their product determines how much of the current input is allowed in at time t to help determine the current state m(t). If the input gate is fully closed (value 0), the LSTM ignores the current input, as if it had skipped over it without seeing it. If the input gate is fully open (value 1), the current input contributes to m(t) in full, without any loss. In practice the value usually lies between 0 and 1, meaning that only part of the input information flows in;

Combining these two gated contributions, the influence of history and the influence of the current input, yields the state value m(t) of the hidden-layer node at time t.
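The state update can be checked directly on the limiting cases described above. This minimal sketch assumes elementwise products, as in the standard LSTM; `update_state` and the numbers are illustrative only.

```python
import numpy as np

def update_state(f_t, m_prev, i_t, i_cand):
    """m(t) = f(t) * m(t-1) + i(t) * i'(t), all elementwise."""
    history_part = f_t * m_prev   # how much of m(t-1) flows into time t
    input_part = i_t * i_cand     # how much of the current input i'(t) flows in
    return history_part + input_part

m_prev = np.array([1.0, -2.0, 0.5])   # illustrative historical state m(t-1)
i_cand = np.array([0.3, 0.3, 0.3])    # illustrative current input i'(t)
ones, zeros = np.ones(3), np.zeros(3)

# Forget gate closed, input gate open: history is discarded, only the input remains.
only_input = update_state(zeros, m_prev, ones, i_cand)
# Forget gate open, input gate closed: history passes through unchanged, the input is skipped.
only_history = update_state(ones, m_prev, zeros, i_cand)
# Both half open: partial inflow of history and of the input.
mixed = update_state(0.5 * ones, m_prev, 0.5 * ones, i_cand)
print(only_input, only_history, mixed)
```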

The hidden-layer output h(t) is easy to understand: it is how much of the current state m(t) is made visible to the outside through the output gate. m(t) is hidden state information; apart from being passed along to the hidden layer at time t+1, it cannot be seen anywhere else, whereas h(t) is visible externally.
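Putting the pieces together, one LSTM time step can be sketched as below. This assumes the standard formulation with h(t) = o(t) ⊙ tanh(m(t)); the stacked parameter layout (input, forget, output, candidate), the sizes, and the random weights are arbitrary choices for illustration. Note that m(t) is only handed on to the next step, while h(t) is what the outside sees.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, m_prev, W, U, b):
    """One LSTM step in the post's notation (standard formulation assumed).

    W: (4*H, D), U: (4*H, H), b: (4*H,) hold the stacked parameters for
    the input gate, forget gate, output gate and candidate input i'(t).
    """
    H = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b
    i_t = sigmoid(z[0:H])          # input gate
    f_t = sigmoid(z[H:2 * H])      # forget gate
    o_t = sigmoid(z[2 * H:3 * H])  # output gate
    i_cand = np.tanh(z[3 * H:])    # candidate input i'(t)
    m_t = f_t * m_prev + i_t * i_cand   # state update: internal, not exposed
    h_t = o_t * np.tanh(m_t)            # output gate decides what is visible
    return h_t, m_t

rng = np.random.default_rng(1)
D, H = 4, 3
W = rng.normal(scale=0.5, size=(4 * H, D))
U = rng.normal(scale=0.5, size=(4 * H, H))
b = np.zeros(4 * H)

h, m = np.zeros(H), np.zeros(H)
for t in range(5):                  # run a short illustrative sequence
    x_t = rng.normal(size=D)
    h, m = lstm_step(x_t, h, m, W, U, b)
print("h(t) =", np.round(h, 3))
print("m(t) =", np.round(m, 3))
```

Pushing the output-gate bias strongly negative closes the output gate: h(t) drops to almost zero while m(t) is unchanged, which matches the description of the output gate as controlling only visibility.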

This is how LSTM expresses its operating logic with three gates and a separately extracted memory state m. In essence it is the same as an RNN: it still just combines the influence of history with the influence of the current input. Compared with a plain RNN, however, the gates adaptively control the flow of information based on the history and the input. More importantly, the separately extracted state m and the way it is passed backward greatly alleviate the vanishing-gradient problem. Since gating is today's main topic, I will not expand on that here.

Many other deep learning models have also introduced gate functions. The idea is essentially the same as the pig family's gate-control system introduced above: gate functions control how much information flows through. How should we think about models "with gates" versus models "without gates" in computational terms? You can assume that every model has a gate by default, and that "no gate" is just a special case. Why? Because "no gate" is equivalent to a gate that is always fully open, never closed or half-closed. So what does adding a gate actually do? It adds control: in some cases it lets information in, and in others it does not. For example, when it sees x it keeps the information out, and when it sees y it lets it in.
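The claim that "no gate" is just a gate stuck fully open, and that a gate adds input-dependent control, can be illustrated in a few lines. In this sketch the function names and the hand-picked weights `w` and `b` are hypothetical; a real model would learn them.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def no_gate(x):
    """A plain layer: all of x always flows through."""
    return x

def fixed_gate(x, g):
    """A gate with a fixed opening g in [0, 1]; g = 1 reproduces `no_gate`."""
    return g * x

def input_dependent_gate(x, w, b):
    """A hypothetical learned gate whose opening depends on the input itself.

    With suitable w and b it can let some inputs in and keep others out.
    """
    return sigmoid(w @ x + b) * x

x = np.array([1.0, 0.0])     # illustrative input "x"
y = np.array([0.0, 1.0])     # illustrative input "y"
w = np.array([-20.0, 20.0])  # hand-picked so the gate closes on x and opens on y
b = 0.0

print(np.allclose(fixed_gate(x, 1.0), no_gate(x)))  # "no gate" == always-open gate
print(input_dependent_gate(x, w, b))  # almost nothing from x gets through
print(input_dependent_gate(y, w, b))  # y passes almost unchanged
```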

|Other analogies

To make the role of the gate function easier to grasp, we used a real-life gate as the analogy above. In fact, many everyday devices play a similar role, such as a faucet. When the faucet is on, water flows; when it is off, the supply is cut off. Open it wider and the flow increases; open it less and the flow decreases. The gate function in deep learning works just like adjusting a faucet, except that what it controls is the flow of information rather than water.

As another example, a gate function is analogous to the dimmer on a lamp. Turning the dimmer up increases the light intensity, and turning it down decreases it. This analogy also illustrates the role of the gate function vividly.

To sum up, what role do all these everyday gates play? They act as a regulating valve. Opening or closing the valve controls whether something flows in, and adjusting how far it is open controls how much flows in. Any everyday device that works as a regulating valve can therefore serve as an analogy for a gate function.