Overview:

- What is Netty?

- Why use Netty?

- Do you understand Netty application scenarios?

- What are the core components of Netty? What is the role of each?

- Do you understand EventLoopGroup? What is its relationship with EventLoop?

- Do you know Bootstrap and ServerBootstrap?

- How many threads will the default constructor of NioEventLoopGroup start?

- Do you understand the Netty threading model?

- Do you understand the startup process of Netty server and client?

- Do you understand Netty's long connection and heartbeat mechanism?

- Do you understand Netty's zero copy?

What is Netty?

- Netty is an NIO-based client-server framework for developing network applications quickly and easily.

- It greatly simplifies and streamlines network programming such as TCP and UDP socket servers, and in many respects it also delivers better performance and security.

- It supports multiple protocols, such as FTP, SMTP, and HTTP, as well as various binary and text-based legacy protocols.

The official summary is: Netty has succeeded in finding a way to achieve ease of development, performance, stability, and flexibility without a compromise.

In addition, many open source projects that we commonly use, such as Dubbo, RocketMQ, Elasticsearch, and gRPC, are built on Netty.

Why use Netty?

Because Netty has the following advantages and is easier to use than the NIO-related API that ships with the JDK:

- A unified API that supports multiple transport types, both blocking and non-blocking.

- A simple yet powerful threading model.

- Built-in codecs that solve the TCP sticking/unpacking problem.

- Ready-made support for various protocol stacks.

- True connectionless datagram socket support.

- Compared with using the Java core API directly, it offers higher throughput, lower latency, lower resource consumption, and less memory copying.

- Good security, with complete SSL/TLS and StartTLS support.

- An active community.

- It is mature and stable, has been proven in large-scale projects, and is used by many open source projects that we work with regularly, such as Dubbo and RocketMQ.

Do you understand Netty application scenarios?

In theory, anything NIO can do, Netty can do too, and do it better. Netty is mainly used for network communication:

- As the network communication layer of an RPC framework: In a distributed system, service nodes often need to call each other, which is what an RPC framework is for. The communication between the nodes can be implemented with Netty. For example, to call a method on another node, the caller must at least tell the other side which method of which class is being called and with what parameters.

- Implementing your own HTTP server: With Netty we can implement a simple HTTP server ourselves. HTTP servers should be familiar to everyone; in Java back-end development, Tomcat is the one we use most. A basic HTTP server can handle requests using the common HTTP methods, such as POST and GET.

- Implementing an instant messaging system: With Netty we can build an instant messaging system for chatting, similar to WeChat. There are many open source projects in this area, which you can find on GitHub.

- Implementing a message push system: Many message push systems on the market are based on Netty.

What are the core components of Netty? What is the role of each?

1. Channel

The Channel interface is Netty's abstraction for network operations. It covers basic I/O operations such as bind(), connect(), read(), and write().

The most commonly used implementations of the Channel interface are NioServerSocketChannel (server) and NioSocketChannel (client). These two Channels correspond to ServerSocket and Socket in the BIO programming model. The API provided by Netty's Channel interface greatly reduces the complexity of working with the Socket class directly.

2. EventLoop

The EventLoop (event loop) interface is arguably the most central concept in Netty. EventLoop defines Netty's core abstraction for handling the events that occur during the lifetime of a connection. To put it plainly, the main job of an EventLoop is to listen for network events and call event handlers to perform the related I/O operations.

How are Channel and EventLoop related?

Channel is Netty's abstraction for network operations (reads and writes). An EventLoop handles the I/O operations of the Channels registered with it; the two work together to carry out I/O.

3. ChannelFuture

Netty is asynchronous and non-blocking, and all I/O operations are asynchronous.

Therefore, we cannot know immediately whether an operation succeeded. Instead, we can register a ChannelFutureListener through the addListener() method of the ChannelFuture interface; when the operation succeeds or fails, the listener is triggered automatically with the result. The associated Channel can be obtained through the channel() method of ChannelFuture. We can also block until an asynchronous operation completes, effectively making it synchronous, through the sync() method of the ChannelFuture interface.
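The difference between the listener style and the blocking style can be illustrated without Netty. The sketch below uses the JDK's CompletableFuture purely as a stand-in for ChannelFuture (an analogy, not Netty's API): whenComplete plays the role of addListener(), and join() plays the role of sync(). The class FutureStyleSketch and the fakeConnect() method are hypothetical names invented for this illustration.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.atomic.AtomicReference;

class FutureStyleSketch {

    // Hypothetical asynchronous operation standing in for a connect() call.
    static CompletableFuture<String> fakeConnect(boolean succeed) {
        return CompletableFuture.supplyAsync(() -> {
            if (!succeed) {
                throw new IllegalStateException("connection refused");
            }
            return "connected";
        });
    }

    // Listener style: register a callback that records the outcome when the operation finishes.
    static CompletableFuture<String> listenerStyle(boolean succeed, AtomicReference<String> log) {
        return fakeConnect(succeed).whenComplete((result, error) ->
                log.set(error == null ? "success: " + result : "failure"));
    }

    // Blocking style: wait for the result, similar in spirit to sync().
    static String blockingStyle() {
        return fakeConnect(true).join();
    }

    public static void main(String[] args) {
        AtomicReference<String> log = new AtomicReference<>();
        // join() is used here only so that the demo prints after the callback has run.
        listenerStyle(true, log).join();
        System.out.println(log.get());
        System.out.println(blockingStyle());
    }
}
```

The listener style keeps the calling thread free, which is why it is generally preferred inside event-loop code; blocking is mostly useful in startup code such as a main method.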

4. ChannelHandler and ChannelPipeline

The following code should look familiar to anyone who has used Netty. It registers a serialization codec and a custom ChannelHandler to process messages.

    b.group(eventLoopGroup)
            .handler(new ChannelInitializer<SocketChannel>() {
                @Override
                protected void initChannel(SocketChannel ch) {
                    // Decode incoming bytes into RpcResponse objects
                    ch.pipeline().addLast(new NettyKryoDecoder(kryoSerializer, RpcResponse.class));
                    // Encode outgoing RpcRequest objects into bytes
                    ch.pipeline().addLast(new NettyKryoEncoder(kryoSerializer, RpcRequest.class));
                    // Custom handler that processes the decoded messages
                    ch.pipeline().addLast(new KryoClientHandler());
                }
            });

ChannelHandler is the concrete processor of messages. It is responsible for handling read and write operations, client connections, and so on. ChannelPipeline is a chain of ChannelHandlers: it acts as their container and defines the API for propagating inbound and outbound events along the chain. When a Channel is created, a dedicated ChannelPipeline is automatically assigned to it. We can add one or more ChannelHandlers to a ChannelPipeline through the addLast() method, because a piece of data or an event may need to be processed by several handlers. When one ChannelHandler has finished processing, it hands the data over to the next ChannelHandler.
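The idea of a handler chain can be sketched in a few lines of plain Java. This is a simplified model and not Netty's implementation: the class PipelineSketch, its addLast() and fireRead() methods, and the three example handlers are all hypothetical, and each handler is reduced to a function whose output becomes the next handler's input.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import java.util.function.Function;

class PipelineSketch {
    // Handlers run in the order in which they were added, like inbound handlers in a pipeline.
    private final List<Function<Object, Object>> handlers = new ArrayList<>();

    PipelineSketch addLast(Function<Object, Object> handler) {
        handlers.add(handler);
        return this;
    }

    // Pass a message through every handler; each output becomes the next handler's input.
    Object fireRead(Object msg) {
        Object current = msg;
        for (Function<Object, Object> handler : handlers) {
            current = handler.apply(current);
        }
        return current;
    }

    // Example chain: decode bytes, normalize the text, then apply "business logic".
    static Object demo(byte[] wire) {
        return new PipelineSketch()
                .addLast(m -> new String((byte[]) m, StandardCharsets.UTF_8)) // decoder
                .addLast(m -> ((String) m).trim())                            // cleanup step
                .addLast(m -> "handled: " + m)                                // business handler
                .fireRead(wire);
    }

    public static void main(String[] args) {
        System.out.println(demo(" ping\n".getBytes(StandardCharsets.UTF_8)));
    }
}
```

One difference worth keeping in mind: in Netty a handler decides for itself whether to pass an event on (for example by calling fireChannelRead() on its ChannelHandlerContext), whereas this sketch always forwards.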

Do you understand EventLoopGroup? What is its relationship with EventLoop?

An EventLoopGroup contains multiple EventLoops (each EventLoop usually owns one thread). As mentioned above, the main job of an EventLoop is to listen for network events and call event handlers to perform the related I/O operations.

The I/O events handled by an EventLoop are processed on its dedicated Thread. In other words, Thread and EventLoop have a 1:1 relationship, which is what ensures thread safety.
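The 1:1 relationship can be sketched with JDK executors. This is a simplified model under stated assumptions, not Netty's implementation: the class EventLoopGroupSketch is hypothetical, each "event loop" is a single-thread executor, and a channel is mapped to a loop by taking its id modulo the group size (Netty instead picks a loop when the Channel is registered). The point is only that every task for a given channel runs on the same thread, so its handlers need no locking.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

class EventLoopGroupSketch {
    // One single-thread executor per "event loop": thread and loop are 1:1.
    private final List<ExecutorService> loops = new ArrayList<>();

    EventLoopGroupSketch(int nThreads) {
        for (int i = 0; i < nThreads; i++) {
            String name = "loop-" + i;
            loops.add(Executors.newSingleThreadExecutor(r -> {
                Thread t = new Thread(r, name);
                t.setDaemon(true); // do not keep the JVM alive for this demo
                return t;
            }));
        }
    }

    // The same channel id always maps to the same loop (simplifying assumption).
    ExecutorService loopFor(int channelId) {
        return loops.get(Math.floorMod(channelId, loops.size()));
    }

    // Run a task for the channel and report which thread executed it.
    String runOn(int channelId) {
        Callable<String> task = () -> Thread.currentThread().getName();
        try {
            return loopFor(channelId).submit(task).get(5, TimeUnit.SECONDS);
        } catch (Exception e) {
            throw new IllegalStateException(e);
        }
    }

    void shutdown() {
        loops.forEach(ExecutorService::shutdown);
    }

    public static void main(String[] args) {
        EventLoopGroupSketch group = new EventLoopGroupSketch(2);
        System.out.println(group.runOn(0) + " " + group.runOn(1) + " " + group.runOn(2));
        group.shutdown();
    }
}
```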

The above figure is a rough block diagram of the EventLoopGroups used by the server, in which the Boss EventLoopGroup accepts connections and the Worker EventLoopGroup does the actual processing (reading and writing messages and other logic).

As can be seen from the above figure: when a client connects to the server through the connect method, the bossGroup handles the connection request. Once the connection has been accepted, it is handed over to the workerGroup, which is then responsible for its I/O operations.

Do you know Bootstrap and ServerBootstrap?

Bootstrap is the bootstrap (startup helper) class of the client. It is used as follows:

    EventLoopGroup group = new NioEventLoopGroup();
    try {
        // Create the client bootstrap (helper) class: Bootstrap
        Bootstrap b = new Bootstrap();
        // Specify the thread model
        b.group(group).…
        // Try to establish a connection
        ChannelFuture f = b.connect(host, port).sync();
        f.channel().closeFuture().sync();
    } finally {
        // Gracefully shut down the thread group
        group.shutdownGracefully();
    }

ServerBootstrap is the bootstrap (startup helper) class of the server. It is used as follows:

    // 1. bossGroup accepts connections, workerGroup handles the actual processing
    EventLoopGroup bossGroup = new NioEventLoopGroup(1);
    EventLoopGroup workerGroup = new NioEventLoopGroup();
    try {
        // 2. Create the server bootstrap (helper) class: ServerBootstrap
        ServerBootstrap b = new ServerBootstrap();
        // 3. Configure the two thread groups and thereby determine the thread model
        b.group(bossGroup, workerGroup).…
        // 6. Bind the port
        ChannelFuture f = b.bind(port).sync();
        // Wait for the connection to close
        f.channel().closeFuture().sync();
    } finally {
        // 7. Gracefully shut down the thread groups
        bossGroup.shutdownGracefully();
        workerGroup.shutdownGracefully();
    }

From the above example, we can see:

- Bootstrap usually uses the connect() method to connect to a remote host and port, acting as the client in TCP communication. Bootstrap can also bind a local port through the bind() method to act as one end of UDP communication.

- ServerBootstrap usually uses the bind() method to bind a local port and then waits for clients to connect.

- Bootstrap only needs one thread group (EventLoopGroup), while ServerBootstrap needs two: one for accepting connections and one for the actual processing.

How many threads will the default constructor of NioEventLoopGroup start?

    // 1. bossGroup accepts connections, workerGroup handles the actual processing
    EventLoopGroup bossGroup = new NioEventLoopGroup(1);
    EventLoopGroup workerGroup = new NioEventLoopGroup();

To find out how many threads the default constructor of NioEventLoopGroup creates, let's take a look at its source code.

    /**
     * No-argument constructor.
     * nThreads: 0
     */
    public NioEventLoopGroup() {
        // Call the next constructor
        this(0);
    }

    /**
     * Executor: null
     */
    public NioEventLoopGroup(int nThreads) {
        // Continue to call the next constructor
        this(nThreads, (Executor) null);
    }

    // Some constructors are omitted here

    /**
     * RejectedExecutionHandler: RejectedExecutionHandlers.reject()
     */
    public NioEventLoopGroup(int nThreads, Executor executor, final SelectorProvider selectorProvider,
                             final SelectStrategyFactory selectStrategyFactory) {
        // Call the constructor of the parent class
        super(nThreads, executor, selectorProvider, selectStrategyFactory, RejectedExecutionHandlers.reject());
    }

If you follow the calls all the way down, you will find the code that determines the number of threads in the MultithreadEventLoopGroup class:

    // DEFAULT_EVENT_LOOP_THREADS is the larger of 1 and the value of the
    // io.netty.eventLoopThreads system property; when the property is not set,
    // that value falls back to the number of CPU cores * 2
    private static final int DEFAULT_EVENT_LOOP_THREADS = Math.max(1, SystemPropertyUtil.getInt(
            "io.netty.eventLoopThreads", NettyRuntime.availableProcessors() * 2));

    // The parent class constructor that is eventually called; this is where the
    // thread count of the default NioEventLoopGroup constructor is decided.
    // When nThreads is 0, the default DEFAULT_EVENT_LOOP_THREADS is used
    protected MultithreadEventLoopGroup(int nThreads, ThreadFactory threadFactory, Object... args) {
        super(nThreads == 0 ? DEFAULT_EVENT_LOOP_THREADS : nThreads, threadFactory, args);
    }

In summary, unless the io.netty.eventLoopThreads system property says otherwise, the number of threads used by the default constructor of NioEventLoopGroup is the number of CPU cores * 2.
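The selection rule can be reproduced with the JDK alone. The following is a minimal sketch that mirrors the logic of the source shown above; it does not call Netty. The class EventLoopThreadsSketch and its method names are hypothetical, and the configured property value and core count are passed in as parameters so that the rule is easy to try out.

```java
class EventLoopThreadsSketch {

    // Mirrors DEFAULT_EVENT_LOOP_THREADS: the configured value if present,
    // otherwise cores * 2, and never less than 1.
    static int defaultThreads(Integer configured, int cores) {
        int fallback = cores * 2;
        return Math.max(1, configured != null ? configured : fallback);
    }

    // Mirrors the constructor: nThreads == 0 means "use the default".
    static int resolve(int nThreads, Integer configured, int cores) {
        return nThreads == 0 ? defaultThreads(configured, cores) : nThreads;
    }

    public static void main(String[] args) {
        // Integer.getInteger returns null when the system property is not set.
        Integer configured = Integer.getInteger("io.netty.eventLoopThreads");
        int cores = Runtime.getRuntime().availableProcessors();
        System.out.println("default constructor -> " + resolve(0, configured, cores) + " threads");
        System.out.println("new NioEventLoopGroup(1) -> " + resolve(1, configured, cores) + " thread");
    }
}
```

For example, on a 4-core machine with the property unset, resolve(0, null, 4) yields 8, while an explicit argument such as 1 is used as-is.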

In addition, if you keep reading the constructors, you will find that each NioEventLoopGroup object allocates a group of NioEventLoops of size nThreads, which together form a thread pool, with one NioEventLoop per thread. This matches the relationship between EventLoopGroup and EventLoop described above.

Do you understand the Netty threading model?

Most network frameworks are designed and developed based on the Reactor pattern.

The Reactor pattern is event-driven and uses I/O multiplexing to dispatch events to the corresponding Handler, which makes it well suited to scenarios with massive amounts of I/O.

Netty mainly relies on the NioEventLoopGroup thread pool to implement the specific threading model.

When we implement the server, we generally initialize two thread groups:

- bossGroup: Receive connection.

- workerGroup: Responsible for specific processing, which is handled by the corresponding Handler.

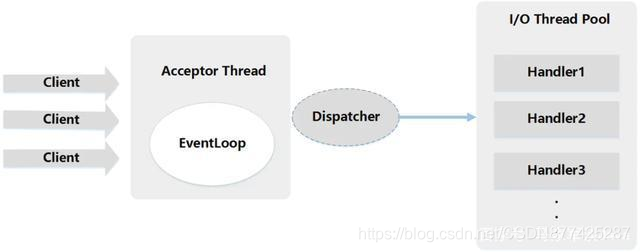

1. Single-threaded model:

One thread needs to execute and process all accept, read, decode, process, encode, and send events. It is not applicable to scenarios with high load, high concurrency, and high performance requirements.

The corresponding Netty code is as follows.

Note that the parameterless constructor of the NioEventLoopGroup class sets the number of threads to the number of CPU cores * 2 by default, so the thread count is set to 1 explicitly here.

    // 1. eventGroup both accepts client connections and handles the actual processing
    EventLoopGroup eventGroup = new NioEventLoopGroup(1);
    // 2. Create the server bootstrap (helper) class: ServerBootstrap
    ServerBootstrap b = new ServerBootstrap();
    b.group(eventGroup, eventGroup)
    //...

2. Multi-threaded model

One Acceptor thread is responsible only for listening for client connections, and an NIO thread pool is responsible for the actual processing: accept, read, decode, process, encode, and send events. This model meets the needs of most applications. It works well when the number of concurrent connections is small, but with a very large number of concurrent connections the single Acceptor thread may become a performance bottleneck.

The corresponding Netty code is as follows:

    // 1. bossGroup accepts connections, workerGroup handles the actual processing
    EventLoopGroup bossGroup = new NioEventLoopGroup(1);
    EventLoopGroup workerGroup = new NioEventLoopGroup();
    try {
        // 2. Create the server bootstrap (helper) class: ServerBootstrap
        ServerBootstrap b = new ServerBootstrap();
        // 3. Configure the two thread groups and thereby determine the thread model
        b.group(bossGroup, workerGroup)
        //...

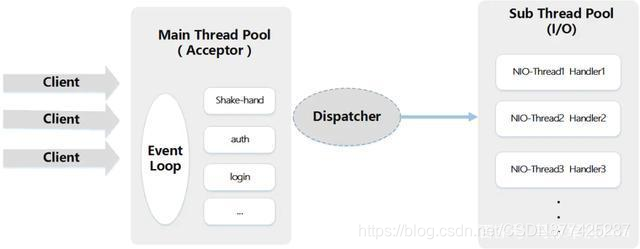

3. Master-slave multi-thread model

A thread is selected from the main NIO thread pool to act as the Acceptor thread, which binds the listening port and accepts client connections, while the other threads in that pool handle follow-up tasks such as access authentication. After a connection is established, the sub NIO thread pool handles the actual I/O reads and writes. If the multi-threaded model cannot meet your needs, consider the master-slave multi-threaded model.

    // 1. bossGroup accepts connections, workerGroup handles the actual processing
    EventLoopGroup bossGroup = new NioEventLoopGroup();
    EventLoopGroup workerGroup = new NioEventLoopGroup();
    try {
        // 2. Create the server bootstrap (helper) class: ServerBootstrap
        ServerBootstrap b = new ServerBootstrap();
        // 3. Configure the two thread groups and thereby determine the thread model
        b.group(bossGroup, workerGroup)
        //...

Do you understand the startup process of Netty server and client?

Server

    // 1. bossGroup accepts connections, workerGroup handles the actual processing
    EventLoopGroup bossGroup = new NioEventLoopGroup(1);
    EventLoopGroup workerGroup = new NioEventLoopGroup();
    try {
        // 2. Create the server bootstrap (helper) class: ServerBootstrap
        ServerBootstrap b = new ServerBootstrap();
        // 3. Configure the two thread groups and thereby determine the thread model
        b.group(bossGroup, workerGroup)
                // (Optional) print logs
                .handler(new LoggingHandler(LogLevel.INFO))
                // 4. Specify the IO model
                .channel(NioServerSocketChannel.class)
                .childHandler(new ChannelInitializer<SocketChannel>() {
                    @Override
                    public void initChannel(SocketChannel ch) {
                        ChannelPipeline p = ch.pipeline();
                        // 5. Custom business logic for client messages goes here
                        p.addLast(new HelloServerHandler());
                    }
                });
        // 6. Bind the port; sync() blocks until the binding is complete
        ChannelFuture f = b.bind(port).sync();
        // 7. Block until the server Channel is closed (closeFuture() returns the
        //    Channel's close future, and sync() waits on it)
        f.channel().closeFuture().sync();
    } finally {
        // 8. Gracefully shut down the thread groups
        bossGroup.shutdownGracefully();
        workerGroup.shutdownGracefully();
    }

A brief walkthrough of how the server is created:

1. First, two NioEventLoopGroup instances are created: bossGroup and workerGroup.

- bossGroup: Used to process the client's TCP connection request.

- workerGroup: Responsible for reading and writing the data of each connection. It performs the actual I/O operations and hands the data to the corresponding Handler.

An analogy: think of the company's boss as the bossGroup and the employees as the workerGroup. The bossGroup brings in work from outside and passes it to the workerGroup to carry out. Normally we set the number of bossGroup threads to 1 (when the number of concurrent connections is not large) and the number of workerGroup threads to the number of CPU cores * 2. As the source code shows, the parameterless constructor of the NioEventLoopGroup class defaults the number of threads to the number of CPU cores * 2.

2. Next, a server bootstrap (helper) class, ServerBootstrap, is created. This class guides us through starting the server.

3. The two thread groups are configured on ServerBootstrap through the .group() method, which determines the thread model.

The following code configures the multi-threaded model mentioned above.

    EventLoopGroup bossGroup = new NioEventLoopGroup(1);
    EventLoopGroup workerGroup = new NioEventLoopGroup();

4. The IO model of ServerBootstrap is set to NIO through the channel() method.

- NioServerSocketChannel: Sets the server's IO model to NIO; it corresponds to ServerSocket in the BIO programming model.

- NioSocketChannel: Sets the client's IO model to NIO; it corresponds to Socket in the BIO programming model.

5. A ChannelInitializer is created for the bootstrap class through .childHandler(), and the business logic for handling server messages is specified with a HelloServerHandler object.

6. The bind() method of the ServerBootstrap class is called to bind the port.

Client

    // 1. Create a NioEventLoopGroup instance
    EventLoopGroup group = new NioEventLoopGroup();
    try {
        // 2. Create the client bootstrap (helper) class: Bootstrap
        Bootstrap b = new Bootstrap();
        // 3. Specify the thread group
        b.group(group)
                // 4. Specify the IO model
                .channel(NioSocketChannel.class)
                .handler(new ChannelInitializer<SocketChannel>() {
                    @Override
                    public void initChannel(SocketChannel ch) throws Exception {
                        ChannelPipeline p = ch.pipeline();
                        // 5. Custom business logic for messages goes here
                        p.addLast(new HelloClientHandler(message));
                    }
                });
        // 6. Try to establish a connection
        ChannelFuture f = b.connect(host, port).sync();
        // 7. Wait for the connection to close (block until the Channel is closed)
        f.channel().closeFuture().sync();
    } finally {
        group.shutdownGracefully();
    }

Next, a walkthrough of how the client is created:

- A NioEventLoopGroup instance is created.

- The client bootstrap class, Bootstrap, is created.

- A thread group is configured on Bootstrap through the .group() method.

- The IO model of Bootstrap is set to NIO through the channel() method.

- A ChannelInitializer is created for the bootstrap class through .handler(), and the business logic for handling client messages is specified with a HelloClientHandler object.

- The connect() method of the Bootstrap class is called to connect. This method takes two parameters:

  - inetHost: IP address

  - inetPort: port number

The corresponding source code in Bootstrap is:

    public ChannelFuture connect(String inetHost, int inetPort) {
        return this.connect(InetSocketAddress.createUnresolved(inetHost, inetPort));
    }

    public ChannelFuture connect(SocketAddress remoteAddress) {
        ObjectUtil.checkNotNull(remoteAddress, "remoteAddress");
        this.validate();
        return this.doResolveAndConnect(remoteAddress, this.config.localAddress());
    }

The connect method returns a Future-type object:

    public interface ChannelFuture extends Future<Void> { … }

In other words, the method is asynchronous. We can use the addListener method to find out whether the connection succeeded and then print the connection information. Only the following change to the code is needed:

    ChannelFuture f = b.connect(host, port).addListener(future -> {
        if (future.isSuccess()) {
            System.out.println("Connected successfully!");
        } else {
            System.err.println("Connection failed!");
        }
    }).sync();

What is TCP sticking/unpacking? Is there any solution?

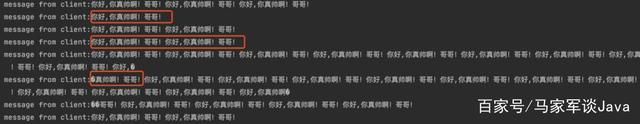

What is TCP sticking/unpacking?

TCP sticking/unpacking means that when you send data over TCP, multiple messages get "stuck" together or a single message gets "split apart". For example, you repeatedly send "Hello, you are so handsome! Brother!", but the receiving end may read several of these messages merged into one chunk, or a single message split across two reads.

Is there any solution?

1. Use Netty's built-in decoders

- LineBasedFrameDecoder: The sender terminates each data packet with a newline character. LineBasedFrameDecoder works by scanning the readable bytes in the ByteBuf for a newline character and cutting the data at that point.

- DelimiterBasedFrameDecoder: A decoder with a customizable delimiter. LineBasedFrameDecoder is in effect a special case of DelimiterBasedFrameDecoder.

- FixedLengthFrameDecoder: A fixed-length decoder that splits the stream into messages of the specified length.

- LengthFieldBasedFrameDecoder: A decoder that splits the stream according to a length field carried in each message.
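To make the idea behind length-based framing concrete, here is a minimal sketch that uses only the JDK's ByteBuffer. It is not Netty's decoder: the class LengthFieldFramingSketch and its encode() and feed() methods are hypothetical, and the wire format (a 4-byte length followed by a UTF-8 payload) is an assumption chosen for the example. The decoder keeps incomplete bytes until the rest of the frame arrives, which handles both "stuck" and "split" messages.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

class LengthFieldFramingSketch {

    // Encode a message as [4-byte length][payload] (assumed wire format).
    static byte[] encode(String msg) {
        byte[] payload = msg.getBytes(StandardCharsets.UTF_8);
        return ByteBuffer.allocate(4 + payload.length).putInt(payload.length).put(payload).array();
    }

    // Bytes received so far that do not yet form a complete frame.
    private ByteBuffer pending = ByteBuffer.allocate(0);

    // Feed one received chunk and return every frame that is now complete.
    // A real decoder would also validate the length field (negative or too large).
    List<String> feed(byte[] chunk) {
        ByteBuffer merged = ByteBuffer.allocate(pending.remaining() + chunk.length);
        merged.put(pending).put(chunk).flip();

        List<String> frames = new ArrayList<>();
        while (merged.remaining() >= 4) {
            merged.mark();
            int length = merged.getInt();
            if (merged.remaining() < length) {
                merged.reset(); // incomplete frame: wait for more bytes
                break;
            }
            byte[] payload = new byte[length];
            merged.get(payload);
            frames.add(new String(payload, StandardCharsets.UTF_8));
        }
        pending = merged.slice(); // keep the unread tail for the next call
        return frames;
    }

    public static void main(String[] args) {
        LengthFieldFramingSketch decoder = new LengthFieldFramingSketch();
        byte[] first = encode("Hello");
        // Deliver the first frame in two pieces to simulate a split message.
        System.out.println(decoder.feed(java.util.Arrays.copyOfRange(first, 0, 6)));
        System.out.println(decoder.feed(java.util.Arrays.copyOfRange(first, 6, first.length)));
    }
}
```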

2. Custom serialization codec

Java has built-in serialization through the Serializable interface, but because of its performance, security, and other problems it is normally not used. In practice, Protostuff, Hessian2, and JSON serialization are used more often, and some other serialization methods with very good performance are also good choices:

- Specifically for the Java language: Kryo, FST, etc.

- Cross-language: Protostuff (developed based on protobuf), ProtoBuf, Thrift, Avro, MsgPack, etc.

Do you understand Netty's long connection and heartbeat mechanism?

Do you understand TCP long and short connections?

Before any data can be read or written over TCP, a connection must be established between the server and the client. Establishing a connection requires the well-known three-way handshake, and releasing or closing it requires the four-way handshake (four waves). This process consumes network resources and adds latency.

A short connection means that after the server and the client establish a connection, the connection is closed once the read and write are done. To exchange messages again, a new connection must be established. The advantage of short connections is obvious: they are relatively simple to manage and implement. The drawback is just as obvious: establishing a connection for every read and write inevitably consumes a large amount of network resources, and establishing a connection also takes time.

A long connection means that after the client establishes a connection to the server, the connection is not actively closed even after a read and write have completed, and subsequent reads and writes keep using the same connection. Long connections avoid many TCP setup and teardown operations, reduce the use of network resources, and save time. They are well suited to clients that request resources frequently.

Why do we need a heartbeat mechanism? Do you understand Netty's heartbeat mechanism?

While TCP maintains a long connection, network faults such as a dropped link may occur. If there is no traffic between the client and the server when the fault occurs, neither side can detect that the other has gone away. To solve this problem, we need a heartbeat mechanism.

The heartbeat mechanism works as follows: when the client and the server have not exchanged any data for a certain period of time, that is, when the connection is idle, the client or the server sends a special data packet to the other side. When the receiver gets this packet, it immediately sends a special packet back in response. This is a PING-PONG exchange. When one end receives the heartbeat message, it knows that the other side is still online, which confirms that the TCP connection is still valid.

In fact, TCP has its own keep-alive option with a heartbeat packet mechanism, namely the SO_KEEPALIVE option. However, keep-alive at the TCP protocol level is not flexible enough. Therefore, we generally implement a custom heartbeat mechanism in the application-layer protocol, that is, in code at the Netty level. When the heartbeat mechanism is implemented with Netty, the core class is IdleStateHandler.
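The decision logic behind such a heartbeat can be sketched independently of Netty. The class HeartbeatSketch below is hypothetical and only models the principle described above: it tracks when data was last read and decides, on each timer tick, whether to do nothing, send a PING, or give up on the peer. The two thresholds are assumptions for the example; in a Netty application, IdleStateHandler takes care of detecting the idle state.

```java
class HeartbeatSketch {
    enum Action { NONE, SEND_PING, CLOSE }

    private final long pingAfterMillis;  // idle time after which a PING is sent
    private final long closeAfterMillis; // idle time after which the peer is considered gone
    private long lastReadMillis;         // time at which data was last received

    HeartbeatSketch(long pingAfterMillis, long closeAfterMillis, long nowMillis) {
        this.pingAfterMillis = pingAfterMillis;
        this.closeAfterMillis = closeAfterMillis;
        this.lastReadMillis = nowMillis;
    }

    // Call whenever any data (including a PONG) arrives from the peer.
    void onRead(long nowMillis) {
        lastReadMillis = nowMillis;
    }

    // Call periodically from a timer to decide what to do next.
    Action onTick(long nowMillis) {
        long idle = nowMillis - lastReadMillis;
        if (idle >= closeAfterMillis) {
            return Action.CLOSE;
        }
        if (idle >= pingAfterMillis) {
            return Action.SEND_PING;
        }
        return Action.NONE;
    }

    public static void main(String[] args) {
        HeartbeatSketch heartbeat = new HeartbeatSketch(5_000, 15_000, 0);
        System.out.println(heartbeat.onTick(1_000));  // connection is still fresh
        System.out.println(heartbeat.onTick(5_000));  // idle long enough to probe the peer
        heartbeat.onRead(6_000);                      // a PONG arrived
        System.out.println(heartbeat.onTick(7_000));
    }
}
```

Passing the current time in as a parameter keeps the sketch deterministic; real code would read a clock and run onTick from a scheduled task.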

Do you understand Netty's zero copy?

Zero-copy technology means that when a computer performs an operation, the CPU does not need to first copy data from one area of memory to another. This technique is commonly used to save CPU cycles and memory bandwidth when transferring files over a network.

Zero-copy at the OS level usually refers to avoiding copying data back and forth between user space and kernel space. At the Netty level, zero copy mainly shows up as optimizations of data operations.

Zero copy in Netty is reflected in the following aspects:

- With the CompositeByteBuf class provided by Netty, multiple ByteBufs can be combined into one logical ByteBuf, avoiding copies between the individual ByteBufs.

- ByteBuf supports the slice operation, so a ByteBuf can be split into multiple ByteBufs that share the same storage area, avoiding memory copies.

- File transfer is implemented through FileRegion, which wraps FileChannel.transferTo and can send the data in the file buffer directly to the target Channel, avoiding the memory copies caused by the traditional approach of writing in a loop.
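Two of these ideas have direct counterparts in the JDK, which makes them easy to try without Netty. The sketch below is an illustration under stated assumptions, not Netty code: the class ZeroCopySketch and its methods are hypothetical, java.nio.ByteBuffer stands in for ByteBuf to show a slice sharing storage with its source, and FileChannel.transferTo is called directly rather than through FileRegion. Whether transferTo actually avoids copies through user space depends on the operating system.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

class ZeroCopySketch {

    // A view over part of a buffer: no bytes are copied, both share the same storage.
    static ByteBuffer sharedView(ByteBuffer source, int offset, int length) {
        ByteBuffer view = source.duplicate();
        view.position(offset);
        view.limit(offset + length);
        return view.slice();
    }

    // Transfer a whole file channel-to-channel instead of looping over read() and write().
    static long transfer(Path from, Path to) {
        try (FileChannel in = FileChannel.open(from, StandardOpenOption.READ);
             FileChannel out = FileChannel.open(to, StandardOpenOption.CREATE,
                     StandardOpenOption.WRITE, StandardOpenOption.TRUNCATE_EXISTING)) {
            long size = in.size();
            long position = 0;
            while (position < size) {
                // transferTo may move fewer bytes than requested, so loop until done.
                position += in.transferTo(position, size - position, out);
            }
            return position;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Helper for trying transfer(): write content to a temp file, transfer it, read it back.
    static String roundTrip(String content) {
        try {
            Path from = Files.createTempFile("zero-copy-src", ".txt");
            Path to = Files.createTempFile("zero-copy-dst", ".txt");
            try {
                Files.write(from, content.getBytes(StandardCharsets.UTF_8));
                transfer(from, to);
                return new String(Files.readAllBytes(to), StandardCharsets.UTF_8);
            } finally {
                Files.deleteIfExists(from);
                Files.deleteIfExists(to);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

A quick way to see the shared storage: create a view with sharedView(), change a byte in the source buffer, and read the same byte back through the view.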