Regression problems fall into two cases. In the first, we can write down an explicit expression relating the input to the output, and the task is to find the parameters of that expression. In the second, we cannot write down such an expression, so a neural network stands in for it: the network maps the input to the output, and the task is to find its weights and biases. The TensorFlow example below takes the second approach, fitting noisy samples of y = x^2 with a small fully connected network.
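For contrast, here is a minimal sketch of the first case, where the expression is known and only its parameters are unknown. It assumes the model form y = a * x^2 + b (our assumption, chosen to match the data used in this post) and fits `a` and `b` by ordinary least squares with NumPy. The names `rng`, `design`, `a` and `b` and the fixed seed are illustrative and not part of the original example.

```python
import numpy as np

# Noisy samples of y = x^2, generated the same way as in this post.
rng = np.random.default_rng(0)  # fixed seed: an assumption, for reproducibility
x = np.linspace(-0.5, 0.5, 200)
y = np.square(x) + rng.normal(0, 0.02, x.shape)

# Assumed model form: y = a * x^2 + b. It is linear in (a, b),
# so ordinary least squares recovers both parameters directly.
design = np.column_stack([np.square(x), np.ones_like(x)])
(a, b), *_ = np.linalg.lstsq(design, y, rcond=None)

print(f"a = {a:.3f}, b = {b:.3f}")  # a should land near 1, b near 0
```

This only works because we guessed the right form of the expression. When no such form is available, the neural network takes its place.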

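# Written against the TensorFlow 1.x API (tf.placeholder, tf.Session).
# On TensorFlow 2.x, one option is `import tensorflow.compat.v1 as tf`
# followed by `tf.disable_v2_behavior()`.
import tensorflow as tf
import numpy as np
import matplotlib.pyplot as plt

# Generate the training data: 200 noisy samples of y = x^2
# x_data has shape (200, 1)
x_data = np.linspace(-0.5, 0.5, 200)[:, np.newaxis]
noise = np.random.normal(0, 0.02, x_data.shape)
y_data = np.square(x_data) + noise

# Define two placeholders: any number of rows, one column
x = tf.placeholder(tf.float32, [None, 1])
y = tf.placeholder(tf.float32, [None, 1])

# Hidden layer of the fully connected network: 1 input -> 10 units, tanh activation
weights_L1 = tf.Variable(tf.random_normal([1, 10]))
biases_L1 = tf.Variable(tf.zeros([1, 10]))
out_L1 = tf.matmul(x, weights_L1) + biases_L1
L1 = tf.nn.tanh(out_L1)

# Output layer of the fully connected network: 10 units -> 1 output, tanh activation
weights_L2 = tf.Variable(tf.random_normal([10, 1]))
biases_L2 = tf.Variable(tf.zeros([1, 1]))
out_L2 = tf.matmul(L1, weights_L2) + biases_L2
prediction = tf.nn.tanh(out_L2)

# Define the loss function: mean squared error
loss = tf.reduce_mean(tf.square(prediction - y))

# Define the optimizer: gradient descent with learning rate 0.1
optimizer = tf.train.GradientDescentOptimizer(0.1)

# Define the training op: the optimizer adjusts the parameters
# that the loss depends on so that the loss keeps decreasing
train = optimizer.minimize(loss)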

# 在图里运行

with tf.Session() as sess:

sess.run(tf.global_variables_initializer())

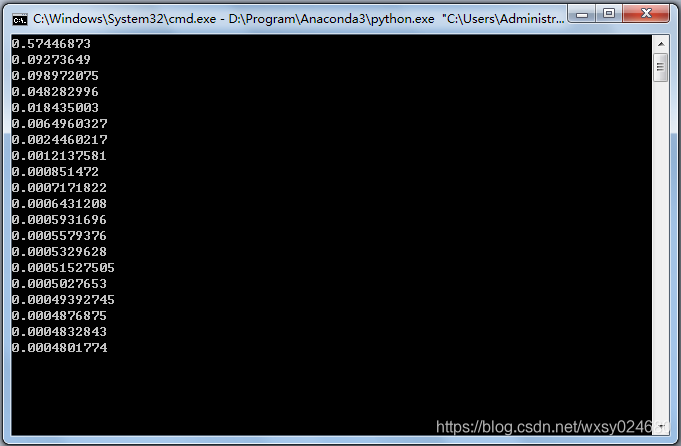

for step in range(1000):

sess.run(train,feed_dict={

x:x_data,y:y_data})

if step%50==0:

print(sess.run(loss,feed_dict={

x:x_data,y:y_data}))

# 获取预测值

prediction_value=sess.run(prediction,feed_dict={

x:x_data})

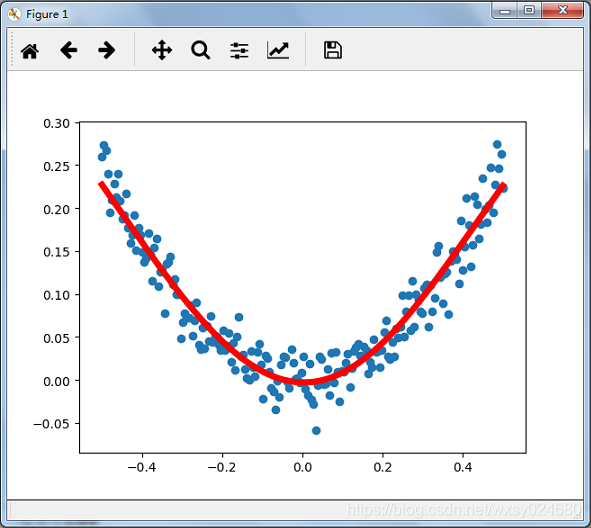

# 绘图

plt.figure()

plt.scatter(x_data,y_data)

plt.plot(x_data,prediction_value,'r-',lw=5)

plt.show()

Result: