MyBatis source code analysis (5): the first-level cache and second-level cache in detail

Foreword: The previous article covered the MyBatis data source. This article covers the basic use of the MyBatis first-level and second-level caches and their main implementation.

This article is mainly divided into the following parts:

Using and testing the first-level and second-level caches

Introduction to the MyBatis cache classes

The first-level cache in detail

The second-level cache in detail

One: Using and testing the first-level and second-level caches

In MyBatis, the first-level cache is enabled by default and its life cycle is tied to the SqlSession. The global switch for the second-level cache is also enabled by default, but the cache only takes effect once it is also enabled in the mapper's namespace. The life cycle of the second-level cache is tied to the SqlSessionFactory, and each mapper has its own cache (which is why it must be enabled in the namespace configured by the mapper to take effect).

1. Add the following configuration to the SqlMapConfig file. cacheEnabled is the global switch for the second-level cache, and logImpl prints the executed SQL (we can tell whether a query was served from the cache by checking whether real SQL is printed).

<settings>

<!-- Prints the executed SQL -->

<setting name="logImpl" value="STDOUT_LOGGING"/>

<!-- Defaults to true -->

<setting name="cacheEnabled" value="true"/>

</settings>

2. Test

/**

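* First-level cache test:

* Before running it, add the logImpl setting below so that SQL statements are printed.

<settings>

<setting name="logImpl" value="STDOUT_LOGGING"/> // prints the SQL statements

<setting name="cacheEnabled" value="true"/> // the second-level cache is enabled globally by default

</settings>

Expected result: only one SQL statement is issued. The first-level cache is enabled by default and its life cycle is the SqlSession.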

*/

@Test

public void test2() throws Exception {

InputStream in = Resources.getResourceAsStream("custom/sqlMapConfig3.xml");

SqlSessionFactory factory2 = new SqlSessionFactoryBuilder().build(in);

SqlSession openSession = factory2.openSession();

UserMapper mapper = openSession.getMapper(UserMapper.class);

User user1 = mapper.findUserById(40); // issues a SQL statement

System.out.println(user1);

User user2 = mapper.findUserById(40); // no SQL statement: served from the first-level cache

System.out.println(user2);

openSession.close();

}

/**

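* Second-level cache test

* The global switch for the second-level cache is on by default, but each namespace must also declare <cache></cache>,

* so both the global and the local configuration are required. The cache life cycle is the SqlSessionFactory.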

*/

@Test

public void test3() throws Exception {

InputStream in = Resources.getResourceAsStream("custom/sqlMapConfig3.xml");

SqlSessionFactory factory2 = new SqlSessionFactoryBuilder().build(in);

SqlSession openSession = factory2.openSession();

UserMapper mapper = openSession.getMapper(UserMapper.class);

User user1 = mapper.findUserById(40);

System.out.println(user1);

openSession.close(); // close the session

openSession = factory2.openSession();

mapper = openSession.getMapper(UserMapper.class);

User user3 = mapper.findUserById(40); // no SQL is printed only if the second-level cache is enabled both globally and in the namespace

System.out.println(user3);

}

The test results show the following. When the second-level cache is not enabled, repeating a query in the same SqlSession before it is closed is served from the first-level cache. When the second-level cache is enabled, a query repeated after the session has been closed can still be served from a cache, this time the second-level cache. The lookup order is: on the first query the second-level cache is checked first; on a miss the first-level cache is checked; on a miss there the database is queried. The result is stored in the first-level cache first and is then placed in the second-level cache when the session is committed or closed. On the second query the second-level cache is checked first, and the result is returned directly if it is found there.
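The lookup order described above can be tried in isolation with a minimal sketch. It does not use MyBatis at all: `DatabaseSketch` and `SessionSketch` are hypothetical names, plain maps stand in for the two cache levels, and publishing to the shared cache on `close()` is an assumption that mirrors the behaviour seen in the tests.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical stand-in for the database; counts how often it is really queried.
class DatabaseSketch {
    int hits = 0;

    String loadUser(int id) {
        hits++;
        return "user-" + id;
    }
}

// Hypothetical stand-in for a session: owns a first-level cache and shares a second-level one.
class SessionSketch {
    private final Map<Integer, String> secondLevel;                  // shared between sessions
    private final Map<Integer, String> firstLevel = new HashMap<>(); // private to this session
    private final Map<Integer, String> pending = new HashMap<>();    // staged for the shared cache
    private final DatabaseSketch database;

    SessionSketch(Map<Integer, String> secondLevel, DatabaseSketch database) {
        this.secondLevel = secondLevel;
        this.database = database;
    }

    String findUserById(int id) {
        String user = secondLevel.get(id);      // 1. second-level cache
        if (user != null) {
            return user;
        }
        user = firstLevel.get(id);              // 2. first-level cache
        if (user == null) {
            user = database.loadUser(id);       // 3. database
            firstLevel.put(id, user);
        }
        pending.put(id, user);                  // published only when the session ends
        return user;
    }

    void close() {
        secondLevel.putAll(pending);
        pending.clear();
        firstLevel.clear();
    }

    public static void main(String[] args) {
        DatabaseSketch database = new DatabaseSketch();
        Map<Integer, String> shared = new HashMap<>();

        SessionSketch first = new SessionSketch(shared, database);
        first.findUserById(40);   // database
        first.findUserById(40);   // first-level cache
        first.close();

        SessionSketch second = new SessionSketch(shared, database);
        second.findUserById(40);  // second-level cache
        System.out.println("database hits: " + database.hits); // prints "database hits: 1"
    }
}
```

The single database hit corresponds to the single SQL statement printed in each of the two tests above.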

Two: Introduction to the MyBatis cache classes

The first-level and second-level caches of MyBatis share the same top-level interface, Cache. The default cache implementation is a wrapper around a HashMap, and there are many decorators that also implement the Cache interface.

1. First, the top-level Cache interface and its package structure.

public interface Cache {

String getId();

void putObject(Object key, Object value);

Object getObject(Object key);

Object removeObject(Object key);

void clear();

int getSize();

ReadWriteLock getReadWriteLock();

}

Except for PerpetualCache, the other Cache implementations use the decorator pattern. The underlying PerpetualCache does the actual caching, and the decorators add further behaviour on top of it.

SynchronizedCache: locks in the get/put methods so that only one thread operates on the cache at a time.

FifoCache, LruCache: when the cache reaches its size limit, entries are evicted using the FIFO or LRU (least recently used) strategy. Either can be chosen as the eviction strategy when <cache> is declared in the namespace.

ScheduledCache: before operations such as get/put/remove/getSize, checks whether the time since the last clear exceeds the configured interval (the default is one hour) and, if so, clears the cache. In other words, the cache is cleared at regular intervals.

SoftCache/WeakCache: cache entries are held through JVM soft references and weak references, so they are cleaned up automatically when JVM memory runs low.

TransactionalCache: a transactional wrapper. An operation on this cache is not applied to the delegate cache immediately. Removals and additions are recorded in map containers, and the real cache operations are performed only after commit is called.
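ScheduledCache has no code listing in this article, so here is a minimal sketch of the time-based clearing it is described as doing. It is not the MyBatis class: `TimedClearCacheSketch` is a hypothetical name, it wraps a plain HashMap rather than a Cache delegate, and the injectable clock is an assumption added only so the behaviour can be exercised without waiting.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.LongSupplier;

// Hypothetical sketch: clears itself when the configured interval has elapsed.
class TimedClearCacheSketch {
    private final Map<Object, Object> store = new HashMap<>();
    private final long clearIntervalMillis;
    private final LongSupplier clock;   // supplies "now" in milliseconds
    private long lastClear;

    TimedClearCacheSketch(long clearIntervalMillis, LongSupplier clock) {
        this.clearIntervalMillis = clearIntervalMillis;
        this.clock = clock;
        this.lastClear = clock.getAsLong();
    }

    void putObject(Object key, Object value) {
        clearWhenStale();
        store.put(key, value);
    }

    Object getObject(Object key) {
        // After a clear nothing can be found, so return null directly.
        return clearWhenStale() ? null : store.get(key);
    }

    int getSize() {
        clearWhenStale();
        return store.size();
    }

    // Returns true if the cache was just cleared because the interval elapsed.
    private boolean clearWhenStale() {
        long now = clock.getAsLong();
        if (now - lastClear > clearIntervalMillis) {
            store.clear();
            lastClear = now;
            return true;
        }
        return false;
    }

    public static void main(String[] args) {
        long[] now = {0L};
        TimedClearCacheSketch cache = new TimedClearCacheSketch(1000L, () -> now[0]);
        cache.putObject("user:40", "cached row");
        now[0] = 500L;
        System.out.println(cache.getObject("user:40")); // still inside the interval
        now[0] = 1501L;
        System.out.println(cache.getObject("user:40")); // interval elapsed, cache was cleared
    }
}
```

Note that nothing is cleared in the background: the check only runs when the cache is touched, which matches the "before performing operations" wording above.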

2. The main logic of the cache implementation classes

1) PerpetualCache

public class PerpetualCache implements Cache {

private String id;

private Map<Object, Object> cache = new HashMap<Object, Object>();

PerpetualCache is a Map-backed cache and the default cache implementation in MyBatis. The first-level cache uses this class. If the second-level cache should use a third-party cache, ready-made jar packages exist for that, which are not described here.

2) FifoCache

FifoCache is a cache decorator that implements first-in, first-out eviction. It relies on the insertion order kept by a LinkedList.
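To see first-in, first-out eviction with a small capacity, here is a self-contained sketch. `FifoSketch` is a hypothetical name, it stores values in a plain HashMap instead of delegating to another Cache, and it uses an ArrayDeque where the listing below uses a LinkedList.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch of FIFO eviction: the oldest inserted key is dropped first.
class FifoSketch {
    private final Map<Object, Object> store = new HashMap<>();
    private final Deque<Object> keyOrder = new ArrayDeque<>(); // insertion order of keys
    private final int capacity;

    FifoSketch(int capacity) {
        this.capacity = capacity;
    }

    void put(Object key, Object value) {
        keyOrder.addLast(key);
        if (keyOrder.size() > capacity) {
            // Evict the key that was inserted first, regardless of how often it was read.
            store.remove(keyOrder.removeFirst());
        }
        store.put(key, value);
    }

    Object get(Object key) {
        return store.get(key); // reads do not change the eviction order
    }

    int size() {
        return store.size();
    }

    public static void main(String[] args) {
        FifoSketch cache = new FifoSketch(2);
        cache.put("a", "A");
        cache.put("b", "B");
        cache.get("a");       // reading "a" does not protect it
        cache.put("c", "C");  // evicts "a", the oldest insertion
        System.out.println(cache.get("a")); // prints "null"
    }
}
```

Like the listing that follows, this sketch records a key again if it is put twice, so a repeated put of the same key counts toward the capacity.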

private final Cache delegate;

private LinkedList<Object> keyList;

private int size;

public FifoCache(Cache delegate) {

this.delegate = delegate;

this.keyList = new LinkedList<Object>();

this.size = 1024;

}

public void putObject(Object key, Object value) {

cycleKeyList(key);

delegate.putObject(key, value);

}

// Core idea: every put also appends the key to the list; if the list then grows beyond size,

// the first (oldest) key is removed from the list and from the delegate cache as well.

private void cycleKeyList(Object key) {

keyList.addLast(key);

if (keyList.size() > size) {

Object oldestKey = keyList.removeFirst();

delegate.removeObject(oldestKey);

}

}

3) LruCache

LruCache is a cache decorator that implements LRU eviction. It relies on the three-argument constructor of LinkedHashMap.

In new LinkedHashMap<Object, Object>(size, 0.75f, true), the third parameter, accessOrder, controls whether an entry is moved to the end of the linked list when it is accessed. Combined with the protected removeEldestEntry method of LinkedHashMap, this implements LRU (evicting the entry that has gone unaccessed the longest).
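The accessOrder and removeEldestEntry combination can be tried on its own with a small capacity. This is a minimal sketch rather than the MyBatis class: `LruSketch` is a hypothetical name, and it stores values directly instead of tracking keys for a delegate cache.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical sketch: a LinkedHashMap in access order that evicts beyond a fixed capacity.
class LruSketch<K, V> extends LinkedHashMap<K, V> {
    private static final long serialVersionUID = 1L;
    private final int capacity;

    LruSketch(int capacity) {
        super(16, 0.75f, true); // third argument: accessOrder = true
        this.capacity = capacity;
    }

    @Override
    protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
        // Called after each insertion; returning true drops the least recently accessed entry.
        return size() > capacity;
    }

    public static void main(String[] args) {
        LruSketch<String, Integer> cache = new LruSketch<>(2);
        cache.put("a", 1);
        cache.put("b", 2);
        cache.get("a");      // "a" becomes the most recently used entry
        cache.put("c", 3);   // evicts "b", the least recently used entry
        System.out.println(cache.keySet()); // prints "[a, c]"
    }
}
```

Compare this with FIFO: there, reading "a" would not have saved it from eviction.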

private MyCache delegate;

private Map<Object, Object> keyMap;

public LRUCache(MyCache delegate) {

super();

this.delegate = delegate;

setSize(1024);

}

private void setSize(int initialCapacity) {

keyMap = new LinkedHashMap<Object, Object>(initialCapacity, 0.75f, true) {

private static final long serialVersionUID = 4267176411845948333L;

protected boolean removeEldestEntry(Map.Entry<Object, Object> eldest) {

boolean tooBig = size() > initialCapacity; // over capacity

if (tooBig) { // true: also remove the entry from the delegate cache

delegate.removeObject(eldest.getKey());

}

return tooBig; // returning true makes LinkedHashMap drop its eldest entry

}

};

}

// keyMap is kept in sync by the other operations

4) SynchronizedCache

SynchronizedCache is a decorator that adds synchronized locking. It is simple: every method that reads or changes the cache state is synchronized.

@Override

public synchronized void putObject(Object key, Object value) {

delegate.putObject(key, value);

}

@Override

public synchronized Object getObject(Object key) {

return delegate.getObject(key);

}

@Override

public synchronized Object removeObject(Object key) {

return delegate.removeObject(key);

}

5) TransactionalCache

TransactionalCache maintains two maps internally. One map holds the pending remove operations and the other holds the pending put operations. On commit it traverses both maps and applies the operations to the cache. On rollback the two temporary maps are simply cleared. Below are the two inner classes (which wrap the pending cache operations), followed by the member variables of the cache.
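The effect of staging writes until commit can be observed with a compact sketch. It is an illustration only: `StagedCacheSketch` is a hypothetical name, a plain Map plays the role of the delegate cache, and a Set of keys replaces the RemoveEntry wrapper used in the listing below.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

// Hypothetical sketch: puts and removes are staged and reach the delegate only on commit.
class StagedCacheSketch {
    private final Map<Object, Object> delegate;                // the shared cache being wrapped
    private final Map<Object, Object> toAdd = new HashMap<>(); // pending puts
    private final Set<Object> toRemove = new HashSet<>();      // pending removes

    StagedCacheSketch(Map<Object, Object> delegate) {
        this.delegate = delegate;
    }

    void putObject(Object key, Object value) {
        toRemove.remove(key);   // a later put cancels an earlier pending remove
        toAdd.put(key, value);
    }

    Object getObject(Object key) {
        return delegate.get(key); // reads only see committed data
    }

    void removeObject(Object key) {
        toAdd.remove(key);      // a later remove cancels an earlier pending put
        toRemove.add(key);
    }

    void commit() {
        for (Object key : toRemove) {
            delegate.remove(key);
        }
        delegate.putAll(toAdd);
        reset();
    }

    void rollback() {
        reset();                // discard everything that was staged
    }

    private void reset() {
        toAdd.clear();
        toRemove.clear();
    }

    public static void main(String[] args) {
        Map<Object, Object> shared = new HashMap<>();
        StagedCacheSketch tx = new StagedCacheSketch(shared);
        tx.putObject("user:40", "row");
        System.out.println(shared.containsKey("user:40")); // prints "false"
        tx.commit();
        System.out.println(shared.containsKey("user:40")); // prints "true"
    }
}
```

Staging matters because the delegate is shared between sessions: without it, one session could publish results from a transaction that is later rolled back.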

/**

* Wraps an entry to be added and holds the delegate cache; calling commit() puts the entry into the delegate.

*/

private static class AddEntry {

private Object key;

private Object value;

private MyCache delegate;

public AddEntry(Object key, Object value, MyCache delegate) {

super();

this.key = key;

this.value = value;

this.delegate = delegate;

}

public void commit() {

this.delegate.putObject(key, value);

}

}

/**

* Wraps a key to be removed and holds the delegate cache; calling commit() removes the key from the delegate.

*/

private static class RemoveEntry {

private Object key;

private MyCache delegate;

public RemoveEntry(Object key, MyCache delegate) {

super();

this.key = key;

this.delegate = delegate;

}

public void commit() {

this.delegate.removeObject(key);

}

}

// the wrapped cache

private MyCache delegate;

// entries waiting to be added on commit

private Map<Object, AddEntry> entriesToAddOnCommit;

// entries waiting to be removed on commit

private Map<Object, RemoveEntry> entriesToRemoveOnCommit;

The following is the core implementation:

// A put touches both maps: since the key is being added, it must be dropped from the pending-remove map.

@Override

public void putObject(Object key, Object value) {

this.entriesToRemoveOnCommit.remove(key);

this.entriesToAddOnCommit.put(key, new AddEntry(key, value, delegate));

}

@Override

public Object getObject(Object key) {

return this.delegate.getObject(key);

}

// A remove touches both maps: since the key is being removed, it must be dropped from the pending-add map.

@Override

public Object removeObject(Object key) {

this.entriesToAddOnCommit.remove(key);

this.entriesToRemoveOnCommit.put(key, new RemoveEntry(key, delegate));

return this.delegate.getObject(key); // only to obtain the return value; note that removeObject must not be called here

}

@Override

public void clear() {

this.delegate.clear();

reset();

}

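// commit

public void commit() {

delegate.clear(); // clear the delegate (MyBatis itself does this only when a clear was requested during the transaction)

for (RemoveEntry entry : entriesToRemoveOnCommit.values()) {

entry.commit(); // apply the pending removes

}

for (AddEntry entry : entriesToAddOnCommit.values()) {

entry.commit(); // apply the pending adds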

}

reset();

}

public void rollback() {

reset();

}

3. Implementation of the cache key

The cache key used by MyBatis is implemented by the CacheKey class. Its core mechanism is as follows:

* How the cache key works: each call to update folds the object's hash code into the key's hash code and appends the object to an internal list. Two keys are equal only if all elements of their internal lists are equal.

* What goes into a MyBatis CacheKey: the statement id, the paging offset, the paging limit, the precompiled SQL statement, and the mapped parameter values.
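The two bullets above can be sketched as a tiny key class. This is a simplified illustration, not the real CacheKey: `CacheKeySketch` is a hypothetical name, and the constants 17 and 37 are an arbitrary choice for this sketch.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Objects;

// Hypothetical sketch of a composite cache key built up through update() calls.
class CacheKeySketch {
    private final List<Object> parts = new ArrayList<>(); // every element passed to update()
    private int hash = 17;

    void update(Object part) {
        parts.add(part);
        hash = 37 * hash + Objects.hashCode(part); // fold the element into the running hash
    }

    @Override
    public boolean equals(Object other) {
        if (this == other) {
            return true;
        }
        if (!(other instanceof CacheKeySketch)) {
            return false;
        }
        CacheKeySketch that = (CacheKeySketch) other;
        // Cheap hash comparison first, then element-by-element comparison in order.
        return hash == that.hash && parts.equals(that.parts);
    }

    @Override
    public int hashCode() {
        return hash;
    }

    public static void main(String[] args) {
        CacheKeySketch first = new CacheKeySketch();
        first.update("findUserById");
        first.update(40);

        CacheKeySketch second = new CacheKeySketch();
        second.update("findUserById");
        second.update(40);

        System.out.println(first.equals(second)); // prints "true"
    }
}
```

Because equality depends on every element in order, the same statement with a different parameter or a different paging window produces a different key and therefore a cache miss.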

/**

* Assembling a CacheKey

*/

public static void main(String[] args) {

String mappedStatementId = "MappedStatementId"; // strings stand in for the elements of a MyBatis CacheKey

String rowBounds_getOffset = "rowBounds_getOffset";

String rowBounds_getLimit = "rowBounds_getLimit";

String boundSql_getSql = "boundSql_getSql";

List<String> parameterMappings = new ArrayList<>();

parameterMappings.add("param1");

parameterMappings.add("param2");

CacheKey cacheKey = new CacheKey(); // create the CacheKey

cacheKey.update(mappedStatementId); // add elements to the CacheKey

cacheKey.update(rowBounds_getOffset);

cacheKey.update(rowBounds_getLimit);

cacheKey.update(boundSql_getSql);

cacheKey.update(parameterMappings);

System.out.println(cacheKey);

}

Three: The first-level cache in detail

Now that the Cache interface and its implementations are understood, we can look at how the first-level cache is used in MyBatis.

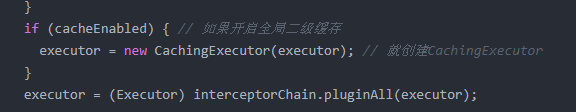

Before discussing the first-level cache, recall that SqlSession actually delegates its work to an Executor, and BaseExecutor is the base implementation of Executor. It has several concrete implementations; we use SimpleExecutor. Another class, CachingExecutor, implements the second-level cache. We start with how the cache is created when a session is opened, and then look at the first-level cache in BaseExecutor.

1. Cache creation (the cache is created when the SqlSession is created, so we go straight to openSessionFromDataSource in DefaultSqlSessionFactory)

private SqlSession openSessionFromDataSource(ExecutorType execType, TransactionIsolationLevel level, boolean autoCommit) {

Transaction tx = null;

try {

final Environment environment = configuration.getEnvironment();

final TransactionFactory transactionFactory = getTransactionFactoryFromEnvironment(environment);

tx = transactionFactory.newTransaction(environment.getDataSource(), level, autoCommit);

final Executor executor = configuration.newExecutor(tx, execType);

return new DefaultSqlSession(configuration, executor, autoCommit);

} catch (Exception e) {

closeTransaction(tx); // may have fetched a connection so lets call close()

throw ExceptionFactory.wrapException("Error opening session. Cause: " + e, e);

} finally {

ErrorContext.instance().reset();

}

}

public Executor newExecutor(Transaction transaction, ExecutorType executorType) {

executorType = executorType == null ? defaultExecutorType : executorType;

executorType = executorType == null ? ExecutorType.SIMPLE : executorType;

Executor executor;

if (ExecutorType.BATCH == executorType) { // create a different Executor depending on the type

executor = new BatchExecutor(this, transaction);

} else if (ExecutorType.REUSE == executorType) {

executor = new ReuseExecutor(this, transaction);

} else {

executor = new SimpleExecutor(this, transaction); // the default Executor

}

if (cacheEnabled) { // if the global second-level cache switch is on

executor = new CachingExecutor(executor); // wrap the executor in a CachingExecutor

}

executor = (Executor) interceptorChain.pluginAll(executor);

return executor;

}

Although the code above is the creation of the Executor, it is effectively also the creation of the first-level cache: the first-level cache is a Cache member variable of the Executor. The second-level cache is implemented by CachingExecutor wrapping the Executor, which is analysed in detail later.

2. Analysis starts at the query entry point, BaseExecutor.query

All SqlSession queries are carried out by calling the query method of the Executor. The code in the implementation class BaseExecutor is as follows:

public <E> List<E> query(MappedStatement ms, Object parameter, RowBounds rowBounds, ResultHandler resultHandler) throws SQLException {

BoundSql boundSql = ms.getBoundSql(parameter);

CacheKey key = createCacheKey(ms, parameter, rowBounds, boundSql); // build the cache key

return query(ms, parameter, rowBounds, resultHandler, key, boundSql);

}

This query method mainly builds the cache key (the construction logic was discussed above) and obtains the SQL. Now look at the overloaded query method that it calls.

public <E> List<E> query(MappedStatement ms, Object parameter, RowBounds rowBounds, ResultHandler resultHandler, CacheKey key, BoundSql boundSql) throws SQLException {

if (queryStack == 0 && ms.isFlushCacheRequired()) {

clearLocalCache(); // clear the local cache if the statement is configured to flush it

}

List<E> list;

try {

queryStack++;

list = resultHandler == null ? (List<E>) localCache.getObject(key) : null;

if (list != null) {

handleLocallyCachedOutputParameters(ms, key, parameter, boundSql);

} else { // not found in the cache: query the database

list = queryFromDatabase(ms, parameter, rowBounds, resultHandler, key, boundSql);

}

} finally {

queryStack--;

}

if (queryStack == 0) {

for (DeferredLoad deferredLoad : deferredLoads) {

deferredLoad.load(); // handles circular references?

}

deferredLoads.clear();

if (configuration.getLocalCacheScope() == LocalCacheScope.STATEMENT) {

clearLocalCache(); // with STATEMENT scope the local cache is cleared right away

}

}

return list; // return the result

}

This query method first looks in the local cache localCache (a PerpetualCache) and returns the result if it is found; otherwise it calls queryFromDatabase to query the database. In addition, the first-level cache can be configured with STATEMENT scope, in which case the local cache is cleared after every query. Next, look at the queryFromDatabase method.

private <E> List<E> queryFromDatabase(MappedStatement ms, Object parameter, RowBounds rowBounds, ResultHandler resultHandler, CacheKey key, BoundSql boundSql) throws SQLException {

List<E> list;

localCache.putObject(key, EXECUTION_PLACEHOLDER);

try { // run the query

list = doQuery(ms, parameter, rowBounds, resultHandler, boundSql);

} finally {

localCache.removeObject(key);

}

localCache.putObject(key, list); // store the query result in the first-level cache

if (ms.getStatementType() == StatementType.CALLABLE) {

localOutputParameterCache.putObject(key, parameter);

}

return list;

}

In addition, operations such as update clear the local cache.

public int update(MappedStatement ms, Object parameter) throws SQLException {

ErrorContext.instance().resource(ms.getResource()).activity("executing an update").object(ms.getId());

if (closed) throw new ExecutorException("Executor was closed.");

clearLocalCache();

return doUpdate(ms, parameter);

}

Four: Implementation of the second-level cache

As mentioned above, the second-level cache relies mainly on the CachingExecutor wrapper, so we can understand the second-level cache by analysing this class directly.

1. Detailed explanation of CachingExecutor members and TransactionalCacheManager

public class CachingExecutor implements Executor {

private Executor delegate;

private TransactionalCacheManager tcm = new TransactionalCacheManager(); // manager for the TransactionalCache instances

TransactionalCacheManager manages the second-level cache objects used by CachingExecutor. It defines only one field, transactionalCaches:

private final Map<Cache, TransactionalCache> transactionalCaches = new HashMap<Cache, TransactionalCache>();

Its key is the second-level cache object used by CachingExecutor, and its value is the corresponding TransactionalCache object. Its implementation is shown below.

public class TransactionalCacheManager {

// maps each raw cache to its TransactionalCache wrapper

private Map<Cache, TransactionalCache> transactionalCaches = new HashMap<Cache, TransactionalCache>();

// each operation takes an extra Cache argument and simply calls the matching method on its TransactionalCache

public void clear(Cache cache) {

getTransactionalCache(cache).clear();

}

public Object getObject(Cache cache, CacheKey key) {

return getTransactionalCache(cache).getObject(key);

}

public void putObject(Cache cache, CacheKey key, Object value) {

getTransactionalCache(cache).putObject(key, value);

}

// commit all caches

public void commit() {

for (TransactionalCache txCache : transactionalCaches.values()) {

txCache.commit();

}

}

// roll back all caches

public void rollback() {

for (TransactionalCache txCache : transactionalCaches.values()) {

txCache.rollback();

}

}

// look up the TransactionalCache for a cache; if absent, create one and store it in the map keyed by the original cache

private TransactionalCache getTransactionalCache(Cache cache) {

TransactionalCache txCache = transactionalCaches.get(cache);

if (txCache == null) {

txCache = new TransactionalCache(cache);

transactionalCaches.put(cache, txCache);

}

return txCache;

}

}

2. Second-level cache lookup logic

public <E> List<E> query(MappedStatement ms, Object parameterObject, RowBounds rowBounds, ResultHandler resultHandler, CacheKey key, BoundSql boundSql)

throws SQLException {

Cache cache = ms.getCache(); // get the second-level cache

if (cache != null) {

flushCacheIfRequired(ms);

if (ms.isUseCache() && resultHandler == null) { // caching is enabled for this statement

ensureNoOutParams(ms, parameterObject, boundSql);

@SuppressWarnings("unchecked")

List<E> list = (List<E>) tcm.getObject(cache, key);

if (list == null) { // cache miss: run the query

list = delegate.<E> query(ms, parameterObject, rowBounds, resultHandler, key, boundSql);

tcm.putObject(cache, key, list); // issue #578. Query must be not synchronized to prevent deadlocks

}

return list; // return the cached or freshly queried result

}

}

return delegate.<E> query(ms, parameterObject, rowBounds, resultHandler, key, boundSql);

}

The code above simply checks the second-level cache first and returns on a hit. On a miss it calls query on the wrapped BaseExecutor (which checks the first-level cache first, returns on a hit, and otherwise queries the database and stores the result in the first-level cache).

Other operations such as update and commit clear the second-level cache (this is a simple strategy: as long as there are only queries, the second-level cache stays valid, and as soon as there is an update, the whole second-level cache must be cleared).

In addition:

By default, each namespace has one shared Cache for the second-level cache. That Cache instance is itself wrapped in decorators such as LruCache and SynchronizedCache.

This can be verified with a breakpoint at Cache cache = ms.getCache();. How the decorators are configured can be traced through the Mapper.xml parsing process.

Five: Summary of using the first-level and second-level caches

1. First-level cache configuration:

As the code shows, the first-level cache is on by default, and there is no setting or conditional that controls whether the first-level cache is queried or written. The first-level cache therefore cannot be turned off.

2. Second-level cache configuration:

The second-level cache is configured in three places:

a. The global cache switch in mybatis-config.xml

<settings><setting name="cacheEnabled" value="true"/></settings>

b. The second-level cache instance for each namespace, in mapper.xml

<cache/>, or a reference to the cache of another namespace: <cache-ref namespace="com.someone.application.data.SomeMapper"/>

c. The useCache attribute on the <select> node

The default is true. When it is set to false, the second-level cache does not apply to that select statement.

3. Cache scope

a. Scope of the first-level cache

The scope of the first-level cache is configurable:

<settings><setting name="localCacheScope" value="STATEMENT"/></settings>

The options are SESSION and STATEMENT, and the default is SESSION.

SESSION: all queries executed in a session (SqlSession) are cached.

STATEMENT: the local cache is used only during statement execution, and the first-level cache is cleared after each query completes.
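The difference between the two scopes can be sketched without MyBatis. `LocalCacheScopeSketch` and its nested `Scope` enum are hypothetical names, and the "database" is just a counter.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch of a session-local cache with a configurable scope.
class LocalCacheScopeSketch {
    enum Scope { SESSION, STATEMENT }

    private final Scope scope;
    private final Map<String, String> localCache = new HashMap<>();
    int databaseHits = 0; // counts simulated SQL executions

    LocalCacheScopeSketch(Scope scope) {
        this.scope = scope;
    }

    String query(String statement) {
        String result = localCache.get(statement);
        if (result == null) {
            databaseHits++;                       // simulated database round trip
            result = "rows for " + statement;
            localCache.put(statement, result);
        }
        if (scope == Scope.STATEMENT) {
            localCache.clear();                   // nothing survives past the statement
        }
        return result;
    }

    public static void main(String[] args) {
        LocalCacheScopeSketch session = new LocalCacheScopeSketch(Scope.SESSION);
        session.query("findUserById:40");
        session.query("findUserById:40");
        System.out.println(session.databaseHits);   // prints "1"

        LocalCacheScopeSketch statement = new LocalCacheScopeSketch(Scope.STATEMENT);
        statement.query("findUserById:40");
        statement.query("findUserById:40");
        System.out.println(statement.databaseHits); // prints "2"
    }
}
```

With STATEMENT scope the cache is still written during a query but emptied straight afterwards, so a repeated query always reaches the database.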

b. Scope of the second-level cache

The scope of the second-level cache is the namespace: multiple SqlSessions working with the same namespace share the same cache.

4. Suggestions for use

Using the second-level cache is not recommended. It is shared within the scope of a namespace, and although its life cycle is that of the SqlSessionFactory, any update clears the whole second-level cache. The second-level cache also has problems with queries that join tables: for example, if a query joins a table that belongs to another namespace and the data in that table changes, the second-level cache of this namespace does not know about it. There are some other problems as well, so it is not recommended.
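The joined-table problem can be reproduced with a small sketch. Everything here is hypothetical: the class, the "user" and "role" namespaces, and the maps that stand in for tables and per-namespace caches.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch: two namespaces, each with its own cache, over a shared "database".
class NamespaceCachesSketch {
    final Map<Integer, String> userTable = new HashMap<>();
    final Map<Integer, String> roleTable = new HashMap<>();

    final Map<Integer, String> userNamespaceCache = new HashMap<>();
    final Map<Integer, String> roleNamespaceCache = new HashMap<>();

    // Declared in the user namespace, but the query joins the role table.
    String findUserWithRole(int id) {
        return userNamespaceCache.computeIfAbsent(
                id, key -> userTable.get(key) + "/" + roleTable.get(key));
    }

    // Declared in the role namespace: only the role namespace cache is cleared.
    void updateRole(int id, String role) {
        roleTable.put(id, role);
        roleNamespaceCache.clear();
    }

    public static void main(String[] args) {
        NamespaceCachesSketch app = new NamespaceCachesSketch();
        app.userTable.put(1, "alice");
        app.roleTable.put(1, "admin");

        System.out.println(app.findUserWithRole(1)); // prints "alice/admin"
        app.updateRole(1, "guest");
        System.out.println(app.findUserWithRole(1)); // prints "alice/admin" (stale)
    }
}
```

The second read returns the old role because the update never touched the cache of the namespace that holds the joined result.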

In some extreme cases the first-level cache may also return dirty data. One suggestion is to change its scope to STATEMENT; the other is to avoid repeating the same query within one SqlSession in business logic.

end!