Contents

- How to configure the second-level cache in MyBatis

- Cache parsing process

- Cache-supported expiration policies

- Decorator source code

How to configure the second-level cache in MyBatis

Configure caching based on annotations

@CacheNamespace(blocking=true)

public interface PersonMapper {

@Select("select id, firstname, lastname from person")

public List<Person> findAll();

}

Configuring caching based on XML

<mapper namespace="org.apache.ibatis.submitted.cacheorder.Mapper2"> <cache/> </mapper>

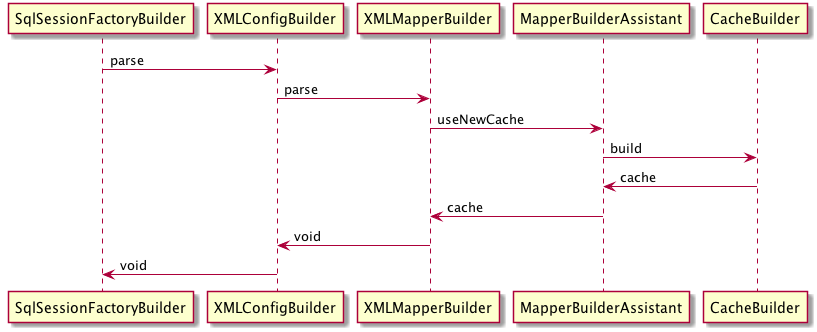

Cache parsing process

Why is a bare <cache/> element enough to enable the cache? The source code below shows that every cache attribute either has a default value or is optional.

private void cacheElement(XNode context) throws Exception {

if (context != null) {

// Read the configured cache type; the default is PERPETUAL

String type = context.getStringAttribute("type", "PERPETUAL");

// Resolve the type alias to its implementation class

Class<? extends Cache> typeClass = typeAliasRegistry.resolveAlias(type);

// Read the configured eviction policy; the default is LRU

String eviction = context.getStringAttribute("eviction", "LRU");

Class<? extends Cache> evictionClass = typeAliasRegistry.resolveAlias(eviction);

// Read the configured flush interval (no default; null when absent)

Long flushInterval = context.getLongAttribute("flushInterval");

//Get the configured cache size

Integer size = context.getIntAttribute("size");

//Whether read-only is configured, the default is false

boolean readWrite = !context.getBooleanAttribute("readOnly", false);

//Whether blocking is configured, the default is false

boolean blocking = context.getBooleanAttribute("blocking", false);

Properties props = context.getChildrenAsProperties();

builderAssistant.useNewCache(typeClass, evictionClass, flushInterval, size, readWrite, blocking, props);

}

}
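To make the defaulting behavior concrete, here is a minimal sketch that mimics the attribute lookups of cacheElement using only a plain map. It is not MyBatis code: the class name CacheDefaultsSketch and the helpers attr and resolve are hypothetical, and the attribute map stands in for the XNode.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical stand-in for the attribute lookups in cacheElement; not MyBatis code.
class CacheDefaultsSketch {

  // Returns the attribute value, or the fallback when the attribute is absent.
  static String attr(Map<String, String> attrs, String name, String fallback) {
    String value = attrs.get(name);
    return value != null ? value : fallback;
  }

  // Resolves the effective settings of a <cache/> element from its raw attributes.
  static Map<String, Object> resolve(Map<String, String> attrs) {
    Map<String, Object> settings = new HashMap<>();
    settings.put("type", attr(attrs, "type", "PERPETUAL"));
    settings.put("eviction", attr(attrs, "eviction", "LRU"));
    // flushInterval and size have no default: they stay null when not configured.
    String flush = attrs.get("flushInterval");
    settings.put("flushInterval", flush == null ? null : Long.valueOf(flush));
    String size = attrs.get("size");
    settings.put("size", size == null ? null : Integer.valueOf(size));
    // readWrite is the negation of readOnly, as in the original method.
    settings.put("readWrite", !Boolean.parseBoolean(attr(attrs, "readOnly", "false")));
    settings.put("blocking", Boolean.parseBoolean(attr(attrs, "blocking", "false")));
    return settings;
  }

  public static void main(String[] args) {
    // An empty attribute map corresponds to a bare <cache/> element.
    System.out.println(resolve(new HashMap<>()));

    // Overriding two attributes leaves the other defaults untouched.
    Map<String, String> custom = new HashMap<>();
    custom.put("eviction", "FIFO");
    custom.put("size", "512");
    System.out.println(resolve(custom));
  }
}
```

With an empty map, the sketch resolves to a PERPETUAL cache with LRU eviction, read-write and non-blocking, which matches the defaults visible in the method above.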

Cache-supported expiration policies

typeAliasRegistry.registerAlias("FIFO", FifoCache.class);

typeAliasRegistry.registerAlias("LRU", LruCache.class);

typeAliasRegistry.registerAlias("SOFT", SoftCache.class);

typeAliasRegistry.registerAlias("WEAK", WeakCache.class);

Basic implementation of cache

public class PerpetualCache implements Cache {

//cache ID

private final String id;

//cache

private Map<Object, Object> cache = new HashMap<Object, Object>();

public PerpetualCache(String id) {

this.id = id;

}

@Override

public String getId() {

return id;

}

@Override

public int getSize() {

return cache.size();

}

@Override

public void putObject(Object key, Object value) {

cache.put(key, value);

}

@Override

public Object getObject(Object key) {

return cache.get(key);

}

@Override

public Object removeObject(Object key) {

return cache.remove(key);

}

@Override

public void clear() {

cache.clear();

}

@Override

public ReadWriteLock getReadWriteLock() {

return null;

}

@Override

public boolean equals(Object o) {

if (getId() == null) {

throw new CacheException("Cache instances require an ID.");

}

if (this == o) {

return true;

}

if (!(o instanceof Cache)) {

return false;

}

Cache otherCache = (Cache) o;

return getId().equals(otherCache.getId());

}

@Override

public int hashCode() {

if (getId() == null) {

throw new CacheException("Cache instances require an ID.");

}

return getId().hashCode();

}

}

PerpetualCache itself never evicts anything, so how does MyBatis build a cache with an expiration policy? All of the MyBatis cache algorithms are decorators that add behavior on top of PerpetualCache. The decorator source code is analyzed below.
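Before reading the real decorators, it may help to see the pattern in isolation. The sketch below uses a deliberately reduced, hypothetical interface (MiniCache) instead of the real Cache interface, a map-backed base cache playing the role of PerpetualCache, and one made-up decorator that counts hits. None of these names come from MyBatis.

```java
import java.util.HashMap;
import java.util.Map;

// Decorator pattern in miniature; all type names here are hypothetical.
class DecoratorSketch {

  // Reduced interface; the real Cache interface has more methods.
  interface MiniCache {
    void put(Object key, Object value);
    Object get(Object key);
    int size();
  }

  // Plays the role of PerpetualCache: plain HashMap storage, no eviction.
  static class MapCache implements MiniCache {
    private final Map<Object, Object> store = new HashMap<>();
    public void put(Object key, Object value) { store.put(key, value); }
    public Object get(Object key) { return store.get(key); }
    public int size() { return store.size(); }
  }

  // A decorator: same interface, adds hit counting, forwards the rest to the delegate.
  static class HitCountingCache implements MiniCache {
    private final MiniCache delegate;
    private int requests;
    private int hits;

    HitCountingCache(MiniCache delegate) { this.delegate = delegate; }

    public void put(Object key, Object value) { delegate.put(key, value); }

    public Object get(Object key) {
      requests++;
      Object value = delegate.get(key);
      if (value != null) {
        hits++;
      }
      return value;
    }

    public int size() { return delegate.size(); }
    int hits() { return hits; }
    int requests() { return requests; }
  }

  public static void main(String[] args) {
    // Decorators wrap the base cache; more layers could be stacked the same way.
    HitCountingCache cache = new HitCountingCache(new MapCache());
    cache.put("k", "v");
    cache.get("k");        // hit
    cache.get("missing");  // miss
    System.out.println(cache.hits() + " hits out of " + cache.requests() + " requests");
  }
}
```

Because every decorator implements the same interface as the cache it wraps, layers can be combined freely, which is how a blocking, LRU-evicting cache can be assembled from independent classes.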

/**

* Simple blocking decorator

*

* When a key is not found in the cache, its lock stays held, so other threads requesting the same key must wait until the value has been put into the cache

* Because one lock object is kept per key and never removed, there is a risk of OOM when a large number of distinct queries are cached

* @author Eduardo Macarron

*

*/

public class BlockingCache implements Cache {

// Timeout for acquiring a lock, in milliseconds; 0 means wait indefinitely

private long timeout;

//delegates

private final Cache delegate;

// Maps each cache key to its lock

private final ConcurrentHashMap<Object, ReentrantLock> locks;

public BlockingCache(Cache delegate) {

this.delegate = delegate;

this.locks = new ConcurrentHashMap<Object, ReentrantLock>();

}

//Get the ID and delegate directly to the delegate for processing

@Override

public String getId() {

return delegate.getId();

}

@Override

public int getSize() {

return delegate.getSize();

}

// Store the value, then release the lock. Note that no lock is acquired in this method:

// getObject leaves the key locked after a cache miss, and this call is what releases it

@Override

public void putObject(Object key, Object value) {

try {

delegate.putObject(key, value);

} finally {

releaseLock(key);

}

}

@Override

public Object getObject(Object key) {

//acquire the lock

acquireLock(key);

// get cached data

Object value = delegate.getObject(key);

// On a hit, release the lock and return the value. On a miss, return while still holding the lock,

// so other threads asking for this key can only wait. The lock is released when putObject finishes, which is why putObject releases a lock it never acquired.

if (value != null) {

releaseLock(key);

}

return value;

}

@Override

public Object removeObject(Object key) {

// despite of its name, this method is called only to release locks

releaseLock(key);

return null;

}

@Override

public void clear() {

delegate.clear();

}

@Override

public ReadWriteLock getReadWriteLock() {

return null;

}

private ReentrantLock getLockForKey(Object key) {

ReentrantLock lock = new ReentrantLock();

ReentrantLock previous = locks.putIfAbsent(key, lock);

return previous == null ? lock : previous;

}

private void acquireLock(Object key) {

Lock lock = getLockForKey(key);

if (timeout > 0) {

try {

boolean acquired = lock.tryLock(timeout, TimeUnit.MILLISECONDS);

if (!acquired) {

throw new CacheException("Couldn't get a lock in " + timeout + " for the key " + key + " at the cache " + delegate.getId());

}

} catch (InterruptedException e) {

throw new CacheException("Got interrupted while trying to acquire lock for key " + key, e);

}

} else {

lock.lock();

}

}

private void releaseLock(Object key) {

ReentrantLock lock = locks.get(key);

if (lock.isHeldByCurrentThread()) {

lock.unlock();

}

}

public long getTimeout() {

return timeout;

}

public void setTimeout(long timeout) {

this.timeout = timeout;

}

}
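The hand-off between getObject and putObject is easier to see in a reduced form. The following is a sketch, not the MyBatis class: BlockingSketch, its method names and the key "user:1" are hypothetical, the backing store is a ConcurrentHashMap rather than a delegate cache, and timeouts are left out. Two threads request the same missing key, and only one of them performs the simulated load.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.locks.ReentrantLock;

// Reduced model of the lock hand-off in a blocking cache; names are hypothetical.
class BlockingSketch {
  private final Map<Object, Object> store = new ConcurrentHashMap<>();
  private final ConcurrentHashMap<Object, ReentrantLock> locks = new ConcurrentHashMap<>();

  Object get(Object key) {
    ReentrantLock lock = locks.computeIfAbsent(key, k -> new ReentrantLock());
    lock.lock();
    Object value = store.get(key);
    if (value != null) {
      lock.unlock(); // hit: nothing to load, release immediately
    }
    return value;    // miss: the caller keeps the lock until it calls put
  }

  void put(Object key, Object value) {
    try {
      store.put(key, value);
    } finally {
      ReentrantLock lock = locks.get(key);
      if (lock != null && lock.isHeldByCurrentThread()) {
        lock.unlock(); // releases the lock taken by the earlier miss
      }
    }
  }

  boolean isLocked(Object key) {
    ReentrantLock lock = locks.get(key);
    return lock != null && lock.isLocked();
  }

  public static void main(String[] args) throws InterruptedException {
    BlockingSketch cache = new BlockingSketch();
    AtomicInteger loads = new AtomicInteger();
    Runnable reader = () -> {
      if (cache.get("user:1") == null) {
        loads.incrementAndGet();       // simulated database query
        cache.put("user:1", "Alice");
      }
    };
    Thread a = new Thread(reader);
    Thread b = new Thread(reader);
    a.start();
    b.start();
    a.join();
    b.join();
    System.out.println("loads = " + loads.get()); // prints "loads = 1"
  }
}
```

Whichever thread takes the lock first sees the miss and loads the value; the other thread blocks in get and then finds the value already stored, so the simulated query runs once.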

public class LruCache implements Cache {

private final Cache delegate;

//key mapping table

private Map<Object, Object> keyMap;

// the oldest key

private Object eldestKey;

public LruCache(Cache delegate) {

this.delegate = delegate;

setSize(1024);

}

@Override

public String getId() {

return delegate.getId();

}

@Override

public int getSize() {

return delegate.getSize();

}

public void setSize(final int size) {

// Use LinkedHashMap to implement LRU: with accessOrder=true, entries are ordered by access, so the least recently accessed entry comes first (the eldest) and the most recently accessed comes last

keyMap = new LinkedHashMap<Object, Object>(size, .75F, true) {

private static final long serialVersionUID = 4267176411845948333L;

@Override

protected boolean removeEldestEntry(Map.Entry<Object, Object> eldest) {

// If the map now holds more entries than the configured size (1024 by default), evict the eldest entry

boolean tooBig = size() > size;

if (tooBig) {

//Assign the oldest element to eldestKey

eldestKey = eldest.getKey();

}

return tooBig;

}

};

}

@Override

public void putObject(Object key, Object value) {

delegate.putObject(key, value);

cycleKeyList(key);

}

@Override

public Object getObject(Object key) {

// Touch the key on every lookup so that keyMap moves it to the most recently used position

keyMap.get(key);

return delegate.getObject(key);

}

@Override

public Object removeObject(Object key) {

return delegate.removeObject(key);

}

@Override

public void clear() {

delegate.clear();

keyMap.clear();

}

@Override

public ReadWriteLock getReadWriteLock() {

return null;

}

private void cycleKeyList(Object key) {

// Put the current key into keyMap

keyMap.put(key, key);

//If the oldest key is not null, clear the cache of the oldest key

if (eldestKey != null) {

delegate.removeObject(eldestKey);

eldestKey = null;

}

}

}
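The LRU behavior comes entirely from LinkedHashMap in access order combined with removeEldestEntry. The standalone sketch below shows that mechanism with a capacity of 2; the class LruOrderSketch and the helper lruMap are hypothetical names, and unlike LruCache the map here holds the values directly instead of tracking keys for a delegate.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Demonstrates access-ordered LinkedHashMap eviction; names are hypothetical.
class LruOrderSketch {

  // Builds an access-ordered map that drops its eldest entry once it holds more than maxSize keys.
  static <K, V> Map<K, V> lruMap(final int maxSize) {
    return new LinkedHashMap<K, V>(16, 0.75f, true) {
      private static final long serialVersionUID = 1L;

      @Override
      protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
        return size() > maxSize;
      }
    };
  }

  public static void main(String[] args) {
    Map<String, Integer> map = lruMap(2);
    map.put("a", 1);
    map.put("b", 2);
    map.get("a");     // "a" becomes the most recently used entry
    map.put("c", 3);  // exceeds the limit, so the eldest entry "b" is evicted
    System.out.println(map.keySet()); // prints [a, c]
  }
}
```

Reading "a" before inserting "c" is what saves it: without that get, "a" would be the eldest entry and would be evicted instead of "b".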

public class FifoCache implements Cache {

private final Cache delegate;

// double ended queue

private final Deque<Object> keyList;

private int size;

public FifoCache(Cache delegate) {

this.delegate = delegate;

this.keyList = new LinkedList<Object>();

this.size = 1024;

}

@Override

public String getId() {

return delegate.getId();

}

@Override

public int getSize() {

return delegate.getSize();

}

public void setSize(int size) {

this.size = size;

}

@Override

public void putObject(Object key, Object value) {

cycleKeyList(key);

delegate.putObject(key, value);

}

@Override

public Object getObject(Object key) {

return delegate.getObject(key);

}

@Override

public Object removeObject(Object key) {

return delegate.removeObject(key);

}

@Override

public void clear() {

delegate.clear();

keyList.clear();

}

@Override

public ReadWriteLock getReadWriteLock() {

return null;

}

private void cycleKeyList(Object key) {

//Add the current key to the end of the queue

keyList.addLast(key);

// If the key queue is longer than the limit, remove the key at the head of the queue and its cache entry

if (keyList.size() > size) {

Object oldestKey = keyList.removeFirst();

delegate.removeObject(oldestKey);

}

}

}
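For contrast with LRU, the following sketch reproduces the FIFO bookkeeping with a capacity of 2. It is a simplified model rather than the MyBatis class: FifoSketch is a hypothetical name, and a HashMap replaces the delegate cache.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.Map;

// Simplified FIFO eviction model; names are hypothetical.
class FifoSketch {
  private final Map<Object, Object> store = new HashMap<>();
  private final Deque<Object> keys = new ArrayDeque<>();
  private final int maxSize;

  FifoSketch(int maxSize) {
    this.maxSize = maxSize;
  }

  void put(Object key, Object value) {
    // Record insertion order, then evict the oldest key if the queue is too long.
    keys.addLast(key);
    if (keys.size() > maxSize) {
      store.remove(keys.removeFirst());
    }
    store.put(key, value);
  }

  Object get(Object key) {
    // Reads never touch the queue, so they cannot postpone eviction.
    return store.get(key);
  }

  public static void main(String[] args) {
    FifoSketch cache = new FifoSketch(2);
    cache.put("a", 1);
    cache.put("b", 2);
    cache.get("a");     // has no effect on eviction order
    cache.put("c", 3);  // evicts "a", the first key inserted
    System.out.println(cache.get("a")); // prints null
    System.out.println(cache.get("b")); // prints 2
  }
}
```

The same access sequence that kept "a" alive under LRU evicts it here, because FIFO only considers insertion order.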