A very simple crawler, but because the request library does not support coroutines, if you want to crawl multiple pages of products, it will spend a lot of time on synchronous web page requests, which is simply turtle speed.

However, the official specially provides an aiohttp library to implement asynchronous web page requests and other functions. It can be regarded as an asynchronous version of the requests library, and we need to install it manually. Enter the command line to pip install aiohttpinstall.

Before writing the code, we need to get the user-agent and cookie first . First open the browser to visit Taobao, and then press f12 to enter the developer mode. If there is nothing, press f5 to refresh.

After clicking, you will see the cookie, and then you will see the user-agent.

Copy them, without cookies and user-agent crawlers, you can't crawl the information.

Copy them, without cookies and user-agent crawlers, you can't crawl the information.

import aiohttp

import re

import asyncio

sem=asyncio.Semaphore(10)#信号量,控制协程数,防止爬的过快

header={

"user-agent":"","Cookie":''}#header字典的value为刚刚复制的的user-agent和cookie

async def getExcel(url,header):

async with sem:

async with aiohttp.ClientSession() as session:

async with session.request('GET',url,headers=header) as result:

try:

info=[]#定义一个列表,用于存储商品名称和价格

text=await result.text()#异步获得页面信息

GoodsNames=re.findall(r'\"raw_title\"\:\".*?\"',text)#使用正则表达式获取页面的商品名

GoodsPrices=re.findall(r'\"view_price\"\:\"[\d\.]*\"',text)#使用正则表达式获取页面商品的价格

#将该页面的商品名称和价格保存到info列表中

for i in range(len(GoodsNames)):

try:

GoodsName=eval(GoodsNames[i].split(':')[1])

GoodsPrice=eval(GoodsPrices[i].split(':')[1])

info.append([GoodsName,GoodsPrice])

except:

info.append(['',''])

except:

pass

#将保存有商品信息的info列表写入csv文件,注意文件的打开模式一定要为'a',否则下一页面输入文件的新内容将覆盖本页面输入文件的内容。

f=open('goods_info.csv','a',encoding='utf-8')

for every in info:

f.write(','.join(every)+'\n')

def main(header):

goods=input('请输入想检索的商品:')

num=eval(input('请输入想检索的页面数:'))

start_url='https://s.taobao.com/search?q=' + goods

url_lst=[]

#生成要爬取的多个页面的url列表

for i in range(num):

url_lst.append(start_url+'&s='+str(44*i))

loop=asyncio.get_event_loop()#获取事件循环

tasks=[getExcel(url,header) for url in url_lst]#生成任务列表

loop.run_until_complete(asyncio.wait(tasks))#激活协程

if __name__=='__main__':

main(header)

Note:

1. When writing a file, use'a' in f.open(). If'w' is used, then the old content will be overwritten by the new one during circular writing.

2. The header dictionary at the beginning should be added to the user-agent and cookie value, otherwise no information can be crawled.

Compile and execute, enter the product you want to crawl and how many pages you want to crawl, and run.

请输入想检索的商品:羽绒服

请输入想检索的页面数:100

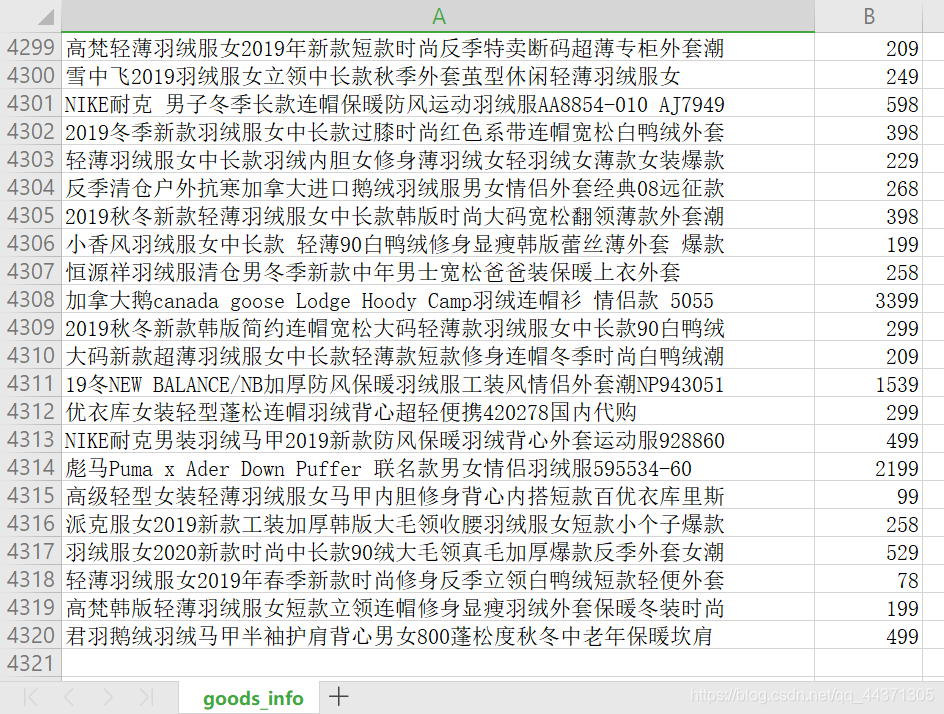

After running, a csv file will be generated in the same directory of the .py file, which can be opened with excel.

In just ten seconds, the price of thousands of similar products can be crawled, which is much higher than the efficiency of the simple request library. If necessary, you can also add functions, while crawling information such as sales volume and shipping location.