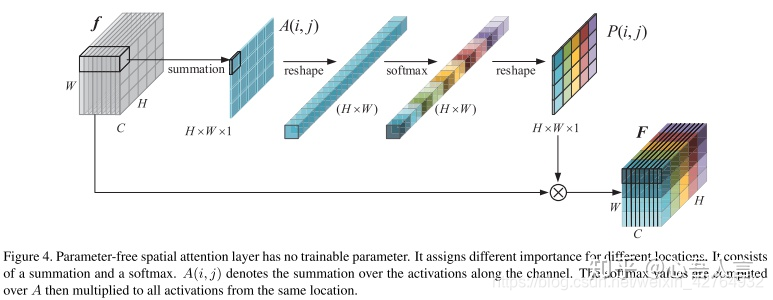

spatial attention

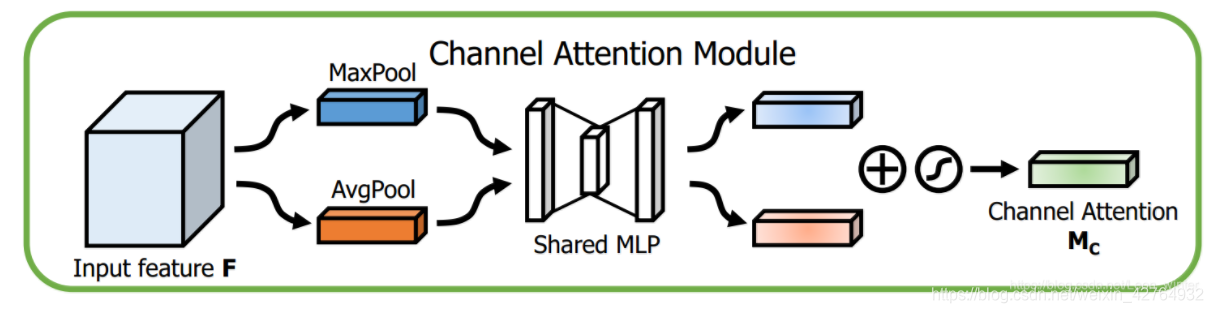

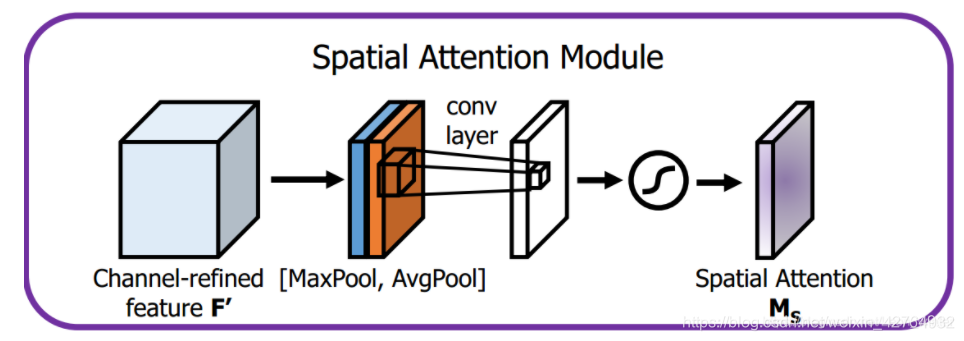

Channel attention assigns a weight to each channel, while spatial attention assigns a weight to each spatial location.
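As a minimal illustration of the difference, the sketch below applies both kinds of weights by broadcasting. The tensor sizes and the random weights are made up for the example and do not come from either paper discussed in these notes.

```python
import torch

# Hypothetical feature map: batch of 2, 8 channels, 4x4 spatial grid.
x = torch.randn(2, 8, 4, 4)

# Channel attention: one weight per channel, shared by every spatial location.
channel_weights = torch.rand(2, 8, 1, 1)
x_channel = x * channel_weights  # broadcasts over H and W

# Spatial attention: one weight per location, shared by every channel.
spatial_weights = torch.rand(2, 1, 4, 4)
x_spatial = x * spatial_weights  # broadcasts over C

print(x_channel.shape, x_spatial.shape)
```

In both cases the output keeps the shape of the input; only the axis along which the weights vary is different.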

Parameter-Free Spatial Attention Network for Person Re-Identification

The feature map is summed over the channel dimension to obtain an H×W matrix. This matrix is reshaped into a vector, passed through softmax, and reshaped back to H×W to obtain the attention matrix.
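The steps just described can be sketched in PyTorch as follows. This is a reading of the one-sentence description above, not the paper's reference code: the function name `parameter_free_spatial_attention` is hypothetical, and multiplying the map back onto the features at the end is an assumption about how it is used.

```python
import torch
import torch.nn.functional as F


def parameter_free_spatial_attention(x: torch.Tensor) -> torch.Tensor:
    """Map features (N, C, H, W) to an attention map (N, 1, H, W).

    No learnable parameters are involved: only sum, reshape and softmax.
    """
    n, _, h, w = x.shape
    summed = x.sum(dim=1)            # sum over channels -> (N, H, W)
    flat = summed.reshape(n, h * w)  # flatten locations -> (N, H*W)
    attn = F.softmax(flat, dim=1)    # normalize over all H*W locations
    return attn.reshape(n, 1, h, w)  # back to a spatial map


x = torch.randn(2, 16, 8, 4)
attn = parameter_free_spatial_attention(x)
weighted = x * attn  # assumed usage: reweight every channel by the same map
```

Because softmax is taken over all H×W positions, each attention map sums to 1 per sample, so the individual weights become small for large feature maps.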

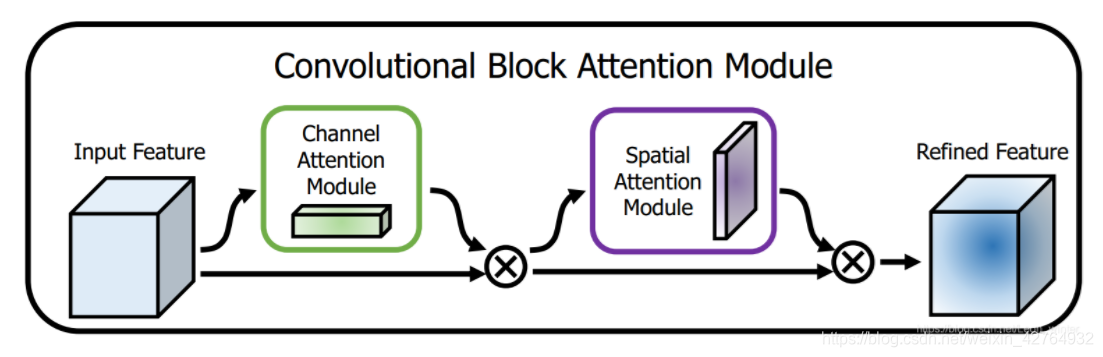

CBAM: Convolutional Block Attention Module

CBAM uses both channel attention and spatial attention, applied one after the other (channel first, then spatial).

channel attention
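These notes give no code for the channel branch. A minimal sketch, based on the CBAM paper's description (average-pooled and max-pooled descriptors go through a shared MLP, are summed, and then pass through a sigmoid), might look like this. The class name `ChannelAttentionModule`, the use of 1x1 convolutions as the MLP, the omitted biases, and the default `reduction=16` are assumptions for illustration.

```python
import torch
import torch.nn as nn


class ChannelAttentionModule(nn.Module):
    def __init__(self, channels: int, reduction: int = 16):
        super().__init__()
        # Squeeze each channel to a single value in two ways.
        self.avg_pool = nn.AdaptiveAvgPool2d(1)
        self.max_pool = nn.AdaptiveMaxPool2d(1)
        # Shared bottleneck MLP, written as 1x1 convolutions (assumption).
        self.shared_mlp = nn.Sequential(
            nn.Conv2d(channels, channels // reduction, kernel_size=1, bias=False),
            nn.ReLU(),
            nn.Conv2d(channels // reduction, channels, kernel_size=1, bias=False),
        )
        self.sigmoid = nn.Sigmoid()

    def forward(self, x):
        # (N, C, H, W) -> (N, C, 1, 1) for each pooled descriptor
        avg_out = self.shared_mlp(self.avg_pool(x))
        max_out = self.shared_mlp(self.max_pool(x))
        # One weight per channel, in [0, 1]
        return self.sigmoid(avg_out + max_out)


x = torch.randn(2, 32, 8, 8)
channel_mask = ChannelAttentionModule(channels=32, reduction=4)(x)
refined = x * channel_mask  # broadcast over H and W
```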

spatial attention

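```python
import torch
import torch.nn as nn


class SpatialAttentionModule(nn.Module):
    def __init__(self):
        super(SpatialAttentionModule, self).__init__()
        # 2 input channels (avg map + max map); padding=3 keeps H x W with a 7x7 kernel
        self.conv2d = nn.Conv2d(in_channels=2, out_channels=1, kernel_size=7, stride=1, padding=3)
        self.sigmoid = nn.Sigmoid()

    def forward(self, x):
        # Pool across the channel dimension: (N, C, H, W) -> (N, 1, H, W)
        avgout = torch.mean(x, dim=1, keepdim=True)
        maxout, _ = torch.max(x, dim=1, keepdim=True)
        # Stack both maps, convolve, and squash to an (N, 1, H, W) mask in [0, 1]
        out = torch.cat([avgout, maxout], dim=1)
        out = self.sigmoid(self.conv2d(out))
        return out
```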