1 Problem to be solved

When a cluster is shared by multiple users, quotas are needed to limit each user's resource usage, including the number of CPU cores, memory size, and the number of GPU cards. Otherwise some users can exhaust the resources, which makes allocation unfair.

In most cases, the cluster's native ResourceQuota mechanism solves this problem. But as the cluster grows and the variety of task types increases, the quota management rules need to be adjusted:

- ResourceQuota is designed for a single cluster, but in practice development and production often use a multi-cluster environment.

- Most tasks in such clusters are submitted through deployment, mpijob, and other high-level resource objects, and we want the quota to be checked when the high-level resource object is submitted. However, ResourceQuota computes resource requests at pod granularity, which cannot meet this need.

For these reasons, we need to implement quota management ourselves. Kubernetes provides a dynamic admission mechanism that allows custom plug-ins to decide whether a request is admitted. Our quota management solution builds on this mechanism.

2 Principles of cluster dynamic admission

After a request to the K8s cluster is received by the API server, it goes through the following stages in order:

- Authentication / authorization

- Admission control (mutating)

- Schema validation

- Admission control (validating)

- Persistence

Each of the first four stages processes the request and decides whether it is allowed to pass. Once all stages have passed, the request is persisted, that is, stored in the etcd database, and becomes a successful request. In the admission control (mutating) phase, mutating admission webhooks are called and can modify the content of the request. In the admission control (validating) phase, validating admission webhooks are called to check whether the request content meets certain requirements and to decide whether to allow or deny the request. These webhooks are extensible: they can be developed independently and deployed to the cluster.

A webhook in the admission control (mutating) phase can also inspect and reject a request, but the order in which mutating webhooks are called is not guaranteed, so it cannot prevent other webhooks from modifying the requested resources afterwards. Therefore, we deploy a validating admission webhook for quota verification. It is called in the admission control (validating) phase and checks the requested resources, which achieves the goal of resource quota management.
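To make the allow/deny contract concrete, here is a minimal sketch of a validating webhook endpoint. The struct types are simplified mirrors of the AdmissionReview JSON fields (a real service would more likely import k8s.io/api/admission/v1), and the names NewValidateHandler, QuotaChecker, and Review are hypothetical helpers introduced only for this illustration. The handler is exercised in-process instead of being served over HTTPS.

```go
package main

import (
	"bytes"
	"encoding/json"
	"errors"
	"fmt"
	"net/http"
	"net/http/httptest"
)

// Simplified mirrors of the AdmissionReview JSON (assumption: only the
// fields needed for an allow/deny decision are modelled here).
type AdmissionRequest struct {
	UID       string          `json:"uid"`
	Operation string          `json:"operation"`
	Object    json.RawMessage `json:"object,omitempty"`
}

type Status struct {
	Message string `json:"message,omitempty"`
}

type AdmissionResponse struct {
	UID     string  `json:"uid"`
	Allowed bool    `json:"allowed"`
	Status  *Status `json:"status,omitempty"`
}

type AdmissionReview struct {
	APIVersion string             `json:"apiVersion"`
	Kind       string             `json:"kind"`
	Request    *AdmissionRequest  `json:"request,omitempty"`
	Response   *AdmissionResponse `json:"response,omitempty"`
}

// QuotaChecker is a hypothetical hook that makes the actual quota decision.
type QuotaChecker func(req *AdmissionRequest) (allowed bool, reason string)

// NewValidateHandler decodes an AdmissionReview, asks the checker, and
// answers with a response that echoes the request UID.
func NewValidateHandler(check QuotaChecker) http.HandlerFunc {
	return func(w http.ResponseWriter, r *http.Request) {
		var review AdmissionReview
		if err := json.NewDecoder(r.Body).Decode(&review); err != nil || review.Request == nil {
			http.Error(w, "malformed AdmissionReview", http.StatusBadRequest)
			return
		}
		allowed, reason := check(review.Request)
		resp := &AdmissionResponse{UID: review.Request.UID, Allowed: allowed}
		if !allowed {
			resp.Status = &Status{Message: reason}
		}
		review.Request, review.Response = nil, resp
		w.Header().Set("Content-Type", "application/json")
		_ = json.NewEncoder(w).Encode(review) // write errors are ignored in this sketch
	}
}

// Review sends one AdmissionReview through the handler in-process.
func Review(h http.Handler, in AdmissionReview) (*AdmissionResponse, error) {
	body, err := json.Marshal(in)
	if err != nil {
		return nil, err
	}
	rec := httptest.NewRecorder()
	h.ServeHTTP(rec, httptest.NewRequest(http.MethodPost, "/validate", bytes.NewReader(body)))
	var out AdmissionReview
	if err := json.Unmarshal(rec.Body.Bytes(), &out); err != nil {
		return nil, err
	}
	if out.Response == nil {
		return nil, errors.New("no response in AdmissionReview")
	}
	return out.Response, nil
}

func main() {
	// Hypothetical checker: deny every CREATE, allow everything else.
	h := NewValidateHandler(func(req *AdmissionRequest) (bool, string) {
		if req.Operation == "CREATE" {
			return false, "quota exceeded"
		}
		return true, ""
	})
	resp, err := Review(h, AdmissionReview{
		APIVersion: "admission.k8s.io/v1",
		Kind:       "AdmissionReview",
		Request:    &AdmissionRequest{UID: "demo-uid", Operation: "CREATE"},
	})
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(resp.Allowed, resp.Status.Message)
}
```

Passing the decision in as a function keeps the HTTP plumbing separate from the quota logic, so the same handler could serve several resource types.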

3 Solution

3.1 How to deploy the verification service in the cluster

Using a custom validating admission webhook in a K8s cluster requires deploying:

- A ValidatingWebhookConfiguration (the cluster must have ValidatingAdmissionWebhook enabled), which defines which resource objects (pod, deployment, mpijob, etc.) are verified and provides the callback address of the service that actually performs the verification. Exposing the verification service address through an in-cluster Service is recommended.

- The service that actually performs the verification, reachable at the address configured in the ValidatingWebhookConfiguration.

In a single-cluster environment, deploy the verification service in the cluster as a deployment. In a multi-cluster environment, you can choose to:

- Use virtual kubelet, cluster federation, or similar solutions to merge multiple clusters into a single cluster, which reduces the problem to a single-cluster deployment.

- Deploy the verification service as a deployment in one or more clusters. This requires network connectivity between the service and every cluster.

Note that in both single-cluster and multi-cluster environments, the verification service has to monitor resources, which is generally done by a single instance. Leader election is therefore required.

3.2 How to implement verification service

3.2.1 Validation service architecture design

3.2.1.1 Basic component composition

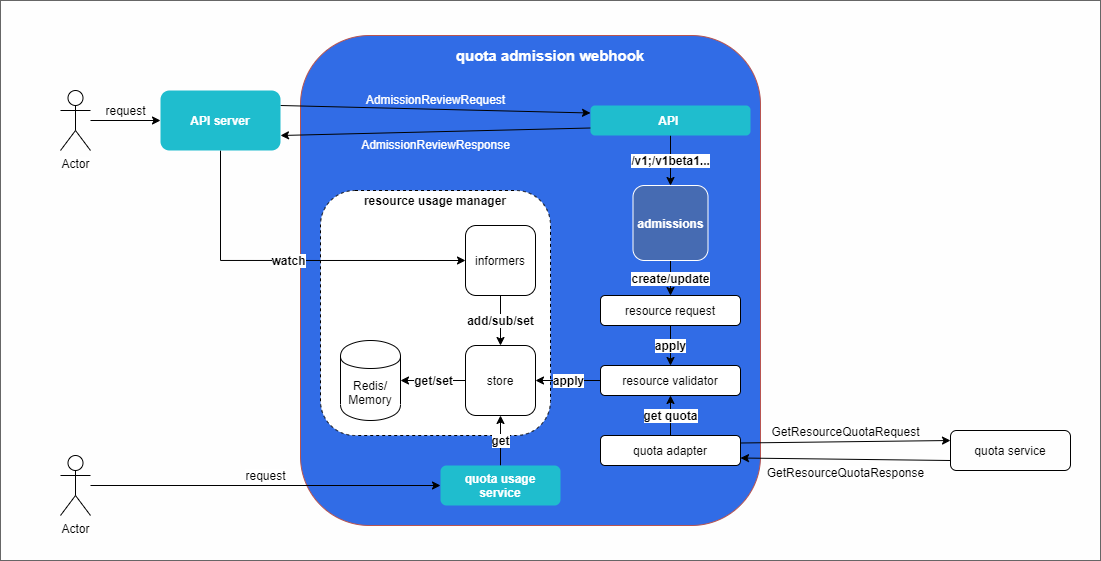

- API server: the entry point for cluster requests; it calls the validating admission webhook to verify each request.

- API: the admission service interface, using the AdmissionReview data structure agreed with the cluster as request and response.

- Quota usage service: an interface for querying resource usage.

- Admissions: the admission service implementations, covering admission for different resource types such as deployment and mpijob.

- Resource validator: performs quota verification for resource requests.

- Quota adapter: connects to an external quota service for the validator to query.

- Resource usage manager: maintains resource usage and makes the quota decision.

- Informers: use the watch mechanism provided by K8s to monitor cluster resources, including deployment and mpijob, in order to maintain the current resource usage.

- Store: stores resource usage data; it can be backed by the local memory of the service or by a Redis service.

3.2.1.2 The basic process of resource quota judgment

Taking a user creating a deployment resource as an example:

1. The deployment resource created by the user must carry an annotation that specifies the application group, for example ti.cloud.tencent.com/group-id: 1, which means it uses the resources of application group 1. (If no application group is given, then depending on the scenario the request is either rejected outright or assigned to a default application group, for example application group 0.)

2. The request is received by the API server. Because the ValidatingWebhookConfiguration is correctly configured in the cluster, in the admission control (validating) phase the API server calls the API of the validating admission webhook deployed in the cluster, using the K8s-defined AdmissionReviewRequest structure as the request and expecting an AdmissionReviewResponse structure in return.

3. When the quota verification service receives the request, it enters the admission logic that handles deployment resources and, depending on whether the operation is CREATE or UPDATE, calculates the resources that the request newly applies for or releases.

4. The requested resources are extracted from the deployment's spec.template.spec.containers[*].resources.requests field, for example cpu: 2 and memory: 1Gi, denoted apply.

5. The resource validator asks the quota adapter for the quota of application group 1, for example cpu: 10 and memory: 20Gi, denoted quota. Together with the apply value obtained above, it requests resources from the resource usage manager.

6. The resource usage manager has been monitoring deployment resource usage through the informers all along and maintains it in the store. The store can use local memory, which has no external dependency, or Redis as the storage medium, which makes it easier to scale the service.

7. When the resource usage manager receives the request from the resource validator, it looks up in the store the resources currently occupied by application group 1, for example cpu: 8 and memory: 16Gi, denoted usage. If apply + usage <= quota, the quota is not exceeded, the request passes, and the result is finally returned to the API server.
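The quota decision in the steps above can be sketched as follows. This is a simplified illustration, not the service's actual code: the Resources type stores CPU in millicores and memory in bytes (a real implementation would more likely use resource.Quantity from k8s.io/apimachinery), and GroupID, SumRequests, and WithinQuota are hypothetical names. Defaulting to group "0" is only one of the two options mentioned for requests without a group annotation.

```go
package main

import "fmt"

// Resources holds amounts in base units: CPU in millicores, memory in bytes.
type Resources struct {
	MilliCPU int64
	MemBytes int64
}

// Add returns the per-dimension sum of r and o.
func (r Resources) Add(o Resources) Resources {
	return Resources{MilliCPU: r.MilliCPU + o.MilliCPU, MemBytes: r.MemBytes + o.MemBytes}
}

// Fits reports whether r stays within limit on every dimension.
func (r Resources) Fits(limit Resources) bool {
	return r.MilliCPU <= limit.MilliCPU && r.MemBytes <= limit.MemBytes
}

const groupAnnotation = "ti.cloud.tencent.com/group-id"

// GroupID reads the application group from the annotations and falls back
// to the default group "0" when none is set (assumed policy for this sketch).
func GroupID(annotations map[string]string) string {
	if id, ok := annotations[groupAnnotation]; ok && id != "" {
		return id
	}
	return "0"
}

// SumRequests adds up the per-container requests, mirroring
// spec.template.spec.containers[*].resources.requests.
func SumRequests(containers []Resources) Resources {
	var total Resources
	for _, c := range containers {
		total = total.Add(c)
	}
	return total
}

// WithinQuota implements the check apply + usage <= quota.
func WithinQuota(apply, usage, quota Resources) bool {
	return apply.Add(usage).Fits(quota)
}

const Gi = int64(1) << 30

func main() {
	group := GroupID(map[string]string{groupAnnotation: "1"})
	apply := SumRequests([]Resources{{MilliCPU: 2000, MemBytes: 1 * Gi}})
	usage := Resources{MilliCPU: 8000, MemBytes: 16 * Gi}
	quota := Resources{MilliCPU: 10000, MemBytes: 20 * Gi}

	// 2 + 8 <= 10 cores and 1Gi + 16Gi <= 20Gi, so the request fits.
	fmt.Println(group, WithinQuota(apply, usage, quota)) // prints "1 true"

	// With 9 cores already in use, 2 + 9 > 10 and the request is rejected.
	usage.MilliCPU = 9000
	fmt.Println(group, WithinQuota(apply, usage, quota)) // prints "1 false"
}
```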

The above is the basic process for realizing resource quota checking. There are some details worth adding:

- The API of the verification service must be exposed over HTTPS.

- For each resource type, such as deployment and mpijob, the corresponding admission and informer must be implemented.

- Each resource type may have several versions. For example, deployment has apps/v1, apps/v1beta1, and so on, and these need to be handled according to the actual situation of the cluster.

- When an UPDATE request is received, determine, depending on the resource type, whether the pod-related fields have changed and whether the existing pod instances need to be rebuilt, so that the amount of requested resources is calculated correctly.

- Besides the resource types built into K8s, such as cpu, quota control may be needed for custom resource types, such as GPU type. In that case the resource request must carry a pre-agreed annotation, for example ti.cloud.tencent.com/gpu-type: V100.

- While the resource usage manager maintains usage, processes requests, and makes quota decisions, two problems can arise: competition between resource requests, and resource creation that actually fails even though the quota check passed. The following sections explain these two problems.

3.2.2 About resource application competition

Due to the existence of concurrent resource requests:

- usage must be updated immediately after a resource request is admitted.

- Updates to usage require concurrency control.

In step 7 above, when the resource usage manager verifies the quota, it needs to query the resources currently occupied by the application group, that is, the group's usage value. This usage value is updated and maintained by the informers, but there is a delay between a resource request passing the validating admission webhook and the informers observing it. Other resource requests may arrive during this window, so the usage value is inaccurate. Therefore, usage must be updated immediately after a resource request is admitted.

And the update of usage requires concurrency control, for example:

- Application group 2 has a quota of cpu: 10 and a usage of cpu: 8.

- Two requests arrive, deployment1 and deployment2. Both use application group 2 and each applies for cpu: 2.

- deployment1 is judged first: apply + usage = cpu: 10, which does not exceed the quota, so the deployment1 request is allowed through.

- usage is updated to cpu: 10.

- deployment2 is judged next: because usage has been updated to cpu: 10, apply + usage = cpu: 12, which exceeds the quota, so the request is rejected.

This process shows that usage is a critical shared variable that must be queried and updated in sequence. If deployment1 and deployment2 both read usage as cpu: 8 without any control, both requests pass and the actual quota limit is exceeded. In this way, a user may occupy more resources than the specified quota.

Possible solutions:

- Put resource requests into a queue and have a single service instance consume and process them one by one.

- Lock the critical section around the shared variable usage, and query and update the value of usage while holding the lock.
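The second option, locking the critical section, can be sketched as below. UsageManager, TryReserve, and AdmitConcurrently are hypothetical names, and usage is tracked as whole CPU cores per application group to keep the example short. The point is that the check and the update happen under the same lock, so two concurrent requests can never both read the old usage value.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// UsageManager keeps per-group CPU usage and quota (in cores) behind a mutex.
type UsageManager struct {
	mu    sync.Mutex
	usage map[string]int64
	quota map[string]int64
}

// TryReserve checks apply + usage <= quota and, if the check passes,
// updates usage inside the same critical section.
func (m *UsageManager) TryReserve(group string, apply int64) bool {
	m.mu.Lock()
	defer m.mu.Unlock()
	if m.usage[group]+apply > m.quota[group] {
		return false
	}
	m.usage[group] += apply
	return true
}

// Usage returns the current usage of a group.
func (m *UsageManager) Usage(group string) int64 {
	m.mu.Lock()
	defer m.mu.Unlock()
	return m.usage[group]
}

// AdmitConcurrently submits every request from its own goroutine and
// returns how many of them were admitted.
func AdmitConcurrently(m *UsageManager, group string, applies []int64) int64 {
	var wg sync.WaitGroup
	var admitted int64
	for _, a := range applies {
		wg.Add(1)
		go func(apply int64) {
			defer wg.Done()
			if m.TryReserve(group, apply) {
				atomic.AddInt64(&admitted, 1)
			}
		}(a)
	}
	wg.Wait()
	return admitted
}

func main() {
	// Application group 2: quota 10 cores, 8 already in use.
	m := &UsageManager{
		usage: map[string]int64{"2": 8},
		quota: map[string]int64{"2": 10},
	}
	// deployment1 and deployment2 each apply for 2 cores at the same time.
	admitted := AdmitConcurrently(m, "2", []int64{2, 2})
	fmt.Println(admitted, m.Usage("2")) // prints "1 10": exactly one request fits
}
```

Which of the two requests wins depends on scheduling, but the quota is respected either way.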

3.2.3 About resource creation failure

Because of resource competition, we require usage to be updated immediately after a resource request is admitted, but this brings a new problem. After stage 4, admission control (validating), the requested resource object enters stage 5, persistence, and this stage can still fail (for example, another webhook rejects the request, the cluster goes down, or etcd fails). As a result, the task is never actually written to the cluster database. In that case the usage value was already increased during the validation phase, so quota that is not actually occupied is counted as occupied by the task. A user may then be unable to use the full quota they were granted.

To solve this problem, a background service periodically updates the usage value of every application group globally. If usage was increased during the validation phase but the task failed to be written to the database, the global update eventually resets usage to the accurate value, that is, the application group's actual resource usage in the cluster at that moment.
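A minimal sketch of such a periodic global update follows. Workload, Recompute, and RunResync are hypothetical names, and the list of persisted objects is passed in as a function; in a real service it would presumably come from the informers. Usage is again counted in whole CPU cores.

```go
package main

import (
	"context"
	"fmt"
	"time"
)

// Workload is a hypothetical record of one object that was actually
// persisted in the cluster.
type Workload struct {
	Group string
	CPU   int64
}

// Recompute rebuilds per-group usage from persisted objects only, which
// discards reservations whose objects never reached the database.
func Recompute(persisted []Workload) map[string]int64 {
	usage := make(map[string]int64)
	for _, w := range persisted {
		usage[w.Group] += w.CPU
	}
	return usage
}

// RunResync calls apply with a freshly recomputed usage map on every tick
// until the context is cancelled.
func RunResync(ctx context.Context, every time.Duration, list func() []Workload, apply func(map[string]int64)) {
	ticker := time.NewTicker(every)
	defer ticker.Stop()
	for {
		select {
		case <-ctx.Done():
			return
		case <-ticker.C:
			apply(Recompute(list()))
		}
	}
}

func main() {
	// Usage says 10 cores because a 2-core request passed validation,
	// but its object was never persisted: only 8 cores really exist.
	usage := map[string]int64{"1": 10}
	persisted := func() []Workload {
		return []Workload{{Group: "1", CPU: 5}, {Group: "1", CPU: 3}}
	}

	ctx, cancel := context.WithTimeout(context.Background(), 200*time.Millisecond)
	defer cancel()
	// Runs in the calling goroutine, so the usage map needs no lock here.
	RunResync(ctx, 10*time.Millisecond, persisted, func(fresh map[string]int64) {
		usage = fresh
	})
	fmt.Println(usage["1"])
}
```

A production version would have to swap the recomputed map in under the same lock that protects usage during admission.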

However, in rare cases the global update happens at exactly the wrong moment: a resource creation request that will eventually be persisted to etcd has passed webhook validation but has not yet been persisted. Such moments mean that the global update can reintroduce the problem of users occupying more than their quota.

In the previous example, suppose a global update happens right after deployment1 updates the usage value. At this point the information about deployment1 has not yet been written to etcd, so the global update resets usage to the old value. This allows deployment2 to pass as well, and the quota limit is exceeded.

In general, though, the time from validation to persistence is very short. As long as global updates run at a low frequency, such cases rarely occur. If there is further demand in the future, more sophisticated solutions can be used to avoid this problem.

3.2.4 How native ResourceQuota works

The native ResourceQuota quota management in a K8s cluster handles the resource request competition and resource creation failure problems described above with similar solutions:

Immediate updates to resolve request competition

After the quota is checked, the resource usage is updated immediately. The optimistic locking built into K8s provides concurrency control over the resource (see the implementation of checkQuotas in the K8s source code for details) and solves the resource competition problem.

The most relevant source code in checkQuotas:

```go
// now go through and try to issue updates. Things get a little weird here:
// 1. check to see if the quota changed. If not, skip.
// 2. if the quota changed and the update passes, be happy
// 3. if the quota changed and the update fails, add the original to a retry list
var updatedFailedQuotas []corev1.ResourceQuota
var lastErr error
for i := range quotas {
	newQuota := quotas[i]

	// if this quota didn't have its status changed, skip it
	if quota.Equals(originalQuotas[i].Status.Used, newQuota.Status.Used) {
		continue
	}

	if err := e.quotaAccessor.UpdateQuotaStatus(&newQuota); err != nil {
		updatedFailedQuotas = append(updatedFailedQuotas, newQuota)
		lastErr = err
	}
}
```

Here quotas is the quota information after the check, and the newQuota.Status.Used field records the resource usage of the quota. If a resource request against the quota has passed, then by the time this code runs the newly requested amount has already been added to the Used field. The Equals function is then called: if the Used field has not changed, there is no new resource request and the quota is skipped. Otherwise, e.quotaAccessor.UpdateQuotaStatus is called to immediately update the quota information in etcd according to newQuota.Status.Used.
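The optimistic-locking idea behind this update can be illustrated with a small, self-contained sketch. It is not the Kubernetes implementation: QuotaStatus, Store, and Reserve are hypothetical stand-ins, and the Version field only plays the role that resourceVersion plays for real API objects. A write is accepted only if it was derived from the currently stored version; otherwise the writer re-reads and checks again.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
)

// ErrConflict signals that the stored object changed since it was read.
var ErrConflict = errors.New("conflict: stored version has changed")

// QuotaStatus is a simplified stand-in for a stored quota status.
type QuotaStatus struct {
	Version int64 // plays the role of resourceVersion
	Used    int64
	Hard    int64
}

// Store simulates a server that rejects writes based on a stale version.
type Store struct {
	mu  sync.Mutex
	cur QuotaStatus
}

// Get returns a copy of the currently stored status.
func (s *Store) Get() QuotaStatus {
	s.mu.Lock()
	defer s.mu.Unlock()
	return s.cur
}

// Update succeeds only if next was derived from the stored version.
func (s *Store) Update(next QuotaStatus) error {
	s.mu.Lock()
	defer s.mu.Unlock()
	if next.Version != s.cur.Version {
		return ErrConflict
	}
	next.Version++
	s.cur = next
	return nil
}

// Reserve reads, checks, and writes without holding a lock across the
// whole sequence; on conflict it re-reads and checks against fresh state.
func Reserve(s *Store, apply int64) bool {
	for {
		q := s.Get()
		if q.Used+apply > q.Hard {
			return false
		}
		q.Used += apply
		if err := s.Update(q); err == nil {
			return true
		}
		// Another writer won the race: loop and try again.
	}
}

func main() {
	s := &Store{cur: QuotaStatus{Used: 8, Hard: 10}}
	results := make(chan bool, 2)
	for i := 0; i < 2; i++ {
		go func() { results <- Reserve(s, 2) }()
	}
	first, second := <-results, <-results
	// Exactly one of the two 2-core requests fits into the remaining 2 cores.
	fmt.Println(first != second, s.Get().Used) // prints "true 10"
}
```

The retry loop terminates because every conflict means that some other writer made progress.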

Periodic global updates to resolve creation failures

The resource usage is periodically recalculated globally (see the implementation of Run in the K8s source code for details) to correct for possible resource creation failures.

The most relevant source code in Run:

```go
// the timer for how often we do a full recalculation across all quotas
go wait.Until(func() { rq.enqueueAll() }, rq.resyncPeriod(), stopCh)
```

Here rq is a reference to the controller object for ResourceQuota. The controller's Run loop continuously manages all ResourceQuota objects. Within the loop, enqueueAll is called periodically without interruption: all ResourceQuota objects are pushed into the queue and their Used values are recalculated, which performs the global update.
