RNNs seem to be better at storing and updating information, while CNNs seem to be better at precise feature extraction. RNN input and output lengths are flexible, while CNN input and output sizes are relatively fixed.

1 Question

When recurrent neural networks (RNNs) come up, our first association is probably time series.

Indeed, RNNs are good at time-related applications (natural language, video recognition, audio analysis). But why do CNNs struggle with time series while RNNs handle them well? And why do we say that an RNN has a certain memory capacity?

2 A simple prediction

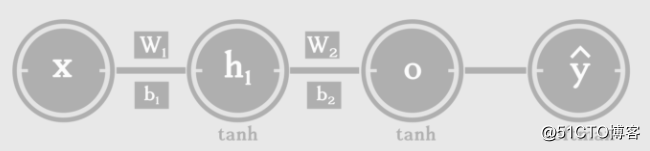

Mathematically, to predict the word y that follows a word x, we need three main elements: the input word x; the context state h1 of x; and a function, such as softmax, that outputs the next word from x and h1:

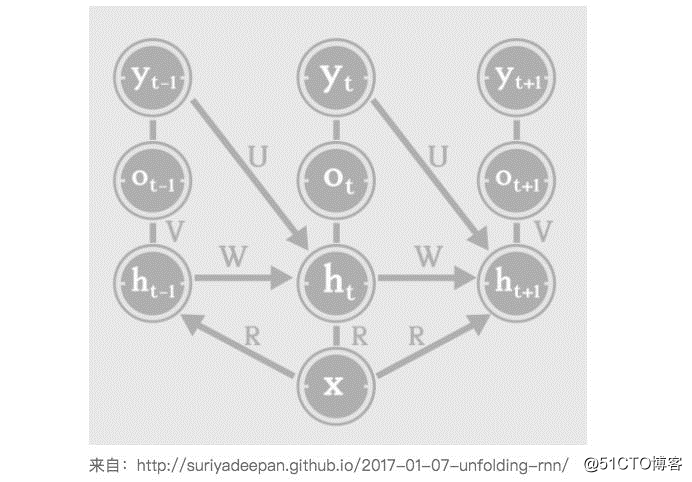

(Figure from: http://suriyadeepan.github.io/2017-01-07-unfolding-rnn/)

The mathematical calculation is as follows:
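One common way to write this calculation, assuming a single tanh hidden layer (the weight matrices U, V and bias vectors b, c are our own illustrative notation, not taken from the original figure):

$$h_1 = \tanh(U x + b), \qquad y = \mathrm{softmax}(V h_1 + c)$$

Here y is a probability distribution over the vocabulary, and the predicted next word is the entry with the highest probability.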

The above is a very simple directed acyclic graph (DAG), but it only predicts a single word at time t. A prediction this simple could even be handled by a CNN or another simple prediction model.

3 RNN introduction

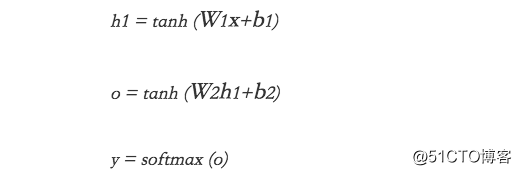

However, CNNs are not good at updating or storing state. At the next time step t+1, the context (state) of word x changes:

The difference between the recurrent unit structure of RNNs and the convolutional structure of CNNs (that is, their different ways of storing information) is therefore, to some extent, why RNNs are good at time-series problems. Even if we replace the recurrent unit with another model, we still need a loop structure like the one in the figure above to update and store the context state at each time step.

Therefore, in the application of time series, it is so important to update the state

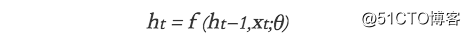

at each point in time . We need a network like rnn: at each point in time, the same update function f is used to update the context state. The state is based on the state at the previous time point t-1 and the input of this signal xt:

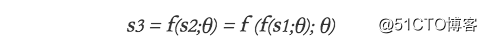
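A minimal NumPy sketch of this recurrence, assuming f is a vanilla (Elman-style) tanh cell with parameters W, U, b (our own illustrative choice; the text only requires that the same f be reused at every step):

```python
import numpy as np

def f(s_prev, x_t, W, U, b):
    # Assumed vanilla cell: s_t = tanh(W s_{t-1} + U x_t + b)
    return np.tanh(W @ s_prev + U @ x_t + b)

def run_rnn(xs, s0, W, U, b):
    # Apply the SAME f, with the SAME parameters, at every time step.
    states = []
    s = s0
    for x_t in xs:
        s = f(s, x_t, W, U, b)
        states.append(s)
    return states

rng = np.random.default_rng(0)
hidden, inp = 4, 3
W = rng.normal(scale=0.5, size=(hidden, hidden))
U = rng.normal(scale=0.5, size=(hidden, inp))
b = np.zeros(hidden)

# The same parameters handle sequences of different lengths.
short_seq = run_rnn(rng.normal(size=(2, inp)), np.zeros(hidden), W, U, b)
long_seq = run_rnn(rng.normal(size=(7, inp)), np.zeros(hidden), W, U, b)
print(len(short_seq), len(long_seq), long_seq[-1].shape)  # 2 7 (4,)
```

Because the loop runs once per input with shared weights, nothing in the parameters fixes the sequence length, which is where the flexible input size mentioned at the start comes from.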

In addition, the recurrent unit of an RNN has a natural Markov-like property: the current state s_3 already contains the information from the earlier time steps (the θ after each semicolon denotes the parameters with which the previous states are encoded):

$$s_3 = f(s_2, x_3; \theta) = f(f(s_1, x_2; \theta), x_3; \theta)$$

The current prediction therefore only needs to be made from the current state. This strong capacity for storing state information appears to be the main strength of RNN units (CNNs seem to be better at precise feature extraction).
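To see this memory effect concretely, the sketch below computes the third state both as nested calls of f and as a loop, then changes only the first input. It again assumes a tanh cell, and the parameter dictionary `theta` is a hypothetical stand-in for θ:

```python
import numpy as np

rng = np.random.default_rng(1)
hidden, inp = 4, 3
theta = {
    "W": rng.normal(scale=0.5, size=(hidden, hidden)),
    "U": rng.normal(scale=0.5, size=(hidden, inp)),
    "b": np.zeros(hidden),
}

def f(s_prev, x_t, theta):
    # Shared update f(s_{t-1}, x_t; theta), assumed here to be a tanh cell.
    return np.tanh(theta["W"] @ s_prev + theta["U"] @ x_t + theta["b"])

x1, x2, x3 = rng.normal(size=(3, inp))
s0 = np.zeros(hidden)

# Unrolled (nested) form: s3 = f(f(f(s0, x1), x2), x3)
s3_unrolled = f(f(f(s0, x1, theta), x2, theta), x3, theta)

# Loop form: same function, same theta, applied step by step.
s = s0
for x_t in (x1, x2, x3):
    s = f(s, x_t, theta)
s3_loop = s

# Change only the first input: s3 changes too, so it "remembers" x1.
s3_changed = f(f(f(s0, x1 + 1.0, theta), x2, theta), x3, theta)
print(np.allclose(s3_unrolled, s3_loop))  # True
print(np.allclose(s3_unrolled, s3_changed))
```

A predictor that reads only s3 therefore still has access to information from x1, even though it never sees x1 directly.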

A traditional RNN takes one word as input at each time step t and produces another word as output, but real applications are not always that simple.

4 RNN variants

Finally, let's look at some RNN variants.

Vector to Sequence

This structure has applications such as generating a caption for a picture:

For this kind of problem, the RNN takes the picture's feature vector x as input (for example, the last hidden layer of a CNN) and outputs a caption sentence whose words y are generated one at a time.

This requires the RNN to generate an entire time series from a single vector:

Of course, the sequence is still generated by recurrently updating the internal state, one time step after another.
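A minimal sketch of vector-to-sequence generation, under several assumptions of our own: the picture's feature vector `v` has already been projected to the hidden size and seeds the initial state, decoding is greedy, the five-word vocabulary and `<end>` token are hypothetical, and the weights are random (untrained), so the generated words are arbitrary:

```python
import numpy as np

def softmax(z):
    z = z - z.max()  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()

def generate_from_vector(v, W, U, V, E, vocab, max_len=6, end_token="<end>"):
    # Vector-to-sequence: v seeds the state, then each step emits one word
    # and feeds that word back in as the next input.
    h = np.tanh(v)                     # assumption: initial state = tanh(feature vector)
    prev = np.zeros(E.shape[1])        # "start" input: all-zero embedding
    words = []
    for _ in range(max_len):
        h = np.tanh(W @ h + U @ prev)  # same recurrent update at every step
        p = softmax(V @ h)             # distribution over the vocabulary
        idx = int(np.argmax(p))        # greedy choice of the next word
        if vocab[idx] == end_token:
            break
        words.append(vocab[idx])
        prev = E[idx]                  # embed the chosen word as the next input
    return words

vocab = ["<end>", "a", "dog", "on", "grass"]  # hypothetical vocabulary
rng = np.random.default_rng(2)
hidden, emb = 8, 4
W = rng.normal(scale=0.5, size=(hidden, hidden))
U = rng.normal(scale=0.5, size=(hidden, emb))
V = rng.normal(scale=0.5, size=(len(vocab), hidden))
E = rng.normal(size=(len(vocab), emb))
v = rng.normal(size=hidden)  # stand-in for a CNN image feature
caption = generate_from_vector(v, W, U, V, E, vocab)
print(caption)  # untrained weights, so the words are arbitrary
```

Feeding each chosen word back as the next input is what lets a single vector unfold into a sequence of variable length.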

5 Sequence to sequence (seq2seq)

In machine translation, for example translating an English sentence into French, the source and target sentences may contain different numbers of words, so the traditional RNN has to be modified.

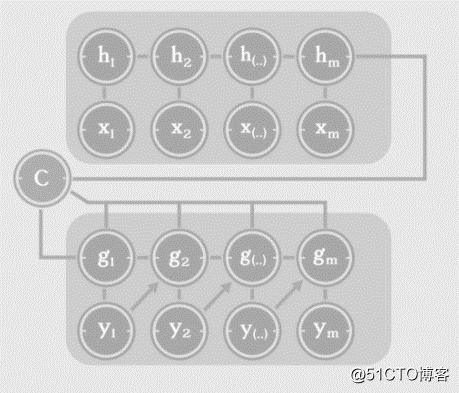

A common practice is to use two RNNs: one encodes the source sentence (the encoder), and the other decodes it into the target language (the decoder):

Here C is the context information; together with the encoder's hidden-layer information, it is fed into the decoder for translation.
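A minimal encoder-decoder sketch, assuming tanh cells, that C is simply the encoder's final hidden state, and that the decoder receives C both as its initial state and as an extra input at every step (one common design; the weight names `W_enc`, `Z_dec` and so on are hypothetical). The weights are random, so the output ids are meaningless; the point is that the output length is decided by the decoder, not by the input length:

```python
import numpy as np

def encode(xs, W_enc, U_enc):
    # Encoder RNN: read the whole source sequence; the final state is C.
    h = np.zeros(W_enc.shape[0])
    for x_t in xs:
        h = np.tanh(W_enc @ h + U_enc @ x_t)
    return h

def decode(C, W_dec, U_dec, Z_dec, V_dec, E, end_id, max_len=10):
    # Decoder RNN: starts from C and also receives C at every step.
    s = C.copy()
    prev = np.zeros(E.shape[1])
    out = []
    for _ in range(max_len):
        s = np.tanh(W_dec @ s + U_dec @ prev + Z_dec @ C)
        idx = int(np.argmax(V_dec @ s))  # greedy; softmax would not change the argmax
        if idx == end_id:
            break
        out.append(idx)
        prev = E[idx]                    # feed the chosen target word back in
    return out

rng = np.random.default_rng(3)
H, d_src, emb, n_tgt = 6, 3, 4, 7
W_enc = rng.normal(scale=0.5, size=(H, H))
U_enc = rng.normal(scale=0.5, size=(H, d_src))
W_dec = rng.normal(scale=0.5, size=(H, H))
U_dec = rng.normal(scale=0.5, size=(H, emb))
Z_dec = rng.normal(scale=0.5, size=(H, H))
V_dec = rng.normal(scale=0.5, size=(n_tgt, H))
E = rng.normal(size=(n_tgt, emb))

src = rng.normal(size=(5, d_src))  # 5 source words as stand-in vectors
C = encode(src, W_enc, U_enc)
tgt = decode(C, W_dec, U_dec, Z_dec, V_dec, E, end_id=0, max_len=10)
print(C.shape)  # (6,)
print(tgt)      # target word ids; their count need not be 5
```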

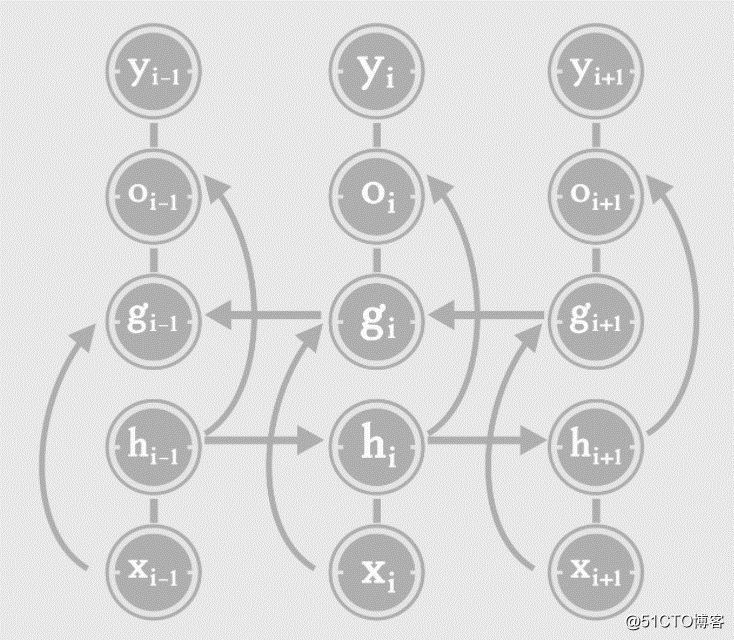

6 Bidirectional RNN

When predicting a word, a traditional RNN captures only the context before that word, whereas a bidirectional RNN also captures the influence of the words that come after it:

The bidirectional RNN in the figure above can be regarded as two RNNs: one is the traditional RNN described earlier (the hidden-state layer h), and the other captures the context after the word (the hidden-state layer g).

Such an RNN takes into account the context on both the left and the right of a word, which generally improves accuracy.
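A minimal sketch of the two-RNN view, assuming tanh cells with separate forward and backward weights, and that h_t and g_t are concatenated at each step (concatenation is one common way to combine them, not the only one):

```python
import numpy as np

def run(xs, W, U):
    # One-directional tanh RNN; returns the state at every step.
    s = np.zeros(W.shape[0])
    states = []
    for x_t in xs:
        s = np.tanh(W @ s + U @ x_t)
        states.append(s)
    return states

def birnn(xs, Wf, Uf, Wb, Ub):
    h = run(xs, Wf, Uf)              # forward states h_t: have seen x_1..x_t
    g = run(xs[::-1], Wb, Ub)[::-1]  # backward states g_t: have seen x_t..x_T, realigned to t
    return [np.concatenate([h_t, g_t]) for h_t, g_t in zip(h, g)]

rng = np.random.default_rng(4)
T, d, H = 4, 3, 5
Wf, Wb = rng.normal(scale=0.5, size=(2, H, H))
Uf, Ub = rng.normal(scale=0.5, size=(2, H, d))
xs = rng.normal(size=(T, d))
outs = birnn(xs, Wf, Uf, Wb, Ub)
print(len(outs), outs[0].shape)  # 4 (10,)
```

At the first position, the forward half has seen only x_1, while the backward half already summarizes the whole sequence; this is the extra right-hand context the text describes.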

Reference: http://suriyadeepan.github.io/2017-01-07-unfolding-rnn/
