What you will learn

How to train a deep learning model on your own data set under Windows

How to annotate images with labelImg

How to organize a data set for YOLOv4

How to train and test YOLOv4 and collect performance statistics

How to test and apply the trained network model in the front-end software

How the front-end software transmits detection results to a PLC or robot

Who this is for

Enthusiasts and practitioners interested in AI machine vision and YOLOv4 object detection

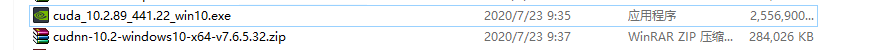

Software Environment:

Windows 7 or 10 x64; CUDA 10.2; cuDNN 7.6.5; Python 3.7; Visual Studio 2019; OpenCV 4.3; MVS; Intelligent AI image detection system

YOLOv4 is here, with gains in both speed and accuracy!

Compared with YOLOv3, YOLOv4 improves AP (average precision) and FPS (frames per second) by 10% and 12%, respectively.

YOLOv4 not only performs well at object recognition, it also works well for defect detection. I happened to have a project detecting notch and crack defects in magnetic blocks, so I tried YOLOv4 on it as a way to learn the method. The results were surprisingly good: it handled the background interference and misjudgment problems that traditional vision could not solve. This article records the whole implementation for your reference, and the inference part is packaged as an executable app for you to evaluate. Feedback is welcome.

The YOLO series is a family of end-to-end, real-time object detection methods based on deep learning. This article shows how to label images with labelImg and train YOLOv4 on your own data set.

The YOLOv4 training software used in this article is the original AlexeyAB/darknet source, compiled with OpenCV 4.3 and Visual Studio 2019. (The compiled software is provided at the end, so readers who cannot compile it can use it directly. My next step is to develop friendlier software, so please follow.) The project is demonstrated on Windows and covers: installing the software environment, installing YOLOv4, labeling your own data set, organizing the data set, modifying the configuration files, training on your data set, installing the front-end inference software, testing the trained network model, judgment logic, and outputting detection results to a PLC.

The front-end inference software in this article was developed with Visual Studio 2015. It integrates OpenCV 4.4 and a variety of machine vision algorithms, together with SQL database, Modbus, Mitsubishi MC protocol, HTTP, and other communication functions. It runs without a GPU; on an i5 processor, detection takes under 100 ms, which basically meets real-time requirements.
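To give a feel for what sending a result to a PLC over Modbus involves, here is a minimal sketch that builds a standard Modbus TCP "Write Single Register" request by hand. The register address (100) and the result codes (1 = OK, 2 = NG) are assumptions made up for illustration; the article does not say how the front-end software maps results to registers.

```python
import struct


def build_write_single_register(transaction_id: int, unit_id: int,
                                address: int, value: int) -> bytes:
    """Build a Modbus TCP 'Write Single Register' (function 0x06) request."""
    # PDU: function code (1 byte), register address (2 bytes), value (2 bytes),
    # all big-endian.
    pdu = struct.pack(">BHH", 0x06, address, value)
    # MBAP header: transaction id, protocol id (always 0), length, unit id.
    # The length field counts the unit id byte plus the PDU bytes.
    mbap = struct.pack(">HHHB", transaction_id, 0, len(pdu) + 1, unit_id)
    return mbap + pdu


# Hypothetical mapping: holding register 100 carries the result, 2 means NG.
frame = build_write_single_register(transaction_id=1, unit_id=1,
                                    address=100, value=2)
print(frame.hex())
```

The resulting bytes would be written to a TCP socket connected to the PLC (Modbus TCP normally listens on port 502). In a real project a maintained Modbus library is usually a better choice than hand-built frames.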

Install the software environment

Find a host with a discrete graphics card. My configuration is Windows 10 64-bit, i5, 8 GB of RAM, and a GeForce 1650S with 4 GB of video memory. (This is enough to train YOLOv4-tiny. The standard version of YOLOv4 is trained the same way, but needs more than 8 GB of video memory.)

Install CUDA 10.2. Download link: CUDA Toolkit 10.2 Download | NVIDIA Developer

After installation, you can confirm it in the System Information window of the NVIDIA Control Panel.

Unzip the training development package from this article's attachments (compiled with OpenCV 4.3 and Visual Studio 2019 from the original AlexeyAB/darknet source) to your local computer, and keep the path the same as on my computer (E:\ylhwork\pycharm\darknet-master-V4).

Copy the images you want to train on to darknet-master-V4\VOCdevkit\VOC2009\JPEGImages. The images must be color images. The attachment already includes a data set of magnet defects.

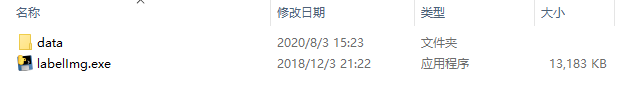

Mark the location and type of each defect in the images: open the LabelImg software (available in the attachment).

Click "Open Dir" in LabelImg and open the folder that holds the data set images, darknet-master-V4\VOCdevkit\VOC2009\JPEGImages.

Click the "Change Save Dir" button in LabelImg and set the folder for saving the label data to E:\ylhwork\pycharm\darknet-master-V4\VOCdevkit\VOC2009\Annotations.

After configuring the paths, you can start labeling. The lower right corner of the software lists the images in the directory. Use the left and right arrow buttons on the left side of the software to move between images and review their labels, and use the button in the lower left corner to add or edit labels.

Label names cannot be in Chinese for the time being, so use English names. Remember the label names, because they are needed later. This project defines three labels: rip, gap, and label.

Only defects need to be marked. Images without defects, and images you do not want to train on, need no labels. After all images are labeled, proceed to the conversion step.
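Before converting, it can help to see which images actually received labels. The sketch below assumes labelImg was saving in Pascal VOC mode, where each labeled image gets an XML file with the same base name, and that the images use the .jpg extension. The function name is made up for illustration.

```python
from pathlib import Path


def split_by_annotation(image_dir, annotation_dir):
    """Return (labelled, unlabelled) lists of image file names."""
    # Base names (without extension) of every annotation file.
    annotated = {p.stem for p in Path(annotation_dir).glob("*.xml")}
    labelled, unlabelled = [], []
    for image in sorted(Path(image_dir).glob("*.jpg")):
        target = labelled if image.stem in annotated else unlabelled
        target.append(image.name)
    return labelled, unlabelled
```

Calling it with the JPEGImages and Annotations folders used above returns two lists, so you can confirm that the unlabeled images are the ones you meant to skip.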

A Python script converts the labeled images and annotation data into a trainable format, so Python must be installed first (it can be downloaded from this article's attachments; I will develop friendlier software in the next step, so stay tuned). On the computer where Python is installed, open E:/ylhwork/pycharm/darknet-master-V4/VOCdevkit/VOC2009/helmet-label.py with a text editor, and edit classes so that its contents match the names and number of your labels.

Run the Python script: python.exe E:/ylhwork/pycharm/darknet-master-V4/VOCdevkit/VOC2009/helmet-label.py, or click Run in a Python IDE.

If it runs successfully, the labeling work is complete, and the converted files appear in the E:\ylhwork\pycharm\darknet-master-V4\VOCdevkit\VOC2009\labels folder.
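The attached helmet-label.py is not reproduced here. As a rough sketch of what a conversion like this typically does, the code below turns the bounding boxes of one Pascal VOC XML annotation into darknet label lines (class index, then box center and size normalized by the image size). The function names are assumptions, not the names used in the attached script.

```python
import xml.etree.ElementTree as ET

# Must match the names file, in the same order.
CLASSES = ["rip", "gap", "label"]


def voc_box_to_yolo(img_w, img_h, xmin, ymin, xmax, ymax):
    """Convert corner coordinates in pixels to normalized center/size."""
    cx = (xmin + xmax) / 2.0 / img_w
    cy = (ymin + ymax) / 2.0 / img_h
    w = (xmax - xmin) / img_w
    h = (ymax - ymin) / img_h
    return cx, cy, w, h


def convert_annotation(xml_text):
    """Return one 'class cx cy w h' line per labeled object."""
    root = ET.fromstring(xml_text)
    size = root.find("size")
    img_w = int(size.find("width").text)
    img_h = int(size.find("height").text)
    lines = []
    for obj in root.iter("object"):
        name = obj.find("name").text
        if name not in CLASSES:
            continue  # skip labels that are not being trained
        box = obj.find("bndbox")
        coords = [float(box.find(tag).text)
                  for tag in ("xmin", "ymin", "xmax", "ymax")]
        cx, cy, w, h = voc_box_to_yolo(img_w, img_h, *coords)
        lines.append(f"{CLASSES.index(name)} {cx:.6f} {cy:.6f} {w:.6f} {h:.6f}")
    return lines
```

Each image's lines are written to a .txt file with the same base name as the image, which is the kind of file that appears in the labels folder.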

Only two steps remain before training. Edit the configuration file: under \darknet-master-V4\build\darknet\x64\cfg, make a copy of a yolov4-tiny-xxx-train.cfg as your own configuration file. Search for the keyword [yolo]. In each [yolo] section, set classes to your actual number of classes, and in the [convolutional] section immediately before it, set filters to (classes+5)*3, which is 24 for the three classes in this project.
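The filters value follows the rule documented in the AlexeyAB/darknet README, filters = (classes + 5) * 3: each of the 3 anchors assigned to a [yolo] layer predicts 4 box coordinates, 1 objectness score, and one score per class. A small sketch of the arithmetic (the function name is only for illustration):

```python
def yolo_filters(num_classes: int, anchors_per_layer: int = 3) -> int:
    """Filters for the [convolutional] layer just before a [yolo] layer."""
    # Per anchor: 4 box coordinates + 1 objectness score + class scores.
    return (num_classes + 5) * anchors_per_layer


print(yolo_filters(3))   # three classes: rip, gap, label -> 24
print(yolo_filters(80))  # the 80-class COCO default -> 255
```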

Modify the class names: open the names file under \darknet-master-V4\build\darknet\x64\data for editing. Its contents and order must match the classes in the Python script.

Modify the data file: make a copy of a data file under darknet-master-V4\build\darknet\x64\data, and check that the image path, names path, and so on are consistent with the previous settings.
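For orientation, a darknet data file is a short list of key = value lines. The sketch below renders one from a names list. The train.txt and test.txt list files are hypothetical names chosen for illustration; use whatever image list files your conversion step produced.

```python
def read_names(names_text):
    """Class names, one per line, in class-index order."""
    return [line.strip() for line in names_text.splitlines() if line.strip()]


def render_data_file(num_classes, train_list, valid_list, names_file, backup_dir):
    """Return the text of a darknet .data file."""
    lines = [
        f"classes = {num_classes}",
        f"train = {train_list}",    # text file listing training image paths
        f"valid = {valid_list}",    # text file listing validation image paths
        f"names = {names_file}",
        f"backup = {backup_dir}",   # folder where weights files are saved
    ]
    return "\n".join(lines) + "\n"


names = read_names("rip\ngap\nlabel\n")
print(render_data_file(len(names), "data/train.txt", "data/test.txt",
                       "data/magnate.names", "backup/"))
```

Deriving the classes count from the names file keeps the two from drifting apart when a label is added or removed.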

After saving all the configuration, create a command script, yolov4_tiny_train_magnate.cmd, that holds the training command. The training command has the following format (after -gpus, list only the GPU indices present on your machine, for example -gpus 0 for a single card):

darknet.exe detector train data/magnate.data cfg/yolov4-tiny-magnate-train.cfg yolov4-tiny.conv.29 -gpus 0,1,2,3
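If you retrain often, the command line can be assembled from its parts. This is only a convenience sketch with a made-up function name. Note that darknet spells the multi-GPU flag -gpus.

```python
def build_train_command(data_file, cfg_file, pretrained_weights, gpus=(0,)):
    """Assemble the darknet training command as a single string."""
    gpu_list = ",".join(str(index) for index in gpus)
    return (f"darknet.exe detector train {data_file} {cfg_file} "
            f"{pretrained_weights} -gpus {gpu_list}")


command = build_train_command("data/magnate.data",
                              "cfg/yolov4-tiny-magnate-train.cfg",
                              "yolov4-tiny.conv.29")
print(command)
```

Writing the returned string into yolov4_tiny_train_magnate.cmd gives the same script as creating it by hand.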

With all the preparation done, double-click the yolov4_tiny_train_magnate.cmd file. The software starts executing the training command, and normally it shows a chart window and a command-line window that continually refreshes its data.

If there is a problem, an error is reported within a few minutes. The usual errors are a file not being found or insufficient memory. Carefully check all file names and paths in the steps above. If memory is insufficient, change the batch and subdivisions values in the first few lines of the cfg configuration file (for example, use a larger subdivisions value), then try again.
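The reason these two values affect memory: darknet splits each batch into subdivisions mini-batches and processes one mini-batch on the GPU at a time, so the number of images held in video memory per step is batch divided by subdivisions. A small sketch of the arithmetic, with an illustrative function name:

```python
def images_per_gpu_step(batch: int, subdivisions: int) -> int:
    """Images loaded onto the GPU at once for a given cfg setting."""
    # Integer division mirrors how the batch is split into mini-batches.
    return batch // subdivisions


for subdivisions in (1, 8, 16, 32):
    print(f"batch=64 subdivisions={subdivisions} -> "
          f"{images_per_gpu_step(64, subdivisions)} images per step")
```

Raising subdivisions lowers memory use without changing the effective batch size, at the cost of somewhat slower iterations.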

If the data keeps refreshing, congratulations, training is running. Training generally takes several hours, depending on the host configuration. I usually let it run overnight and check it the next morning. Make sure the hard disk has more than 2 GB of free space. After a few hours, a good chart should show a curve that keeps converging.

Check the trained model files: the \darknet-master-V4\build\darknet\x64\backup folder will contain many weights files. Generally the last one is the best.
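Picking the last file by eye is easy to get wrong, because sorting the names alphabetically puts an iteration such as 10000 before 2000. The sketch below assumes the usual darknet naming, where snapshots end in _<iteration>.weights and the most recent one is also saved as _last.weights. The function name is hypothetical.

```python
import re


def pick_latest_weights(file_names):
    """Prefer *_last.weights, otherwise the highest iteration number."""
    for name in file_names:
        if name.endswith("_last.weights"):
            return name
    numbered = []
    for name in file_names:
        match = re.search(r"_(\d+)\.weights$", name)
        if match:
            # Compare iterations as integers, not as strings.
            numbered.append((int(match.group(1)), name))
    return max(numbered)[1] if numbered else None
```

Pass it the list of file names in the backup folder, for example from os.listdir.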

Training is done, and now you can test the results. Copy the cfg configuration file you just trained with, yolov4-tiny-magnate-train.cfg, to yolov4-tiny-magnate-test.cfg, modify lines 3, 4, 6, and 7 (in the stock darknet cfg files these are the batch and subdivisions lines for testing and training), and save.
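For testing, the network should run with batch=1 and subdivisions=1. The author makes this change by hand on the lines listed above. As an alternative sketch, the function below sets the active batch and subdivisions lines to 1 and leaves commented lines and every other setting untouched. The function name is made up for illustration.

```python
def to_test_cfg(cfg_text: str) -> str:
    """Return cfg text with the active batch and subdivisions set to 1."""
    out = []
    for line in cfg_text.splitlines():
        # The key is whatever precedes '='. Commented lines have a key
        # such as '#batch', and 'batch_normalize' is a different key,
        # so neither is modified.
        key = line.split("=", 1)[0].strip()
        if key == "batch":
            line = "batch=1"
        elif key == "subdivisions":
            line = "subdivisions=1"
        out.append(line)
    return "\n".join(out) + "\n"
```

Read the training cfg, pass its text through this function, and write the result to yolov4-tiny-magnate-test.cfg.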

Keep the three files yolov4-tiny-magnate-test.cfg, magnate.names, and yolov4-tiny-magnate-train_last.weights for later use.

Now use the compiled front-end executable to test the training results (it can run on a computer without a GPU). After downloading the program, install the VC runtime library, the MVS driver, and the application, in that order.

Double-click the exe file in the Intelligent AI image software folder to open the front-end software. If a DLL file is reported missing, you may not have installed the VC runtime library or the MVS driver library. Install those two first.

After the software opens to the main interface, switch to setting mode and add the test image source. Select file images for the first test; once the test is OK, the source can be changed from file to camera.

In the file image tool, select file path mode and add the path of the folder used during training.

Click OK to return to the setting interface, then click the add-tool button, choose a deep recognition tool, and click Add.

In the deep recognition tool, enter the paths of the yolov4-tiny-magnate-test.cfg, magnate.names, and yolov4-tiny-magnate-train_last.weights files saved earlier.

In the label setting interface, set the display name for each label. We like to change these to Chinese names. Click the execute detection button to preview the result.

Click OK to return to the main setting interface, and click the run button to test the result. Next, add the OK/NG judgment logic: click the IO button in the main setting interface, and add the CCD judgment conditions and the comprehensive judgment conditions.
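The judgment conditions are configured through the software's interface, and the article does not list them. As a rough illustration of what OK/NG logic can look like, the sketch below marks a part NG when any defect label is detected above a confidence threshold. The choice of rip and gap as the defect labels and the 0.5 threshold are assumptions for this example.

```python
# Assumption: 'rip' and 'gap' are defects, 'label' is not.
DEFECT_LABELS = {"rip", "gap"}


def judge(detections, min_confidence=0.5):
    """Return 'NG' if any confident defect detection exists, else 'OK'.

    detections: iterable of (label, confidence) pairs.
    """
    for label, confidence in detections:
        if label in DEFECT_LABELS and confidence >= min_confidence:
            return "NG"
    return "OK"


print(judge([("label", 0.93)]))
print(judge([("label", 0.93), ("gap", 0.81)]))
```

A combined judgment across several cameras could then be NG if any single camera reports NG.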

Click OK to return to the main setting interface, then click the run button to test the overall result.

Once the test results are OK, save the entire project file to the hard disk so it can be reused next time or in the actual project.

Summary:

With the trained model running in the front-end inference software, recognition takes less than 100 ms on a CPU alone (i5 or higher) and less than 10 ms with a GPU. 99% of the defects visible to the naked eye are recognized, which is a relatively good detection result. With slight modification, this front-end software can be used in actual projects.

Shortcomings:

The training environment is not yet well optimized. My next step is to develop more efficient one-step training software, so stay tuned. It took an afternoon to write up the whole project process, and both the instructions and the software have shortcomings. Please bear with them, offer corrections, and let us improve together.

Attachments:

All software, routines, and data sets in this article can be downloaded from: https://pan.baidu.com/s/1YPjR_TPJYLmriXNVnNbgZg Extraction code: 52ai
