Time complexity and space complexity are very important concepts in algorithms.

1. Time complexity:

Time complexity describes the time efficiency of an algorithm, that is, how its execution time grows with the size of the input.

1. Big O notation

An "order of magnitude" function describes the fastest-growing part of the function T(n) as the problem size n increases. It is usually written in "big O" notation as O(f(n)), and it approximates the actual number of steps in the computation. The function f(n) is a simplified representation of the dominant part of the original function T(n).

In the summation function example above, T(n) = n + 1. As n increases, the constant 1 matters less and less to the final result. If we want an approximation of T(n), we can drop the 1 and say that the running time is O(n). To be clear, this does not mean that the 1 is unimportant to T(n); it means that when n is large, the approximation obtained by dropping the 1 is still very accurate.
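The summation function itself is not shown in this section, so the following is only a minimal sketch of what it might look like, assuming a simple loop that adds the integers 1 to n. The name `sum_of_n` and the `steps` counter are illustrative additions; the counter tallies assignment steps so that T(n) = n + 1 can be checked directly.

```python
def sum_of_n(n):
    """Sum the integers 1..n while counting assignment steps (illustrative)."""
    steps = 0
    total = 0              # one assignment before the loop
    steps += 1
    for i in range(1, n + 1):
        total += i         # one assignment per iteration, n in all
        steps += 1
    return total, steps


for n in (10, 1_000, 100_000):
    total, steps = sum_of_n(n)
    # steps equals n + 1, so the ratio steps / n gets closer to 1 as n grows
    print(n, total, steps, steps / n)
```

As n grows, the ratio in the last column approaches 1, which is why dropping the constant and writing O(n) loses very little.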

As another example, suppose an algorithm has T(n) = 2n^2 + 2n + 1000. When n is 10 or 20, the constant 1000 seems to play a decisive role in T(n). But when n is 1000, 10000, or greater, the n^2 term plays the major role. In fact, when n is very large, the last two terms contribute almost nothing to the final result. As with the summation function above, as n grows we can ignore the other terms and use 2n^2 alone to approximate T(n). Likewise, the coefficient 2 does not change how fast the function grows, so it can be ignored too. We then say that the order of magnitude of T(n) is f(n) = n^2, that is, O(n^2).
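To make the shift in dominance concrete, here is a small sketch that computes what fraction of T(n) = 2n^2 + 2n + 1000 each term contributes at the values of n mentioned above. The function names `t` and `term_shares` are made up for this illustration.

```python
def t(n):
    """Step count T(n) = 2n^2 + 2n + 1000 from the example in the text."""
    return 2 * n**2 + 2 * n + 1000


def term_shares(n):
    """Return the fraction of T(n) contributed by each of the three terms."""
    total = t(n)
    return (2 * n**2 / total, 2 * n / total, 1000 / total)


for n in (10, 20, 1_000, 10_000):
    quad, lin, const = term_shares(n)
    print(f"n={n:>6}  2n^2: {quad:.4f}  2n: {lin:.4f}  1000: {const:.4f}")
```

At n = 10 the constant accounts for most of the total, while at n = 1000 the quadratic term already accounts for more than 99% of it.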

2. Best case, worst case and average case

Although not shown in the previous two examples, we should note that sometimes the running time of the algorithm also depends on the "specific data" rather than just "the size of the problem." For such algorithms, we divide their performance into "best case", "worst case" and "average case".

One data set may make the algorithm perform extremely well, which is the "best case", while a different data set may make it perform extremely poorly, which is the "worst case". In most cases, however, the algorithm's performance falls between these two extremes, which is the "average case". We therefore need to understand the difference between these cases and not be misled by the extremes.

The "best case" is of little value: it reflects only the most optimistic, ideal situation and gives no useful reference. The "average case" is an overall evaluation of the algorithm, since it reflects the algorithm's behavior comprehensively; on the other hand, it offers no guarantee, because not every run will finish within the average time. The "worst case" provides a guarantee: the running time will never exceed it. For this reason, the time complexity we calculate is generally the worst-case time complexity, in the same way that we usually plan for the worst case in everyday life.
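The three cases can be illustrated with linear search, which is not an example from the text but a common one chosen here as an assumption. The sketch counts comparisons instead of measuring time, and the average assumes every present target is equally likely.

```python
def linear_search(items, target):
    """Return (index, comparisons); index is -1 if target is absent."""
    comparisons = 0
    for index, value in enumerate(items):
        comparisons += 1
        if value == target:
            return index, comparisons
    return -1, comparisons


data = list(range(1, 101))              # 100 distinct values, 1..100

best = linear_search(data, 1)[1]        # target is the first element
worst = linear_search(data, 0)[1]       # target is absent: every element is checked
# Average over every present target, each assumed equally likely
average = sum(linear_search(data, x)[1] for x in data) / len(data)

print(best, worst, average)
```

The same function on the same input size needs anywhere from 1 to 100 comparisons, and only the worst-case figure is a bound that no run will exceed.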

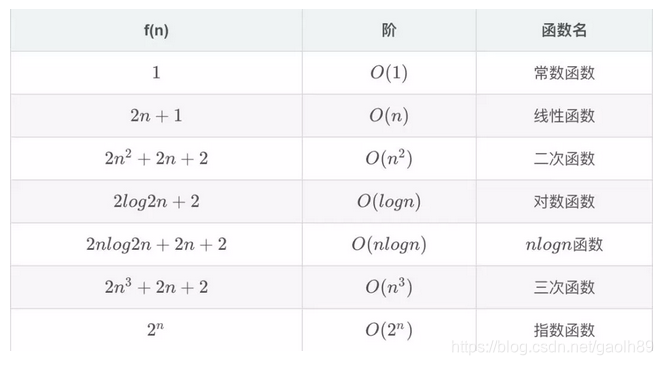

As we continue to study algorithms, we will encounter a variety of order of magnitude functions. Several common ones are listed below:
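The list itself is not reproduced in this section. As a rough stand-in, the sketch below tabulates the growth functions most commonly cited in textbooks; this selection is an assumption and not necessarily the author's own list. The name `COMMON_ORDERS` is illustrative.

```python
import math

# Growth functions commonly listed in textbooks, slowest-growing first.
# This selection is an assumption, not taken from the original list.
COMMON_ORDERS = [
    ("O(1)", lambda n: 1),
    ("O(log n)", lambda n: math.log2(n)),
    ("O(n)", lambda n: n),
    ("O(n log n)", lambda n: n * math.log2(n)),
    ("O(n^2)", lambda n: n**2),
    ("O(n^3)", lambda n: n**3),
    ("O(2^n)", lambda n: 2**n),
]

for n in (10, 20, 40):
    print(f"n = {n}")
    for name, f in COMMON_ORDERS:
        print(f"  {name:<11}{f(n):,.0f}")
```

Even at these small values of n, the gap between the polynomial functions and the exponential one widens quickly.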

2. Space complexity

The space complexity of an algorithm is the storage space the algorithm consumes. It is written as S(n) = O(f(n)), where n is again the size of the data and f(n) is the storage space required as a function of n.
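As a minimal sketch of the difference, the two hypothetical functions below compute the same sum but use different amounts of extra storage. Space is counted here as the number of values held at once, which is a simplification.

```python
def sum_constant_space(n):
    """Sum 1..n with a single accumulator: O(1) extra space."""
    total = 0
    for i in range(1, n + 1):
        total += i
    return total


def sum_linear_space(n):
    """Sum 1..n by first storing all n values: O(n) extra space."""
    values = list(range(1, n + 1))   # the list grows with n
    return sum(values)


print(sum_constant_space(1000), sum_linear_space(1000))
```

Both versions take O(n) time; only the storage they need differs.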

For now, space complexity matters mostly as a concept. Because today's hardware has relatively large storage, we usually do not go out of our way to shave a little off the space complexity; more effort goes into optimizing an algorithm's time complexity. As a result, trading "space for time" has become the norm in everyday coding.
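One way to picture "space for time" is duplicate detection, an example chosen here for illustration and not taken from the text. The first hypothetical function uses no extra storage but compares every pair; the second spends O(n) extra memory on a set to finish in a single pass.

```python
def has_duplicate_slow(items):
    """O(n^2) time, O(1) extra space: compare every pair."""
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            if items[i] == items[j]:
                return True
    return False


def has_duplicate_fast(items):
    """O(n) expected time, O(n) extra space: remember what has been seen."""
    seen = set()
    for item in items:
        if item in seen:
            return True
        seen.add(item)
    return False


print(has_duplicate_slow([3, 1, 4, 1, 5]), has_duplicate_fast([3, 1, 4, 1, 5]))
```

The set-based version assumes the items are hashable; when they are, the extra memory buys a much lower running time on large inputs.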