High availability and disaster recovery are topics every operations engineer cares about. Only when high availability is done well can we calmly handle a region-wide disaster, and data is the core asset that has to be protected.

What high-availability architectures can we build on the AWS cloud database services?

China currently has two independent AWS regions, Beijing and Ningxia. For RDS, besides Multi-AZ, AWS also supports cross-region master-slave replication.

Today we will look at a performance test of a MySQL-based high availability/disaster recovery architecture.

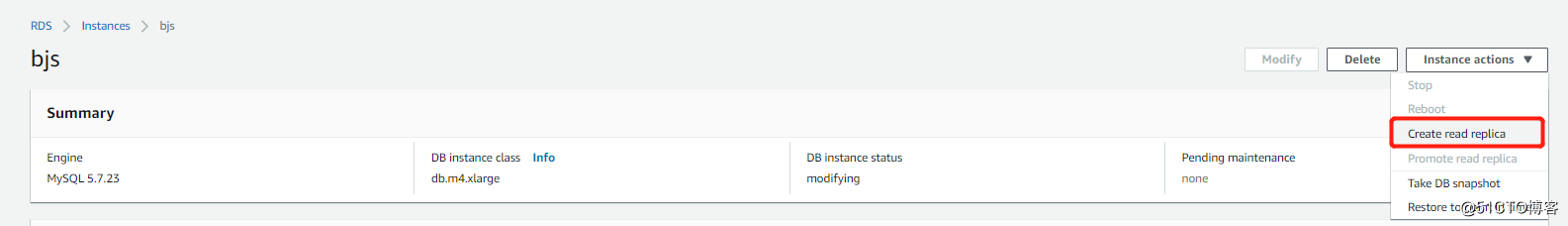

1. Create a read replica

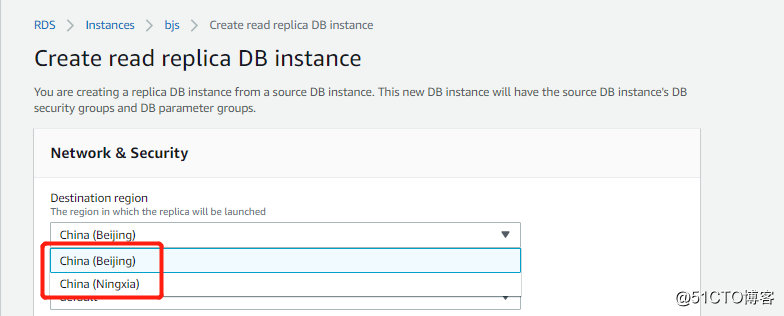

2. Here we can choose to create the replica in the Beijing region or in the Ningxia region.

3. Given the distance between Beijing and Ningxia, the obvious concern is replication between the Beijing master and the Ningxia replica: will network latency make the lag larger?

Today I will run a test to see how much network latency affects master-slave synchronization.

(Note: there is no direct connection between the Beijing and Ningxia regions for customer traffic. Cross-region synchronization for a customer's EC2 instances or other services has to go over the public internet or over dedicated lines the customer provisions. Internally, however, AWS provides a dedicated link for RDS master-slave replication and for S3 Cross-Region Replication, with a certain amount of bandwidth per account.)

Test environment:

<1. EC2 m4.xlarge (4 vCPU, 16 GB)

<2. RDS db.r4.xlarge (4 vCPU, 16 GB), MySQL 5.7.12

<3. Two replicas, one in Ningxia and one in Beijing, so that the two can be compared and any difference caused by the network can be isolated

<4. 20 MySQL tables of 10 million rows each, about 40 GB of data in total

<5. Load on the master is generated with sysbench; for sysbench usage see https://blog.51cto.com/hsbxxl/2068181

<6. Master parameter change: binlog_format=row

<7. Replica parameter change, to use the parallel relay-log apply workers of MySQL 5.7: slave_parallel_workers=50

4. sysbench script

The sysbench script is run from cron every 5 minutes:

    # crontab -l
    */5 * * * * /root/test.sh

Each run lasts 150 s, with 50 concurrent sessions reading and writing 20 tables of 10 million rows each:

    # cat test.sh
    #!/bin/bash
    # Timestamp each run, then append the sysbench report to the same log.
    date >> sync.log
    sysbench /usr/share/sysbench/tests/include/oltp_legacy/oltp.lua \
      --mysql-host=bjs.cbchwkqr6lfg.rds.cn-north-1.amazonaws.com.cn --mysql-port=3306 \
      --mysql-user=admin --mysql-password=admin123 --mysql-db=testdb2 --oltp-tables-count=20 \
      --oltp-table-size=10000000 --report-interval=10 --rand-init=on --max-requests=0 \
      --oltp-read-only=off --time=150 --threads=50 \
      run >> sync.log
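The same invocation can also be assembled programmatically, which makes it harder to slip stray whitespace into an option. The following is only a sketch, assuming sysbench 1.0 with the legacy `oltp.lua` script used in this test; `build_sysbench_cmd` is an illustrative helper name, not part of sysbench.

```python
import shlex

SYSBENCH_SCRIPT = "/usr/share/sysbench/tests/include/oltp_legacy/oltp.lua"


def build_sysbench_cmd(host, port=3306, user="admin", password="admin123",
                       db="testdb2", tables=20, table_size=10_000_000,
                       duration_s=150, threads=50):
    """Return the argv list for one read/write sysbench run (illustrative helper)."""
    options = {
        "mysql-host": host,
        "mysql-port": port,
        "mysql-user": user,
        "mysql-password": password,
        "mysql-db": db,
        "oltp-tables-count": tables,
        "oltp-table-size": table_size,
        "report-interval": 10,
        "rand-init": "on",
        "max-requests": 0,
        "oltp-read-only": "off",
        "time": duration_s,
        "threads": threads,
    }
    argv = ["sysbench", SYSBENCH_SCRIPT]
    # Each option is rendered as a single --name=value token, with no spaces.
    argv += [f"--{name}={value}" for name, value in options.items()]
    argv.append("run")
    return argv


cmd = build_sysbench_cmd("bjs.cbchwkqr6lfg.rds.cn-north-1.amazonaws.com.cn")
print(shlex.join(cmd))
```

The resulting list can be handed to `subprocess.run(cmd)` on a host where sysbench is installed.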

5. On the master, sysbench reports the following. The output shows the read and write load on the master, for reference:

    [ 10s ] thds: 50 tps: 1072.24 qps: 21505.53 (r/w/o: 15060.08/4296.37/2149.08) lat (ms,95%): 89.16 err/s: 0.00 reconn/s: 0.00
    [ 20s ] thds: 50 tps: 759.83 qps: 15195.04 (r/w/o: 10640.78/3034.21/1520.05) lat (ms,95%): 215.44 err/s: 0.00 reconn/s: 0.00
    [ 30s ] thds: 50 tps: 631.10 qps: 12645.47 (r/w/o: 8853.55/2529.71/1262.21) lat (ms,95%): 262.64 err/s: 0.00 reconn/s: 0.00
    ......
    [ 130s ] thds: 50 tps: 765.94 qps: 15295.77 (r/w/o: 10701.91/3062.27/1531.59) lat (ms,95%): 125.52 err/s: 0.00 reconn/s: 0.00
    [ 140s ] thds: 50 tps: 690.08 qps: 13776.96 (r/w/o: 9636.39/2761.41/1379.16) lat (ms,95%): 150.29 err/s: 0.00 reconn/s: 0.00
    [ 150s ] thds: 50 tps: 749.51 qps: 15033.64 (r/w/o: 10532.97/3000.45/1500.22) lat (ms,95%): 147.61 err/s: 0.00 reconn/s: 0.00
    SQL statistics:
        queries performed:
            read:                            1458030
            write:                           416580
            other:                           208290
            total:                           2082900
        transactions:                        104145 (689.50 per sec.)
        queries:                             2082900 (13790.04 per sec.)
        ignored errors:                      0      (0.00 per sec.)
        reconnects:                          0      (0.00 per sec.)
    General statistics:
        total time:                          151.0422s
        total number of events:              104145
    Latency (ms):
        min:                                 8.92
        avg:                                 72.39
        max:                                 7270.55
        95th percentile:                     167.44
        sum:                                 7538949.20
    Threads fairness:
        events (avg/stddev):           2082.9000/18.44
        execution time (avg/stddev):   150.7790/0.44
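To compare runs, it helps to pull the per-interval numbers out of `sync.log` instead of reading them by eye. Here is a small sketch of a parser for the interval lines, assuming the sysbench 1.0 report format shown in this test; `parse_intervals` is an illustrative name, and the sample contains only the six interval lines quoted here, not the elided ones.

```python
import re
from statistics import mean

# One sysbench interval line, e.g. "[ 10s ] thds: 50 tps: ... lat (ms,95%): 89.16 ..."
LINE_RE = re.compile(
    r"\[\s*(?P<t>\d+)s\s*\]\s+thds:\s+(?P<thds>\d+)\s+tps:\s+(?P<tps>[\d.]+)\s+"
    r"qps:\s+(?P<qps>[\d.]+)\s+"
    r"\(r/w/o:\s+(?P<r>[\d.]+)/(?P<w>[\d.]+)/(?P<o>[\d.]+)\)\s+"
    r"lat \(ms,95%\):\s+(?P<p95>[\d.]+)"
)


def parse_intervals(text):
    """Return one dict of floats per interval line found in the text."""
    return [
        {key: float(value) for key, value in match.groupdict().items()}
        for match in LINE_RE.finditer(text)
    ]


SAMPLE = """\
[ 10s ] thds: 50 tps: 1072.24 qps: 21505.53 (r/w/o: 15060.08/4296.37/2149.08) lat (ms,95%): 89.16 err/s: 0.00 reconn/s: 0.00
[ 20s ] thds: 50 tps: 759.83 qps: 15195.04 (r/w/o: 10640.78/3034.21/1520.05) lat (ms,95%): 215.44 err/s: 0.00 reconn/s: 0.00
[ 30s ] thds: 50 tps: 631.10 qps: 12645.47 (r/w/o: 8853.55/2529.71/1262.21) lat (ms,95%): 262.64 err/s: 0.00 reconn/s: 0.00
[ 130s ] thds: 50 tps: 765.94 qps: 15295.77 (r/w/o: 10701.91/3062.27/1531.59) lat (ms,95%): 125.52 err/s: 0.00 reconn/s: 0.00
[ 140s ] thds: 50 tps: 690.08 qps: 13776.96 (r/w/o: 9636.39/2761.41/1379.16) lat (ms,95%): 150.29 err/s: 0.00 reconn/s: 0.00
[ 150s ] thds: 50 tps: 749.51 qps: 15033.64 (r/w/o: 10532.97/3000.45/1500.22) lat (ms,95%): 147.61 err/s: 0.00 reconn/s: 0.00
"""

rows = parse_intervals(SAMPLE)
print("intervals:", len(rows))
print("mean tps:", round(mean(row["tps"] for row in rows), 2))
print("mean writes/s:", round(mean(row["w"] for row in rows), 2))
```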

Now let’s take a look at the synchronization of the two replicas in Beijing and Ningxia.

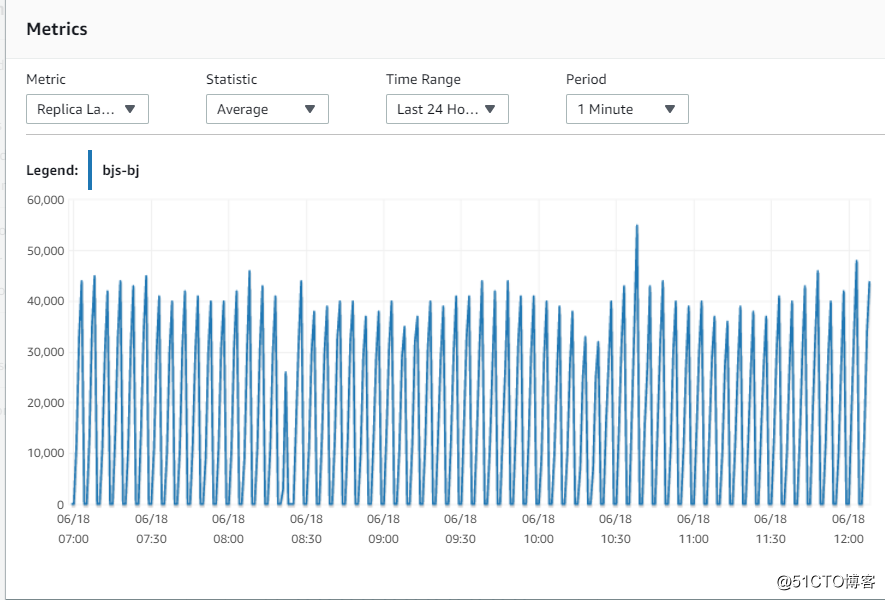

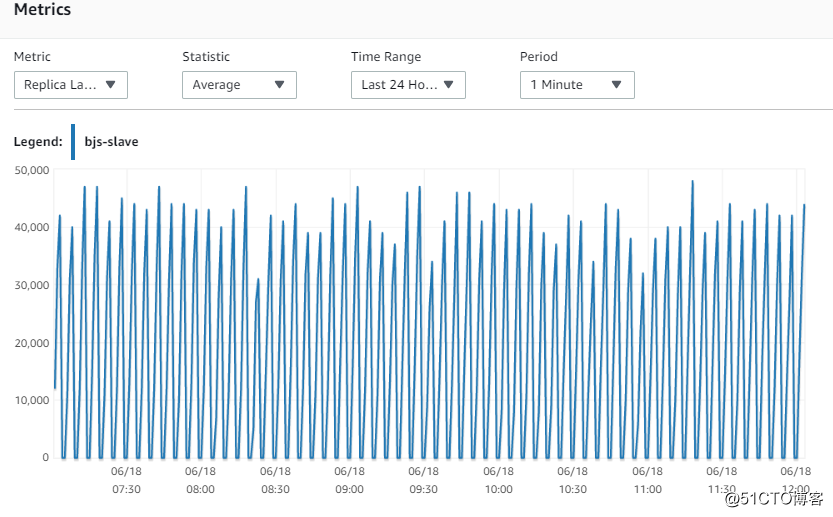

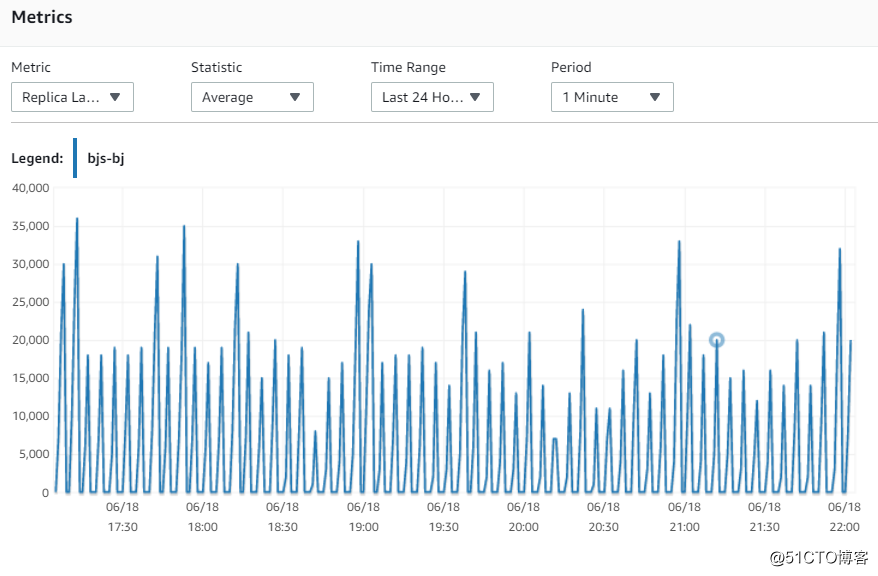

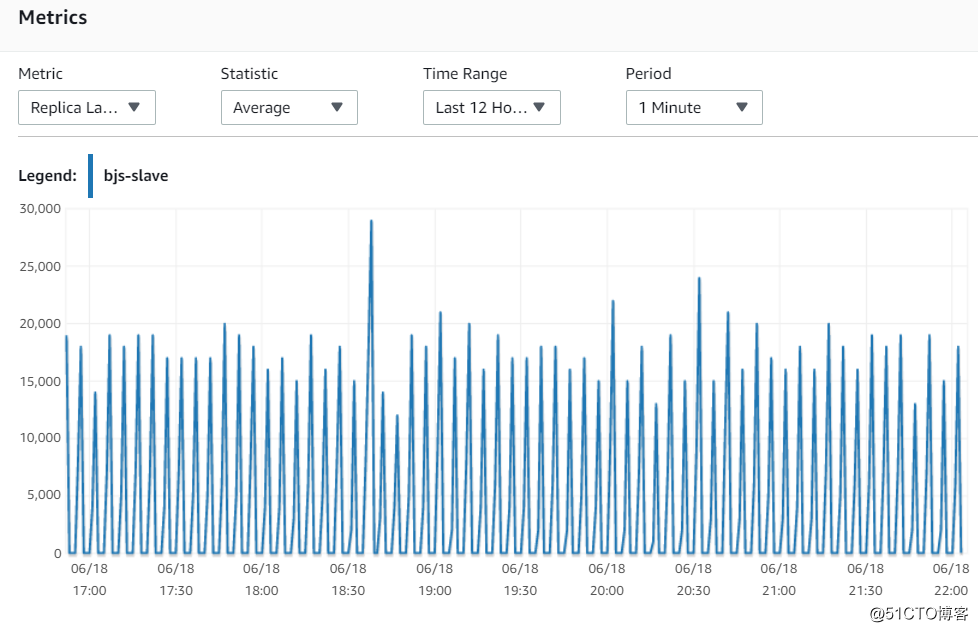

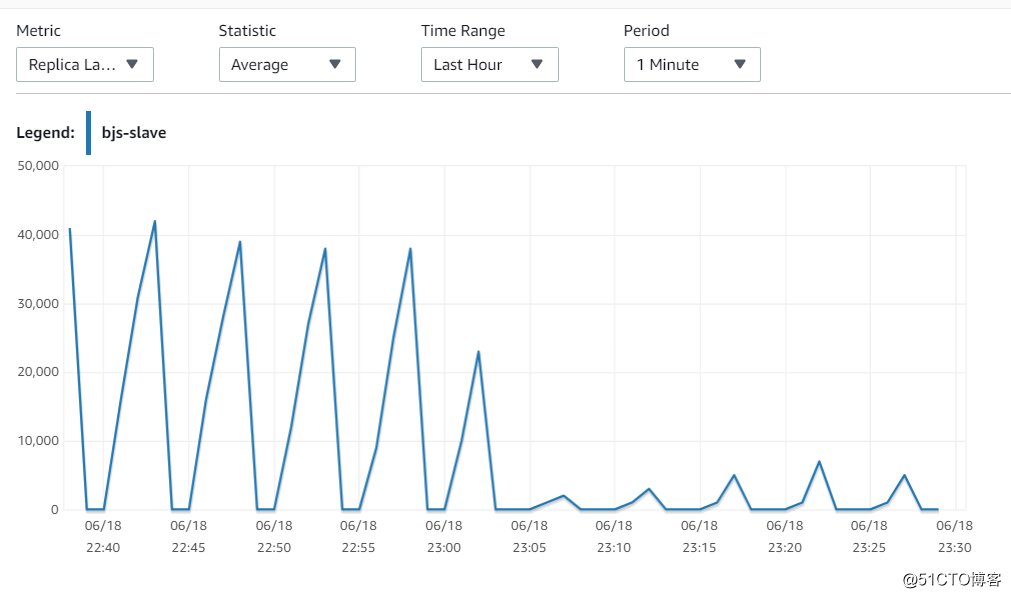

6. Replica Lag metric (Milliseconds)

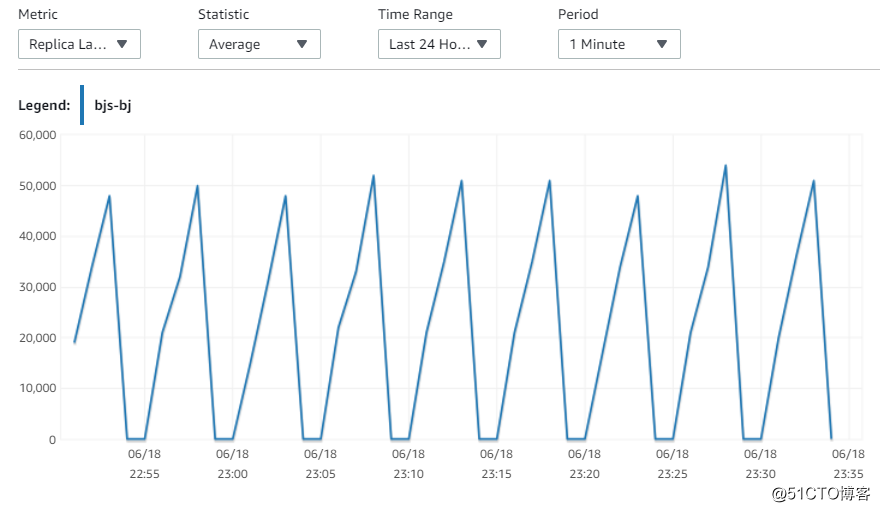

The replicas in Beijing and Ningxia behave essentially the same. Every 150 s write cycle produces a rise in lag, peaking at roughly 40-50 s. Because the Beijing replica sits in the same subnet and the same AZ as the master and still shows the same lag as the Ningxia replica, the network is not the bottleneck.
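As a rough back-of-envelope reading of these numbers, the peak lag can be turned into an implied apply rate on the replica. This is only a sketch under strong assumptions that are mine, not measured in the test: the master commits at a constant rate during the 150 s burst, the replica applies at a constant lower rate, and 45 s is taken as the midpoint of the observed 40-50 s lag. The function names are illustrative.

```python
def implied_apply_rate(master_tps, burst_s, peak_lag_s):
    """Apply rate that leaves the replica peak_lag_s behind after burst_s of load.

    Under constant rates, at the end of the burst the replica is replaying the
    transaction the master committed at time burst_s - peak_lag_s.
    """
    return master_tps * (1.0 - peak_lag_s / burst_s)


def catch_up_seconds(master_tps, apply_tps, burst_s):
    """Time needed to drain the backlog once the master goes idle."""
    backlog = (master_tps - apply_tps) * burst_s
    return backlog / apply_tps


MASTER_TPS = 689.5   # from the sysbench summary
BURST_S = 150        # length of each sysbench run
PEAK_LAG_S = 45      # assumed midpoint of the observed 40-50 s lag

apply_tps = implied_apply_rate(MASTER_TPS, BURST_S, PEAK_LAG_S)
print(round(apply_tps, 2), "tx/s applied on the replica")
print(round(catch_up_seconds(MASTER_TPS, apply_tps, BURST_S), 1), "s to catch up")
```

Under these assumptions the replica applies about 483 transactions per second and needs a little over a minute to catch up, which fits inside the 150 s idle window between runs.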

Beijing

Ningxia

7. From the charts above, the conclusion is that the lag comes from MySQL's own replay (apply) process on the replica not keeping up with the master.

So I upgraded the instance class of both replicas to 8 vCPU and 32 GB, reran the test, and compared the results.

Upgrading the instance class does help: the lag drops noticeably, to around 20-30 s.

Beijing

Ningxia

The Ningxia replica again shows the same lag as the Beijing replica, which confirms that the network is not the synchronization bottleneck. MySQL's own log replay is the performance bottleneck.

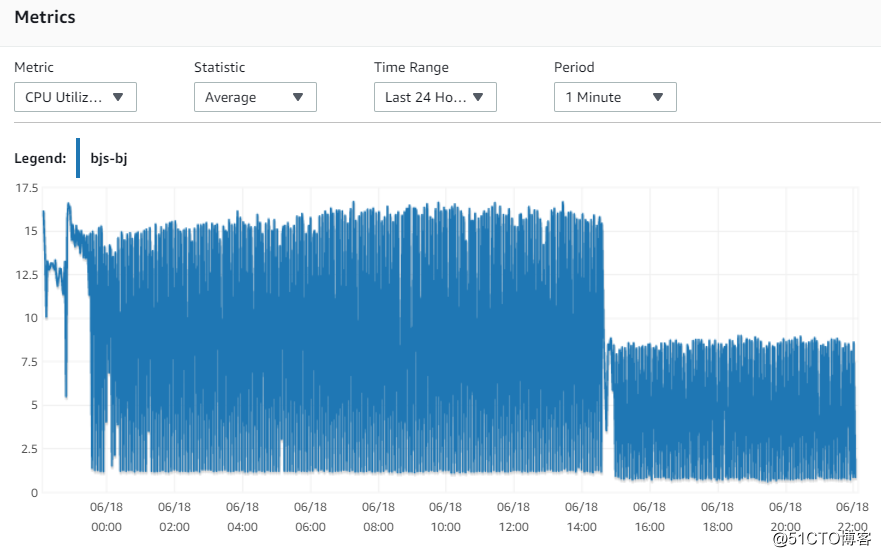

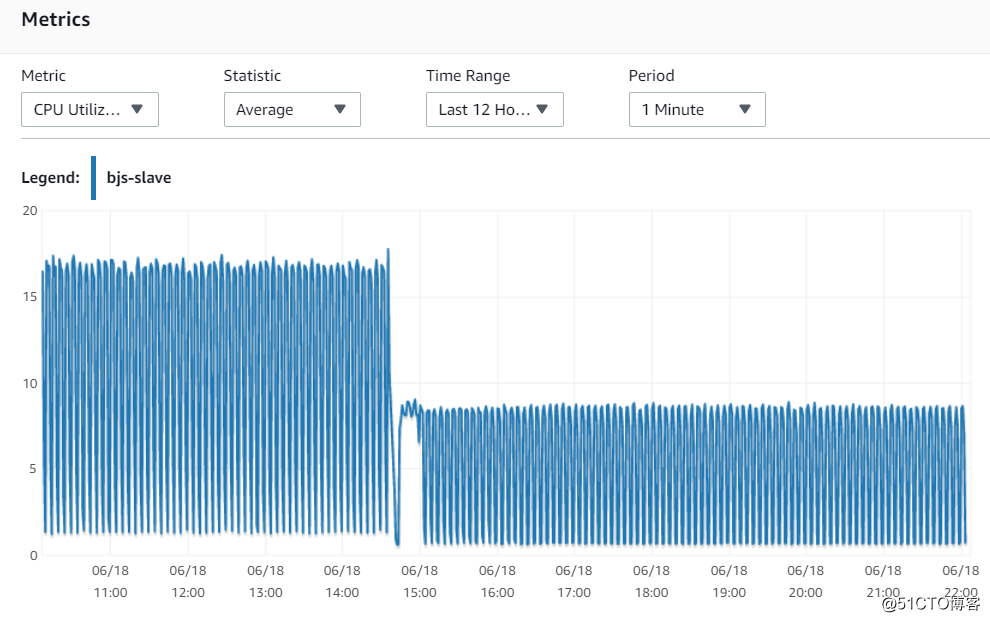

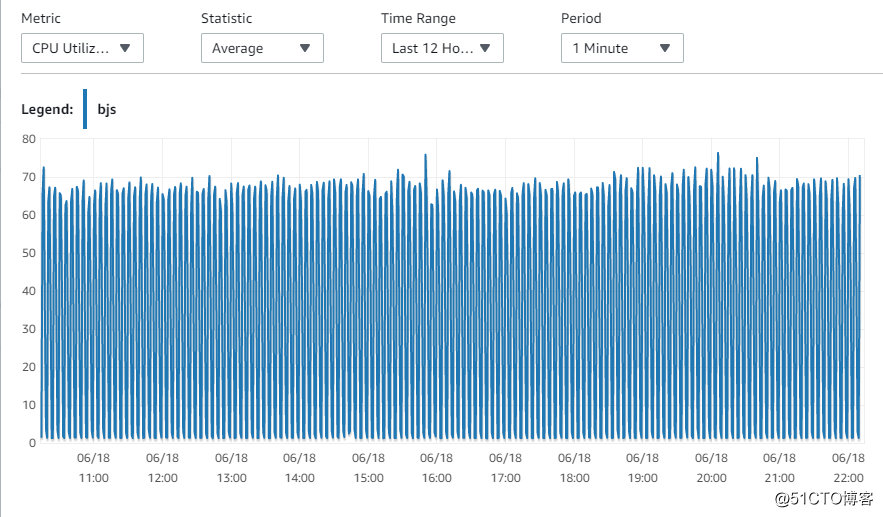

8. Next, look at CPU usage

Because the two replicas carry no load other than replication, the chart shows that applying the replicated data costs only about 15% CPU.

The later part of the curve is after the upgrade to 8 vCPU and 32 GB, where the load is only 7.5%. Hardware resources are therefore sufficient, and the synchronization lag is caused by MySQL itself.
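One way to sanity-check these two readings is to convert the percentages into absolute vCPU usage. A small sketch, assuming the CPU percentage is averaged over all vCPUs of the instance; the function name is illustrative only.

```python
def busy_vcpus(cpu_percent, vcpus):
    """Convert an instance-wide CPU percentage into the number of busy vCPUs."""
    return cpu_percent / 100.0 * vcpus


before = busy_vcpus(15.0, 4)   # replica with 4 vCPU
after = busy_vcpus(7.5, 8)     # replica with 8 vCPU
print(round(before, 2), round(after, 2))
```

Both readings come to roughly 0.6 vCPU, so the replicas do about the same amount of apply work before and after the upgrade and leave most of the CPU idle.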

Beijing

Ningxia

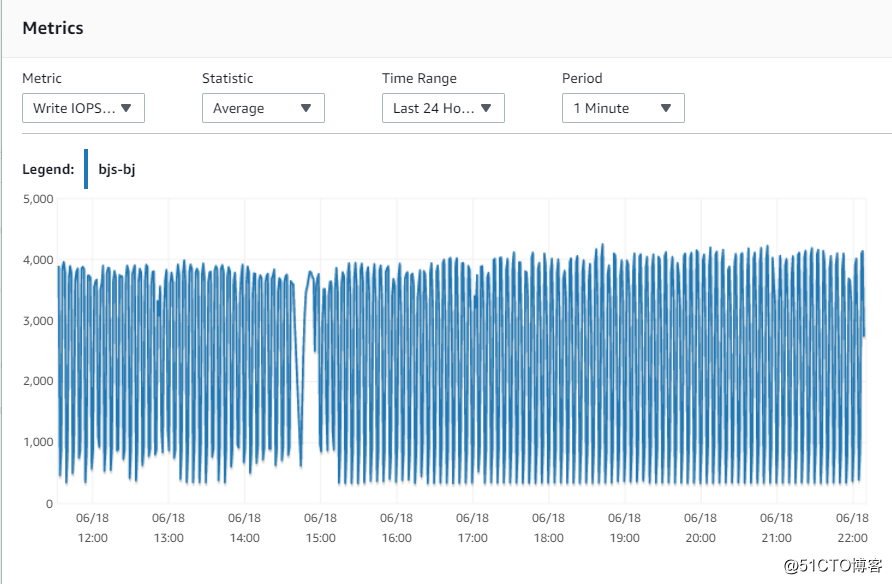

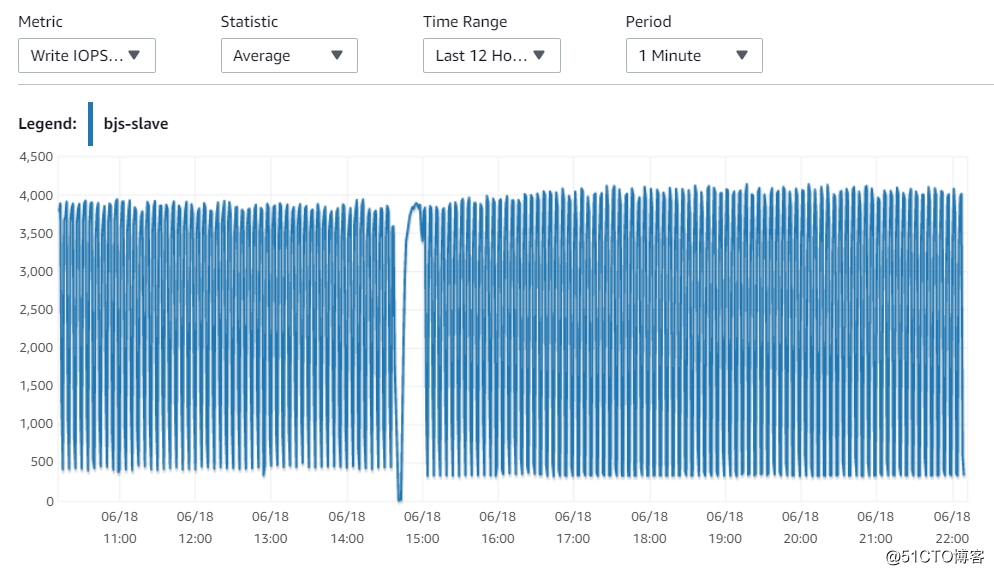

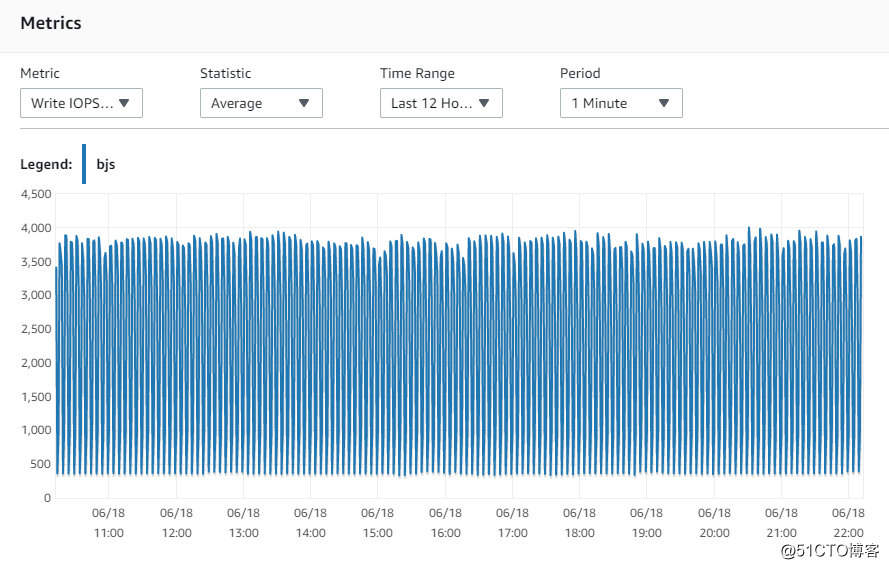

9. Write IOPS

Write IOPS is stable at around 4000 while I provisioned 6000 IOPS, so the disk is not a bottleneck. Moreover, the master handles both the reads and the writes with the same configuration, whereas the replica in theory only has to apply the writes, so its load is lower. Physical hardware resources are not the limit.

Beijing

Ningxia

10. Performance metrics of the master: with the same configuration, the master still has resources to spare and can meet the read and write demand.

CPU performance indicators

11. Write IOPS performance indicators

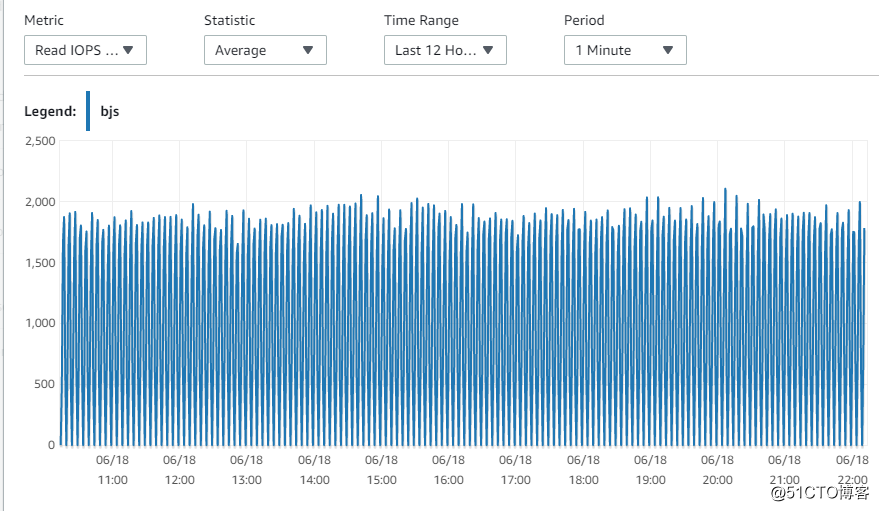

12. Read IOPS performance indicators

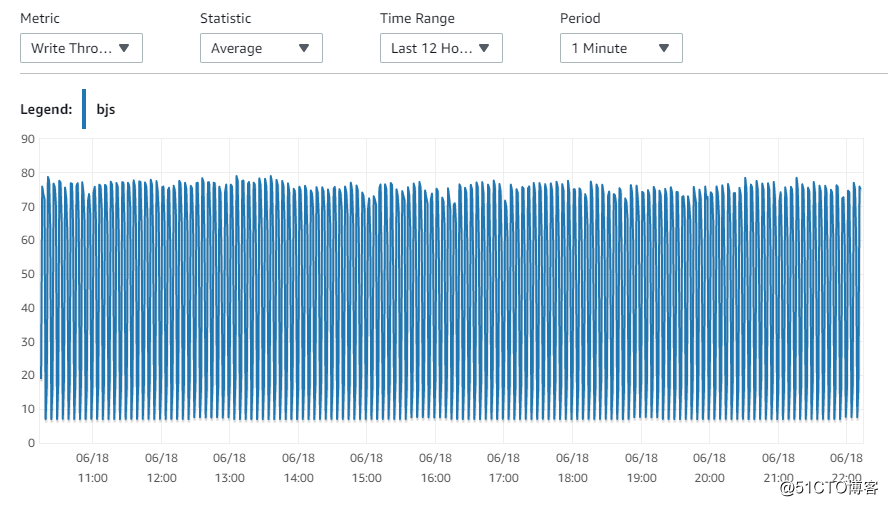

13. Write throughput performance indicators

14. Since neither the database host resources nor the network is the bottleneck, the problem lies in MySQL itself, and we need to tune it step by step.

Next, adjust and optimize the MySQL parameters.

The idea is to minimize the cost of the durability-related parameters on the replica: essentially relax all the I/O flush parameters and leave flushing to disk to the OS-level mechanisms.

In practice, the data-safety requirements for a replica are much lower than for the master. So when the replica applies changes too slowly, adjusting the following parameters to suit the actual situation has a significant effect.

    innodb_flush_log_at_trx_commit=0
    innodb_flush_neighbors=0
    innodb_flush_sync=0
    sync_binlog=0
    sync_relay_log=0
    slave_parallel_workers=50
    master-info-repository = TABLE
    relay-log-info-repository = TABLE
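On RDS these settings live in the DB parameter group attached to the replica. As a hedged sketch, the list above can be turned into the `Parameters` structure that the RDS `ModifyDBParameterGroup` API expects. The parameter names are copied as written above, the `pending-reboot` apply method and the group name in the comment are my assumptions, and whether each parameter is modifiable depends on the engine version.

```python
# Settings from the list above, kept exactly as written there.
REPLICA_PARAMS = {
    "innodb_flush_log_at_trx_commit": "0",
    "innodb_flush_neighbors": "0",
    "innodb_flush_sync": "0",
    "sync_binlog": "0",
    "sync_relay_log": "0",
    "slave_parallel_workers": "50",
    "master-info-repository": "TABLE",
    "relay-log-info-repository": "TABLE",
}


def to_rds_parameters(params, apply_method="pending-reboot"):
    """Build the Parameters list for a parameter-group update (illustrative helper)."""
    return [
        {
            "ParameterName": name,
            "ParameterValue": str(value),
            "ApplyMethod": apply_method,  # assumption: apply on next reboot
        }
        for name, value in params.items()
    ]


payload = to_rds_parameters(REPLICA_PARAMS)
for entry in payload:
    print(entry)

# Hypothetical usage, not executed here (group name is made up):
# boto3.client("rds").modify_db_parameter_group(
#     DBParameterGroupName="replica-relaxed-io", Parameters=payload)
```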

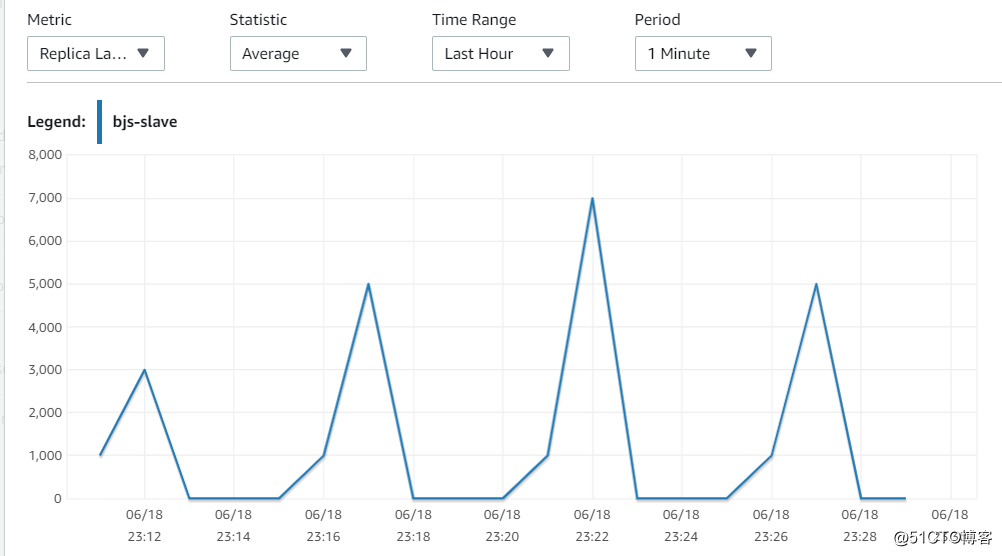

15. Results after tuning

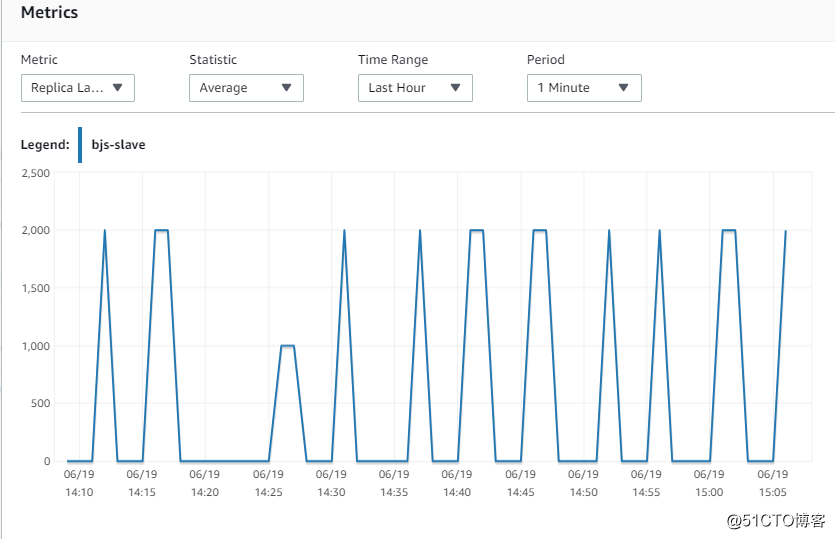

Ningxia

After adjusting the parameters the effect is immediate: the delay drops from 40 s to about 5 s.

16. Now look at the Beijing replica again (MySQL parameters not adjusted)

It still shows the earlier lag, with the delay staying at about 50 s.

Beijing

17. Finally, adjust one more parameter so that the workers really run in parallel. Its default value is DATABASE, which means the workers apply changes in parallel only when different databases are being modified. In reality, most customers keep many tables in a single database (schema), so change the parameter to LOGICAL_CLOCK to get actual parallelism.

slave_parallel_type=LOGICAL_CLOCK

With the workers running in parallel, the master-slave delay drops to within 2 s, which is good enough for most business scenarios.
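Why the switch matters for a single-schema workload can be illustrated with a toy scheduler. This is a deliberately simplified model, not MySQL's actual coordinator: under DATABASE a transaction waits for the previous transaction on the same database, while under LOGICAL_CLOCK it waits only for transactions whose sequence number is at or below its `last_committed` value. The `Tx` tuple, the cost of one time unit per transaction, and the two commit groups are made up for the illustration.

```python
import heapq
from collections import namedtuple

# seq and last_committed mimic the binlog's logical timestamps; cost is apply time.
Tx = namedtuple("Tx", "seq last_committed db cost")


def makespan(txs, workers, policy):
    """Total time to apply txs (in binlog order) with a pool of workers."""
    free_at = [0.0] * workers          # min-heap: when each worker becomes idle
    heapq.heapify(free_at)
    finish_by_seq = {}
    last_finish_by_db = {}
    for tx in txs:
        if policy == "DATABASE":
            # Transactions on the same database are applied one after another.
            ready = last_finish_by_db.get(tx.db, 0.0)
        elif policy == "LOGICAL_CLOCK":
            # Wait only for transactions committed before this one's commit group.
            ready = max((done for seq, done in finish_by_seq.items()
                         if seq <= tx.last_committed), default=0.0)
        else:
            raise ValueError(f"unknown policy: {policy}")
        start = max(ready, heapq.heappop(free_at))
        finish = start + tx.cost
        heapq.heappush(free_at, finish)
        finish_by_seq[tx.seq] = finish
        last_finish_by_db[tx.db] = finish
    return max(finish_by_seq.values())


# Eight transactions on one schema, committed on the master in two groups of four.
txs = [Tx(seq, 0 if seq <= 4 else 4, "testdb2", 1.0) for seq in range(1, 9)]

print("DATABASE:", makespan(txs, workers=4, policy="DATABASE"))
print("LOGICAL_CLOCK:", makespan(txs, workers=4, policy="LOGICAL_CLOCK"))
```

In this toy run the DATABASE policy needs 8 time units because everything is on one schema, while LOGICAL_CLOCK needs 2, one per commit group.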

18. Test summary

Test conditions:

Hardware: 4 vCPU, 16 GB memory, 6000 IOPS disk

Load: 50 concurrent sessions, about 700 TPS and 15,000 QPS, of which roughly 10,000 reads and 3,000 writes per second according to the sysbench output.

With tuned parameters, the MySQL master-slave delay from Beijing to Ningxia can be kept within 10 s.

If the replica has relatively high data-safety requirements, that is, if MySQL's disk-flush parameters are left unchanged, the delay is in the 40-50 s range.

Depending on the actual situation, better synchronization performance can be obtained by adjusting the MySQL parameters and by moving the replica to a larger instance class.

As an open-source RDBMS, MySQL has made great progress with Oracle's technical backing. Compared with Oracle Data Guard synchronization, however, replica performance still lags well behind.

I will keep looking into how to make MySQL replication faster.

19. About tuning MySQL master-slave synchronization:

<1. How MySQL master-slave synchronization delay arises in principle.

Answer: To explain the delay we have to start from how MySQL master-slave replication works. By default, replication is applied by a single thread. The master writes every DDL and DML change to the binlog, and the binlog is written sequentially, so this is very efficient. The replica's I/O thread (Slave_IO_Running) fetches the log from the master, which is also very efficient. The problem comes in the next step: the replica's SQL thread (Slave_SQL_Running) replays the master's DDL and DML on the replica. The I/O of DML and DDL is random rather than sequential, so it costs much more, and the replay may also contend for locks with other queries running on the replica. Because the SQL thread is single-threaded, if one DDL statement takes 10 minutes to execute, every subsequent statement waits for it to finish before continuing, and that is the delay. Some readers will ask: "The same DDL also takes 10 minutes on the master, so why is the replica delayed?" The answer is that the master can run statements concurrently, while the Slave_SQL_Running thread cannot.
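The queueing effect described here can be sketched in a few lines. This is a toy model with made-up numbers: each event is a pair of its arrival time on the replica and the time it takes to apply, and a single applier processes events strictly in order.

```python
def serial_apply_delays(events):
    """Delay of each event when a single thread applies them in order.

    events: list of (arrival_time_s, apply_cost_s) pairs in binlog order.
    Returns, per event, finish time on the replica minus arrival time.
    """
    delays = []
    applier_free_at = 0
    for arrival, cost in events:
        start = max(arrival, applier_free_at)   # wait for the previous event
        applier_free_at = start + cost
        delays.append(applier_free_at - arrival)
    return delays


# One 10-minute DDL followed by three 1-second statements, one second apart.
events = [(0, 600), (1, 1), (2, 1), (3, 1)]
print(serial_apply_delays(events))
```

Every statement behind the long DDL ends up about 600 s late, even though each of them takes only one second to apply.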

<2. How does the MySQL database master-slave synchronization delay occur?

Answer: When the master's TPS is high and it generates more DDL than a single SQL thread on the replica can handle, delay appears. Lock waits caused by large queries running on the replica can also contribute.

<3. MySQL database master-slave synchronization delay solution

Answer: The simplest way to reduce replica delay is to optimize the architecture so that DDL on the master executes quickly. In addition, the master takes the writes and therefore needs stricter data-safety settings such as sync_binlog=1 and innodb_flush_log_at_trx_commit=1, while the replica does not need that level of safety: it is perfectly reasonable to set sync_binlog to 0 or turn the binlog off, and innodb_flush_log_at_trx_commit can also be set to 0 to speed up SQL execution. Another option is to use better hardware for the replica than for the master.

Reference documents:

https://www.cnblogs.com/cnmenglang/p/6393769.html

https://dev.mysql.com/doc/refman/5.7/en/replication-options-slave.html