Return to the catalog: Development History of Classic Deep Learning Network Models

Previous: Deep Learning Series - Development History of Classic Deep Learning Network Models (3): The Structure and Characteristics of ZF-Net

Next: Deep Learning Series - Development History of Classic Deep Learning Network Models (5): The Structure and Characteristics of GoogLeNet (including v1, v2, v3)

This section describes the structure and characteristics of VGG16. The next section describes the structure and characteristics of GoogLeNet (including v1, v2, v3).

VGG16 paper: Very Deep Convolutional Networks for Large-Scale Image Recognition

II. Classic Networks

4. VGG16

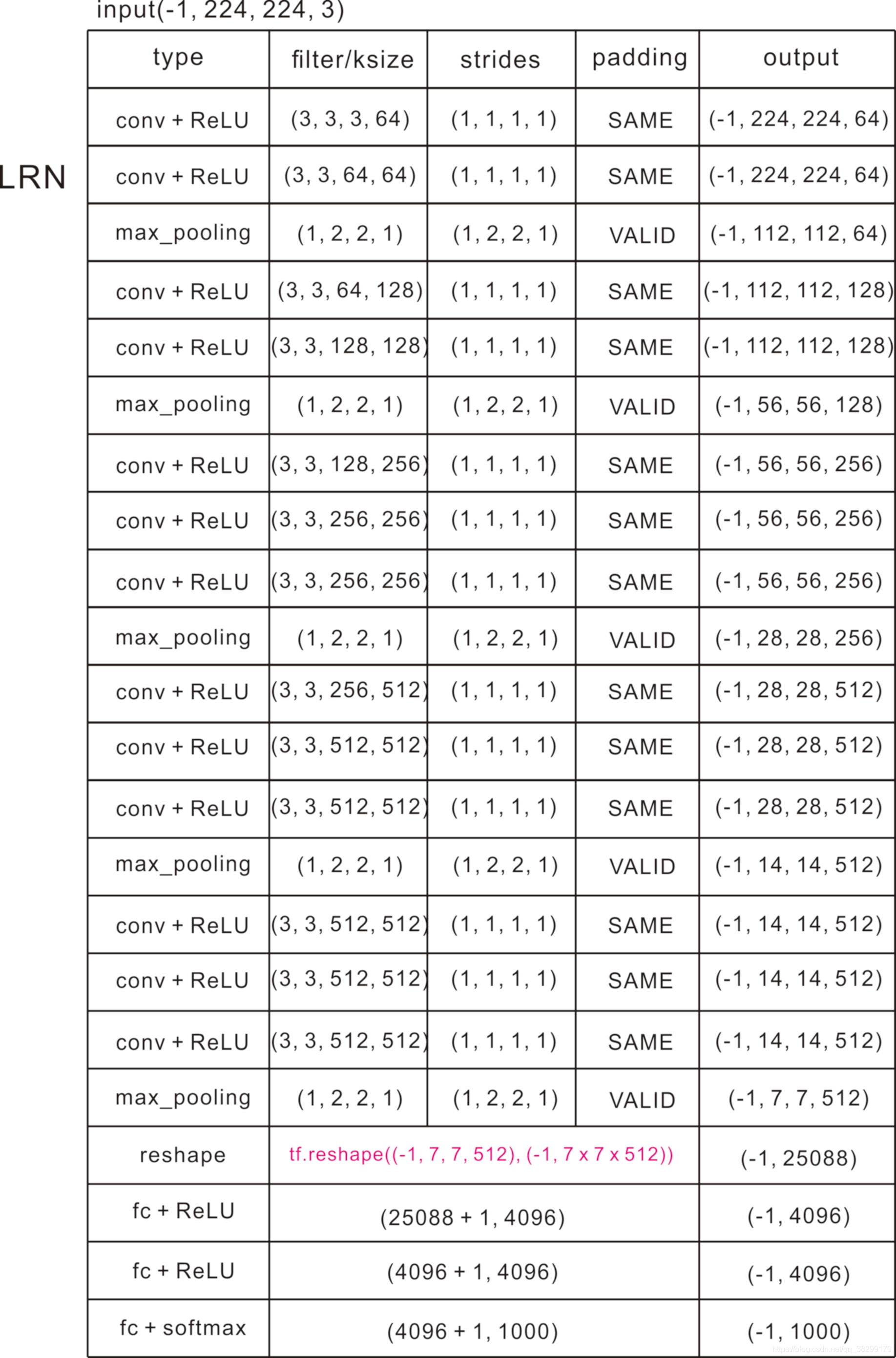

VGG16 was proposed by the Visual Geometry Group (VGG) at Oxford University. It was the base network of the entries that took first place in the localization task and second place in the classification task of the 2014 ImageNet competition. Experience with AlexNet and ZF-Net had shown that smaller convolution kernels and deeper networks help improve accuracy, and VGG16 was developed in that direction.

(1) Network Description:

(2) New features of VGG16:

① In VGG16, all convolution kernels are 3 x 3 with stride 1 and SAME padding; all pooling layers are max_pool with a 2 x 2 pooling kernel, stride 2, and VALID padding.
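To make the effect of these settings concrete, here is a minimal sketch that traces the spatial size of a feature map through the network. It assumes a 224 x 224 input and the block layout of two, two, three, three, and three convolution layers from the VGG paper; neither is stated above. The helper names `conv_same`, `pool_valid`, and `trace_sizes` are made up for illustration.

```python
import math

# Assumption: number of 3x3 convolution layers in each of the five VGG16 blocks
CONVS_PER_BLOCK = [2, 2, 3, 3, 3]


def conv_same(size, stride=1):
    """Output size of a convolution with SAME padding: ceil(input / stride)."""
    return math.ceil(size / stride)


def pool_valid(size, kernel=2, stride=2):
    """Output size of pooling with VALID padding: floor((input - kernel) / stride) + 1."""
    return (size - kernel) // stride + 1


def trace_sizes(size=224):
    """Return the spatial size at the input and after each block's max pooling."""
    sizes = [size]
    for n_convs in CONVS_PER_BLOCK:
        for _ in range(n_convs):
            size = conv_same(size)   # 3x3, stride 1, SAME: size is unchanged
        size = pool_valid(size)      # 2x2, stride 2, VALID: size is halved
        sizes.append(size)
    return sizes


print(trace_sizes())  # [224, 112, 56, 28, 14, 7]
```

With SAME padding and stride 1 the convolutions never shrink the feature map, so only the five pooling layers reduce the resolution, each by a factor of two.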

② One improvement of VGG16 over AlexNet is that it uses several consecutive 3 x 3 convolution kernels in place of AlexNet's larger kernels (11 x 11, 5 x 5). For a given receptive field (the size of the local region of the input image that one output value depends on), a stack of small convolution kernels is better than one large kernel: the additional nonlinear layers increase the network depth and allow the model to learn more complex patterns, while the cost stays comparatively small (fewer parameters, 5 x 5 > 3 x 3 + 3 x 3).
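The parameter comparison can be checked with a few lines of arithmetic. The sketch below assumes every layer has the same number of input and output channels (64 here, chosen only as an example) and ignores biases; `conv_weights` is a hypothetical helper.

```python
def conv_weights(kernel, channels, layers=1):
    """Weights in `layers` stacked kernel x kernel convolutions.

    Assumes `channels` input and output channels per layer; biases are ignored.
    """
    return layers * kernel * kernel * channels * channels


C = 64  # example channel count, an assumption for illustration
single_5x5 = conv_weights(5, C)           # one 5x5 layer
two_3x3 = conv_weights(3, C, layers=2)    # two stacked 3x3 layers

print(single_5x5)            # 102400
print(two_3x3)               # 73728
print(two_3x3 / single_5x5)  # 0.72
```

The ratio 18/25 does not depend on the channel count, so under these assumptions the stacked version always needs 28% fewer weights than the single 5 x 5 layer.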

③ Advantages:

a. A series of stacked convolutions has fewer parameters than a single larger convolution kernel and applies more nonlinear transformations than a single convolution, so it can learn more complex patterns. With stacked kernels the features are extracted several times, which is finer than extraction by a single kernel. Because the stride is smaller than the kernel size, neighboring windows overlap, and this overlapping extraction may also make the features finer.

b. The VGG16 model is relatively stable and easy to transfer, so many networks use it as their backbone.
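The claim in point a that stacked small kernels cover the same region as one large kernel can be verified with the standard receptive field recurrence. This is a minimal sketch; the function name `receptive_field` and the `(kernel, stride)` layer encoding are assumptions made for the example.

```python
def receptive_field(layers):
    """Receptive field of one output value after a stack of layers.

    `layers` is a list of (kernel, stride) pairs, applied in order.
    """
    rf = 1     # one output value initially "sees" one input pixel
    jump = 1   # distance in input pixels between neighboring outputs
    for kernel, stride in layers:
        rf += (kernel - 1) * jump
        jump *= stride
    return rf


print(receptive_field([(5, 1)]))      # 5: one 5x5 convolution
print(receptive_field([(3, 1)] * 2))  # 5: two stacked 3x3 convolutions
print(receptive_field([(3, 1)] * 3))  # 7: three stacked 3x3 convolutions
```

Two 3 x 3 layers see the same 5 x 5 region as a single 5 x 5 layer but apply two nonlinearities instead of one, which is the trade-off described above.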

④ Disadvantages:

a. The architecture has a very large number of weights, so it consumes a lot of disk space. Its weight file is bigger than ResNet-53's.

b. Because VGG16 has a large number of fully connected nodes and the network is relatively deep, training is very slow.
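A rough count shows where the weights come from. The sketch below assumes the layer widths of configuration D in the VGG paper, a 224 x 224 input (so the last feature map is 7 x 7 x 512), 1000 output classes, and float32 storage; none of these numbers appear in the text above. The names `CONV_CHANNELS`, `FC_SHAPES`, and `count_params` are chosen for illustration.

```python
# Assumption: (input channels, output channels) of each 3x3 convolution in VGG16
CONV_CHANNELS = [
    (3, 64), (64, 64),
    (64, 128), (128, 128),
    (128, 256), (256, 256), (256, 256),
    (256, 512), (512, 512), (512, 512),
    (512, 512), (512, 512), (512, 512),
]
# Assumption: (inputs, outputs) of the three fully connected layers
FC_SHAPES = [(7 * 7 * 512, 4096), (4096, 4096), (4096, 1000)]


def count_params():
    """Return (convolution parameters, fully connected parameters), biases included."""
    conv = sum(3 * 3 * c_in * c_out + c_out for c_in, c_out in CONV_CHANNELS)
    fc = sum(n_in * n_out + n_out for n_in, n_out in FC_SHAPES)
    return conv, fc


conv, fc = count_params()
total = conv + fc
print(conv)   # 14714688
print(fc)     # 123642856
print(total)  # 138357544
print(round(total * 4 / 2**20), "MiB as float32")  # 528 MiB as float32
```

Under these assumptions the three fully connected layers hold roughly 89% of all parameters, which is why they dominate the size of the weight file.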
