proxy

The theory behind proxies was covered earlier, so this time we go straight to setting a proxy with requests.

import requests

url = 'https://www.baidu.com/s?wd=ip&ie=UTF-8'

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3497.100 Safari/537.36'
}

# Route both HTTP and HTTPS traffic through the same proxy
proxies = {
    'http': 'http://117.88.176.252:3000',
    'https': 'http://117.88.176.252:3000',
}

r = requests.get(url, headers=headers, proxies=proxies)

print(r.status_code)

A status code of 200 means the request succeeded, but the next request may still fail, because a free proxy can stop working at any time. If you want to test this yourself, look up a fresh, working proxy on the Xici proxy site (西刺代理).
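Because a proxy that worked a moment ago may fail on the next request, it can help to try several candidates and keep the first one that responds. The following is a minimal sketch, not a definitive implementation: the helper name `first_working_proxy`, its `get` parameter (there only so the function can be exercised without a network), and the timeout value are assumptions made for illustration.

```python
import requests


def first_working_proxy(candidates, test_url, get=requests.get, timeout=5):
    """Return the first 'host:port' in candidates that yields HTTP 200, else None."""
    for address in candidates:
        proxies = {
            'http': 'http://' + address,
            'https': 'http://' + address,
        }
        try:
            response = get(test_url, proxies=proxies, timeout=timeout)
        except requests.RequestException:
            # Dead or unreachable proxy: move on to the next candidate
            continue
        if response.status_code == 200:
            return address
    return None
```

Calling `first_working_proxy(['117.88.176.252:3000'], 'https://www.baidu.com')` would return the address if that proxy still answers, and `None` otherwise.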

SSL certificate verification

HTTPS and CA certificates

A website without a trusted SSL certificate is still reachable, but mainstream browsers will flag it as "not secure" or show a similar warning when you open it.

You have probably seen a warning page like that.

SSL certificate verification works roughly as follows:

- The server applies to a CA for a certificate (assume this certificate cannot be forged). When the browser first sends a request to the server, the server returns the certificate to the browser. (The certificate contains the public key, information about the applicant and the issuer, and a signature.)

- Once the browser receives the certificate, it checks the information in it and confirms that the certificate is valid (not expired, and so on). (The verification process is fairly complex; it was described in detail earlier.)

- If the certificate is valid, the client generates a random number, encrypts it with the public key from the certificate, and sends it to the server. The server decrypts it with its private key to recover the random number. From then on, both sides use that random number as the key to encrypt and decrypt the data they exchange.
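The key-exchange step in the last bullet can be sketched with textbook RSA and tiny numbers. This is a toy model only: the primes, the XOR-based cipher, and every name here are assumptions chosen for illustration. Real TLS uses far larger keys, padding, and vetted ciphers.

```python
import hashlib
import secrets

# Toy RSA key pair held by the "server" (tiny primes, NOT secure)
p, q = 61, 53
n = p * q        # modulus, part of the public key
e = 17           # public exponent
d = 2753         # private exponent: (e * d) % ((p - 1) * (q - 1)) == 1


def xor_cipher(data: bytes, secret: int) -> bytes:
    """Encrypt or decrypt data with a key derived from the shared secret."""
    key = hashlib.sha256(str(secret).encode()).digest()
    return bytes(b ^ key[i % len(key)] for i, b in enumerate(data))


# Client: pick a random number and encrypt it with the server's public key
client_secret = secrets.randbelow(n - 2) + 2
encrypted_secret = pow(client_secret, e, n)

# Server: recover the random number with the private key
server_secret = pow(encrypted_secret, d, n)

# Both sides now hold the same secret and can exchange encrypted data
ciphertext = xor_cipher(b'GET / HTTP/1.1', client_secret)
plaintext = xor_cipher(ciphertext, server_secret)
```

Only the holder of `d` can recover the random number, which is why the browser must first be sure that the public key really belongs to the site it is talking to.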

When a site has no valid SSL certificate, the user cannot see the page unless they click "Advanced" and proceed. As we said on day one, a crawler can only fetch pages that a user can access; if the user cannot see the page, the crawler cannot fetch it either.

Either way, let's try crawling it the usual way.

import requests

url = 'https://www.desktx.com'  # this is the page that triggers the security warning

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3497.100 Safari/537.36'
}

response = requests.get(url=url, headers=headers)

data = response.content

# To see the result more clearly, write it to a file
with open('ssl_requests.html', 'wb') as f:
    f.write(data)

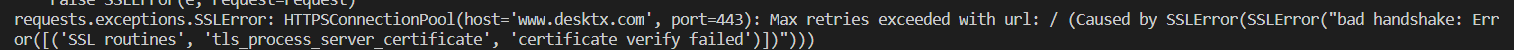

Awkward: it fails immediately. Here is the error:

requests.exceptions.SSLError: HTTPSConnectionPool(host='www.desktx.com', port=443)

So the page cannot be crawled at all (crying).

HTTPS relies on a certificate issued by a third-party CA. This site uses HTTPS, but it has no certificate from a third-party CA; it uses its own self-signed certificate.

Solution: tell requests to skip certificate verification.

import requests

url = 'https://www.desktx.com'  # this is the page that triggers the security warning

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3497.100 Safari/537.36'
}

# verify=False skips certificate verification for this request
response = requests.get(url=url, headers=headers, verify=False)

data = response.content

with open('ssl_requests.html', 'wb') as f:
    f.write(data)

Simply adding verify=False to the request is enough.
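With verification turned off, urllib3 emits an InsecureRequestWarning for the request. The sketch below silences that warning and also shows the alternative of passing a CA bundle path to `verify`, so that the certificate is still checked. The helper name `fetch`, its `get` parameter (used only so it can be exercised without a network), and the file name `my_ca.pem` are assumptions for illustration.

```python
import requests
import urllib3

# Suppress the warning that urllib3 raises when verification is turned off
urllib3.disable_warnings(urllib3.exceptions.InsecureRequestWarning)


def fetch(url, ca_bundle=None, get=requests.get, **kwargs):
    """GET url, verifying against ca_bundle if given, otherwise skipping verification."""
    verify = ca_bundle if ca_bundle else False
    return get(url, verify=verify, **kwargs)
```

`fetch('https://www.desktx.com')` skips verification entirely, while `fetch('https://www.desktx.com', ca_bundle='my_ca.pem')` would trust only the certificates in the given file.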

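Another approach is to turn off certificate verification globally. Note that this patches the default HTTPS context of the standard library, so it applies to urllib rather than to requests: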

import ssl

ssl._create_default_https_context = ssl._create_unverified_context

In general, the first method is all you need.

cookie

Remember when we simulated logging in to Renren with the urllib library? To recap, we had two approaches:

- Log in, capture the traffic, and write the cookie directly into the request headers.

- Capture the login request to get the form data (the submitted FormData), create a cookiejar object, use it with HTTPCookieProcessor to build a handler, and then create our own opener to send the requests.

This time, let's implement it with requests.

As we know, cookies are a session matter, so we first create a session and then send every request through it.

The packet-capture analysis is not shown again here because it is the same as in the previous example; if you have forgotten it, go back and review that post.

Let's go straight to the code:

import requests

# For anything session-related, create a session first
s = requests.Session()

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3497.100 Safari/537.36'
}

formdata = {
    'rkey': '',  # fill in the value captured from the login request
    'password': '',  # fill in the value captured from the login request
    'origURL': 'http://www.renren.com/home',
    'key_id': '1',
    'icode': '',
    'f': 'http%3A%2F%2Fwww.renren.com%2F823000881',
    'email': '',  # fill in the value captured from the login request
    'domain': 'renren.com',
    'captcha_type': 'web_login',
}

post_url = 'http://www.renren.com/ajaxLogin/login?1=1&uniqueTimestamp=2020159150'

# Send the login request through the session we just created
r = s.post(url=post_url, headers=headers, data=formdata)

# s now holds the cookies, so the personal page can be fetched successfully
get_url = 'http://sc.renren.com/scores/mycalendar'

ret = s.get(url=get_url, headers=headers)

# with open('renren_r.html', 'wb') as f:
#     f.write(ret.content)

print(ret.text)

# Requesting through s, rather than through requests directly, keeps us logged in
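To see why going through the session matters, the following sketch inspects a request prepared by a Session without sending anything over the network. The cookie name `token`, its value, and the `example.com` URL are made up for illustration; in the real flow the cookie would be set by the server's login response.

```python
import requests

s = requests.Session()

# Stand-in for a cookie that a login response would normally set
s.cookies.set('token', 'abc123')

# Build (but do not send) a request through the session
request = requests.Request('GET', 'http://example.com/profile')
prepared = s.prepare_request(request)

# The session attached its stored cookie to the outgoing request
print(prepared.headers.get('Cookie'))
```

A plain `requests.get(...)` call starts with an empty cookie jar each time, which is why the login state would be lost without the session.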

Similarly, for the first approach, all we need to do is take the captured cookie and pass it in through the cookies parameter.

import requests

# Approach: pass the cookie in directly
cookie = ''  # fill in the cookie string you captured

# This is a cookie string, but requests expects a dict, so create one first
cookie_dict = {}

# Convert manually: first split on semicolons
for item in cookie.split(';'):
    # Then split each pair on the first '=' (pieces without '=' are skipped)
    if '=' in item:
        name, value = item.strip().split('=', 1)
        cookie_dict[name] = value

# A dict comprehension would also work for building the dict

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3497.100 Safari/537.36'
}

url = ''  # the URL you want to visit

r = requests.get(url=url, headers=headers, cookies=cookie_dict)

with open('renren_r.html', 'wb') as f:
    f.write(r.content)
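The code comments mention that a dict comprehension could do the same conversion. One possible version is sketched below; the function name `parse_cookie_string` and the sample cookie string are assumptions for illustration. It strips whitespace around each pair and splits only on the first `=`, so values that themselves contain `=` are kept intact.

```python
def parse_cookie_string(cookie):
    """Turn 'k1=v1; k2=v2' into {'k1': 'v1', 'k2': 'v2'}."""
    pairs = (item.strip() for item in cookie.split(';'))
    return {
        name: value
        for name, _, value in (pair.partition('=') for pair in pairs if pair)
    }


cookie_dict = parse_cookie_string('anonymid=k5abc; t=abc=def; ')
print(cookie_dict)
```

The resulting dict can be passed straight to the `cookies` parameter of `requests.get`.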

auth authentication

There is no live demonstration for this one, just a brief description. If you are on a company intranet, you can try it:

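import requests

url = ''  # placeholder: the intranet URL you want to visit
headers = {'User-Agent': 'Mozilla/5.0'}
username, pwd = '', ''  # placeholders: fill in your own credentials

auth = (username, pwd)  # a tuple; just pass it in when accessing the intranet

response = requests.get(url, headers=headers, auth=auth)

# That is all there is to it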
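In requests, a (username, password) tuple passed as `auth` is shorthand for HTTP Basic authentication. The sketch below prepares such a request without sending it, so the resulting Authorization header can be inspected. The URL and the credentials are placeholders, not values from this article.

```python
import requests
from requests.auth import HTTPBasicAuth

url = 'http://intranet.example.com/'  # placeholder intranet address

# Passing a tuple and passing HTTPBasicAuth explicitly are equivalent
with_tuple = requests.Request('GET', url, auth=('user', 'pass')).prepare()
explicit = requests.Request('GET', url, auth=HTTPBasicAuth('user', 'pass')).prepare()

# Both carry the same "Basic <base64 of user:pass>" header
print(with_tuple.headers['Authorization'])
print(explicit.headers['Authorization'])
```

Because Basic authentication only base64-encodes the credentials, it is best used over HTTPS or on a trusted network.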

That roughly wraps up the requests library. But you may have noticed that so far we have only been saving pages, which is not much use in practice. Next we will look at parsing the crawled data, and tomorrow we start with regular expressions.

If you learned something, leave a like before you go. Corrections from passing experts are welcome in the comments.