    import tensorflow as tf
    from tensorflow.examples.tutorials.mnist import input_data

    # Load the data set
    mnist = input_data.read_data_sets("MNIST_data/", one_hot=True)

    # Each input image is 28 * 28
    n_inputs = 28     # one row per time step; a row holds 28 values
    max_time = 28     # 28 rows in total, so 28 time steps
    lstm_size = 100   # number of hidden units in the LSTM cell
    n_classes = 10    # 10 classes
    batch_size = 50   # 50 samples per batch
    n_batch = mnist.train.num_examples // batch_size  # total number of batches

    # None means the first dimension can have any length
    x = tf.placeholder(tf.float32, [None, 784])
    # Correct labels
    y = tf.placeholder(tf.float32, [None, 10])

    # Initialize the weights
    weights = tf.Variable(tf.truncated_normal([lstm_size, n_classes], stddev=0.1))
    # Initialize the biases
    biases = tf.Variable(tf.constant(0.1, shape=[n_classes]))


    # Define the RNN network
    def RNN(X, weights, biases):
        # [batch_size, 784] -> [batch_size, max_time, n_inputs]
        inputs = tf.reshape(X, [-1, max_time, n_inputs])
        # Define the basic LSTM cell
        lstm_cell = tf.contrib.rnn.BasicLSTMCell(lstm_size)
        # final_state[0] is the cell state, final_state[1] is the hidden state
        outputs, final_state = tf.nn.dynamic_rnn(lstm_cell, inputs, dtype=tf.float32)
        results = tf.nn.softmax(tf.matmul(final_state[1], weights) + biases)
        return results


    # Compute the output of the RNN
    prediction = RNN(x, weights, biases)
    # Loss function
    # Note: prediction is already a softmax output, and
    # softmax_cross_entropy_with_logits applies softmax again internally.
    # This is kept as in the original; passing raw logits is the usual form.
    cross_entropy = tf.reduce_mean(
        tf.nn.softmax_cross_entropy_with_logits(logits=prediction, labels=y))
    # Optimize with AdamOptimizer
    train_step = tf.train.AdamOptimizer(1e-4).minimize(cross_entropy)
    # Store the results in a list of booleans;
    # argmax returns the position of the largest value along the given axis
    correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(prediction, 1))
    # Compute the accuracy (cast correct_prediction to float32 first)
    accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))
    # Initialize the variables
    init = tf.global_variables_initializer()

    with tf.Session() as sess:
        sess.run(init)
        for epoch in range(6):
            for batch in range(n_batch):
                batch_xs, batch_ys = mnist.train.next_batch(batch_size)
                sess.run(train_step, feed_dict={x: batch_xs, y: batch_ys})
            acc = sess.run(accuracy, feed_dict={x: mnist.test.images, y: mnist.test.labels})
            print("Iter " + str(epoch) + ", Testing Accuracy= " + str(acc))
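
The two steps that are easiest to misread in the code above are the reshape that turns each 784-value image into a sequence of 28 rows, and the accuracy computation based on `argmax`. The following is only a rough NumPy sketch of those two steps, not part of the original program: the random batch and the hand-picked labels and predictions are made-up stand-ins for MNIST data and network output.

```python
import numpy as np

n_inputs, max_time = 28, 28  # same values as in the program above
rng = np.random.default_rng(0)

# Stand-in for a batch of 5 flattened MNIST images (random data, an assumption).
batch = rng.random((5, 784), dtype=np.float32)

# Same reshape as in RNN(): [batch, 784] -> [batch, max_time, n_inputs].
sequences = batch.reshape(-1, max_time, n_inputs)

# Time step 0 of image 0 is simply the first 28 pixels, i.e. the first row.
assert np.array_equal(sequences[0, 0], batch[0, :n_inputs])

# Accuracy as in the program: compare the argmax of the one-hot labels
# with the argmax of the predictions, then average the matches.
labels = np.eye(10, dtype=np.float32)[[3, 1, 4, 1, 5]]       # hypothetical labels
predictions = np.eye(10, dtype=np.float32)[[3, 1, 4, 0, 5]]  # one wrong guess
correct = np.argmax(labels, axis=1) == np.argmax(predictions, axis=1)
accuracy = correct.astype(np.float32).mean()
```

With one of the five hypothetical predictions wrong, `accuracy` is four correct out of five. The LSTM therefore reads each image top to bottom, one row per time step, and only the final hidden state is passed to the output layer.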

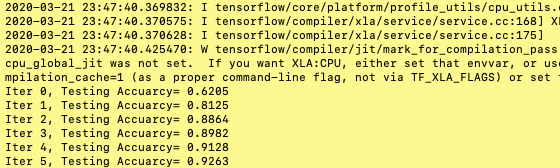

Run result: