Official repository: https://github.com/THUDM/ChatGLM3

1 Save the code locally

Method 1: clone with git

git clone https://github.com/THUDM/ChatGLM3

Method 2: download the ZIP archive

https://github.com/THUDM/ChatGLM3/archive/refs/heads/main.zip

2 Create the Dockerfile

Note: install Docker first. For installation steps, see:

Docker usage and local YOLOv5 packaging tutorial (Father_of_Python's blog, CSDN)

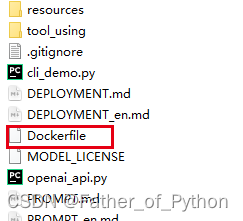

① Create a new file named Dockerfile in the ChatGLM3 project root (next to requirements.txt).

② Add the following content:

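# Base image

FROM python:3.11.6

# Copy the project code into the image

ADD . /usr/src/app/uniform/ChatGLM3

# Set the project folder as the working directory

WORKDIR /usr/src/app/uniform/ChatGLM3

# Install dependencies (via the Tsinghua PyPI mirror)

RUN pip install -i https://pypi.tuna.tsinghua.edu.cn/simple/ -r requirements.txt

3 Build and run the Docker container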

① Run the following commands from the project root:

docker build -t chatglm3 .

docker run -it --gpus all --net=host --ipc=host --privileged=true --name test01 --ulimit core=-1 -v F:/ChatGLM3:/usr/src/app/uniform/ChatGLM3 -e LANG=C.UTF-8 chatglm3 /bin/bash

Note: change F:/ChatGLM3 in the command above to the path where you saved the project locally.

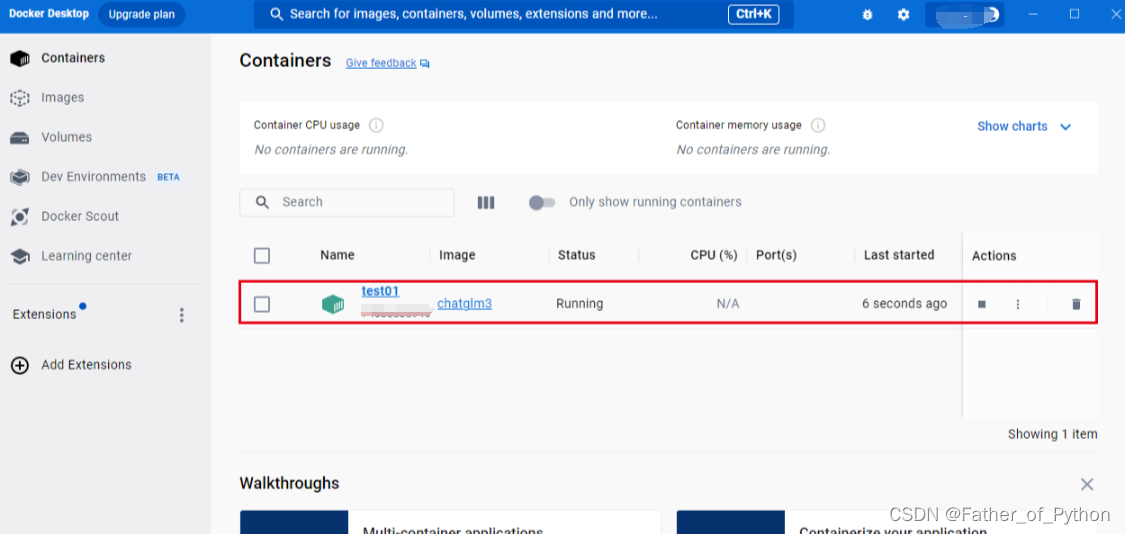

② Check that the container is running:
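A minimal sketch of that check, written as small shell helpers to run on the host. It assumes the image tag `chatglm3` and the container name `test01` from the commands above; the helper names are hypothetical, and `check_gpu` only works if GPU passthrough via `--gpus all` took effect.

```shell
#!/bin/sh
# Hypothetical helpers for the container started with "docker run ... --name test01 ... chatglm3".
IMAGE_NAME="chatglm3"
CONTAINER_NAME="test01"

# Confirm the image built by "docker build -t chatglm3 ." exists.
show_image() {
  docker images "$IMAGE_NAME"
}

# Print name and status, e.g. "Up 5 minutes" or "Exited (0) 2 minutes ago".
# The name filter is a substring match, so "test01" would also match "test010".
show_status() {
  docker ps -a --filter "name=$CONTAINER_NAME" --format '{{.Names}}: {{.Status}}'
}

# Open an additional shell in the running container.
enter_running() {
  docker exec -it "$CONTAINER_NAME" /bin/bash
}

# Restart a stopped container and attach to its original /bin/bash session.
restart_and_attach() {
  docker start -ai "$CONTAINER_NAME"
}

# Check that the GPU is visible inside the container (assumes --gpus all is working).
check_gpu() {
  docker exec "$CONTAINER_NAME" nvidia-smi
}
```

For example, source the file (say `. ./container_helpers.sh`, a hypothetical name) and call `show_status`; if it reports `Exited`, use `restart_and_attach` instead of running `docker run` again, which would fail because the name `test01` is already taken.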

4 Run the demo inside the container

Run the following commands:

cd /usr/src/app/uniform/ChatGLM3/basic_demo

python web_demo.py

On the first run the script downloads the model weights, which can take a long time. If you use a proxy or VPN, switch it to global mode. Without one, you can download the weights manually: chatglm3-6b
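A hedged sketch of the manual download, run inside the container. It assumes `git` and `git-lfs` are installed (the python:3.11.6 image ships git, but git-lfs may need `apt-get install git-lfs`) and that the Hugging Face URL is reachable; replace it with a mirror you can access otherwise. The target folder and the function names are hypothetical. Whether `web_demo.py` reads a `MODEL_PATH` environment variable depends on the version of the repository; if yours does not, replace the hard-coded `THUDM/chatglm3-6b` in the script with the local folder path.

```shell
#!/bin/sh
# Sketch: fetch chatglm3-6b manually, then start the basic web demo from the local copy.
# Assumption: this URL is reachable; swap in a mirror if it is not.
MODEL_REPO_URL="https://huggingface.co/THUDM/chatglm3-6b"
# Hypothetical target folder. Keeping it inside the mounted project folder
# means the weights persist on the host (e.g. under F:/ChatGLM3).
MODEL_DIR="/usr/src/app/uniform/ChatGLM3/chatglm3-6b"

download_weights() {
  # Enable Git LFS so the large weight files are fetched, not just pointer files.
  git lfs install &&
    git clone "$MODEL_REPO_URL" "$MODEL_DIR"
}

run_web_demo_local() {
  cd /usr/src/app/uniform/ChatGLM3/basic_demo || return 1
  # MODEL_PATH only has an effect if your version of web_demo.py reads it;
  # otherwise edit the "THUDM/chatglm3-6b" string in the script to this folder.
  MODEL_PATH="$MODEL_DIR" python web_demo.py
}
```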

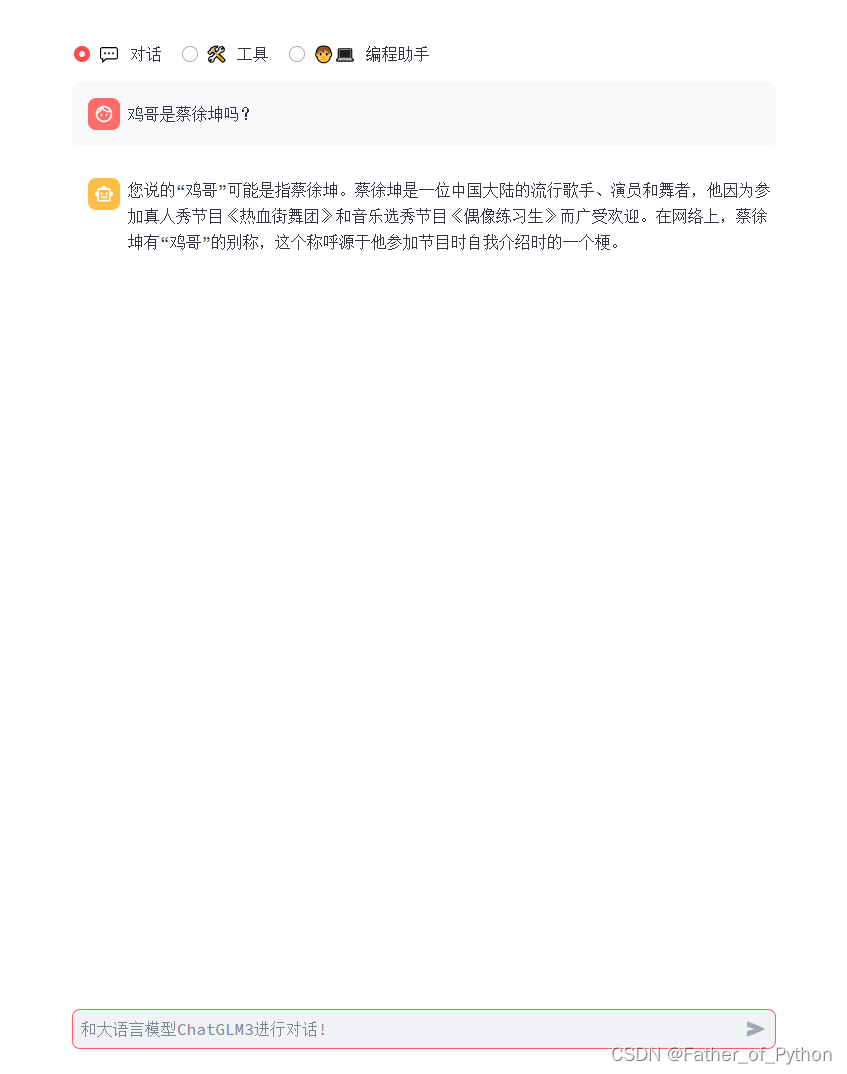

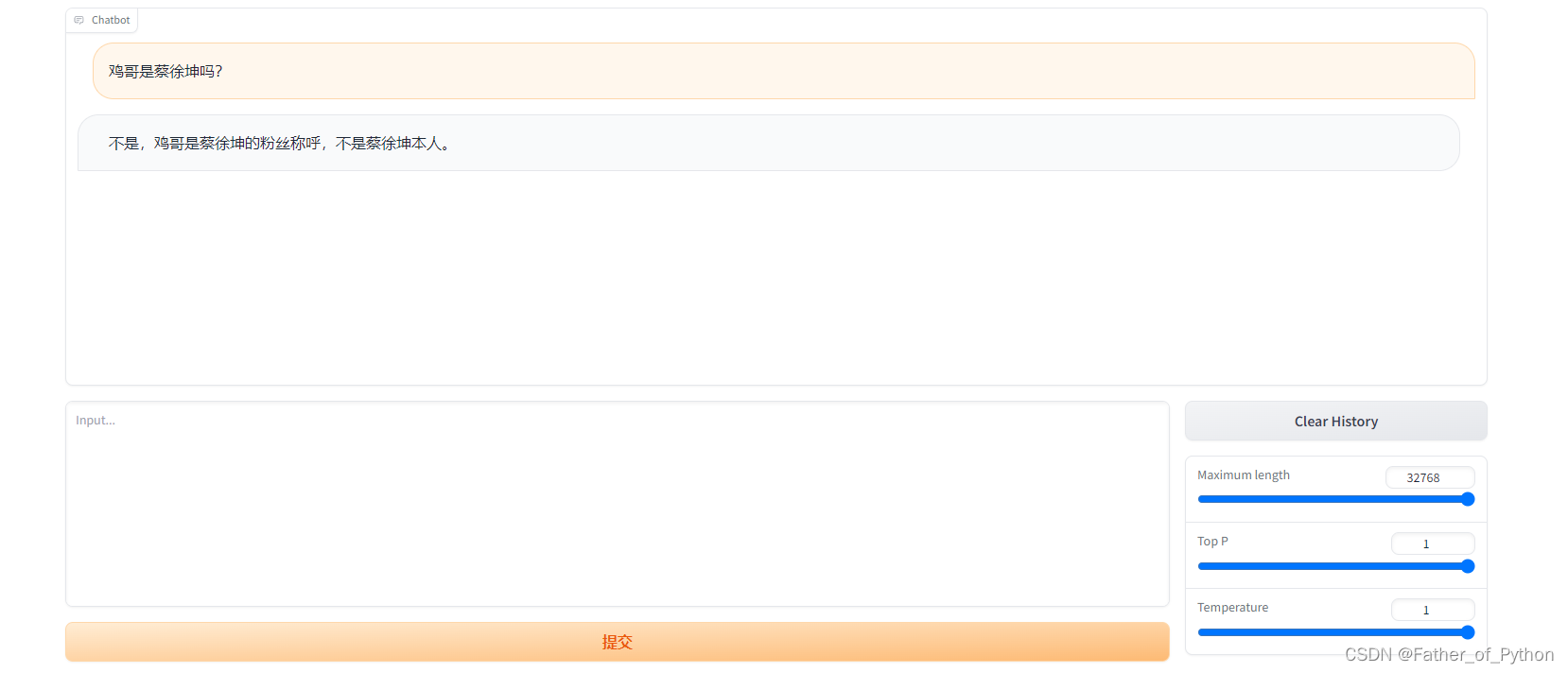

5 Test the web demo

A successful run looks like this:

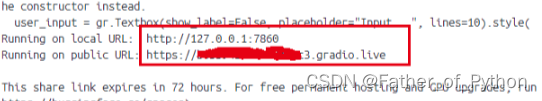
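To confirm the web UI is actually serving requests, a small check can be run inside the container, for example from a second shell opened with `docker exec -it test01 /bin/bash`. This is a sketch; the function name is hypothetical, and the port is an assumption you should take from the address the demo prints at startup.

```shell
#!/bin/sh
# Sketch: print the HTTP status code returned by the demo's web server.
check_web_ui() {
  port="${1:-}"
  if [ -z "$port" ]; then
    echo "usage: check_web_ui PORT" >&2
    return 1
  fi
  # -s silences progress, -o discards the page body, -w prints only the status code.
  # "000" means nothing answered on that port (server not started yet, or wrong port).
  curl -s -o /dev/null -w '%{http_code}\n' "http://127.0.0.1:${port}/"
}
```

For example, `check_web_ui 7860` should print `200` once a Gradio server on that port is up. Gradio's default port is 7860 and Streamlit's is 8501, but the demo scripts may configure their own.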

6 Test composite_demo

cd /usr/src/app/uniform/ChatGLM3/composite_demo

pip install -r requirements.txt

streamlit run main.py

Successful run:
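Inside a container it can help to pass Streamlit's server options explicitly. The sketch below wraps the same steps in a hypothetical `run_composite_demo` function; `--server.address`, `--server.port` and `--server.headless` are standard Streamlit configuration options, while the chosen values are assumptions to adapt.

```shell
#!/bin/sh
# Sketch: install composite_demo's dependencies and start it with explicit server options.
run_composite_demo() {
  # 8501 is Streamlit's default port; pass another port as the first argument if needed.
  port="${1:-8501}"
  cd /usr/src/app/uniform/ChatGLM3/composite_demo || return 1
  # 0.0.0.0 listens on all interfaces; headless mode skips trying to open a browser
  # inside the container.
  pip install -r requirements.txt &&
    streamlit run main.py --server.address 0.0.0.0 --server.port "$port" --server.headless true
}
```

Calling `run_composite_demo 8502`, for instance, would serve the demo on port 8502 instead of the default.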