This article implements a neural network and the backpropagation algorithm without a deep learning framework. It mainly explains the code, which targets Python 3.

Table of contents:

- 1. Algorithm derivation

- 2. Algorithm implementation

- 3. Results

1. Algorithm derivation

The most thorough derivation of neural networks and the backpropagation algorithm that I have seen so far is the article below. Please refer to it for the derivation:

https://www.zybuluo.com/hanbingtao/note/476663
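For quick reference, these are the update rules that the code in section 2 implements, read directly off `calc_output_layer_delta`, `calc_hidden_layer_delta` and `Connection.update_weight`. The notation is chosen here for this summary and is an assumption, not copied from the linked article. $y_i$ is the output of output node $i$ and $t_i$ its target, $a_j$ the output of hidden node $j$, $w_{kj}$ the weight from node $j$ to node $k$, $x_{ji}$ the output of upstream node $i$ feeding node $j$, and $\eta$ the learning rate. All nodes use the sigmoid, whose derivative $\sigma'(z) = \sigma(z)(1 - \sigma(z))$ produces the $y(1-y)$ and $a(1-a)$ factors, and the per-sample error is $E_d = \tfrac{1}{2}\sum_i (t_i - y_i)^2$.

$$
\delta_i = y_i\,(1 - y_i)\,(t_i - y_i) \qquad \text{(output node } i\text{)}
$$

$$
\delta_j = a_j\,(1 - a_j) \sum_{k \in \mathrm{Downstream}(j)} w_{kj}\,\delta_k \qquad \text{(hidden node } j\text{)}
$$

$$
w_{ji} \leftarrow w_{ji} + \eta\,\delta_j\,x_{ji}, \qquad \text{since } \delta_j\,x_{ji} = -\frac{\partial E_d}{\partial w_{ji}}
$$

The weight update therefore adds $\eta\,\delta_j\,x_{ji}$ instead of subtracting a gradient: the minus sign of gradient descent is already folded into $\delta$.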

2. Algorithm implementation

The original complete code (Python 2.7) is on GitHub: https://github.com/hanbt/learn_dl/blob/master/bp.py

This article ports the code to Python 3. The differences from the original are:

- In Python 3, `reduce` is no longer a builtin and must be imported from the `functools` module (`from functools import reduce`);

- `map()` returns a lazy iterator, so wrap it as `list(map(...))` wherever a list is needed.
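Both porting changes can be seen in a few lines. The sketch below uses made-up weights and inputs. It shows `reduce` with an initializer, the same pattern `Node.calc_output` uses for its weighted sum, and the one-shot nature of a Python 3 `map` iterator. That single-use behavior is why a `map` result should be converted with `list()` before it is reused.

```python
from functools import reduce  # reduce is no longer a builtin in Python 3

# Weighted sum with an initializer, as in Node.calc_output:
# ret starts at 0.0 and each (weight, input) pair is added to it in turn.
weights = [0.5, -0.25, 0.125]
inputs = [1.0, 2.0, 4.0]
weighted_sum = reduce(lambda ret, pair: ret + pair[0] * pair[1], zip(weights, inputs), 0.0)
print(weighted_sum)  # 0.5

# map() returns a lazy iterator that can be consumed only once.
lazy = map(lambda v: v * v, [1, 2, 3])
first_pass = list(lazy)   # [1, 4, 9]
second_pass = list(lazy)  # [] because the iterator is already exhausted
print(first_pass, second_pass)
```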

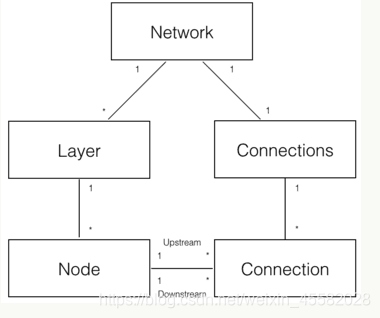

The basic model is shown in the figure below:

As shown in the figure above, five domain objects can be decomposed to realize the neural network:

- Network: the neural network object, which provides the API. It consists of several Layer objects and a Connections object.

- Layer: a layer object, composed of multiple nodes.

- Node: computes and records the node's own information (output value, error term, etc.) as well as the upstream and downstream connections of this node.

- Connection: each connection object records the weight of one connection.

- Connections: simply a collection of Connection objects, providing some collection operations.
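To make the wiring concrete: every Layer also receives one extra ConstNode (the bias node). Each upstream node, the bias node included, is connected to every non-bias node of the next layer. The hypothetical helper `connection_count` below is not part of the original code. It simply counts how many Connection objects `Network(layer_sizes)` should create under that rule.

```python
def connection_count(layer_sizes):
    """Hypothetical helper: number of Connection objects Network(layer_sizes) builds.

    Each layer gets one extra bias node; every upstream node (bias included)
    connects to every non-bias node of the next layer.
    """
    return sum((upstream + 1) * downstream
               for upstream, downstream in zip(layer_sizes, layer_sizes[1:]))


print(connection_count([8, 3, 8]))  # (8 + 1) * 3 + (3 + 1) * 8 = 59
print(connection_count([2, 2, 2]))  # (2 + 1) * 2 + (2 + 1) * 2 = 12
```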

2.1 Preparations

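Import the modules and define the activation function:

```python
import random
from functools import reduce

# Import only exp: `from numpy import *` also binds numpy.random to the name
# `random`, shadowing the standard-library module imported above.
from numpy import exp


def sigmoid(inX):
    return 1.0 / (1 + exp(-inX))
```

2.2 Building a neural network

Define the nodes, the layers, and the connections between layers.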

1). Node definition:

```python
class Node(object):
    # Instantiated by Layer via self.nodes.append(Node(layer_index, i))
    def __init__(self, layer_index, node_index):
        '''Construct a node object.
        layer_index: index of the layer the node belongs to
        node_index: index of the node within its layer
        '''
        self.layer_index = layer_index
        self.node_index = node_index
        self.downstream = []
        self.upstream = []
        self.output = 0
        self.delta = 0

    def set_output(self, output):
        '''Set the node's output value. Used for nodes in the input layer.'''
        self.output = output

    def append_downstream_connection(self, conn):
        '''Add a connection to a downstream node.'''
        self.downstream.append(conn)

    def append_upstream_connection(self, conn):
        '''Add a connection to an upstream node.'''
        self.upstream.append(conn)

    def calc_output(self):
        '''Compute the node's output.

        reduce() has the signature reduce(function, iterable[, initializer]).
        It applies the two-argument function cumulatively: the result of one call
        is combined with the next element, until a single value remains.
        Because an initializer of 0 is passed here, ret starts at 0 and conn is the
        first upstream connection; each step adds that connection's weighted input
        to ret.
        '''
        output = reduce(lambda ret, conn: ret + conn.upstream_node.output * conn.weight,
                        self.upstream, 0)
        self.output = sigmoid(output)

    def calc_hidden_layer_delta(self):
        '''Compute the error term of a hidden-layer node.'''
        downstream_delta = reduce(
            lambda ret, conn: ret + conn.downstream_node.delta * conn.weight,
            self.downstream, 0.0)
        self.delta = self.output * (1 - self.output) * downstream_delta

    def calc_output_layer_delta(self, label):
        '''Compute the error term of an output-layer node.'''
        self.delta = self.output * (1 - self.output) * (label - self.output)

    def __str__(self):  # printable summary of the node
        node_str = '%u-%u: output: %f delta: %f' % (self.layer_index, self.node_index, self.output, self.delta)
        downstream_str = reduce(lambda ret, conn: ret + '\n\t' + str(conn), self.downstream, '')
        upstream_str = reduce(lambda ret, conn: ret + '\n\t' + str(conn), self.upstream, '')
        return node_str + '\n\tdownstream:' + downstream_str + '\n\tupstream:' + upstream_str


class ConstNode(object):
    '''
    ConstNode: a node whose output is always 1 (needed for the bias terms).
    '''
    def __init__(self, layer_index, node_index):
        self.layer_index = layer_index
        self.node_index = node_index
        self.downstream = []
        self.output = 1

    def append_downstream_connection(self, conn):
        self.downstream.append(conn)

    def calc_hidden_layer_delta(self):
        downstream_delta = reduce(
            lambda ret, conn: ret + conn.downstream_node.delta * conn.weight,
            self.downstream, 0.0)
        self.delta = self.output * (1 - self.output) * downstream_delta

    def __str__(self):
        node_str = '%u-%u: output: 1' % (self.layer_index, self.node_index)
        downstream_str = reduce(lambda ret, conn: ret + '\n\t' + str(conn), self.downstream, '')
        return node_str + '\n\tdownstream:' + downstream_str
```

2). Neural layer object definition:

```python
class Layer(object):
    # Instantiated by Network via self.layers.append(Layer(i, layers[i]))
    def __init__(self, layer_index, node_count):
        '''Initialize a layer.
        layer_index: index of the layer
        node_count: number of nodes in the layer (not counting the bias node)
        '''
        self.layer_index = layer_index
        self.nodes = []
        for i in range(node_count):
            self.nodes.append(Node(layer_index, i))  # regular nodes
        # One extra ConstNode whose output is always 1, needed for the bias terms
        self.nodes.append(ConstNode(layer_index, node_count))

    def set_output(self, data):
        for i in range(len(data)):
            self.nodes[i].set_output(data[i])

    def calc_output(self):
        for node in self.nodes[:-1]:  # skip the bias node, whose output is fixed at 1
            node.calc_output()

    def dump(self):
        for node in self.nodes:
            print(node)
```
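Because the bias node of the upstream layer always outputs 1, the per-node loop in `Node.calc_output` is equivalent to a single matrix-vector product on the input with a 1 appended. The bias is then just the weight in the last column. The sketch below is an illustration under that assumption and is not part of the original code. The function names and the weight-matrix layout (one row per downstream node, one column per upstream node, bias last) are hypothetical. It checks that both formulations give the same layer output.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def layer_forward_loop(weights, inputs):
    """Node by node, mirroring Node.calc_output; the last column of weights
    belongs to the constant-1 bias node of the upstream layer."""
    upstream = list(inputs) + [1.0]  # bias node output appended last
    outputs = []
    for row in weights:
        total = sum(w * u for w, u in zip(row, upstream))
        outputs.append(sigmoid(total))
    return np.array(outputs)


def layer_forward_matrix(weights, inputs):
    """The same computation as one matrix-vector product."""
    augmented = np.append(np.asarray(inputs, dtype=float), 1.0)
    return sigmoid(np.asarray(weights) @ augmented)


rng = np.random.default_rng(0)
W = rng.uniform(-0.1, 0.1, size=(3, 9))  # 3 downstream nodes; 8 inputs + 1 bias
x = rng.uniform(0.0, 1.0, size=8)
print(layer_forward_loop(W, x))
print(layer_forward_matrix(W, x))
```

The object-per-node design of the article makes every step explicit. The matrix form trades that visibility for far fewer Python-level operations.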

3). Definition of the connections between layers:

```python
class Connection(object):
    '''
    Initialize a connection; the weight starts as a small random number.
    upstream_node: the upstream node of the connection
    downstream_node: the downstream node of the connection
    A Connection object provides methods to compute its own gradient and update its weight.
    '''
    def __init__(self, upstream_node, downstream_node):
        self.upstream_node = upstream_node
        self.downstream_node = downstream_node
        self.weight = random.uniform(-0.1, 0.1)
        self.gradient = 0.0

    def calc_gradient(self):
        '''gradient = downstream node's error term * upstream node's output'''
        self.gradient = self.downstream_node.delta * self.upstream_node.output

    def update_weight(self, rate):
        '''new weight = old weight + learning rate * gradient'''
        self.calc_gradient()
        self.weight += rate * self.gradient

    def get_gradient(self):
        return self.gradient

    def __str__(self):
        return '(%u-%u) -> (%u-%u) = %f' % (
            self.upstream_node.layer_index,
            self.upstream_node.node_index,
            self.downstream_node.layer_index,
            self.downstream_node.node_index,
            self.weight)


class Connections(object):
    def __init__(self):
        self.connections = []

    def add_connection(self, connection):
        self.connections.append(connection)

    def dump(self):
        for conn in self.connections:
            print(conn)
```

4). Network object, providing the API:

```python
class Network(object):
    def __init__(self, layers):  # e.g. net = Network([8, 3, 8]) -> layers = [8, 3, 8]
        self.connections = Connections()  # collection of all connections, initially empty
        self.layers = []
        layer_count = len(layers)  # number of layers: 3 in the example
        for i in range(layer_count):
            self.layers.append(Layer(i, layers[i]))  # build each layer with a Layer object
        for layer in range(layer_count - 1):
            # Connect every node of this layer (bias node included) to every node of the
            # next layer except its last one, which is the bias node and takes no input.
            connections_1 = [Connection(upstream_node, downstream_node)
                             for upstream_node in self.layers[layer].nodes
                             for downstream_node in self.layers[layer + 1].nodes[:-1]]
            # Unlike the original source, which names this list `connections`, it is
            # called connections_1 here; it is a list of Connection objects.
            for conn in connections_1:
                # To inspect each connection, uncomment:
                # print(layer, conn, conn.upstream_node, conn.downstream_node)
                self.connections.add_connection(conn)
                conn.downstream_node.append_upstream_connection(conn)
                conn.upstream_node.append_downstream_connection(conn)

    def train(self, labels, data_set, rate, epoch):
        for i in range(epoch):
            for d in range(len(data_set)):
                self.train_one_sample(labels[d], data_set[d], rate)
                # print('sample %d training finished' % d)

    def train_one_sample(self, label, sample, rate):
        self.predict(sample)
        self.calc_delta(label)
        self.update_weight(rate)

    def calc_delta(self, label):
        '''
        Compute the error terms by backpropagation: first the output layer, then the
        hidden layers from back to front. This example has only one hidden layer; the
        loop also visits the input layer, whose error terms are never used.
        '''
        output_nodes = self.layers[-1].nodes
        for i in range(len(label)):
            output_nodes[i].calc_output_layer_delta(label[i])
        for layer in self.layers[-2::-1]:
            for node in layer.nodes:
                node.calc_hidden_layer_delta()

    def update_weight(self, rate):
        '''Update the weights layer by layer.'''
        for layer in self.layers[:-1]:
            for node in layer.nodes:
                for conn in node.downstream:
                    conn.update_weight(rate)

    def calc_gradient(self):
        '''Compute the gradients layer by layer.'''
        for layer in self.layers[:-1]:
            for node in layer.nodes:
                for conn in node.downstream:
                    conn.calc_gradient()

    def get_gradient(self, label, sample):
        self.predict(sample)
        self.calc_delta(label)
        self.calc_gradient()

    def predict(self, sample):
        '''Feed the sample into the input layer and compute the output.'''
        self.layers[0].set_output(sample)
        for i in range(1, len(self.layers)):
            self.layers[i].calc_output()
        # list() because map() is a lazy, single-use iterator in Python 3
        return list(map(lambda node: node.output, self.layers[-1].nodes[:-1]))

    def dump(self):
        for layer in self.layers:
            layer.dump()
```
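As a cross-check of the Network methods above, the same predict → calc_delta → update_weight step can be written with NumPy matrices for a network with one hidden layer. This is a minimal sketch, not the author's code. The weight layout (bias weight in the last column, standing in for the ConstNode connections) and the names `forward`, `train_one_sample` and `error` are assumptions made here. It applies the same sigmoid, error-term and update formulas to a single encoded sample.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def forward(W1, W2, x):
    """W1: (n_hidden, n_in + 1), W2: (n_out, n_hidden + 1); last column = bias weights."""
    h = sigmoid(W1 @ np.append(x, 1.0))
    y = sigmoid(W2 @ np.append(h, 1.0))
    return h, y


def train_one_sample(W1, W2, x, t, rate):
    """One predict -> calc_delta -> update_weight step; updates W1 and W2 in place."""
    h, y = forward(W1, W2, x)
    delta_out = y * (1 - y) * (t - y)                     # output-layer error terms
    delta_hid = h * (1 - h) * (W2[:, :-1].T @ delta_out)  # hidden error terms (bias column excluded)
    # Both deltas are computed before any weight changes, as in the original code.
    W2 += rate * np.outer(delta_out, np.append(h, 1.0))   # w += rate * delta * upstream output
    W1 += rate * np.outer(delta_hid, np.append(x, 1.0))


def error(W1, W2, x, t):
    """Half the sum of squared differences, as in mean_square_error."""
    _, y = forward(W1, W2, x)
    return 0.5 * np.sum((t - y) ** 2)


rng = np.random.default_rng(0)
W1 = rng.uniform(-0.1, 0.1, size=(3, 9))  # 8 inputs + bias -> 3 hidden nodes
W2 = rng.uniform(-0.1, 0.1, size=(8, 4))  # 3 hidden + bias -> 8 outputs
x = np.array([0.9, 0.1, 0.9, 0.1, 0.1, 0.1, 0.1, 0.1])  # the number 5, encoded bitwise
before = error(W1, W2, x, x)
for _ in range(200):
    train_one_sample(W1, W2, x, x, rate=0.3)  # label == input, as in train_data_set
after = error(W1, W2, x, x)
print(before, after)
```

Computing both error terms before touching either weight matrix mirrors the original code, where `calc_delta` finishes before `update_weight` begins.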

5). Generate data set:
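The data set is an 8-3-8 autoencoding task. Each number is encoded bitwise, and `train_data_set` appends the same vector as both sample and label, so the network must reproduce its input through a 3-node hidden layer. The hypothetical helpers `encode` and `decode` below are compact equivalents of `Normalizer.norm` and `Normalizer.denorm`, written with bit shifts instead of the mask list. They show what the encoding looks like. The 0.9/0.1 targets are presumably chosen because a sigmoid output can never reach exactly 1 or 0.

```python
def encode(number):
    """Hypothetical equivalent of Normalizer.norm: bit b of number -> 0.9 if set, else 0.1."""
    return [0.9 if (number >> bit) & 1 else 0.1 for bit in range(8)]


def decode(vec):
    """Hypothetical equivalent of Normalizer.denorm: threshold each output at 0.5."""
    return sum(1 << bit for bit, value in enumerate(vec) if value > 0.5)


print(encode(5))  # [0.9, 0.1, 0.9, 0.1, 0.1, 0.1, 0.1, 0.1]
print(decode([0.8, 0.3, 0.6, 0.1, 0.1, 0.1, 0.1, 0.7]))  # 1 + 4 + 128 = 133
```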

```python
class Normalizer(object):
    '''
    Used to build the data set.
    '''
    def __init__(self):
        self.mask = [0x1, 0x2, 0x4, 0x8, 0x10, 0x20, 0x40, 0x80]

    def norm(self, number):
        '''Encode a number for the data set: 0.9 for each set bit, 0.1 for each unset bit.'''
        return list(map(lambda m: 0.9 if number & m else 0.1, self.mask))

    def denorm(self, vec):
        '''Decode a network output vector back into a number (used for prediction).'''
        binary = list(map(lambda i: 1 if i > 0.5 else 0, vec))
        for i in range(len(self.mask)):
            binary[i] = binary[i] * self.mask[i]
        return reduce(lambda x, y: x + y, binary)


def train_data_set():
    normalizer = Normalizer()
    data_set = []
    labels = []
    for i in range(0, 256, 8):  # 32 random samples
        n = normalizer.norm(int(random.uniform(0, 256)))
        data_set.append(n)
        labels.append(n)  # the label equals the input: the network learns to reproduce it
    return labels, data_set
```

6). Calculate the mean square error:

```python
def mean_square_error(vec1, vec2):
    return 0.5 * reduce(lambda a, b: a + b,
                        map(lambda v: (v[0] - v[1]) * (v[0] - v[1]),
                            zip(vec1, vec2)))
```
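A small worked example of this function: for label [0.9, 0.1, 0.9] and output [0.8, 0.2, 0.6], the differences are 0.1, −0.1 and 0.3. The result is therefore 0.5 × (0.01 + 0.01 + 0.09) = 0.055. Despite its name, `mean_square_error` returns half the sum of squared differences, not a mean. The ½ cancels the 2 from differentiating the square, which keeps the error-term formulas free of constant factors. The sketch repeats the original function for comparison and adds a hypothetical `half_squared_error` written with the built-in `sum`.

```python
from functools import reduce


def mean_square_error(vec1, vec2):
    # Original formulation: half the sum of squared differences via reduce.
    return 0.5 * reduce(lambda a, b: a + b,
                        map(lambda v: (v[0] - v[1]) * (v[0] - v[1]), zip(vec1, vec2)))


def half_squared_error(vec1, vec2):
    # Hypothetical equivalent using sum(); also returns 0 for empty input.
    return 0.5 * sum((a - b) ** 2 for a, b in zip(vec1, vec2))


label = [0.9, 0.1, 0.9]
output = [0.8, 0.2, 0.6]
print(mean_square_error(label, output), half_squared_error(label, output))  # both about 0.055
```

One practical difference: `reduce()` without an initializer raises `TypeError` on an empty iterable, while `sum()` returns 0.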

7). Gradient check:

For an explanation of gradient checking, see the blog post: https://www.zybuluo.com/hanbingtao/note/476663
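The check relies on the central-difference approximation of the derivative of the sample error $E_d$ with respect to a single weight $w$:

$$
\frac{\partial E_d}{\partial w} \approx \frac{E_d(w + \epsilon) - E_d(w - \epsilon)}{2\epsilon}
$$

`Connection.gradient` stores $\delta_j x_{ji}$, which equals $-\partial E_d / \partial w_{ji}$; this is why `update_weight` adds it instead of subtracting it. The code below therefore computes `expected_gradient = (error2 - error1) / (2 * epsilon)`, with `error1` $= E_d(w+\epsilon)$ and `error2` $= E_d(w-\epsilon)$. That is the negated central difference, so the two printed values should agree closely when backpropagation is implemented correctly.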

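```python
def gradient_check(network, sample_feature, sample_label):
    '''
    Gradient check.
    network: the neural network object
    sample_feature: the features of a sample
    sample_label: the label of the sample
    '''
    # Network error for one sample
    network_error = lambda vec1, vec2: \
        0.5 * reduce(lambda a, b: a + b,
                     map(lambda v: (v[0] - v[1]) * (v[0] - v[1]),
                         zip(vec1, vec2)))

    # Compute the gradient of every connection for the current sample.
    # get_gradient takes (label, sample), so the label goes first.
    network.get_gradient(sample_label, sample_feature)

    # Check the gradient of every weight
    for conn in network.connections.connections:
        # Gradient of this connection as computed by backpropagation
        actual_gradient = conn.get_gradient()

        # Add a small value to the weight and compute the network error
        epsilon = 0.0001
        conn.weight += epsilon
        error1 = network_error(network.predict(sample_feature), sample_label)

        # Subtract a small value and compute the network error again
        conn.weight -= 2 * epsilon  # epsilon was already added once, so subtract it twice
        error2 = network_error(network.predict(sample_feature), sample_label)

        # Restore the original weight before checking the next connection
        conn.weight += epsilon

        # Expected gradient according to formula 6
        expected_gradient = (error2 - error1) / (2 * epsilon)

        print('expected gradient: \t%f\nactual gradient: \t%f' % (
            expected_gradient, actual_gradient))
```

The complete code: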

# -*- coding: UTF-8 -*-

import random

from numpy import *

from functools import reduce

def sigmoid(inX):

return 1.0 / (1 + exp(-inX))

class Node(object):

def __init__(self, layer_index, node_index):

self.layer_index = layer_index

self.node_index = node_index

self.downstream = []

self.upstream = []

self.output = 0

self.delta = 0

def set_output(self, output):

self.output = output

def append_downstream_connection(self, conn):

self.downstream.append(conn)

def append_upstream_connection(self, conn):

self.upstream.append(conn)

def calc_output(self):

output = reduce(lambda ret, conn: ret + conn.upstream_node.output * conn.weight, self.upstream, 0)

self.output = sigmoid(output)

def calc_hidden_layer_delta(self):

downstream_delta = reduce(

lambda ret, conn: ret + conn.downstream_node.delta * conn.weight,

self.downstream, 0.0)

self.delta = self.output * (1 - self.output) * downstream_delta

def calc_output_layer_delta(self, label):

self.delta = self.output * (1 - self.output) * (label - self.output)

def __str__(self):

node_str = '%u-%u: output: %f delta: %f' % (self.layer_index, self.node_index, self.output, self.delta)

downstream_str = reduce(lambda ret, conn: ret + '\n\t' + str(conn), self.downstream, '')

upstream_str = reduce(lambda ret, conn: ret + '\n\t' + str(conn), self.upstream, '')

return node_str + '\n\tdownstream:' + downstream_str + '\n\tupstream:' + upstream_str

class ConstNode(object):

def __init__(self, layer_index, node_index):

self.layer_index = layer_index

self.node_index = node_index

self.downstream = []

self.output = 1

def append_downstream_connection(self, conn):

self.downstream.append(conn)

def calc_hidden_layer_delta(self):

downstream_delta = reduce(

lambda ret, conn: ret + conn.downstream_node.delta * conn.weight,

self.downstream, 0.0)

self.delta = self.output * (1 - self.output) * downstream_delta

def __str__(self):

node_str = '%u-%u: output: 1' % (self.layer_index, self.node_index)

downstream_str = reduce(lambda ret, conn: ret + '\n\t' + str(conn), self.downstream, '')

return node_str + '\n\tdownstream:' + downstream_str

class Layer(object):

def __init__(self, layer_index, node_count):

self.layer_index = layer_index

self.nodes = []

for i in range(node_count):

self.nodes.append(Node(layer_index, i))

self.nodes.append(ConstNode(layer_index, node_count))

def set_output(self, data):

for i in range(len(data)):

self.nodes[i].set_output(data[i])

def calc_output(self):

for node in self.nodes[:-1]:

node.calc_output()

def dump(self):

for node in self.nodes:

print(node)

class Connection(object):

def __init__(self, upstream_node, downstream_node):

self.upstream_node = upstream_node

self.downstream_node = downstream_node

self.weight = random.uniform(-0.1, 0.1)

self.gradient = 0.0

def calc_gradient(self):

self.gradient = self.downstream_node.delta * self.upstream_node.output

def update_weight(self, rate):

self.calc_gradient()

self.weight += rate * self.gradient

def get_gradient(self):

return self.gradient

def __str__(self):

return '(%u-%u) -> (%u-%u) = %f' % (

self.upstream_node.layer_index,

self.upstream_node.node_index,

self.downstream_node.layer_index,

self.downstream_node.node_index,

self.weight)

class Connections(object):

def __init__(self):

self.connections = []

def add_connection(self, connection):

self.connections.append(connection)

def dump(self):

for conn in self.connections:

print(conn)

class Network(object):

def __init__(self, layers):

self.connections = Connections()

self.layers = []

layer_count = len(layers)

node_count = 0

for i in range(layer_count):

self.layers.append(Layer(i, layers[i]))

for layer in range(layer_count - 1):

connections = [Connection(upstream_node, downstream_node)

for upstream_node in self.layers[layer].nodes

for downstream_node in self.layers[layer + 1].nodes[:-1]]

for conn in connections:

self.connections.add_connection(conn)

conn.downstream_node.append_upstream_connection(conn)

conn.upstream_node.append_downstream_connection(conn)

def train(self, labels, data_set, rate, epoch):

for i in range(epoch):

for d in range(len(data_set)):

self.train_one_sample(labels[d], data_set[d], rate)

# print 'sample %d training finished' % d

def train_one_sample(self, label, sample, rate):

self.predict(sample)

self.calc_delta(label)

self.update_weight(rate)

def calc_delta(self, label):

output_nodes = self.layers[-1].nodes

for i in range(len(label)):

output_nodes[i].calc_output_layer_delta(label[i])

for layer in self.layers[-2::-1]:

for node in layer.nodes:

node.calc_hidden_layer_delta()

def update_weight(self, rate):

for layer in self.layers[:-1]:

for node in layer.nodes:

for conn in node.downstream:

conn.update_weight(rate)

def calc_gradient(self):

for layer in self.layers[:-1]:

for node in layer.nodes:

for conn in node.downstream:

conn.calc_gradient()

def get_gradient(self, label, sample):

self.predict(sample)

self.calc_delta(label)

self.calc_gradient()

def predict(self, sample):

#print(sample)

self.layers[0].set_output(sample)

for i in range(1, len(self.layers)):

self.layers[i].calc_output()

return map(lambda node: node.output, self.layers[-1].nodes[:-1])

def dump(self):

for layer in self.layers:

layer.dump()

class Normalizer(object):

'''

用于建立数据集

'''

def __init__(self):

self.mask = [0x1, 0x2, 0x4, 0x8, 0x10, 0x20, 0x40, 0x80]

def norm(self, number):

'''

数据集生成——编码

'''

return list(map(lambda m: 0.9 if number & m else 0.1, self.mask))

def denorm(self, vec):

'''

数据集解码,用于预测

'''

binary = list(map(lambda i: 1 if i > 0.5 else 0, vec))

for i in range(len(self.mask)):

binary[i] = binary[i] * self.mask[i]

return reduce(lambda x, y: x + y, binary)

def mean_square_error(vec1, vec2):

return 0.5 * reduce(lambda a, b: a + b,

map(lambda v: (v[0] - v[1]) * (v[0] - v[1]),

zip(vec1, vec2)

)

)

def gradient_check(network, sample_feature, sample_label):

'''

梯度检查

network: 神经网络对象

sample_feature: 样本的特征

sample_label: 样本的标签

'''

# 计算网络误差

network_error = lambda vec1, vec2: \

0.5 * reduce(lambda a, b: a + b,

map(lambda v: (v[0] - v[1]) * (v[0] - v[1]),

zip(vec1, vec2)))

# 获取网络在当前样本下每个连接的梯度

network.get_gradient(sample_feature, sample_label)

# 对每个权重做梯度检查

for conn in network.connections.connections:

# 获取指定连接的梯度

actual_gradient = conn.get_gradient()

# 增加一个很小的值,计算网络的误差

epsilon = 0.0001

conn.we’ight += epsilon

error1 = network_error(network.predict(sample_feature), sample_label)

# 减去一个很小的值,计算网络的误差

conn.weight -= 2 * epsilon # 刚才加过了一次,因此这里需要减去2倍

error2 = network_error(network.predict(sample_feature), sample_label)

# 根据式6计算期望的梯度值

expected_gradient = (error2 - error1) / (2 * epsilon)

# 打印

print('expected gradient: \t%f\nactual gradient: \t%f' % (

expected_gradient, actual_gradient))

def train_data_set(

'''

建立数据集

'''

normalizer = Normalizer()#实例化Normaliazer类

data_set = []

labels = []

for i in range(0, 256, 8):

n = normalizer.norm(int(random.uniform(0, 256)))

data_set.append(n)

labels.append(n)

return labels, data_set

def train(network):

labels, data_set = train_data_set()#获取数据集

network.train(labels, data_set, 0.3, 50)#调用Network对象train方法进行训练

def test(network, data):

normalizer = Normalizer()

norm_data = normalizer.norm(data)

predict_data = network.predict(norm_data)

print('\ttestdata(%u)\tpredict(%u)' % (

data, normalizer.denorm(predict_data)))

def correct_ratio(network):

normalizer = Normalizer()

correct = 0.0

for i in range(256):

if normalizer.denorm(network.predict(normalizer.norm(i))) == i:

correct += 1.0

print('correct_ratio: %.2f%%' % (correct / 256 * 100))

def gradient_check_test():

net = Network([2, 2, 2])

sample_feature = [0.9, 0.1]

sample_label = [0.9, 0.1]

gradient_check(net, sample_feature, sample_label)

if __name__ == '__main__':

net = Network([8, 3, 8])#共三层神经网络,各层节点数分别为8,3,8

train(net)#训练神经网络

net.dump()

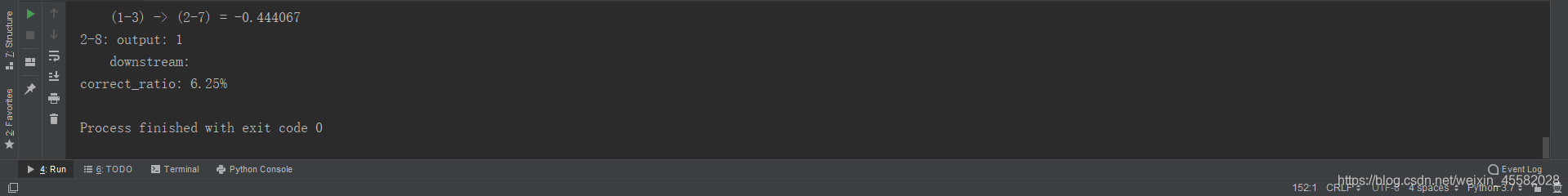

correct_ratio(net)#测试3. Running results:

The results are not satisfactory. I wrote this code while learning, and I may optimize it later.

Discussion is welcome; if anything here is wrong, please point it out.