This post introduces an ICCV 2023 paper on knowledge distillation, "From Knowledge Distillation to Self-Knowledge Distillation: A Unified Approach with Normalized Loss and Customized Soft Labels". The paper improves how the logit distillation loss is calculated and, building on the improved formula, proposes customized soft labels for self-distillation.

Article link: https://arxiv.org/abs/2303.13005

Code link: https://github.com/yzd-v/cls_KD

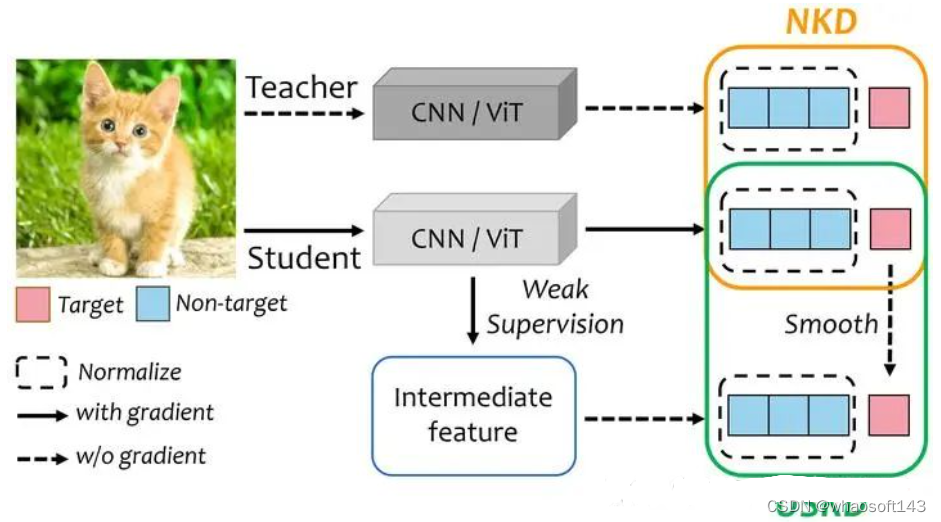

Vanilla knowledge distillation uses the teacher's logits as soft labels and computes the distillation loss between them and the student's output. Self-distillation has no teacher model, so it obtains soft labels by designing extra branches or special distributions, and then computes the distillation loss against the student's output in the same way. The two differ only in how the soft labels are obtained.
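The point that KD and self-distillation share one loss and differ only in where the soft labels come from can be made concrete with a small sketch. It is plain Python for a single sample and assumes the common temperature-scaled KL formulation of logit distillation (Hinton et al.); `distillation_loss` and the label-smoothed vector standing in for a self-distillation target are our own illustrative choices, not code from the paper or the cls_KD repository.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable softmax with a temperature."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: Sequence[float],
                      soft_labels: Sequence[float],
                      temperature: float = 1.0) -> float:
    """KL(soft_labels || student distribution) for one sample, scaled by T^2.

    The loss does not care where soft_labels came from: a teacher (KD) or a
    teacher-free construction (self-distillation).
    """
    p_s = softmax(student_logits, temperature)
    kl = sum(q * (math.log(q) - math.log(p))
             for q, p in zip(soft_labels, p_s) if q > 0)
    return temperature ** 2 * kl


# Knowledge distillation: soft labels are the teacher's softened outputs.
teacher = [3.0, 1.0, 0.2]
student = [2.0, 1.5, 0.1]
kd = distillation_loss(student, softmax(teacher, 4.0), temperature=4.0)

# Self-distillation: soft labels come from somewhere else. A label-smoothed
# one-hot vector is used here only as a stand-in "special distribution".
smoothed = [0.9, 0.05, 0.05]
sd = distillation_loss(student, smoothed)
```

Only the `soft_labels` argument changes between the two settings, mirroring how the paragraph above separates them.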

This paper aims to 1) improve how the distillation loss is calculated, so that the student can make better use of soft labels, and 2) propose a general, efficient, and simple method for obtaining better soft labels, improving both the performance and the generality of self-distillation. For these two goals, we propose Normalized KD (NKD) and Universal Self-Knowledge Distillation (USKD), respectively.

Method and details

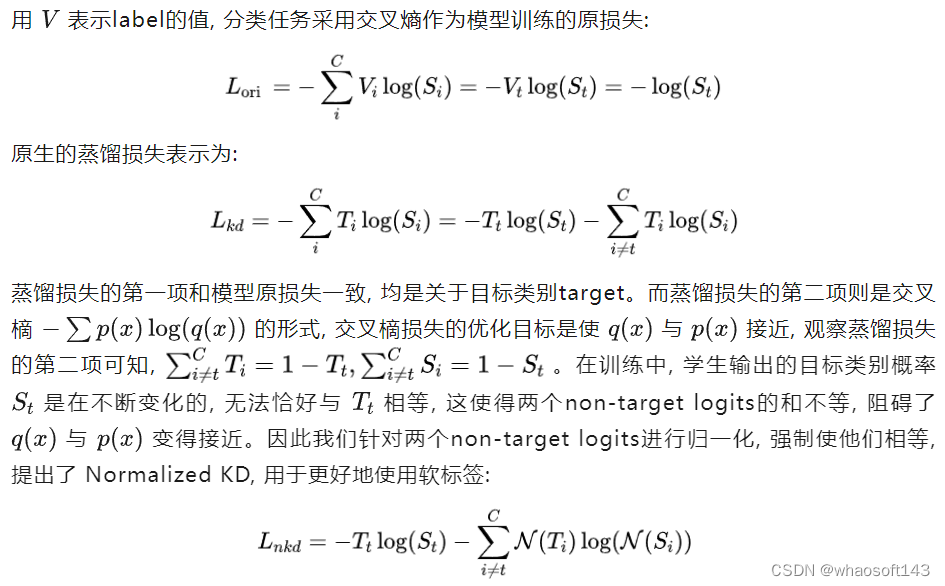
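Both goals start from the loss itself. As a worked derivation (our own notation: $C$ classes, target class $t$, teacher probabilities $T_i$, student probabilities $S_i$), the soft-label cross-entropy, which equals the KL-based KD loss up to the teacher's entropy (a constant for the student), splits into a target part and a non-target part:

$$
\mathcal{L}_{\text{KD}} = -\sum_{i=1}^{C} T_i \log S_i
= \underbrace{-T_t \log S_t}_{\text{target}}
\;\underbrace{-\sum_{i \neq t} T_i \log S_i}_{\text{non-target}}
$$

With $\hat{T}_i = T_i / (1 - T_t)$ and $\hat{S}_i = S_i / (1 - S_t)$ for $i \neq t$ (each sums to 1 over the non-target classes), the non-target part expands exactly to

$$
-\sum_{i \neq t} T_i \log S_i
= -(1 - T_t)\log(1 - S_t) \;-\; (1 - T_t)\sum_{i \neq t} \hat{T}_i \log \hat{S}_i
$$

Written this way, the non-target part still depends on the student's target probability through $\log(1 - S_t)$, and the cross-entropy between the normalized non-target distributions is scaled by $1 - T_t$, so it fades when the teacher is confident. Our reading is that NKD addresses this by working with the normalized distributions $\hat{T}$ and $\hat{S}$ directly; the exact loss and its weights are given in the paper.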

1) NKD

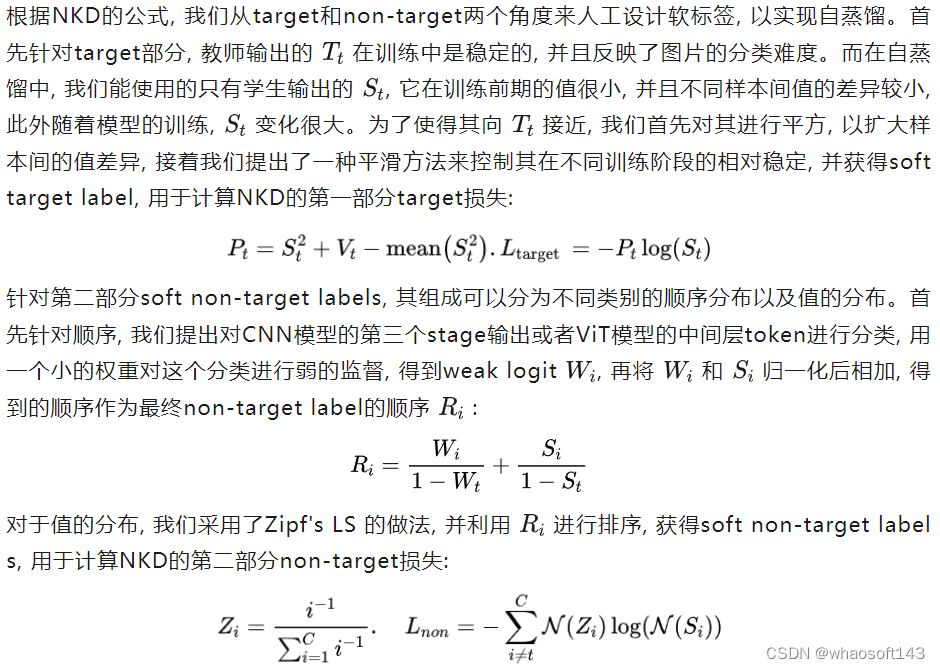
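A minimal sketch of a normalized KD loss follows, in plain Python for one sample. It assumes the form $-T_t \log S_t - \gamma \lambda^2 \sum_{i \neq t} \hat{T}_i \log \hat{S}_i$, where $\hat{T}$ and $\hat{S}$ are the non-target distributions renormalized to sum to 1 and computed at temperature $\lambda$. This is our reading of NKD, not the authors' implementation (see the cls_KD repository for that); the names `nkd_loss` and `normalized_non_target` and the defaults `temperature=1.0`, `gamma=1.5` are illustrative assumptions.

```python
import math
from typing import List, Sequence


def log_softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable log-softmax with a temperature."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    log_sum = m + math.log(sum(math.exp(z - m) for z in scaled))
    return [z - log_sum for z in scaled]


def normalized_non_target(logits: Sequence[float], target: int,
                          temperature: float = 1.0) -> List[float]:
    """Non-target distribution rescaled to sum to 1.

    Dropping the target logit and taking a softmax over the rest is the same as
    dividing each non-target probability by (1 - p_target).
    """
    rest = [z for i, z in enumerate(logits) if i != target]
    return [math.exp(v) for v in log_softmax(rest, temperature)]


def nkd_loss(student_logits: Sequence[float], teacher_logits: Sequence[float],
             target: int, temperature: float = 1.0, gamma: float = 1.5) -> float:
    """Sketch of a normalized KD loss for one sample (assumed form, see text)."""
    # Target term: -T_t * log S_t, weighted by the teacher's target probability.
    t_t = math.exp(log_softmax(teacher_logits)[target])
    log_s_t = log_softmax(student_logits)[target]
    loss_target = -t_t * log_s_t

    # Non-target term: cross-entropy between the normalized non-target
    # distributions; it no longer depends on the student's target probability.
    t_hat = normalized_non_target(teacher_logits, target, temperature)
    rest_s = [z for i, z in enumerate(student_logits) if i != target]
    log_s_hat = log_softmax(rest_s, temperature)
    loss_non_target = -sum(t * ls for t, ls in zip(t_hat, log_s_hat))

    return loss_target + gamma * temperature ** 2 * loss_non_target


student = [2.0, 1.0, 0.5, -1.0]
teacher = [3.0, 0.5, 0.8, -0.5]
loss = nkd_loss(student, teacher, target=0)
```

The helper relies on the identity in its docstring: a softmax over the non-target logits equals $S_i / (1 - S_t)$, so no explicit division is needed. Because the non-target term never sees the target logit, raising the student's target logit changes only the first term.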

2) USKD
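The post does not spell out how USKD builds its customized soft labels; that construction is in the paper and the cls_KD code. Purely to illustrate the pipeline described at the start (a teacher-free soft label, split into a target value and a normalized non-target distribution, fed to the same kind of loss), here is a sketch with a made-up label generator: the target value is the student's own target probability, and the non-target mass decays as 1/rank over the student's own ranking. `hypothetical_soft_label`, `self_distillation_loss`, and both design choices are hypothetical placeholders, not USKD's formulas.

```python
import math
from typing import List, Sequence, Tuple


def log_softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable log-softmax."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_sum for z in logits]


def hypothetical_soft_label(student_logits: Sequence[float], target: int,
                            power: float = 1.0) -> Tuple[float, List[float]]:
    """HYPOTHETICAL teacher-free soft label, NOT the USKD construction.

    Returns (target_value, non_target_distribution):
    - target_value: the student's own target probability (a real framework
      would detach it so no gradient flows through the label);
    - non_target_distribution: mass proportional to 1 / rank**power, where rank
      is the student's own ordering of the non-target classes (1 = highest logit).
    """
    target_value = math.exp(log_softmax(student_logits)[target])
    non_target = [i for i in range(len(student_logits)) if i != target]
    ranked = sorted(non_target, key=lambda i: student_logits[i], reverse=True)
    weight = {cls: 1.0 / (rank + 1) ** power for rank, cls in enumerate(ranked)}
    total = sum(weight.values())
    return target_value, [weight[i] / total for i in non_target]


def self_distillation_loss(student_logits: Sequence[float], target: int,
                           gamma: float = 1.0) -> float:
    """Normalized target/non-target loss against the self-generated soft label."""
    t_t, t_hat = hypothetical_soft_label(student_logits, target)
    loss_target = -t_t * log_softmax(student_logits)[target]
    rest = [z for i, z in enumerate(student_logits) if i != target]
    loss_non_target = -sum(t * ls for t, ls in zip(t_hat, log_softmax(rest)))
    return loss_target + gamma * loss_non_target
```

Swapping in a better label generator changes only `hypothetical_soft_label`; the loss side is untouched, matching the paper's split into a loss improvement (NKD) and a soft-label improvement (USKD).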

Experiments

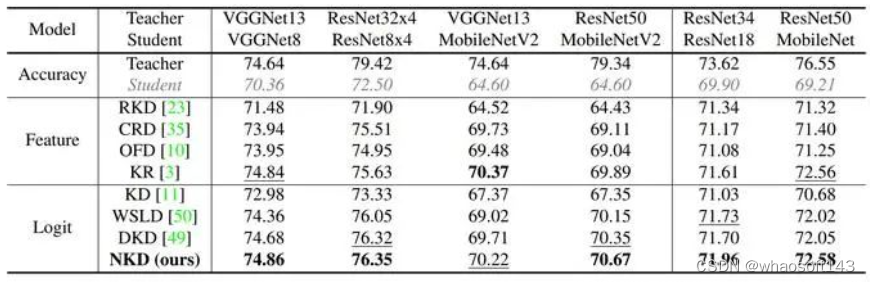

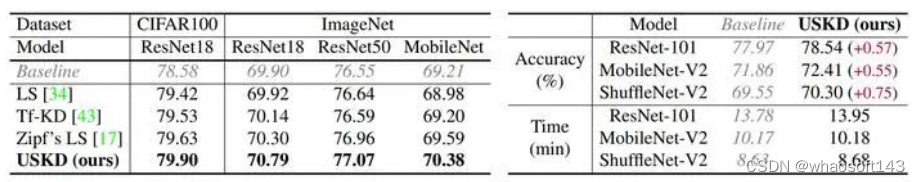

We first validate NKD on CIFAR-100 and ImageNet: with NKD, students make better use of the teacher's soft labels and achieve better performance.

For self-distillation, we likewise evaluated USKD on CIFAR-100 and ImageNet and measured the extra training time it requires: the models improve considerably while adding very little training time.

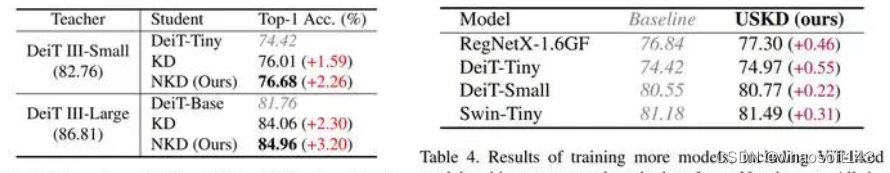

NKD and USKD apply to both CNN and ViT models, so we also validated them on a wider range of models.

Code: https://github.com/yzd-v/cls_KD
