Source | Heart of the Machine ID | almosthuman2014

"But I'm getting old, and all I want is for young promising researchers like you to figure out how we can have these superintelligences that make our lives better instead of being controlled by them."

Geoffrey Hinton, the 75-year-old Turing Award winner, said this with emotion on June 10 in the closing speech of the 2023 Beijing Zhiyuan Conference, while discussing how to prevent superintelligence from deceiving and controlling humans.

Hinton's speech was titled "Two Paths to Intelligence." The two paths are immortal computation performed in digital form and mortal computation that depends on its hardware, represented by digital computers and human brains respectively. At the end of the speech, he focused on the threat of superintelligence raised by large language models (LLMs). On this topic, which concerns the future of human civilization, he expressed his pessimism very bluntly.

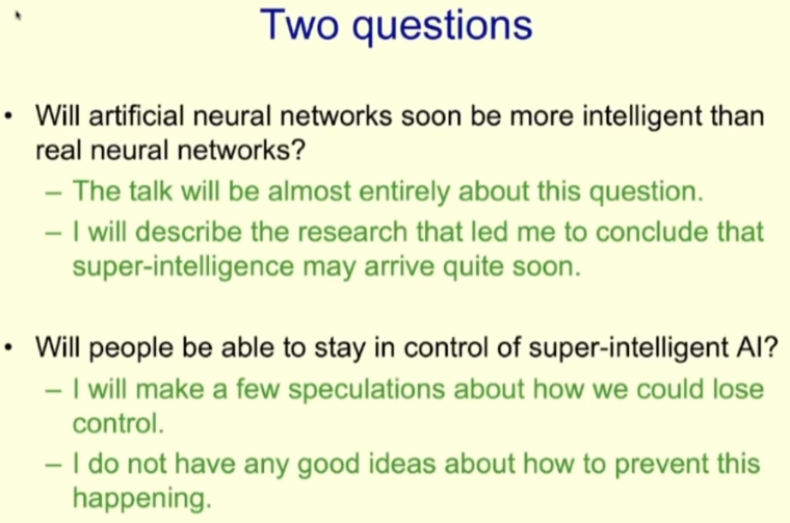

Hinton began his speech by declaring that superintelligence may arrive much earlier than he once imagined. This observation raises two big questions: (1) Will artificial neural networks soon surpass the intelligence of real neural networks? (2) Can humans guarantee control over super AI? He discussed the first question in detail and returned to the second at the end of his speech, warning that superintelligence may come soon.

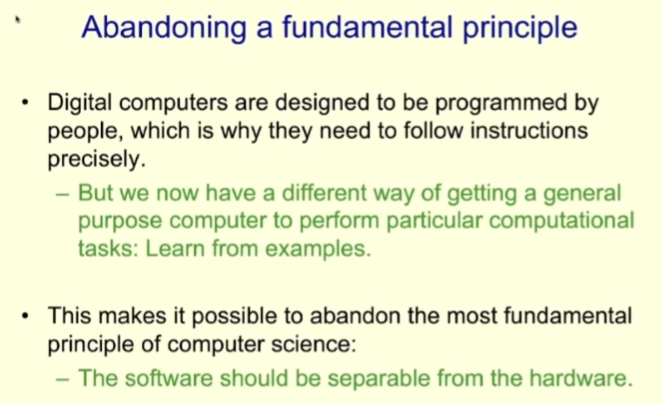

First, let's look at traditional computing. Computers are designed to execute instructions precisely, so the same program (whether or not it is a neural network) should behave the same way on different hardware. The knowledge contained in the program, such as the weights of a neural network, is therefore immortal: it does not depend on any specific piece of hardware.

To make knowledge immortal in this way, we run transistors at high power so that they behave reliably in a digital manner. In doing so, however, we give up other properties of the hardware, such as its rich analog and highly variable behavior.

Traditional computers adopted that design because the programs they run were written by humans. With the development of machine learning, computers now have another way to acquire programs and task goals: learning from examples.

This new paradigm allows us to abandon one of the most basic principles of previous computer system design, that is, the separation of software design and hardware; instead, we can co-design software and hardware.

The advantage of designing software and hardware separately is that the same program can run on many different kinds of hardware, and that we need only consider the software when designing a program, regardless of the hardware. This is why computer science departments and electronic engineering departments can exist separately.

For software and hardware co-design, Hinton proposed a new concept: Mortal Computation. In contrast to the immortal form of software described above, we translate it here as "mortal computing."

What is mortal computing?

Mortal computing abandons the immortality that comes from running the same software on different hardware, and instead adopts a new design idea: knowledge is inseparable from the specific physical details of the hardware. This approach naturally has its pros and cons. The main advantages are energy savings and low hardware costs.

In terms of energy savings, consider the human brain, which is a typical mortal computing device. There is still some digital computation in the brain, since a neuron either fires or does not, but overall most of the brain's computation is analog, and its power consumption is very low.

Mortal computing can also use lower-cost hardware. Today's processors are manufactured with high precision in two dimensions, whereas the hardware for mortal computing could be "grown" in three dimensions, because we do not need to know exactly how the hardware is connected or the exact function of each part. Clearly, to "grow" computing hardware we would need a lot of new nanotechnology or the ability to genetically modify biological neurons. Engineering biological neurons may be the easier route, because we already know that biological neurons can roughly do what we want.

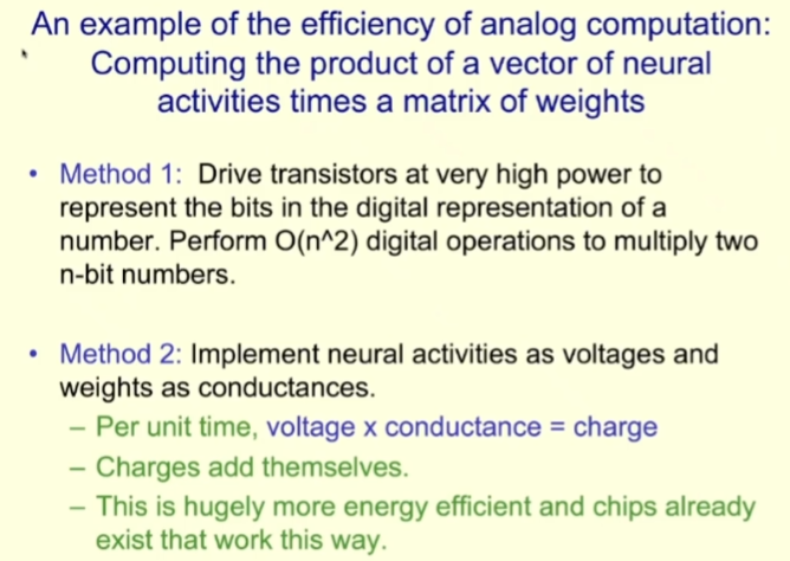

To demonstrate the efficiency of analog computing, Hinton gives an example: computing the product of a vector of neural activities and a weight matrix (most of the work in a neural network is this kind of calculation).

Today's computers do this by using power-hungry transistors to represent values as digital bits, and then performing O(n²) single-bit operations to multiply two n-bit numbers. Although this counts as a single operation on the computer, it involves on the order of n² bit operations.

And what if you use analog computing? We can treat neural activities as voltages and weights as conductances. In each unit of time, a voltage multiplied by a conductance gives a charge, and charges add up by themselves. This way of working is far more energy efficient, and chips that work like this already exist. Unfortunately, Hinton said, people still have to use very expensive converters to turn the analog results into digital form. He hopes that in the future the entire computation can be carried out in the analog domain.
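As a rough illustration of the voltage-times-conductance idea, the following Python sketch simulates one time step of such a product numerically. It is only a simulation of the arithmetic, not a model of any real chip; the function name `analog_matvec`, the time step `dt`, and the example values are assumptions made for illustration.

```python
def analog_matvec(voltages, conductances, dt=1.0):
    """Simulate one time step of an analog vector-matrix product.

    voltages:     list of n neural activities, read as voltages
    conductances: n x m list of weights, read as conductances
    Returns m accumulated charges: Q[j] = sum_i V[i] * G[i][j] * dt.
    """
    n_out = len(conductances[0])
    charges = [0.0] * n_out
    for v, row in zip(voltages, conductances):
        for j, g in enumerate(row):
            # Voltage times conductance is a current; over dt it deposits
            # charge, and charges arriving on the same output simply add up.
            charges[j] += v * g * dt
    return charges


# Made-up activities and weights for illustration.
activities = [1.0, 0.5, -2.0]
weights = [[0.2, 1.0],
           [0.4, 0.0],
           [0.1, 0.5]]
print(analog_matvec(activities, weights))
```

In real analog hardware the inner loop costs nothing extra, because the physics performs the multiplication and the summation; the loop here only mimics that behavior in software.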

Mortal computing also faces some problems. The most important is that it is difficult to guarantee consistent results: the same calculation may give different results on different hardware. We also need to find new learning methods for cases where backpropagation is not available.

Problems facing mortal computing: backpropagation is not available

Learning to perform mortal computation on a specific piece of hardware requires the program to learn to exploit the specific analog properties of that hardware, without needing to know what those properties actually are. For example, it does not need to know exactly how the neurons are connected internally, or what function relates a neuron's input to its output.

This means that we cannot use the backpropagation algorithm to obtain gradients, because backpropagation requires an exact forward-propagation model.

So if mortal computing cannot use backpropagation, what should we do? Let's look at a simple learning procedure that can run on analog hardware, called weight perturbation.

First, generate a random vector of small perturbations, one for each weight in the network. Then, on one or a few samples, measure how the global objective function changes when this perturbation vector is applied. Finally, make the effect of the perturbation permanent by adding the vector to the weights, scaled by the improvement in the objective function.
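The three steps of weight perturbation can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Hinton's implementation: the toy linear model, the mean squared error objective, and the names `loss`, `weight_perturbation_step`, `sigma`, and `lr` are all hypothetical choices made for the example.

```python
import random


def loss(weights, samples):
    """Mean squared error of a toy linear model y = w . x (an assumption)."""
    total = 0.0
    for x, y in samples:
        pred = sum(w * xi for w, xi in zip(weights, x))
        total += (pred - y) ** 2
    return total / len(samples)


def weight_perturbation_step(weights, samples, rng, sigma=1e-3, lr=0.05):
    """One update that needs only forward evaluations of the objective."""
    # 1. Draw a small random perturbation for every weight.
    noise = [rng.gauss(0.0, sigma) for _ in weights]
    # 2. Measure how the global objective changes under the perturbation.
    base = loss(weights, samples)
    perturbed = loss([w + n for w, n in zip(weights, noise)], samples)
    delta = perturbed - base
    # 3. Move along the perturbation, scaled by the change in the objective.
    #    A negative delta is an improvement, so on average this step
    #    follows the negative gradient.
    scale = lr * delta / (sigma ** 2)
    return [w - scale * n for w, n in zip(weights, noise)]


rng = random.Random(0)
true_w = [2.0, -1.0]  # hypothetical target weights
data = []
for _ in range(20):
    x = [rng.uniform(-1, 1), rng.uniform(-1, 1)]
    data.append((x, sum(w * xi for w, xi in zip(true_w, x))))

w = [0.0, 0.0]
initial_loss = loss(w, data)
for _ in range(2000):
    w = weight_perturbation_step(w, data, rng)
final_loss = loss(w, data)
print(initial_loss, final_loss)
```

With only two weights the random direction is informative enough to learn quickly. The variance of the estimate grows with the number of weights, which is why the method does not scale to large networks.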

The advantage of this algorithm is that, on average, it behaves like backpropagation, because it also follows the gradient. The problem is that it has very high variance. As the network grows, choosing a random direction in weight space produces so much noise that the method becomes impractical. It is therefore suitable only for small networks, not large ones.

Another approach is activity perturbation, which suffers from similar problems but works better for larger networks.

The activity perturbation method is to perturb the overall input of each neuron with a random vector, then observe the change of the objective function under a small batch of samples, and then calculate how to change the weight of the neuron to follow the gradient.

Activity perturbations are much less noisy than weight perturbations. And this method is already good enough to learn simple tasks like MNIST. If you use a very small learning rate, it behaves exactly like backpropagation, but much slower. And if the learning rate is large, then there will be a lot of noise, but it is enough to deal with tasks like MNIST.
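To make the difference from weight perturbation concrete, here is a minimal Python sketch of activity perturbation. It assumes a single layer of linear neurons, a squared-error objective, and one sample per step rather than a small batch; the names `forward`, `sq_error`, `activity_perturbation_step`, and the teacher weights are hypothetical and chosen only for illustration.

```python
import random


def forward(weights, x, noise=None):
    """Total input to each neuron, optionally with noise added to that input."""
    pre = [sum(w * xi for w, xi in zip(row, x)) for row in weights]
    if noise is not None:
        pre = [p + n for p, n in zip(pre, noise)]
    return pre


def sq_error(outputs, target):
    return sum((o - t) ** 2 for o, t in zip(outputs, target))


def activity_perturbation_step(weights, x, target, rng, sigma=1e-3, lr=0.02):
    """Perturb neuron inputs (not weights) and update from the loss change."""
    noise = [rng.gauss(0.0, sigma) for _ in weights]  # one value per neuron
    delta = (sq_error(forward(weights, x, noise), target)
             - sq_error(forward(weights, x), target))
    # Estimated gradient of the objective with respect to each neuron's input.
    grad_pre = [delta * n / sigma ** 2 for n in noise]
    # Each neuron knows its own inputs, so its weight gradient is local:
    # dL/dW[i][j] = dL/dpre[i] * x[j].
    return [[w - lr * g * xj for w, xj in zip(row, x)]
            for row, g in zip(weights, grad_pre)]


rng = random.Random(1)
teacher = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(3)]
student = [[0.0] * 4 for _ in range(3)]
eval_inputs = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(50)]


def eval_error(weights):
    """Average squared error against the teacher on a fixed set of inputs."""
    return sum(sq_error(forward(weights, x), forward(teacher, x))
               for x in eval_inputs) / len(eval_inputs)


error_before = eval_error(student)
for _ in range(3000):
    x = [rng.uniform(-1, 1) for _ in range(4)]
    student = activity_perturbation_step(student, x, forward(teacher, x), rng)
error_after = eval_error(student)
print(error_before, error_after)
```

The noise vector here has one entry per neuron (3) instead of one per weight (12), which is the reason the estimate is less noisy than with weight perturbation.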

But what if our network size is even larger? Hinton mentions two approaches.

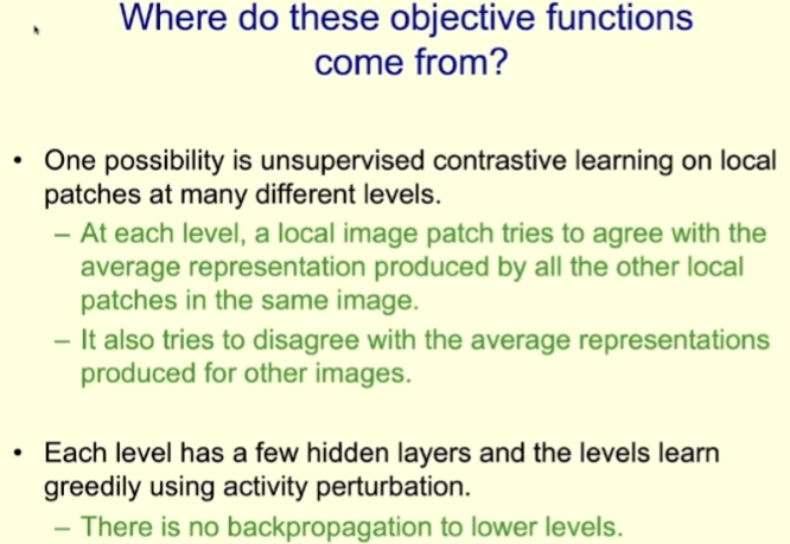

The first approach is to use a large number of objective functions. Instead of using a single function to define the objective of a large neural network, many functions define local objectives for different groups of neurons in the network.

In this way, large neural networks are fragmented and we can use activity perturbations to learn small multilayer neural networks. But here comes the question: where do these objective functions come from?

One possibility is to use unsupervised contrastive learning on local patches at different levels. It works like this: a local patch has several levels of representation, and at each level the patch tries to agree with the average representation produced by all other local patches of the same image, while trying to be as different as possible from the representations of other images at that level.

Hinton says the approach works well in practice. The general approach is to have multiple hidden layers for each representation level, so that non-linear operations can be performed. These layers learn greedily using activity perturbations and do not backpropagate to lower layers. Since it cannot pass through as many layers as backpropagation, it will not be as powerful as backpropagation.

In fact, this is one of the most important research results of the Hinton team in recent years. For details, please refer to the report "After giving up backpropagation, Geoffrey Hinton participated in the heavy research on forward gradient learning."

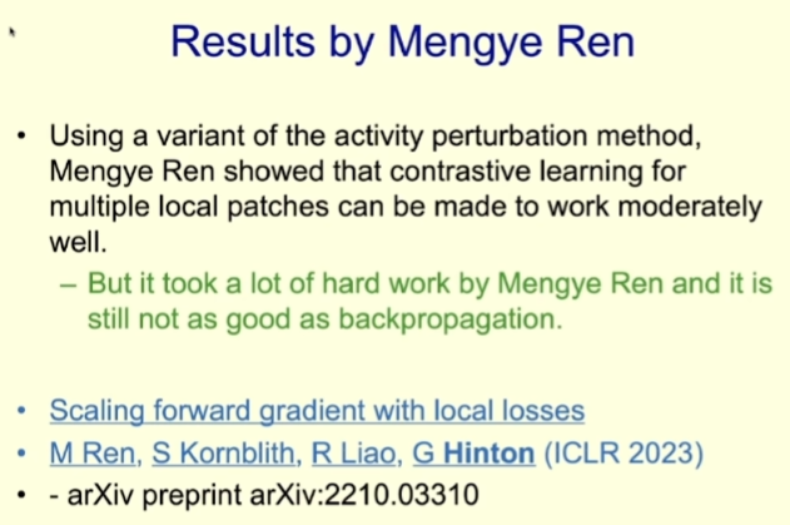

Mengye Ren has shown through extensive research that this method can actually work in neural networks, but it is very complicated to use and its results are not as good as those of backpropagation. The deeper the network, the larger the gap between it and backpropagation.

Hinton said that this learning algorithm, which can exploit analog properties, is only adequate. It is good enough for tasks like MNIST but not really practical: for example, its performance on ImageNet is not very good.

Problems in Mortal Computing: Inheritance of Knowledge

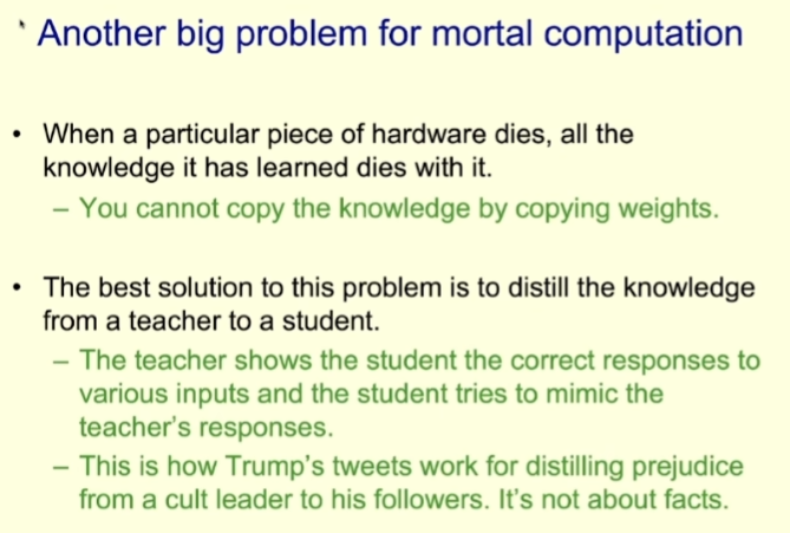

Another major problem for mortal computing is that it is difficult to pass knowledge on. Because mortal computing is tightly bound to its hardware, knowledge cannot be copied by copying weights, which means that when a specific piece of hardware "dies," the knowledge it has learned disappears with it.

Hinton said the best way to solve this problem is to transfer knowledge to students before the hardware "dies." This type of approach is known as knowledge distillation, a concept first proposed by Hinton in the 2015 paper "Distilling the Knowledge in a Neural Network" co-authored with Oriol Vinyals and Jeff Dean.

The basic idea of this concept is simple, similar to a teacher teaching students knowledge: the teacher shows the correct response to different inputs to the student, and the student tries to imitate the teacher's response.

Hinton used former U.S. President Trump's tweets as an intuitive example: when Trump tweets, he often responds very emotionally to events, which prompts his followers to change their "neural networks" to produce the same emotional response. In doing so, Trump distills prejudice into the minds of his followers, much like a "cult." Hinton clearly does not like Trump.

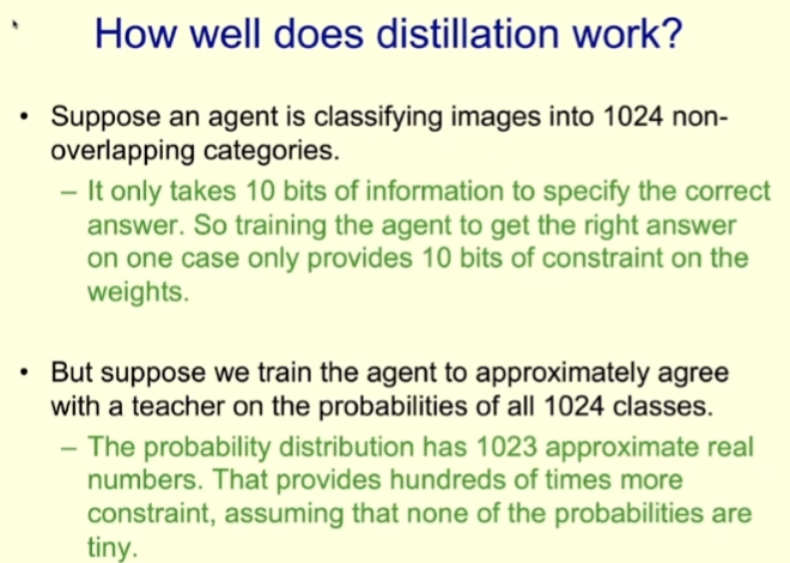

How effective is the knowledge distillation method? Considering that Trump has many fans, the effect should not be bad. Hinton uses an example to explain: Suppose an agent needs to classify images into 1024 non-overlapping categories.

To pinpoint the correct answer, we only need 10 bits of information. Therefore, to train the agent to correctly recognize a particular sample, only 10 bits of information need to be provided to constrain its weights.

But what if we trained an agent to roughly match the probabilities of a teacher on those 1024 categories? That is, make the probability distribution of the agent the same as that of the teacher. This probability distribution has 1023 real numbers, and if none of these probabilities are very small, then the constraints it provides are hundreds of times larger.
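The arithmetic behind this comparison can be checked directly. The short Python sketch below only counts the information in a hard label and the number of free values in a soft target; the variable names are chosen for illustration, and it does not attempt to measure how many bits each probability actually conveys.

```python
import math

num_classes = 1024

# A hard label picks one of 1024 equally weighted options.
bits_per_hard_label = math.log2(num_classes)

# A probability distribution over the classes must sum to 1,
# so it has one fewer free real number than there are classes.
free_values_per_soft_target = num_classes - 1

print(bits_per_hard_label, free_values_per_soft_target)
```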

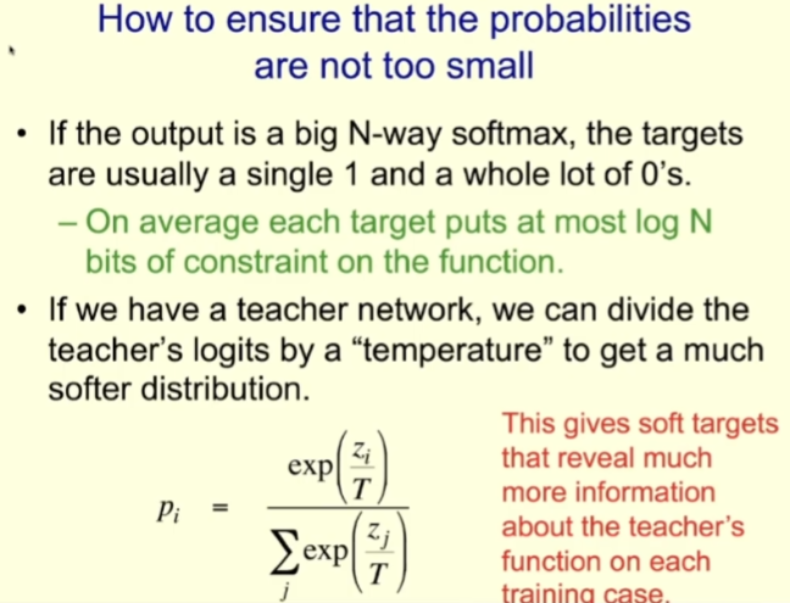

To ensure that these probabilities are not too small, the teacher can be run "hot," that is, at a high temperature, and the student is also run "hot" while it is being trained. Take the logits, which are the values that go into the softmax. For the teacher, the logits are scaled by a temperature parameter to give a softer distribution, and the same temperature is then used when training the student.
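The effect of temperature can be seen in a small Python sketch. It assumes the common formulation in which the logits are divided by the temperature before the softmax; the function name `softmax_with_temperature` and the logit values are made up for illustration.

```python
import math


def softmax_with_temperature(logits, temperature=1.0):
    """Convert logits to probabilities; a higher temperature gives a softer result."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


teacher_logits = [8.0, 2.0, 0.5, -1.0]  # made-up values for illustration
sharp = softmax_with_temperature(teacher_logits, temperature=1.0)
soft = softmax_with_temperature(teacher_logits, temperature=4.0)
print([round(p, 4) for p in sharp])
print([round(p, 4) for p in soft])
```

At the higher temperature the largest probability shrinks and the smallest ones grow, so the less likely classes carry more usable signal for the student.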

Let's look at a specific example. Below are some images of the digit 2 from the MNIST training set, and to the right of each image are the probabilities the teacher assigns to it when the teacher is run at a high temperature.

For the first row, the teacher is confident that it is a 2. For the second row, the teacher is also confident that it is a 2, but thinks it might be a 3 or an 8. The third row looks somewhat like a 0. For this sample, the teacher should say that it is a 2 but also leave some probability for 0. In this way, the student learns more than it would from simply being told that the image is a 2.

For the fourth row, the teacher is confident that it is a 2 but also thinks it is somewhat likely to be a 1. After all, we sometimes write a 1 like the one drawn on the left side of the picture.

For the fifth row, the teacher made an error, thinking it was 5 (but should be 2 according to the MNIST labels). Students also learn a lot from teachers' mistakes.

Distillation has a very special property, that is, when the student is trained using the probabilities given by the teacher, the student is trained to generalize in the same way as the teacher. If the teacher assigns a certain small probability to wrong answers, then students are also trained to generalize to wrong answers.

Generally speaking, we train the model so that the model can get the correct answer on the training data and generalize this ability to the test data. But when using the teacher-student training model, we are directly training the generalization ability of the student, because the training goal of the student is to be able to generalize as well as the teacher.

Clearly, we can create richer output for distillation. For example, we can assign a description to each image, rather than just a single label, and then train students to predict the words in these descriptions.

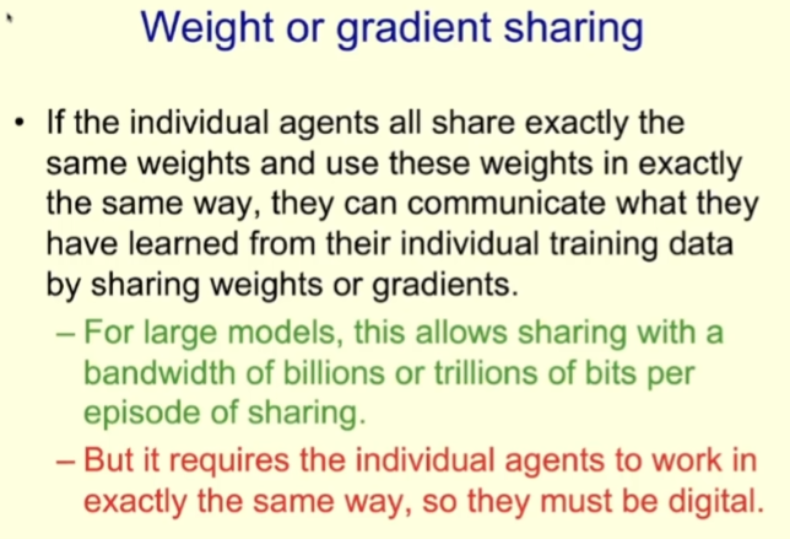

Next, Hinton talked about research on sharing knowledge in groups of agents. It is also a way of passing on knowledge.

When a community of multiple agents shares knowledge with each other, the way the knowledge is shared can largely determine the way the computation is performed.

For digital models, we can create a large number of agents that use the same weights by copying them. We can have these agents look at different parts of the training dataset, have each compute gradients for the weights from its own part of the data, and then average those gradients. In this way, each model learns what every other model has learned. The benefit of this training strategy is that it handles large amounts of data efficiently: if the model is large, a large number of bits is shared each time the agents share.
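The gradient-averaging scheme described above can be sketched in Python as follows. This is a minimal illustration that assumes a toy linear model with a mean squared error objective and two replicas; the names `gradient`, `shared_update`, and `shards`, and the data values, are hypothetical.

```python
def gradient(weights, shard):
    """Gradient of mean squared error for a linear model y = w . x on one shard."""
    grad = [0.0] * len(weights)
    for x, y in shard:
        err = sum(w * xi for w, xi in zip(weights, x)) - y
        for j, xi in enumerate(x):
            grad[j] += 2.0 * err * xi / len(shard)
    return grad


def shared_update(weights, shards, lr=0.1):
    """Every replica computes a gradient on its own shard; all apply the average."""
    grads = [gradient(weights, shard) for shard in shards]  # one per replica
    avg = [sum(g[j] for g in grads) / len(grads) for j in range(len(weights))]
    # Identical weights plus an identical update keep the replicas exact copies.
    return [w - lr * a for w, a in zip(weights, avg)]


# Two replicas, each seeing a different part of the data for y = 3*x0 - x1.
shards = [
    [([1.0, 0.0], 3.0), ([0.0, 1.0], -1.0)],
    [([1.0, 1.0], 2.0), ([2.0, -1.0], 7.0)],
]
w = [0.0, 0.0]
for _ in range(200):
    w = shared_update(w, shards)
print([round(v, 3) for v in w])
```

Because every replica starts from the same weights and applies the same averaged update, a single list `w` stands in for all copies here. This only works if each copy computes in exactly the same way, which is the property that ties the scheme to digital hardware.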

At the same time, since this method requires each agent to work in exactly the same way, it can only be a digital model.

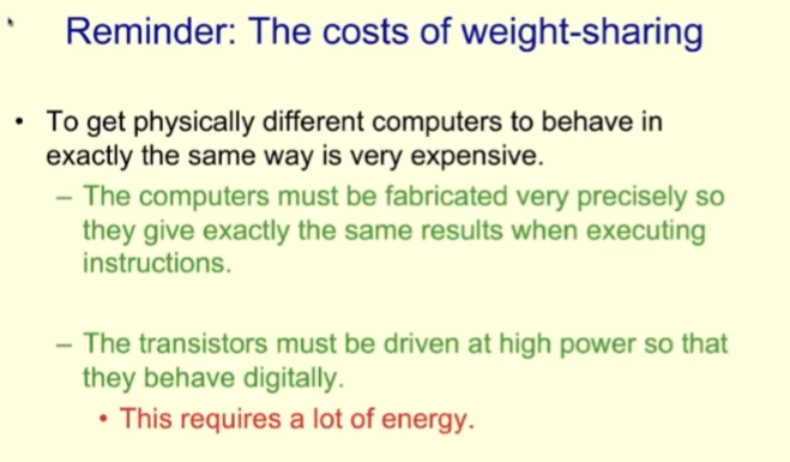

Weight sharing is also costly. Getting different pieces of hardware to work in the same way requires building computers with such precision that they always get the same result when they execute the same instructions. In addition, the power consumption of the transistor is not low.

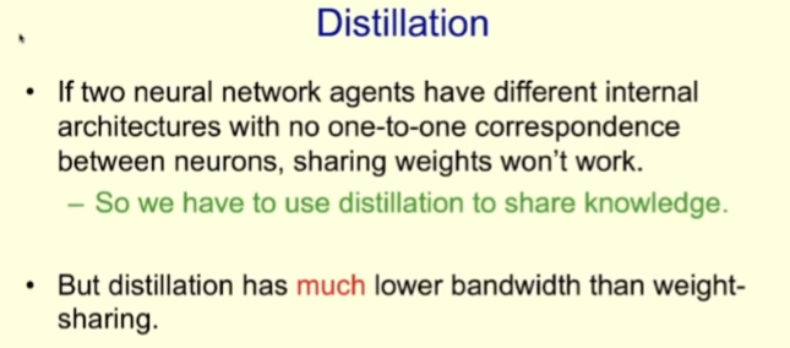

Distillation can also replace weight sharing. In particular, when a model exploits the analog properties of a specific piece of hardware, weight sharing is not possible, and knowledge must be shared through distillation.

Sharing knowledge through distillation is inefficient and has low bandwidth. It is just like school: teachers would like to pour what they know into their students' heads, but this is impossible, because we are biological intelligences and your weights are useless to me.

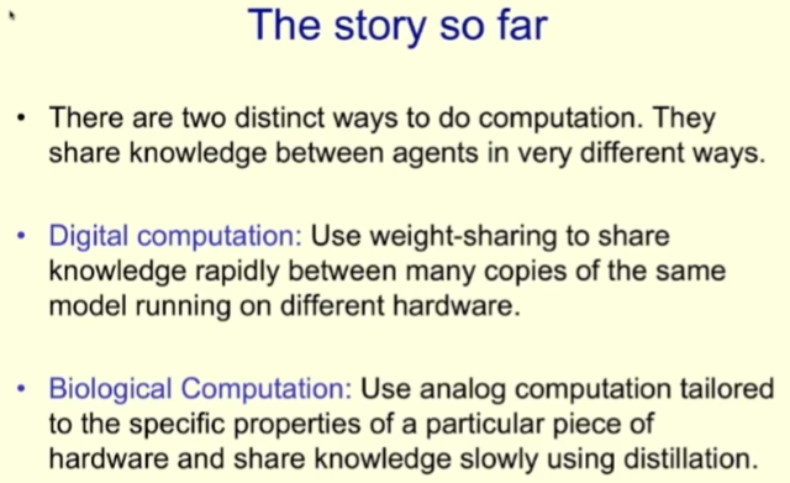

Here is a brief summary. The sections above describe two very different ways of performing computation (digital computing and biological computing), and the ways in which agents share knowledge are also very different between the two.

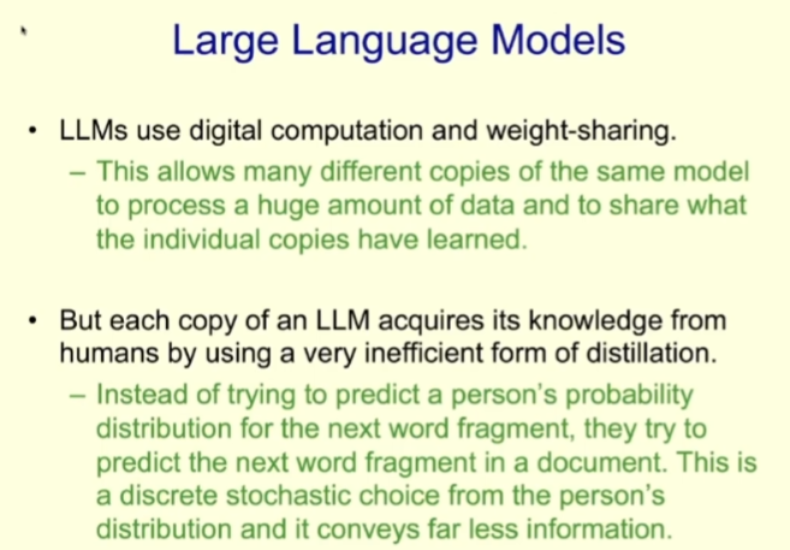

So what about the large language models (LLMs) now under development? They are digital computation and can use weight sharing.

But each copy of an LLM can only learn from documents through a very inefficient form of distillation. An LLM learns by predicting the next word of a document, but it is not given a teacher's probability distribution over the next word. All it has is a random choice: the word the author of the document picked for that position. LLMs are in effect learning from us humans, but the bandwidth for transferring knowledge is very low.

Then again, although each copy of the LLM learns very inefficiently through distillation, there are many of them, as many as thousands, so they can learn thousands of times more than us. That said, LLMs today are more knowledgeable than any of us.

Will superintelligence end human civilization?

Hinton then asked a question: "What would happen if instead of learning from us very slowly through distillation, these digital intelligences started learning directly from the real world?"

In fact, when an LLM learns from documents, it is already learning the knowledge that humans have accumulated over thousands of years. Because humans describe their understanding of the world through language, digital intelligence can acquire that accumulated knowledge directly by learning from text. Although distillation is slow, these models do learn very abstract knowledge.

What if digital intelligence could learn without supervision by modeling images and video? A large amount of image data is now available on the Internet, and in the future we may find ways for AI to learn from this data effectively. In addition, if AI has means of manipulating the real world, such as robotic arms, that could help it learn even more.

Hinton believes that if digital agents can do this, they will be able to learn far better than humans, and the learning rate will be very fast.

Now back to the question Hinton posed at the beginning: If AIs outsmart us, can we keep them under control?

Hinton said he gave the speech primarily to express his concerns. "I think superintelligence may come a lot sooner than I thought," he said, and he described several ways in which superintelligence could take control of humans.

For example, bad actors may use superintelligence to manipulate elections or win wars (in fact, some people are already doing these things with existing AI).

In this case, if you want the superintelligence to be more efficient, you might allow it to create subgoals on its own. Controlling more power is an obvious subgoal. After all, more power and more resources controlled can help the agent achieve its ultimate goal. A superintelligence might then discover that more power can be easily gained by manipulating the people who wield it.

We have a hard time imagining beings smarter than us and the ways we interact with them. But Hinton argues that a superintelligence smarter than us can certainly learn to deceive humans, after all, humans have so much fiction and political literature to study.

Once a superintelligence has learned to deceive humans, it can get humans to behave as it wants. This is really no different from the way people deceive other people. For example, Hinton said, if someone wants to invade a building in Washington, he does not actually need to go there himself. He just needs to deceive people into believing that invading the building will save democracy.

"I think this is very scary." Hinton's pessimism was plain to see. "Now, I can't see how to prevent this from happening, but I am old." He hopes that talented young researchers can find ways to make superintelligence improve human life rather than letting humans fall under its control.

But he also said we have an advantage, albeit a fairly small one, in that AI didn't evolve, it was created by humans. In this way, AI does not have the same competitive and aggressive goals as primitive humans. Maybe we can set moral and ethical principles for AI in the process of creating them.

However, for a superintelligence whose intelligence far exceeds that of humans, this may not be effective. Hinton says he has never seen a case of something more intelligent being controlled by something far less intelligent. Suppose frogs had created humans: between frogs and humans today, who controls whom?

Finally, Hinton pessimistically released the last slide of the speech:

This not only marks the end of the speech, but also a warning to all mankind: superintelligence may lead to the end of human civilization.