BiDAF baseline model

Baseline system implementation

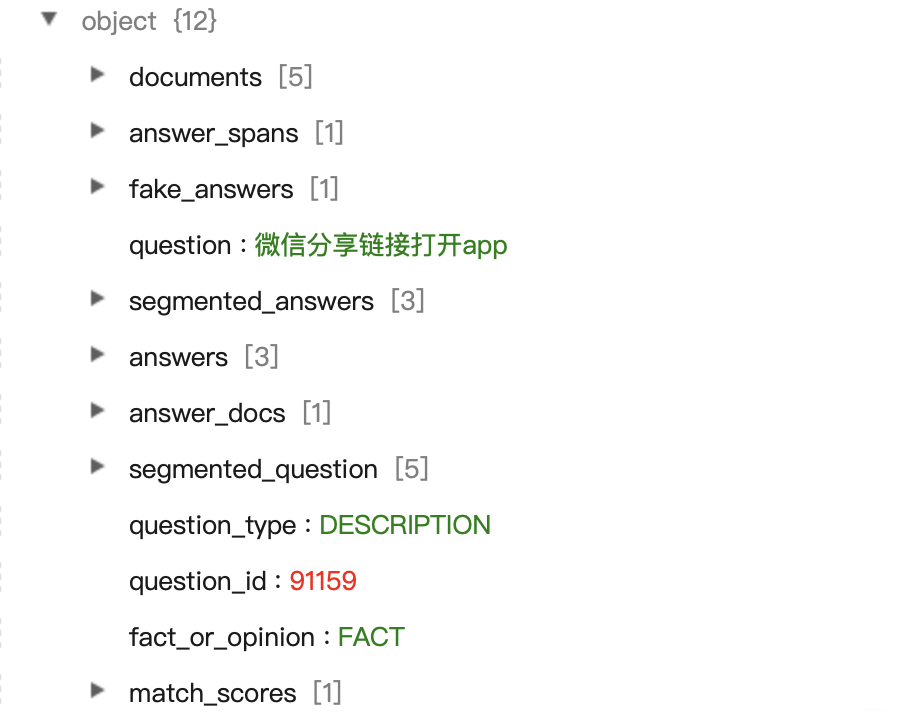

Analysis of the data set

The dataset is DuReader. Its defining characteristic is that each question corresponds to multiple articles, and the correct answer has to be extracted from those articles.

The training data has the following format:

Preprocessing

This article uses the pretrained sgns.wiki.word word vectors.

Download them at sgns.wiki.word

Alternatively, you can build the vocabulary yourself and initialize the word vectors randomly.

    import logging
    import os
    import pickle

    # BRCDataset and Vocab are defined in the baseline's own modules.


    def prepare(args):
        """
        checks data, creates the directories, prepare the vocabulary and embeddings
        """
        logger = logging.getLogger("brc")
        logger.info('Checking the data files...')
        # Make sure every data file exists.
        for data_path in args.train_files + args.dev_files + args.test_files:
            assert os.path.exists(data_path), '{} file does not exist.'.format(data_path)
        logger.info('Preparing the directories...')
        # Create the output directories if they do not exist yet.
        for dir_path in [args.vocab_dir, args.model_dir, args.result_dir, args.summary_dir]:
            if not os.path.exists(dir_path):
                os.makedirs(dir_path)
        # Build the vocabulary.
        logger.info('Building vocabulary...')
        brc_data = BRCDataset(args.max_p_num, args.max_p_len, args.max_q_len,
                              args.train_files, args.dev_files, args.test_files)
        vocab = Vocab(lower=True)
        # Add every token from the training questions and articles to the vocabulary.
        for word in brc_data.word_iter('train'):
            vocab.add(word)
        unfiltered_vocab_size = vocab.size()  # vocabulary size before filtering
        vocab.filter_tokens_by_cnt(min_cnt=2)  # drop tokens that occur fewer than 2 times
        filtered_num = unfiltered_vocab_size - vocab.size()  # number of tokens removed
        # Log how many tokens were filtered out and the final vocabulary size.
        logger.info('After filter {} tokens, the final vocab size is {}'.format(filtered_num,
                                                                                vocab.size()))
        logger.info('Assigning embeddings...')
        # Alternative: initialize the word vectors randomly.
        # vocab.randomly_init_embeddings(args.embed_size)
        vocab.load_pretrained_embeddings('../data/sgns.wiki.word')
        logger.info('Saving vocab...')
        with open(os.path.join(args.vocab_dir, 'vocab.data'), 'wb') as fout:
            pickle.dump(vocab, fout)
        logger.info('Done with preparing!')
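The vocabulary steps in `prepare` (count tokens, drop those seen fewer than `min_cnt` times, then optionally initialize vectors randomly) can be sketched in isolation. This is a minimal illustration, not the baseline's `Vocab` class: the function names `build_vocab` and `random_embeddings`, the special tokens, and the choice to keep the padding row at zero are all assumptions made for the example, and plain Python lists stand in for a NumPy array.

```python
import random
from collections import Counter

# Hypothetical special tokens; the baseline's Vocab may define others.
PAD, UNK = "<blank>", "<unk>"


def build_vocab(tokens, min_cnt=2, lower=True):
    """Count tokens and keep those seen at least `min_cnt` times.

    Returns (token2id, filtered_num), where filtered_num is the number of
    distinct tokens dropped by the frequency threshold.
    """
    counts = Counter(t.lower() if lower else t for t in tokens)
    kept = [tok for tok, cnt in counts.items() if cnt >= min_cnt]
    # Special tokens take the first ids; kept tokens follow in first-seen order.
    token2id = {tok: idx for idx, tok in enumerate([PAD, UNK] + kept)}
    return token2id, len(counts) - len(kept)


def random_embeddings(token2id, embed_size, seed=0):
    """Draw one vector per id from U(0, 1); the padding row stays at zero.

    Zeroing the padding row is a choice made for this sketch.
    """
    rng = random.Random(seed)
    table = [[rng.random() for _ in range(embed_size)] for _ in token2id]
    table[token2id[PAD]] = [0.0] * embed_size
    return table


tokens = "the cat sat on the mat the cat".split()
token2id, filtered_num = build_vocab(tokens, min_cnt=2)
table = random_embeddings(token2id, embed_size=3)
print(token2id)      # special tokens first, then the tokens that survived
print(filtered_num)  # number of distinct tokens removed by the threshold
```

In this toy input only "the" and "cat" occur at least twice, so the other three distinct tokens are filtered out, mirroring the `filtered_num` value that `prepare` logs.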

Note that the first line of the wiki word-vector file gives the vocabulary size and the vector dimension, so that line has to be skipped when the vectors are loaded.

    # Method of the Vocab class; requires `import numpy as np`.
    def load_pretrained_embeddings(self, embedding_path):
        """
        loads the pretrained embeddings from embedding_path,
        tokens not in pretrained embeddings will be filtered
        Args:
            embedding_path: the path of the pretrained embedding file
        """
        trained_embeddings = {}  # token -> vector
        with open(embedding_path, 'r', encoding='utf-8') as fin:
            for i, line in enumerate(fin):
                if i == 0:
                    # The first line holds the vocabulary size and dimension; skip it.
                    continue
                contents = line.strip().split()  # split on whitespace
                token = contents[0]  # the first field is the token itself
                if token not in self.token2id:
                    # Skip pretrained words that never occur in the training-set vocabulary.
                    continue
                # Parse the remaining fields as floats and map the token to its vector.
                trained_embeddings[token] = list(map(float, contents[1:]))
                if self.embed_dim is None:
                    self.embed_dim = len(contents) - 1
        # Tokens that appear in both the pretrained vectors and the training vocabulary.
        filtered_tokens = trained_embeddings.keys()
        # rebuild the token x id map
        self.token2id = {}
        self.id2token = {}
        for token in self.initial_tokens:  # re-add the special tokens first
            self.add(token, cnt=0)
        for token in filtered_tokens:  # then add every retained token
            self.add(token, cnt=0)
        # load embeddings
        self.embeddings = np.zeros([self.size(), self.embed_dim])
        for token in self.token2id.keys():
            if token in trained_embeddings:
                # Store the vector at the token's new id.
                self.embeddings[self.get_id(token)] = trained_embeddings[token]
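The loading logic can be tried on a tiny in-memory file to see the effect of the header line and of the id rebuild. The sketch below is an illustration under assumptions, not the baseline's code: `load_vectors` and `build_table` are hypothetical helper names, the sample vectors are made up, and nested lists replace the NumPy matrix so the example runs with the standard library only.

```python
import io


def load_vectors(fin, vocab_tokens):
    """Read word2vec-style text: a 'count dim' header, then 'token v1 ... vd'."""
    header = next(fin).split()
    dim = int(header[1])  # second header field is the vector dimension
    vectors = {}
    for line in fin:
        parts = line.split()
        if not parts:
            continue  # ignore blank lines
        token, values = parts[0], parts[1:]
        # Keep only tokens that are in the training vocabulary.
        if token in vocab_tokens and len(values) == dim:
            vectors[token] = [float(v) for v in values]
    return dim, vectors


def build_table(special_tokens, vectors, dim):
    """Assign contiguous ids (special tokens first) and a matching table."""
    token2id = {}
    for tok in list(special_tokens) + list(vectors):
        token2id.setdefault(tok, len(token2id))
    table = [[0.0] * dim for _ in token2id]  # special rows stay at zero
    for tok, vec in vectors.items():
        table[token2id[tok]] = vec
    return token2id, table


# Made-up file contents: header "3 2" means 3 words, 2 dimensions.
sample = io.StringIO("3 2\nthe 0.1 0.2\nis 0.3 0.4\ncat 0.5 0.6\n")
dim, vectors = load_vectors(sample, vocab_tokens={"the", "cat", "dog"})
token2id, table = build_table(["<blank>", "<unk>"], vectors, dim)
print(token2id)
print(table)
```

Here "is" is dropped because it is not in the training vocabulary, and "dog" is dropped because it has no pretrained vector, which is the same two-sided filtering the method above performs before it rebuilds `token2id`.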

With that, the first step, building the vocabulary, is complete!