This is the second original article in the Kafka series that I (Guide) promised everyone. To keep the content up to date, I also publish the related articles on GitHub: https://github.com/Snailclimb/springboot-kafka

Related reading: Beginners! Kafka explained in plain language!

Prerequisite: Docker is installed on your computer.

Main content:

- Installing Kafka with Docker

- Testing the message queue from the command line

- Visual management tools for ZooKeeper and Kafka

- Using Kafka from a simple Java program

Setting up a Kafka environment with Docker

Stand-alone version

The demonstrations below use a stand-alone Kafka, and a stand-alone setup is also what I recommend for learning.

The Docker-based Kafka environment below comes from the open source project https://github.com/simplesteph/kafka-stack-docker-compose. Alternatively, you can follow the Compose file provided by wurstmeister/kafka-docker: https://github.com/wurstmeister/kafka-docker/blob/master/docker-compose.yml.

Create a new file named zk-single-kafka-single.yml with the following contents:

version: '2.1'

services:
  zoo1:
    image: zookeeper:3.4.9
    hostname: zoo1
    ports:
      - "2181:2181"
    environment:
      ZOO_MY_ID: 1
      ZOO_PORT: 2181
      ZOO_SERVERS: server.1=zoo1:2888:3888
    volumes:
      - ./zk-single-kafka-single/zoo1/data:/data
      - ./zk-single-kafka-single/zoo1/datalog:/datalog

  kafka1:
    image: confluentinc/cp-kafka:5.3.1
    hostname: kafka1
    ports:
      - "9092:9092"
    environment:
      KAFKA_ADVERTISED_LISTENERS: LISTENER_DOCKER_INTERNAL://kafka1:19092,LISTENER_DOCKER_EXTERNAL://${DOCKER_HOST_IP:-127.0.0.1}:9092
      KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: LISTENER_DOCKER_INTERNAL:PLAINTEXT,LISTENER_DOCKER_EXTERNAL:PLAINTEXT
      KAFKA_INTER_BROKER_LISTENER_NAME: LISTENER_DOCKER_INTERNAL
      KAFKA_ZOOKEEPER_CONNECT: "zoo1:2181"
      KAFKA_BROKER_ID: 1
      KAFKA_LOG4J_LOGGERS: "kafka.controller=INFO,kafka.producer.async.DefaultEventHandler=INFO,state.change.logger=INFO"
      KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 1
    volumes:
      - ./zk-single-kafka-single/kafka1/data:/var/lib/kafka/data
    depends_on:
      - zoo1
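To make the two advertised listeners in the file above easier to read, here is a minimal Java sketch (not part of the original project) that splits the KAFKA_ADVERTISED_LISTENERS value into listener names and addresses. The class and method names AdvertisedListeners and parse are hypothetical and chosen only for illustration. It shows that containers on the Compose network reach the broker at kafka1:19092, while programs on the host use port 9092 on DOCKER_HOST_IP, which falls back to 127.0.0.1 when the variable is unset or empty.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper for illustration only; not part of the Kafka client API.
class AdvertisedListeners {

    // Splits "NAME://host:port,NAME2://host2:port2" into name -> "host:port".
    static Map<String, String> parse(String advertised) {
        Map<String, String> byName = new LinkedHashMap<>();
        for (String entry : advertised.split(",")) {
            String[] parts = entry.trim().split("://", 2);
            if (parts.length != 2) {
                throw new IllegalArgumentException("Malformed listener: " + entry);
            }
            byName.put(parts[0], parts[1]);
        }
        return byName;
    }

    public static void main(String[] args) {
        // Mirrors the ${DOCKER_HOST_IP:-127.0.0.1} default from the Compose file:
        // the fallback applies when the variable is unset or empty.
        String hostIp = System.getenv("DOCKER_HOST_IP");
        if (hostIp == null || hostIp.isEmpty()) {
            hostIp = "127.0.0.1";
        }
        String advertised = "LISTENER_DOCKER_INTERNAL://kafka1:19092,"
                + "LISTENER_DOCKER_EXTERNAL://" + hostIp + ":9092";
        parse(advertised).forEach((name, address) ->
                System.out.println(name + " -> " + address));
    }
}
```

This is why the Java client later in this article connects to localhost:9092 rather than to kafka1:19092.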

Run the following command to set up the environment (it automatically downloads and starts one ZooKeeper container and one Kafka container):

docker-compose -f zk-single-kafka-single.yml up

To stop the Kafka-related containers, run:

docker-compose -f zk-single-kafka-single.yml down

Cluster version

The Docker-based Kafka environment below also comes from the open source project https://github.com/simplesteph/kafka-stack-docker-compose.

Create a new file named zk-single-kafka-multiple.yml with the following contents:

version: '2.1'

services:
  zoo1:
    image: zookeeper:3.4.9
    hostname: zoo1
    ports:
      - "2181:2181"
    environment:
      ZOO_MY_ID: 1
      ZOO_PORT: 2181
      ZOO_SERVERS: server.1=zoo1:2888:3888
    volumes:
      - ./zk-single-kafka-multiple/zoo1/data:/data
      - ./zk-single-kafka-multiple/zoo1/datalog:/datalog

  kafka1:
    image: confluentinc/cp-kafka:5.4.0
    hostname: kafka1
    ports:
      - "9092:9092"
    environment:
      KAFKA_ADVERTISED_LISTENERS: LISTENER_DOCKER_INTERNAL://kafka1:19092,LISTENER_DOCKER_EXTERNAL://${DOCKER_HOST_IP:-127.0.0.1}:9092
      KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: LISTENER_DOCKER_INTERNAL:PLAINTEXT,LISTENER_DOCKER_EXTERNAL:PLAINTEXT
      KAFKA_INTER_BROKER_LISTENER_NAME: LISTENER_DOCKER_INTERNAL
      KAFKA_ZOOKEEPER_CONNECT: "zoo1:2181"
      KAFKA_BROKER_ID: 1
      KAFKA_LOG4J_LOGGERS: "kafka.controller=INFO,kafka.producer.async.DefaultEventHandler=INFO,state.change.logger=INFO"
    volumes:
      - ./zk-single-kafka-multiple/kafka1/data:/var/lib/kafka/data
    depends_on:
      - zoo1

  kafka2:
    image: confluentinc/cp-kafka:5.4.0
    hostname: kafka2
    ports:
      - "9093:9093"
    environment:
      KAFKA_ADVERTISED_LISTENERS: LISTENER_DOCKER_INTERNAL://kafka2:19093,LISTENER_DOCKER_EXTERNAL://${DOCKER_HOST_IP:-127.0.0.1}:9093
      KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: LISTENER_DOCKER_INTERNAL:PLAINTEXT,LISTENER_DOCKER_EXTERNAL:PLAINTEXT
      KAFKA_INTER_BROKER_LISTENER_NAME: LISTENER_DOCKER_INTERNAL
      KAFKA_ZOOKEEPER_CONNECT: "zoo1:2181"
      KAFKA_BROKER_ID: 2
      KAFKA_LOG4J_LOGGERS: "kafka.controller=INFO,kafka.producer.async.DefaultEventHandler=INFO,state.change.logger=INFO"
    volumes:
      - ./zk-single-kafka-multiple/kafka2/data:/var/lib/kafka/data
    depends_on:
      - zoo1

  kafka3:
    image: confluentinc/cp-kafka:5.4.0
    hostname: kafka3
    ports:
      - "9094:9094"
    environment:
      KAFKA_ADVERTISED_LISTENERS: LISTENER_DOCKER_INTERNAL://kafka3:19094,LISTENER_DOCKER_EXTERNAL://${DOCKER_HOST_IP:-127.0.0.1}:9094
      KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: LISTENER_DOCKER_INTERNAL:PLAINTEXT,LISTENER_DOCKER_EXTERNAL:PLAINTEXT
      KAFKA_INTER_BROKER_LISTENER_NAME: LISTENER_DOCKER_INTERNAL
      KAFKA_ZOOKEEPER_CONNECT: "zoo1:2181"
      KAFKA_BROKER_ID: 3
      KAFKA_LOG4J_LOGGERS: "kafka.controller=INFO,kafka.producer.async.DefaultEventHandler=INFO,state.change.logger=INFO"
    volumes:
      - ./zk-single-kafka-multiple/kafka3/data:/var/lib/kafka/data
    depends_on:
      - zoo1

Run the following command to set up an environment with 1 ZooKeeper node and 3 Kafka nodes:

docker-compose -f zk-single-kafka-multiple.yml up

To stop the Kafka-related containers, run:

docker-compose -f zk-single-kafka-multiple.yml down

Testing message production and consumption from the command line

In practice, we rarely use Kafka's command-line tools.

1. Enter the Kafka container to run the command-line tools that ship with Kafka

docker exec -ti docker_kafka1_1 bash

2. List all Topics

root@kafka1:/# kafka-topics --describe --zookeeper zoo1:2181

3. Create a Topic

root@kafka1:/# kafka-topics --create --topic test --partitions 3 --zookeeper zoo1:2181 --replication-factor 1

Created topic test.

We have created a Topic named test with 3 partitions and 1 replica.

4. The consumer subscribes to the Topic

root@kafka1:/# kafka-console-consumer --bootstrap-server localhost:9092 --topic test

send hello from console -producer

We have subscribed to the Topic named test.

5. The producer sends a message to the Topic (from a second terminal session inside the container)

root@kafka1:/# kafka-console-producer --broker-list localhost:9092 --topic test

>send hello from console -producer

>

We use the kafka-console-producer command to send a message to the Topic named test. The message content is "send hello from console -producer".

At this point, you will see that the consumer has successfully received the message:

root@kafka1:/# kafka-console-consumer --bootstrap-server localhost:9092 --topic test

send hello from console -producer

Recommended IDEA plug-ins

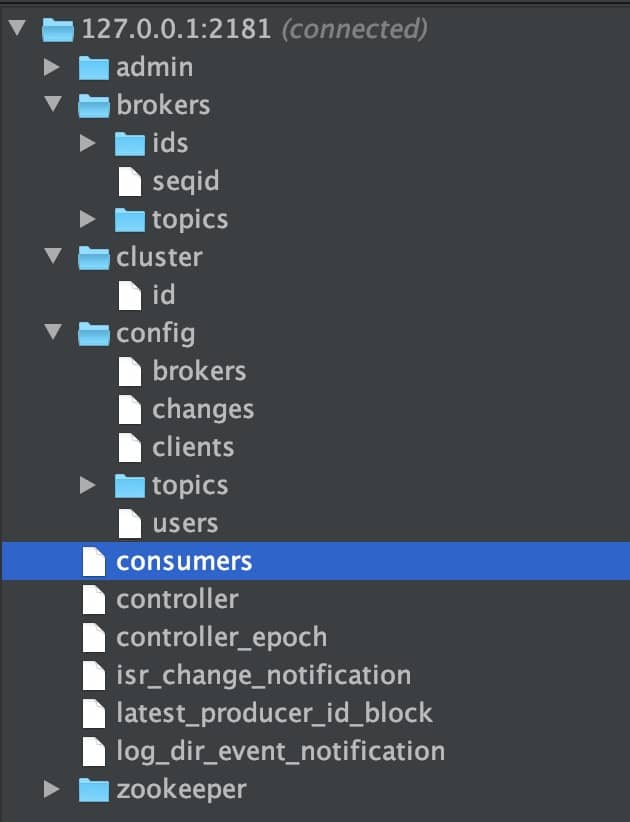

Zoolytic-Zookeeper tool

This is a ZooKeeper visualization plug-in for IDEA, and it is very easy to use! We can use it to:

- Visualize ZkNodes node information

- Manage ZkNodes nodes: add / remove

- Edit zkNodes data

- ......

In practice it looks like this:

How to use:

- Open the tool: View -> Tool Windows -> Zoolytic;

- Click "+" and enter "127.0.0.1:2181" in the pop-up to connect to ZooKeeper;

- Once connected, click the newly created connection, then click the refresh button next to the "+" sign.

Kafkalytic

A visual management plug-in for Kafka in IDEA. It provides the following features:

- Support for multiple clusters

- Topic management: create / delete / change partitions

- Search for topics with regular expressions

- Publish messages as strings or byte sequences

- Consume messages using different strategies

In practice it looks like this:

How to use:

- Open the tool: View -> Tool Windows -> Kafkalytic;

- Click "+" and enter "127.0.0.1:9092" in the pop-up to connect.
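If either plug-in fails to connect, it can help to first confirm that the ports published by the Compose file are reachable from the host. The following is a minimal sketch using only the Java standard library; the class and method names PortCheck and isReachable are hypothetical, and the 1000 ms timeout is an arbitrary choice. A successful TCP connection only shows that something is listening on the port, not that ZooKeeper or Kafka is healthy.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical helper for illustration only.
class PortCheck {

    // Returns true if a TCP connection to host:port succeeds within timeoutMillis.
    static boolean isReachable(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Covers connection refused as well as connect timeouts.
            return false;
        }
    }

    public static void main(String[] args) {
        // Addresses taken from the plug-in instructions above.
        System.out.println("ZooKeeper 127.0.0.1:2181 reachable: "
                + isReachable("127.0.0.1", 2181, 1000));
        System.out.println("Kafka 127.0.0.1:9092 reachable: "
                + isReachable("127.0.0.1", 9092, 1000));
    }
}
```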

Using Kafka from a simple Java program

Code: https://github.com/Snailclimb/springboot-kafka/tree/master/kafka-intro-maven-demo

Step 1: Create a new Maven project

Step 2: Add the dependency to pom.xml

<dependency>
    <groupId>org.apache.kafka</groupId>
    <artifactId>kafka-clients</artifactId>
    <version>2.2.0</version>
</dependency>

Step 3: Initialize the consumer and the producer

KafkaConstants is a constants class that defines some commonly used Kafka configuration constants.

public class KafkaConstants {
    public static final String BROKER_LIST = "localhost:9092";
    public static final String CLIENT_ID = "client1";
    public static final String GROUP_ID_CONFIG = "consumerGroup1";

    private KafkaConstants() {
    }
}
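BROKER_LIST above points at the single broker of the stand-alone setup. With the cluster Compose file from earlier, which publishes the brokers on ports 9092, 9093 and 9094, the same setting can hold a comma-separated list of addresses. The sketch below only builds such a string; the class and method names ClusterBrokerList and brokerList are hypothetical, and it assumes the brokers sit on consecutive ports of one host, as they do in that Compose file.

```java
import java.util.stream.Collectors;
import java.util.stream.IntStream;

// Hypothetical helper for illustration only.
class ClusterBrokerList {

    // Joins host:port pairs for consecutive ports into a comma-separated list.
    static String brokerList(String host, int firstPort, int brokerCount) {
        return IntStream.range(0, brokerCount)
                .mapToObj(i -> host + ":" + (firstPort + i))
                .collect(Collectors.joining(","));
    }

    public static void main(String[] args) {
        // Prints localhost:9092,localhost:9093,localhost:9094
        System.out.println(brokerList("localhost", 9092, 3));
    }
}
```

A client needs only one reachable address to discover the rest of the cluster, but listing several means start-up does not depend on one particular broker being up.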

ProducerCreator has a createProducer() method that returns a KafkaProducer object.

import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.Producer;
import org.apache.kafka.clients.producer.ProducerConfig;
import org.apache.kafka.common.serialization.StringSerializer;

import java.util.Properties;

/**
 * @author shuang.kou
 */
public class ProducerCreator {

    public static Producer<String, String> createProducer() {
        Properties properties = new Properties();
        properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, KafkaConstants.BROKER_LIST);
        properties.put(ProducerConfig.CLIENT_ID_CONFIG, KafkaConstants.CLIENT_ID);
        properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName());
        properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName());
        return new KafkaProducer<>(properties);
    }
}

ConsumerCreator has a createConsumer() method that returns a KafkaConsumer object.

import org.apache.kafka.clients.consumer.Consumer;
import org.apache.kafka.clients.consumer.ConsumerConfig;
import org.apache.kafka.clients.consumer.KafkaConsumer;
import org.apache.kafka.common.serialization.StringDeserializer;

import java.util.Properties;

public class ConsumerCreator {

    public static Consumer<String, String> createConsumer() {
        Properties properties = new Properties();
        properties.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, KafkaConstants.BROKER_LIST);
        properties.put(ConsumerConfig.GROUP_ID_CONFIG, KafkaConstants.GROUP_ID_CONFIG);
        properties.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());
        properties.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());
        return new KafkaConsumer<>(properties);
    }
}
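The ConsumerConfig constants used above are plain string keys, so the same configuration can be written with the key names directly. The sketch below does that using only java.util.Properties, so it runs without the Kafka dependency. The class and method names ConsumerPropsSketch and consumerProperties are hypothetical. The auto.offset.reset entry is an optional addition that is not in the original code: a consumer group with no committed offsets starts from the latest offset by default, so a consumer started after the producer has already sent its message may print nothing unless this is set to earliest.

```java
import java.util.Properties;

// Hypothetical sketch for illustration only; the original project uses ConsumerCreator.
class ConsumerPropsSketch {

    static Properties consumerProperties(String brokers, String groupId) {
        Properties properties = new Properties();
        // String values behind the ConsumerConfig constants used in ConsumerCreator.
        properties.put("bootstrap.servers", brokers);
        properties.put("group.id", groupId);
        properties.put("key.deserializer",
                "org.apache.kafka.common.serialization.StringDeserializer");
        properties.put("value.deserializer",
                "org.apache.kafka.common.serialization.StringDeserializer");
        // Assumption, not in the original: read from the beginning of the topic
        // when the group has no committed offset yet (the default is "latest").
        properties.put("auto.offset.reset", "earliest");
        return properties;
    }

    public static void main(String[] args) {
        Properties properties = consumerProperties("localhost:9092", "consumerGroup1");
        // Print the entries sorted by key, since Properties has no defined order.
        properties.stringPropertyNames().stream().sorted()
                .forEach(key -> System.out.println(key + "=" + properties.getProperty(key)));
    }
}
```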

Step 4: Send and consume messages

The producer sends a message:

import org.apache.kafka.clients.producer.Producer;
import org.apache.kafka.clients.producer.ProducerRecord;
import org.apache.kafka.clients.producer.RecordMetadata;
import java.util.concurrent.ExecutionException;

private static final String TOPIC = "test-topic";

Producer<String, String> producer = ProducerCreator.createProducer();
ProducerRecord<String, String> record =
        new ProducerRecord<>(TOPIC, "hello, Kafka!");
try {
    // send() is asynchronous; get() blocks until the send completes
    RecordMetadata metadata = producer.send(record).get();
    System.out.println("Record sent to partition " + metadata.partition()
            + " with offset " + metadata.offset());
} catch (ExecutionException | InterruptedException e) {
    System.out.println("Error in sending record");
    e.printStackTrace();
}
producer.close();

The consumer consumes messages:

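import org.apache.kafka.clients.consumer.Consumer;
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.clients.consumer.ConsumerRecords;
import java.time.Duration;
import java.util.Collections;

Consumer<String, String> consumer = ConsumerCreator.createConsumer();
// subscribe to the topic once, before the polling loop
consumer.subscribe(Collections.singletonList(TOPIC));
// consume messages in a loop
while (true) {
    ConsumerRecords<String, String> consumerRecords =
            consumer.poll(Duration.ofMillis(1000));
    for (ConsumerRecord<String, String> consumerRecord : consumerRecords) {
        System.out.println("Consumer consume message:" + consumerRecord.value());
    }
}

Step 5: Test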

Running the program prints the following to the console:

Record sent to partition 0 with offset 20

Consumer consume message:hello, Kafka!

Recommended open source projects

Some of my other open source projects:

- JavaGuide: a Java learning and interview guide that covers the core knowledge most Java programmers need to master.

- springboot-guide: a Spring Boot tutorial for beginners as well as experienced developers (maintained in my spare time; contributions are welcome).

- programmer-advancement: some good habits that I think technical people should have!

- spring-security-jwt-guide: getting started from zero! Back-end code for Spring Security with JWT (including permission verification).