SVM study notes (3) - Kernel

Kernel

Nonlinear problems

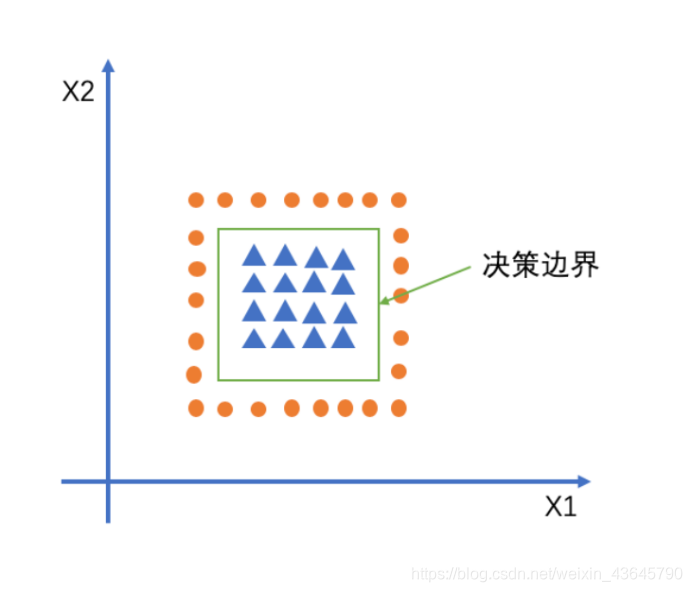

The previous two articles assumed that the samples are linearly separable. In reality, not every sample set is linearly separable. For a data set that is not linearly separable, applying the optimization approach from the previous two articles is clearly not acceptable. For an example, see the figure above.

So we need a way to turn a linearly inseparable sample space into a linearly separable one. One approach is to map the samples from the original space into a higher-dimensional feature space, where they can be separated linearly.

Fortunately, if the original space is finite-dimensional (i.e., the samples have a finite number of attributes), then there always exists a higher-dimensional feature space in which the samples are linearly separable. After mapping the linearly inseparable samples into that space, we can classify them with a linear classifier on the high-dimensional feature vectors. In other words, the nonlinear problem becomes a linear one.
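A minimal sketch of this idea on the classic XOR data set. This is a toy example of my own, not the data in the figure. No straight line in the plane separates these four points. After the mapping φ(x) = (x1, x2, x1·x2) into three dimensions, however, the plane z3 = 0 separates them. Here φ is an assumption chosen for illustration; it is not the only mapping that works.

```python
import numpy as np

# XOR-style toy data: label +1 when both coordinates share a sign, -1 otherwise.
X = np.array([[1, 1], [-1, -1], [1, -1], [-1, 1]], dtype=float)
y = np.array([1, 1, -1, -1])

def phi(x):
    """Assumed feature map R^2 -> R^3: append the product x1*x2."""
    return np.array([x[0], x[1], x[0] * x[1]])

Z = np.array([phi(x) for x in X])

# In the lifted space the hyperplane w.z + b = 0 with w = (0, 0, 1), b = 0
# (the plane z3 = 0) puts every point on the side that matches its label.
w = np.array([0.0, 0.0, 1.0])
b = 0.0
predictions = np.sign(Z @ w + b)
print(predictions)                     # [ 1.  1. -1. -1.]
print(np.array_equal(predictions, y))  # True
```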

Kernel Methods

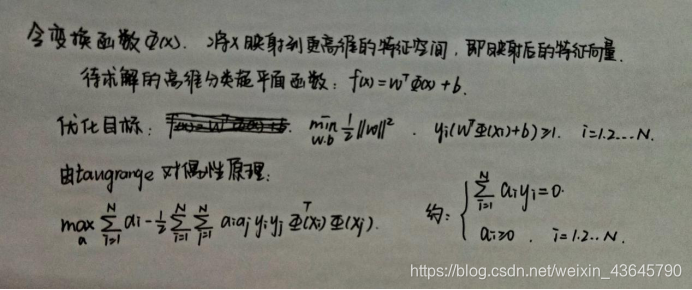

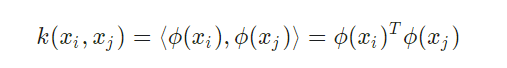

For a linearly inseparable sample space, it is hard to find a high-dimensional space in which the samples become linearly separable. Even after the samples are mapped into a high-dimensional feature space, computing inner products there is complex and expensive. This is why the concept of a kernel function is introduced:

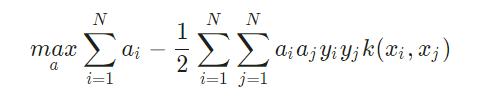

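That is, the inner product of x_i and x_j in the feature space equals the value of the function K(x_i, x_j), which is computed in the original sample space. With this substitution, the SVM dual problem becomes:

$$\max_{\alpha}\ \sum_{i=1}^{m}\alpha_i-\frac{1}{2}\sum_{i=1}^{m}\sum_{j=1}^{m}\alpha_i\alpha_j y_i y_j K(x_i,x_j)$$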

subject to the constraints:

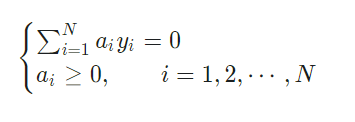

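The final decision function is:

$$f(x)=w^{\top}\phi(x)+b=\sum_{i=1}^{m}\alpha_i y_i K(x,x_i)+b$$

where m is the number of training samples.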
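Here is a small sketch of the kernel decision function f(x) = Σ α_i y_i K(x, x_i) + b. It uses a four-point XOR data set with the polynomial kernel K(x, z) = (1 + x·z)². For this symmetric toy problem, one can check by hand that α_i = 1/8 for all four points and b = 0 satisfy the hard-margin KKT conditions, so all four points are support vectors. Those values are therefore hard-coded here rather than produced by a solver. Note that prediction only ever calls K; φ is never computed.

```python
import numpy as np

def poly_kernel(x, z):
    """Polynomial kernel K(x, z) = (1 + x.z)^2."""
    return (1.0 + np.dot(x, z)) ** 2

# Four-point XOR training set; label y = x1 * x2.
X = np.array([[1, 1], [-1, -1], [1, -1], [-1, 1]], dtype=float)
y = np.array([1.0, 1.0, -1.0, -1.0])

# Dual solution for this toy problem, worked out by hand: every alpha_i = 1/8, b = 0.
alpha = np.full(4, 1 / 8)
b = 0.0

def decision_function(x):
    """f(x) = sum_i alpha_i * y_i * K(x, x_i) + b, evaluated only through the kernel."""
    return sum(a * yi * poly_kernel(x, xi) for a, yi, xi in zip(alpha, y, X)) + b

# The dual constraint sum_i alpha_i * y_i = 0 holds.
print(np.dot(alpha, y))  # 0.0
# Every training point lies exactly on its margin: y_i * f(x_i) = 1.
print([float(yi * decision_function(xi)) for xi, yi in zip(X, y)])  # [1.0, 1.0, 1.0, 1.0]
# A new point: f((0.5, 0.5)) = 0.25 > 0, so it is classified as +1.
print(decision_function(np.array([0.5, 0.5])))  # 0.25
```

For this data set, the learned function simplifies to f(x) = x1·x2. That is the same boundary an explicit x1·x2 feature would give, here reached without ever building the feature.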

Suppose the inner product in the feature space can be computed directly as a function of the original input points. Then the two steps (mapping into the feature space, then taking the inner product) can be fused into one to build a nonlinear learner. This way of computing the inner product directly is called the kernel method.

With a kernel function we never have to compute inner products directly in the high-dimensional, or even infinite-dimensional, feature space. Instead they are computed in the original input space, which greatly reduces the computational cost.
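A quick numerical check of this claim, using the degree-2 polynomial kernel K(x, z) = (x·z)² on 2-D inputs as an example. Its explicit feature map is φ(x) = (x1², √2·x1·x2, x2²). So the inner product in the 3-D feature space can be replaced by one dot product and a square in the original 2-D space.

```python
import numpy as np

def phi(x):
    """Explicit feature map for K(x, z) = (x.z)^2 on R^2."""
    return np.array([x[0] ** 2, np.sqrt(2) * x[0] * x[1], x[1] ** 2])

def kernel(x, z):
    """The same quantity computed directly in the original 2-D space."""
    return np.dot(x, z) ** 2

x = np.array([1.0, 2.0])
z = np.array([3.0, -1.0])

explicit = np.dot(phi(x), phi(z))  # map first, then take the inner product
implicit = kernel(x, z)            # kernel trick: no mapping needed
print(implicit)                          # 1.0
print(np.isclose(explicit, implicit))    # True (equal up to floating-point rounding)
```

For d-dimensional inputs, the feature space of this kernel has d(d+1)/2 dimensions, while the kernel itself costs only one d-dimensional dot product. That gap is where the savings come from.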

Existence of kernel functions

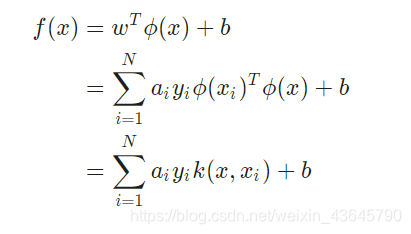

A symmetric function can be used as a kernel function as long as its kernel matrix is positive semi-definite for every set of samples. In fact, for any positive semi-definite kernel matrix, a corresponding mapping φ can always be found. In other words, every kernel function implicitly defines a feature space, called a "reproducing kernel Hilbert space" (RKHS). (Emmm, I don't understand this sentence.)
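The condition can be checked numerically on a finite sample: build the kernel matrix and look at its smallest eigenvalue. The sketch below uses random points and the Gaussian kernel with an assumed width σ = 1. The Gaussian kernel matrix has no negative eigenvalues beyond rounding error. The symmetric function "Euclidean distance" fails the check: its matrix has a zero diagonal and positive off-diagonal entries, so its eigenvalues sum to zero and at least one must be negative. Passing the check on one sample does not prove that a function is a kernel, since the condition must hold for every sample set.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(20, 2))  # 20 random points in R^2

# Pairwise squared distances ||x_i - x_j||^2.
diff = X[:, None, :] - X[None, :, :]
sq_dists = np.sum(diff ** 2, axis=-1)

sigma = 1.0
K_gauss = np.exp(-sq_dists / (2 * sigma ** 2))  # Gaussian (RBF) kernel matrix
K_dist = np.sqrt(sq_dists)                      # plain distance: symmetric, but not a kernel

# eigvalsh is meant for symmetric matrices and returns eigenvalues in ascending order.
print(np.linalg.eigvalsh(K_gauss)[0] >= -1e-10)  # True: positive semi-definite
print(np.linalg.eigvalsh(K_dist)[0] < 0)         # True: has a negative eigenvalue
```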

Common kernel functions

A kernel function must satisfy Mercer's theorem. That is, for any finite set of samples from the sample space, the Gram matrix built from the kernel function must be positive semi-definite.

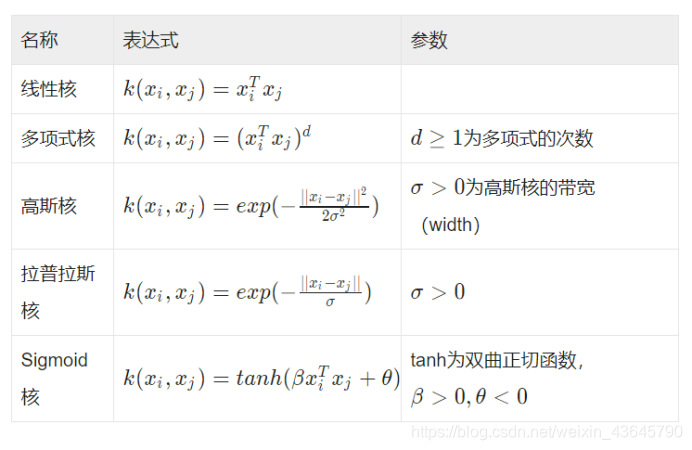

Common kernel functions include the linear kernel, the polynomial kernel, the radial basis function (RBF) kernel, the Sigmoid kernel, composite kernels, Fourier series kernels, B-spline kernels, tensor product kernels, and so on.

The following are several commonly used kernel functions:
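A minimal sketch of four of the kernels listed above, written in their usual textbook forms. The parameter names and default values (degree d, width sigma, beta, theta) are illustrative assumptions, not recommended settings.

```python
import numpy as np

def linear_kernel(x, z):
    """K(x, z) = x.z"""
    return np.dot(x, z)

def polynomial_kernel(x, z, d=3):
    """K(x, z) = (x.z)^d with degree d >= 1."""
    return np.dot(x, z) ** d

def gaussian_kernel(x, z, sigma=1.0):
    """RBF kernel K(x, z) = exp(-||x - z||^2 / (2 sigma^2)) with width sigma > 0."""
    return np.exp(-np.sum((x - z) ** 2) / (2 * sigma ** 2))

def sigmoid_kernel(x, z, beta=1.0, theta=-1.0):
    """K(x, z) = tanh(beta * x.z + theta); not positive semi-definite for every parameter choice."""
    return np.tanh(beta * np.dot(x, z) + theta)

x = np.array([1.0, 0.0])
z = np.array([0.0, 1.0])
for k in (linear_kernel, polynomial_kernel, gaussian_kernel, sigmoid_kernel):
    print(k.__name__, k(x, z))
```

The Gaussian kernel equals 1 when x = z and decays toward 0 as the two points move apart. This is why σ controls how "local" the resulting decision boundary is.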

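New kernel functions can also be obtained by combining existing ones. For example, if K1 and K2 are kernel functions, then a positive linear combination γ1K1 + γ2K2 (with γ1, γ2 > 0) and the product K1(x, z)K2(x, z) are also kernel functions.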
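As a numerical illustration (not a proof), the sketch below builds kernel matrices for a linear kernel and a Gaussian kernel on random points. It then checks that a positive weighted sum and an elementwise product of the two are still positive semi-definite. The weights 2 and 3 and σ = 1 are arbitrary choices.

```python
import numpy as np

rng = np.random.default_rng(1)
X = rng.normal(size=(15, 3))

K_lin = X @ X.T                                            # linear kernel matrix
sq = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1)
K_rbf = np.exp(-sq / 2.0)                                  # Gaussian kernel matrix, sigma = 1

K_sum = 2.0 * K_lin + 3.0 * K_rbf  # gamma1*K1 + gamma2*K2 with positive gammas
K_prod = K_lin * K_rbf             # elementwise product: K(x, z) = K1(x, z) * K2(x, z)

for name, K in [("sum", K_sum), ("product", K_prod)]:
    smallest = np.linalg.eigvalsh(K)[0]
    print(name, smallest >= -1e-8)  # True: positive semi-definite up to rounding error
```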


Example: solving a nonlinear problem with a kernel function

Suppose you are a farmer with a flock of sheep. To protect the flock from wolves, you need to build a fence around the sheep. Where should the fence be built?

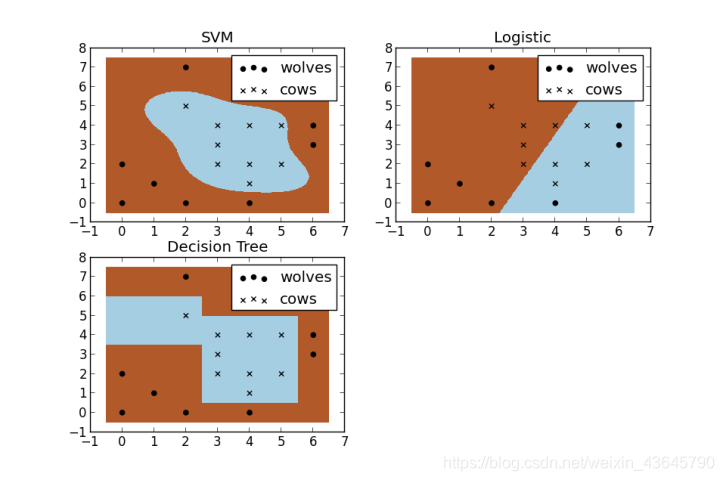

You would probably need to build a "classifier" based on the positions of the sheep and the wolves. Comparing the different classifiers in the figure, we can see that the SVM gives a perfect solution.
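To connect the fence picture to the kernel idea, here is a sketch with hypothetical positions: sheep near the origin and wolves on a surrounding ring. The coordinates are made up, not taken from the figure. A circular fence is not a straight line in the plane, but the assumed map φ(x) = (x1, x2, x1² + x2²) turns it into the plane z3 = r², which a linear classifier can represent. The matching kernel K(x, z) = x·z + ‖x‖²‖z‖² gives the same inner product without building φ. The fence radius of 2 is picked by hand; an SVM would instead learn w and b from the data.

```python
import numpy as np

# Hypothetical positions: 8 sheep on a circle of radius 1, 8 wolves on a circle of radius 3.
angles = np.linspace(0, 2 * np.pi, 8, endpoint=False)
sheep = np.column_stack([np.cos(angles), np.sin(angles)])
wolves = 3 * np.column_stack([np.cos(angles + 0.3), np.sin(angles + 0.3)])
X = np.vstack([sheep, wolves])
y = np.array([1] * 8 + [-1] * 8)  # +1 = sheep, -1 = wolf

def phi(x):
    """Assumed lift R^2 -> R^3: append the squared distance from the origin."""
    return np.array([x[0], x[1], x[0] ** 2 + x[1] ** 2])

def kernel(x, z):
    """Inner product phi(x).phi(z), computed without building phi."""
    return np.dot(x, z) + np.dot(x, x) * np.dot(z, z)

# A circular fence of radius 2 is the plane z3 = 4 in the lifted space:
# w = (0, 0, -1), b = 4 gives w.phi(x) + b > 0 inside the fence (sheep).
w = np.array([0.0, 0.0, -1.0])
b = 4.0
pred = np.sign([w @ phi(x) + b for x in X])
print(np.array_equal(pred, y))  # True: the fence separates sheep from wolves

# The kernel agrees with the explicit inner product for a sheep/wolf pair.
print(np.isclose(kernel(X[0], X[9]), phi(X[0]) @ phi(X[9])))  # True
```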