20175306 "Information Security System Design Basics" Week 13 learning summary

Pick the chapter of the textbook that you consider most important and study it again in depth (this counts for 10 points of the regular grade):

- Complete all the exercises in the chapter

- Write a detailed summary of the main points

- Explain your summary to your study partner and get feedback

Following the study-summary template above, publish your learning process as a blog post (essay) titled "Study summary for Week 13 of 'Information Security System Design Basics'", and submit the post through the assignment system by 23:59 this week.

Chapter 12: Concurrent Programming, study summary

- Logical control flows are concurrent if they overlap in time. Concurrency shows up at many different levels of a computer system.

- Modern operating systems provide three basic approaches for building concurrent programs:

1. Processes: each logical control flow is a process.

2. I/O multiplexing: the application explicitly schedules its own logical flows in the context of a single process.

3. Threads: threads are logical flows that run in the context of a single process.

- Application-level concurrency is useful for:

1. Accessing slow I/O devices. When an application is waiting for data to arrive from a slow I/O device (such as a disk), the kernel keeps the CPU busy by running other processes. Individual applications can exploit concurrency in a similar way by overlapping useful work with I/O requests.

2. Interacting with humans. People who interact with computers expect to perform several tasks at the same time. For example, they might want to resize a window while printing a document. Modern windowing systems use concurrency to provide this capability: each time the user requests an action (say, by clicking the mouse), a separate concurrent logical flow is created to perform it.

3. Reducing latency by deferring work. Sometimes an application can reduce the latency of certain operations by deferring other operations and performing them concurrently. For example, a dynamic memory allocator might reduce the latency of individual free operations by deferring coalescing to a concurrent "coalescing" flow that runs at a lower priority and soaks up spare CPU cycles as they become available.

4. Servicing multiple network clients.

5. Computing in parallel on multi-core machines. Many modern systems are equipped with multi-core processors that contain multiple CPUs. Applications that are partitioned into concurrent flows often run faster on multi-core machines than on uniprocessor machines, because the flows execute in parallel rather than being interleaved.

12.1 Concurrent programming with processes

Process-based concurrent server

- Use a SIGCHLD handler to reap the resources of zombie child processes.

- The parent must close its own copy of connfd (the connected descriptor) to avoid a memory leak.

- Because the socket's file-table entry keeps a reference count, the connection to the client is not terminated until both the parent's and the child's copies of connfd are closed.
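The reference-count behavior can be observed without any networking. The following is a minimal sketch, assuming a pipe stands in for the connected socket; the helper name `child_reads_after_parent_close` and the second "ready" pipe used for ordering are illustrative choices, not part of the textbook server.

```c
#include <assert.h>
#include <string.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Hypothetical helper: returns 1 if the child can still read through its
 * copy of a descriptor after the parent has closed its own copy,
 * 0 if it cannot, and -1 if setup fails. */
int child_reads_after_parent_close(void)
{
    int data[2], ready[2];
    if (pipe(data) < 0 || pipe(ready) < 0)
        return -1;

    /* Queue two bytes so the child has something to read later. */
    if (write(data[1], "hi", 2) != 2)
        return -1;

    pid_t pid = fork();     /* child inherits copies; reference counts go 1 -> 2 */
    if (pid < 0)
        return -1;

    if (pid == 0) {         /* child: plays the role of the server child */
        char go, buf[2];
        if (read(ready[0], &go, 1) != 1)   /* wait until the parent has closed */
            _exit(2);
        int ok = read(data[0], buf, 2) == 2 && memcmp(buf, "hi", 2) == 0;
        _exit(ok ? 0 : 1);
    }

    assert(pid > 0);        /* only the parent reaches this point */
    close(data[0]);         /* parent closes its copy; reference count 2 -> 1 */
    if (write(ready[1], "g", 1) != 1)
        return -1;

    int status;
    if (waitpid(pid, &status, 0) < 0)
        return -1;
    close(data[1]);
    close(ready[0]);
    close(ready[1]);
    return WIFEXITED(status) && WEXITSTATUS(status) == 0;
}
```

The child's read succeeds because closing a descriptor only decrements the reference count; the kernel releases the underlying file-table entry when the count reaches 0.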

Pros and cons of processes

- Parent and child share state information through the shared file table, but they do not share user address space.

- To share other information, processes must use explicit interprocess communication (IPC) mechanisms, which carry high overhead and therefore tend to be slow.

12.2 Concurrent programming with I/O multiplexing

- Use the select function to ask the kernel to suspend the process and return control to the application only after one or more I/O events have occurred.

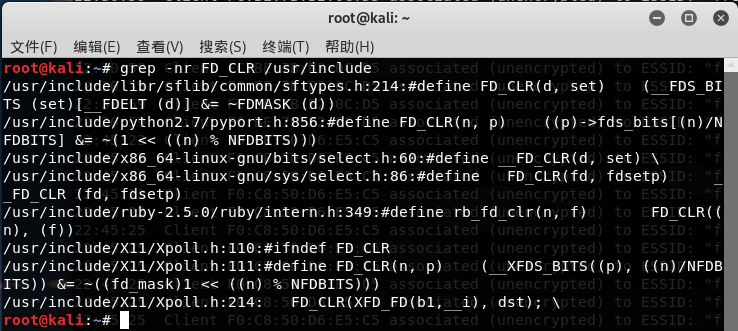

int select(int n, fd_set *fdset, NULL, NULL, NULL);

Returns the nonzero count of ready descriptors, or -1 on error.

- select operates on sets of type fd_set, called descriptor sets, which can be viewed as bit vectors of size n, where descriptor k is a member of the set if and only if $b_k = 1$:

$b_{n-1}, \ldots, b_1, b_0$

- You may do only three things with a descriptor set:

- Allocate it.

- Assign one variable of this type to another.

- Modify and inspect it with the FD_ZERO, FD_SET, FD_CLR and FD_ISSET macros.
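The descriptor-set macros and select can be sketched together as follows. This is a minimal illustration, not the textbook server: `wait_readable` is a hypothetical helper name, and unlike the signature above it passes a timeout as the last argument of select so that the example cannot block forever.

```c
#include <assert.h>
#include <sys/select.h>
#include <sys/time.h>
#include <unistd.h>

/* Hypothetical helper: returns 1 if fd is ready for reading, 0 if the
 * timeout expires first, and -1 on error. */
int wait_readable(int fd, int timeout_ms)
{
    /* An fd_set can only hold descriptors below FD_SETSIZE. */
    assert(fd >= 0 && fd < FD_SETSIZE);

    fd_set read_set;
    FD_ZERO(&read_set);         /* read_set = {} */
    FD_SET(fd, &read_set);      /* read_set = {fd} */

    struct timeval tv;
    tv.tv_sec = timeout_ms / 1000;
    tv.tv_usec = (timeout_ms % 1000) * 1000;

    /* select overwrites read_set with the ready set, which is why the
     * set has to be rebuilt before every call. */
    int n = select(fd + 1, &read_set, NULL, NULL, &tv);
    if (n < 0)
        return -1;
    return FD_ISSET(fd, &read_set) ? 1 : 0;
}
```

A caller would typically loop, rebuilding the read set, calling select, and then checking each descriptor of interest with FD_ISSET.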

Concurrent event-driven server based on I/O multiplexing

- I/O multiplexing can serve as the basis for concurrent event-driven programs.

- State machine: a collection of states, input events, output events, and transitions.

- Self-loop: a transition whose input state and output state are the same.

Pros and cons of I/O multiplexing

- Compared with process-based designs, it gives the programmer more control over the behavior of the program. Because the program runs in the context of a single process, every logical flow has access to the entire address space, so sharing data between flows is easy.

- The coding is complex, and the complexity grows as the granularity of concurrency decreases. Granularity here means the number of instructions each logical flow executes per time slice.

12.3 Concurrent programming with threads

Thread: a logical flow that runs in the context of a process and is scheduled automatically by the kernel. Each thread has its own thread context, which includes a unique integer thread ID, stack, stack pointer, program counter, general-purpose registers, and condition codes. All threads running in a process share the entire virtual address space of that process.

Thread execution model

Each process begins life as a single thread called the main thread. At some point the main thread creates a peer thread, and from then on the two threads run concurrently. Eventually control passes to the peer thread through a context switch, either because the main thread executes a slow system call or because it is interrupted by the system's interval timer. The peer thread runs for a while and then passes control back to the main thread, and so on.

Posix threads

- Posix threads (Pthreads) is a standard interface for manipulating threads from C programs. It lets a program create, kill, and reap threads, and share data safely with peer threads.

- The code and local data of a thread are encapsulated in a thread routine.

Creating threads

- Threads create other threads by calling pthread_create.

int pthread_create(pthread_t *tid, pthread_attr_t *attr, func *f, void *arg);

Returns 0 on success, nonzero on error.

When pthread_create returns, the argument tid contains the ID of the newly created thread. The new thread can obtain its own thread ID by calling pthread_self.

pthread_t pthread_self(void);

Returns the thread ID of the caller.

Terminating threads

- A thread terminates in one of the following ways:

- The thread terminates implicitly when its top-level thread routine returns.

- The thread terminates explicitly by calling pthread_exit.

void pthread_exit(void *thread_return);

Reaping terminated threads

A thread waits for another thread to terminate by calling pthread_join.

int pthread_join(pthread_t tid, void **thread_return);

Returns 0 on success, nonzero on error.

Detaching threads

At any point in time, a thread is either joinable or detached. A joinable thread can be reaped and killed by other threads, and its memory resources are not freed until another thread reaps it. A detached thread, in contrast, cannot be reaped or killed by other threads, and its resources are freed automatically when it terminates.

int pthread_detach(pthread_t tid);

Returns 0 on success, nonzero on error.

Initializing threads

pthread_once lets you initialize the state associated with a thread routine.

pthread_once_t once_control = PTHREAD_ONCE_INIT;

int pthread_once(pthread_once_t *once_control, void (*init_routine)(void));

Always returns 0.
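The Pthreads calls listed above can be combined into a small sketch. Everything named here (`square`, `sum_of_squares`, `init_call_count`, the limit of 64 threads) is hypothetical and chosen only for illustration; passing a small integer through the `void *` argument is a common shortcut rather than a requirement of the interface.

```c
#include <assert.h>
#include <pthread.h>

static pthread_once_t once = PTHREAD_ONCE_INIT;
static int init_calls = 0;      /* how many times init_routine has run */

static void init_routine(void)
{
    init_calls++;               /* pthread_once runs this exactly once */
}

/* Thread routine: squares the integer smuggled through the void * argument. */
static void *square(void *vargp)
{
    long x = (long)vargp;
    pthread_once(&once, init_routine);
    return (void *)(x * x);     /* same effect as pthread_exit((void *)(x * x)) */
}

/* Hypothetical helper: squares 0..n-1 in n joinable peer threads and
 * returns the sum of the results, or -1 if a thread could not be created. */
long sum_of_squares(int n)
{
    assert(n >= 0 && n <= 64);
    pthread_t tid[64];
    long sum = 0;
    int created = 0;

    for (int i = 0; i < n; i++) {
        if (pthread_create(&tid[i], NULL, square, (void *)(long)i) != 0)
            break;
        created++;
    }
    for (int i = 0; i < created; i++) {
        void *ret;
        pthread_join(tid[i], &ret);   /* reap the peer and collect its return value */
        sum += (long)ret;
    }
    return created == n ? sum : -1;
}

int init_call_count(void)
{
    return init_calls;
}
```

Because every peer is joined, none of them needs to be detached; a peer that nobody will join would instead call pthread_detach(pthread_self()).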

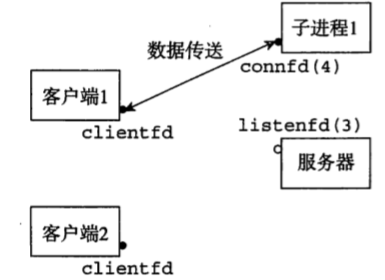

Steps of the process-based concurrent server from Section 12.1:

Step 1: The server accepts a connection request from a client.

Step 2: The server forks a child process to serve that client.

Step 3: The server accepts another connection request.

After the parent forks a child for client 1, it accepts a new connection request from client 2 and gets back a new connected descriptor (say, descriptor 5). The parent then forks another child, which uses connected descriptor 5 to serve its client.

At this point the parent is waiting for the next connection request, and the two children are serving their respective clients concurrently.

12.4 Shared variables in threaded programs

A variable is shared if and only if multiple threads reference some instance of the variable.

Thread memory model

- Each thread has its own thread context, which includes a unique integer thread ID, stack, stack pointer, program counter, general-purpose registers, and condition codes.

- Registers are never shared, whereas virtual memory is always shared.

- The separate thread stacks live in the stack area of the virtual address space and are usually accessed independently by their respective threads. They are not protected from other threads, however: a thread that obtains a pointer into another thread's stack can read and write it.

Mapping variables to memory

- Global variables: variables declared outside of a function. At run time there is exactly one instance of each global variable.

- Local automatic variables: variables declared inside a function without the static attribute. Each thread's stack holds its own instance.

- Local static variables: variables declared inside a function with the static attribute. As with global variables, there is exactly one instance.

Shared variables

A variable v is shared if and only if one of its instances is referenced by more than one thread.
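The mapping of variables to instances can be checked with a small experiment. The sketch below is illustrative only; `struct slot`, `count_instances`, and the limit `MAXT` are hypothetical names. Each thread increments a global, a local static, and a local automatic variable, and reports what it saw.

```c
#include <assert.h>
#include <pthread.h>

#define MAXT 8

struct slot { int auto_seen; int static_seen; };

int global_cnt = 0;     /* global: exactly one instance, shared */
static pthread_mutex_t lock = PTHREAD_MUTEX_INITIALIZER;

static void *thread(void *vargp)
{
    struct slot *out = vargp;    /* points to storage owned by the caller */
    static int static_cnt = 0;   /* local static: one instance, shared */
    int auto_cnt = 0;            /* local automatic: one instance per thread */

    pthread_mutex_lock(&lock);
    global_cnt++;
    out->static_seen = ++static_cnt;
    out->auto_seen = ++auto_cnt;
    pthread_mutex_unlock(&lock);
    return NULL;
}

/* Hypothetical helper: runs n threads, fills out[0..n-1], and returns
 * global_cnt, or -1 if a thread could not be created. */
int count_instances(int n, struct slot *out)
{
    assert(n >= 0 && n <= MAXT && out != NULL);
    pthread_t tid[MAXT];
    int created = 0;

    for (int i = 0; i < n; i++) {
        if (pthread_create(&tid[i], NULL, thread, &out[i]) != 0)
            break;
        created++;
    }
    for (int i = 0; i < created; i++)
        pthread_join(tid[i], NULL);
    return created == n ? global_cnt : -1;
}
```

On a first call with n threads, every `auto_seen` is 1 because each thread has its own `auto_cnt`, while the `static_seen` values are 1 through n in some order because all threads update the single `static_cnt`.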

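12.5 Synchronizing threads with semaphores

Shared variables also introduce the possibility of synchronization errors: in general, there is no way to predict whether the operating system will choose a correct ordering for the threads.

Progress graphs

A progress graph models the execution of n concurrent threads as a trajectory through an n-dimensional Cartesian space, and models each instruction as a transition from one state to another.

Semaphores

- P(s): If s is nonzero, P decrements s and returns immediately. If s is zero, P suspends the thread until s becomes nonzero.

- V(s): V increments s by 1. If any threads are blocked in a P operation waiting for s to become nonzero, V restarts exactly one of them, which then completes its P operation by decrementing s.

- Semaphore invariant: a properly initialized semaphore never has a negative value.

- Semaphore functions:

int sem_init(sem_t *sem, 0, unsigned int value); // initialize the semaphore to value

int sem_wait(sem_t *s); // P(s)

int sem_post(sem_t *s); // V(s)

Using semaphores for mutual exclusion

- Binary semaphore (mutex): associate a semaphore s, initially 1, with each shared variable, then surround the corresponding critical section with P(s) (lock) and V(s) (unlock) operations.

- Forbidden region: the states where s < 0. Because of the semaphore invariant, no feasible trajectory can enter the forbidden region, and since the forbidden region completely encloses the unsafe region, no feasible trajectory can touch any part of the unsafe region.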
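The mutual-exclusion pattern with P and V can be sketched as follows, assuming a platform that supports unnamed POSIX semaphores (sem_init is available on Linux but not implemented on some other systems). The names `run_counter`, `count`, and `cnt` are illustrative.

```c
#include <assert.h>
#include <pthread.h>
#include <semaphore.h>

static volatile long cnt;   /* shared counter */
static sem_t mutex;         /* binary semaphore that protects cnt */

static void *count(void *vargp)
{
    long niters = *(long *)vargp;
    for (long i = 0; i < niters; i++) {
        sem_wait(&mutex);   /* P(mutex): enter the critical section */
        cnt++;
        sem_post(&mutex);   /* V(mutex): leave the critical section */
    }
    return NULL;
}

/* Hypothetical helper: two threads each add niters to cnt.
 * Returns the final count, or -1 on error. */
long run_counter(long niters)
{
    assert(niters >= 0);
    pthread_t t1, t2;

    cnt = 0;
    if (sem_init(&mutex, 0, 1) != 0)    /* initial value 1 makes it a mutex */
        return -1;
    if (pthread_create(&t1, NULL, count, &niters) != 0)
        return -1;
    if (pthread_create(&t2, NULL, count, &niters) != 0) {
        pthread_join(t1, NULL);
        return -1;
    }
    pthread_join(t1, NULL);
    pthread_join(t2, NULL);
    sem_destroy(&mutex);
    return cnt;
}
```

With the P and V calls in place the result is always 2 * niters; removing them reintroduces the lost-update problem.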

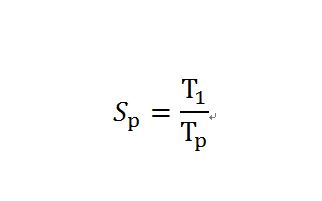

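12.6 Using threads for parallelism

The speedup of a parallel program is typically defined as:

$$S_p = \frac{T_1}{T_p}$$

where $p$ is the number of processor cores and $T_p$ is the running time on $p$ cores.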
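A parallel program of the kind this definition applies to can be sketched as below: the work is split into disjoint ranges, one per thread, and each thread writes only to its own result slot, so no mutex is needed. The names `psum`, `struct range`, and `MAXTHREADS` are assumptions made for the example, and the sketch does not measure running times.

```c
#include <assert.h>
#include <pthread.h>

#define MAXTHREADS 32

/* Each thread owns one struct range, so the threads share no writable data. */
struct range { long lo, hi, sum; };

static void *sum_range(void *vargp)
{
    struct range *r = vargp;
    long s = 0;                     /* accumulate in a local variable */
    for (long i = r->lo; i < r->hi; i++)
        s += i;
    r->sum = s;
    return NULL;
}

/* Hypothetical helper: sums 0..n-1 using nthreads peer threads.
 * Returns the sum, or -1 if a thread could not be created. */
long psum(long n, int nthreads)
{
    assert(n >= 0 && nthreads >= 1 && nthreads <= MAXTHREADS);
    pthread_t tid[MAXTHREADS];
    struct range r[MAXTHREADS];
    long chunk = n / nthreads;
    int created = 0;

    for (int i = 0; i < nthreads; i++) {
        r[i].lo = i * chunk;
        r[i].hi = (i == nthreads - 1) ? n : (i + 1) * chunk;  /* last thread takes the remainder */
        r[i].sum = 0;
        if (pthread_create(&tid[i], NULL, sum_range, &r[i]) != 0)
            break;
        created++;
    }

    long total = 0;
    for (int i = 0; i < created; i++) {
        pthread_join(tid[i], NULL);
        total += r[i].sum;
    }
    return created == nthreads ? total : -1;
}
```

Timing psum with 1 thread and with p threads on a p-core machine would give the $T_1$ and $T_p$ needed to compute the speedup.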

12.7 Other concurrency issues

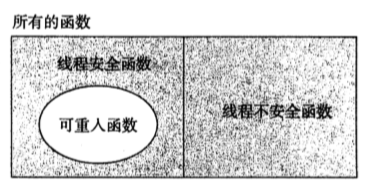

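Reentrancy

- Reentrant functions are characterized by the property that they do not reference any shared data when they are called by multiple threads.

- Reentrant functions are typically more efficient than non-reentrant thread-safe functions because they require no synchronization operations. Furthermore, the only way to convert a class 2 thread-unsafe function (one that keeps state across multiple invocations) into a thread-safe one is to rewrite it so that it is reentrant.

Set relationships among reentrant, thread-safe, and thread-unsafe functions: every function is either thread-safe or thread-unsafe, and the reentrant functions are a proper subset of the thread-safe functions.

- Sometimes we can inspect the code of a function and declare a priori that it is reentrant.

- If all function arguments are passed by value (no pointers) and all data references are to local automatic stack variables (no references to static or global variables), then the function is explicitly reentrant: we can assert its reentrancy regardless of how it is called.

- If some arguments are passed by reference, the function is implicitly reentrant: it is reentrant only if the calling threads are careful to pass pointers to nonshared data.

We always use the term reentrant to include both explicitly and implicitly reentrant functions. However, it is important to realize that reentrancy is sometimes a property of both the caller and the callee, not of the callee alone.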

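Thread safety

- We can identify four (not necessarily disjoint) classes of thread-unsafe functions:

- Class 1: functions that do not protect shared variables.

- Class 2: functions that keep state across multiple invocations.

- Class 3: functions that return a pointer to a static variable.

- Class 4: functions that call thread-unsafe functions.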

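Races

- A race occurs when the correctness of a program depends on one thread reaching point x in its control flow before another thread reaches point y.

- To eliminate a race such as passing every thread a pointer to the same loop variable, we can dynamically allocate a separate block for each integer ID and pass the thread routine a pointer to that block.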
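The fix described above can be sketched like this. The names `run_ids` and `seen` and the thread count `N` are hypothetical; the point is that each peer receives a pointer to its own heap block rather than a pointer to the main thread's loop variable.

```c
#include <assert.h>
#include <pthread.h>
#include <stdlib.h>

#define N 4

static int seen[N];     /* seen[id] counts how many threads received that id */

static void *thread(void *vargp)
{
    int myid = *(int *)vargp;   /* safe: this block belongs to this thread alone */
    free(vargp);                /* the peer frees the block once it has the value */
    assert(myid >= 0 && myid < N);
    seen[myid]++;               /* distinct ids touch distinct array elements */
    return NULL;
}

/* Hypothetical helper: returns 1 if every id 0..N-1 arrived exactly once. */
int run_ids(void)
{
    pthread_t tid[N];
    int created = 0;

    for (int i = 0; i < N; i++)
        seen[i] = 0;

    for (int i = 0; i < N; i++) {
        int *ptr = malloc(sizeof(int));     /* one private block per thread */
        if (ptr == NULL)
            break;
        *ptr = i;
        if (pthread_create(&tid[i], NULL, thread, ptr) != 0) {
            free(ptr);
            break;
        }
        created++;
    }
    for (int i = 0; i < created; i++)
        pthread_join(tid[i], NULL);

    if (created != N)
        return 0;
    for (int i = 0; i < N; i++)
        if (seen[i] != 1)
            return 0;
    return 1;
}
```

Had the main thread passed `&i` instead, a peer could read `i` after the loop had already incremented it, and two peers could end up with the same ID.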

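Deadlock

- Deadlock: a collection of threads is blocked, waiting for a condition that will never be true.

- The programmer has ordered the P and V operations incorrectly, so that the forbidden regions of two semaphores overlap.

- The overlapping forbidden regions induce a set of states called the deadlock region.

- Deadlock is an especially difficult problem because it is not always predictable.

- Mutex lock ordering rule: given a total ordering of all mutexes, a program is deadlock-free if each thread acquires its mutexes in that order and releases them in reverse order.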
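One way to apply the lock ordering rule is to order mutexes by the address of the object that contains them. The sketch below assumes that convention; `struct account`, `transfer`, and `run_transfers` are hypothetical names, and two threads deliberately transfer in opposite directions, which is the pattern that deadlocks when each thread simply locks "from" first.

```c
#include <assert.h>
#include <pthread.h>
#include <stdint.h>

struct account { pthread_mutex_t lock; long balance; };

/* Acquire both locks in a fixed total order: lower address first. */
static void lock_both(struct account *a, struct account *b)
{
    assert(a != b);
    if ((uintptr_t)a > (uintptr_t)b) { struct account *t = a; a = b; b = t; }
    pthread_mutex_lock(&a->lock);
    pthread_mutex_lock(&b->lock);
}

/* Release in the reverse of the acquisition order. */
static void unlock_both(struct account *a, struct account *b)
{
    if ((uintptr_t)a > (uintptr_t)b) { struct account *t = a; a = b; b = t; }
    pthread_mutex_unlock(&b->lock);
    pthread_mutex_unlock(&a->lock);
}

void transfer(struct account *from, struct account *to, long amount)
{
    lock_both(from, to);
    from->balance -= amount;
    to->balance += amount;
    unlock_both(from, to);
}

struct job { struct account *from, *to; int reps; };

static void *worker(void *vargp)
{
    struct job *j = vargp;
    for (int i = 0; i < j->reps; i++)
        transfer(j->from, j->to, 1);
    return NULL;
}

/* Hypothetical driver: returns the combined balance, or -1 on error. */
long run_transfers(int reps)
{
    struct account x = { PTHREAD_MUTEX_INITIALIZER, 100 };
    struct account y = { PTHREAD_MUTEX_INITIALIZER, 100 };
    struct job j1 = { &x, &y, reps }, j2 = { &y, &x, reps };
    pthread_t t1, t2;

    if (pthread_create(&t1, NULL, worker, &j1) != 0)
        return -1;
    if (pthread_create(&t2, NULL, worker, &j2) != 0) {
        pthread_join(t1, NULL);
        return -1;
    }
    pthread_join(t1, NULL);
    pthread_join(t2, NULL);
    return x.balance + y.balance;
}
```

Because both threads always lock the lower-addressed account first, neither can hold one lock while waiting for the other in the opposite order.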

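Summary:

- A concurrent program consists of a collection of logical flows that overlap in time.

- There are three different mechanisms for building concurrent programs: processes, I/O multiplexing, and threads.

- Processes are scheduled automatically by the kernel, and because they have separate virtual address spaces, they need explicit IPC mechanisms to share data. Event-driven programs create their own concurrent logical flows, which are modeled as state machines, and use I/O multiplexing to schedule the flows explicitly. Because the program runs in a single process, sharing data between flows is fast and easy. Threads are a hybrid of these approaches. Like flows based on processes, threads are scheduled automatically by the kernel. Like flows based on I/O multiplexing, threads run in the context of a single process and can therefore share data quickly and easily.

- Regardless of the concurrency mechanism, synchronizing concurrent accesses to shared data is a difficult problem. The P and V operations on semaphores were developed to help deal with it. Semaphore operations can be used to provide mutually exclusive access to shared data, and to schedule access to resources such as the bounded buffers in producer-consumer programs and the shared objects in readers-writers systems.

- Concurrency introduces other difficult issues as well. Functions that are called by threads must have a property known as thread safety.

- Reentrant functions are the proper subset of thread-safe functions that do not access any shared data. Reentrant functions are often more efficient than non-reentrant functions because they do not require any synchronization primitives. Races and deadlocks are other difficult problems that arise in concurrent programs. Races occur when programmers make incorrect assumptions about how logical flows are scheduled. Deadlocks occur when a flow is waiting for an event that will never happen.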

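Problems encountered while studying the textbook and how they were solved:

Exercise 12.1: After the parent closes the connected descriptor on line 33 of the concurrent server, the child can still use the descriptor to communicate with the client. Why?

Answer: When the parent forks the child, the child gets a copy of the connected descriptor, and the reference count in the associated file-table entry is incremented from 1 to 2. When the parent closes its copy of the descriptor, the reference count is decremented from 2 to 1. Because the kernel does not close a file until its reference count in the file table drops to 0, the child's end of the connection stays open.

Exercise 12.3: On Linux, typing Ctrl+D on standard input indicates EOF. What happens if you type Ctrl+D while the program is blocked in the call to select?

Answer: select returns, with descriptor 0 in the ready set.

Exercise 12.4: In the server, why do we reinitialize the pool.ready_set variable before every call to select?

Answer: Because pool.ready_set serves as both an input and an output argument, it is reinitialized before every call to select. On input it contains the read set; on output it contains the ready set.

Exercise 12.5: In the process-based server (Figure 1 below), we were careful to close the connected descriptor in two places: the parent and the child. In the thread-based server (Figure 2), however, we close the connected descriptor in only one place: the peer thread. Why?

Answer: Because threads run in the same process, they share the same descriptor table, so the reference count in the file table is 1 no matter how many threads use the descriptor. A single close is therefore enough.

Exercise 12.9: Let p denote the number of producers, c the number of consumers, and n the buffer size in units of items. For each of the following scenarios, indicate whether the mutex semaphore in sbuf_insert and sbuf_remove is necessary.

A. p = 1, c = 1, n > 1

B. p = 1, c = 1, n = 1

C. p > 1, c > 1, n = 1

- A: Yes. The producer and the consumer can access the buffer concurrently.

- B: No. Because n = 1, a nonempty buffer is equivalent to a full buffer. When the buffer holds an item, the producer is blocked; when the buffer is empty, the consumer is blocked. At any point in time only one thread can access the buffer, so the mutex is not needed.

- C: No, by the same argument as case B.

Exercise 12.16: Write a version of hello.c that creates and reaps n joinable peer threads, where n is a command-line argument.

- Answer: the code is as follows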

#include <stdio.h>

#include "csapp.h"

void *thread(void *vargp);

#define DEFAULT 4

int main(int argc, char* argv[]) {

int N;

if (argc > 2)

unix_error("too many param");

else if (argc == 2)

N = atoi(argv[1]);

else

N = DEFAULT;

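int i;

pthread_t *tid = Malloc(N * sizeof(pthread_t));

/* Create N joinable peer threads, keeping every thread ID */

for (i = 0; i < N; i++)

Pthread_create(&tid[i], NULL, thread, NULL);

/* Reap each peer thread */

for (i = 0; i < N; i++)

Pthread_join(tid[i], NULL);

Free(tid);

exit(0);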

}

void *thread(void *vargp) {

printf("Hello, world\n");

return NULL;

}

Exercise 12.17: Fix the bug in the program, which is supposed to sleep for 1 second and then print a string.

- Answer: the code is as follows:

#include "csapp.h"

void *thread(void *vargp);

int main()

{

pthread_t tid;

Pthread_create(&tid, NULL, thread, NULL);

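/* Bug: exit(0) here terminates the whole process before the peer thread wakes up. Pthread_exit lets the peer thread finish first. */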

Pthread_exit(NULL);

}

/* Thread routine */

void *thread(void *vargp)

{

Sleep(1);

printf("Hello, world!\n");

return NULL;

}

Problems encountered while debugging code and how they were solved

condvar.c

#include <stdio.h>

#include <stdlib.h>

#include <pthread.h>

#include <unistd.h>

#include <time.h>

typedef struct _msg{

struct _msg * next;

int num;

} msg;

msg *head;

pthread_cond_t has_product = PTHREAD_COND_INITIALIZER;

pthread_mutex_t lock = PTHREAD_MUTEX_INITIALIZER;

void *consumer ( void * p )

{

msg * mp;

for( ;; ) {

pthread_mutex_lock( &lock );

while ( head == NULL )

pthread_cond_wait( &has_product, &lock );

mp = head;

head = mp->next;

pthread_mutex_unlock ( &lock );

printf( "Consume %d tid: %lu\n", mp->num, (unsigned long) pthread_self());

free( mp );

sleep( rand() % 5 );

}

}

void *producer ( void * p )

{

msg * mp;

for ( ;; ) {

mp = malloc( sizeof(msg) );

pthread_mutex_lock( &lock );

mp->next = head;

mp->num = rand() % 1000;

head = mp;

printf( "Produce %d tid: %lu\n", mp->num, (unsigned long) pthread_self());

pthread_mutex_unlock( &lock );

pthread_cond_signal( &has_product );

sleep ( rand() % 5);

}

}

int main(int argc, char *argv[] )

{

pthread_t pid1, cid1;

pthread_t pid2, cid2;

srand(time(NULL));

pthread_create( &pid1, NULL, producer, NULL);

pthread_create( &pid2, NULL, producer, NULL);

pthread_create( &cid1, NULL, consumer, NULL);

pthread_create( &cid2, NULL, consumer, NULL);

pthread_join( pid1, NULL );

pthread_join( pid2, NULL );

pthread_join( cid1, NULL );

pthread_join( cid2, NULL );

return 0;

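}

Output

The mutex protects the shared resource, the wait function waits for the condition to be signaled, and the signal function announces it. pthread_cond_wait releases the mutex while it waits and reacquires it before returning, so the producers and consumers do not deadlock.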

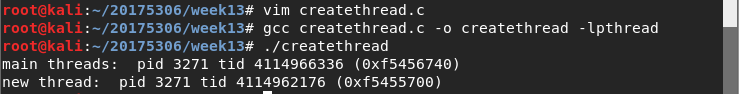

createthread.c

#include <stdio.h>

#include <string.h>

#include <stdlib.h>

#include <pthread.h>

#include <unistd.h>

pthread_t ntid;

void printids( const char *s )

{

pid_t pid;

pthread_t tid;

pid = getpid();

tid = pthread_self();

printf("%s pid %u tid %u (0x%x) \n", s , ( unsigned int ) pid,

( unsigned int ) tid, (unsigned int ) tid);

}

void *thr_fn( void * arg )

{

printids( arg );

return NULL;

}

int main( void )

{

int err;

err = pthread_create( &ntid, NULL, thr_fn, "new thread: " );

if ( err != 0 ){

fprintf( stderr, "can't create thread: %s\n", strerror( err ) );

exit( 1 );

}

printids( "main threads: " );

sleep(1);

return 0;

}

Output

Prints the process ID and the thread IDs.

share.c

#include <stdio.h>

#include <stdlib.h>

#include <pthread.h>

#include <unistd.h>

char buf[BUFSIZ];

void *thr_fn1( void *arg )

{

printf("thread 1 returning %d\n", getpid());

printf("pwd:%s\n", getcwd(buf, BUFSIZ));

*(int *)arg = 11;

return (void *) 1;

}

void *thr_fn2( void *arg )

{

printf("thread 2 returning %d\n", getpid());

printf("pwd:%s\n", getcwd(buf, BUFSIZ));

pthread_exit( (void *) 2 );

}

void *thr_fn3( void *arg )

{

while( 1 ){

printf("thread 3 writing %d\n", getpid());

printf("pwd:%s\n", getcwd(buf, BUFSIZ));

sleep( 1 );

}

}

int n = 0;

int main( void )

{

pthread_t tid;

void *tret;

pthread_create( &tid, NULL, thr_fn1, &n);

pthread_join( tid, &tret );

printf("n= %d\n", n );

printf("thread 1 exit code %d\n", (int)(long) tret );

pthread_create( &tid, NULL, thr_fn2, NULL);

pthread_join( tid, &tret );

printf("thread 2 exit code %d\n", (int)(long) tret );

pthread_create( &tid, NULL, thr_fn3, NULL);

sleep( 3 );

pthread_cancel(tid);

pthread_join( tid, &tret );

printf("thread 3 exit code %d\n", (int)(long) tret );

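}

Output

The program retrieves each thread's termination status. thr_fn1, thr_fn2 and thr_fn3 correspond to the three ways a thread can terminate: returning from the thread function, calling pthread_exit to terminate itself, and being cancelled by another thread in the same process through pthread_cancel.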

countwithmutex.c

#include <stdio.h>

#include <stdlib.h>

#include <pthread.h>

#define NLOOP 5000

int counter;

pthread_mutex_t counter_mutex = PTHREAD_MUTEX_INITIALIZER;

void *doit( void * );

int main(int argc, char **argv)

{

pthread_t tidA, tidB;

pthread_create( &tidA ,NULL, &doit, NULL );

pthread_create( &tidB ,NULL, &doit, NULL );

pthread_join( tidA, NULL );

pthread_join( tidB, NULL );

return 0;

}

void * doit( void * vptr)

{

int i, val;

for ( i=0; i<NLOOP; i++ ) {

pthread_mutex_lock( &counter_mutex );

val = counter++;

printf("%x: %d \n", (unsigned int) pthread_self(), val + 1);

counter = val + 1;

pthread_mutex_unlock( &counter_mutex );

}

return NULL;

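}

Output

With a mutex, the thread that holds the lock can complete its read-modify-write sequence and then release the lock to the other threads. A thread that does not hold the lock has to wait and cannot access the shared data.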

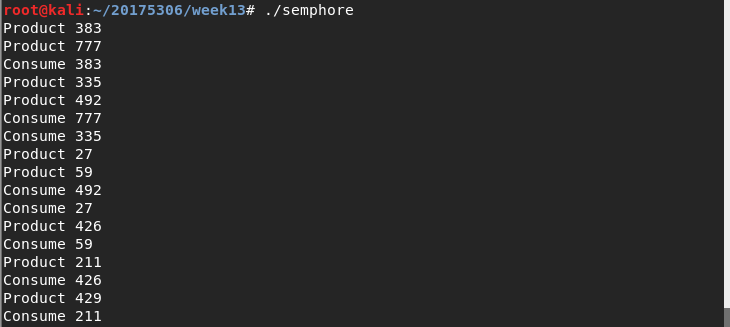

semphore.c

#include <stdio.h>

#include <pthread.h>

#include <stdlib.h>

#include <semaphore.h>

#include <unistd.h>

#define NUM 5

int queue[NUM];

sem_t blank_number, product_number;

void *producer ( void * arg )

{

static int p = 0;

for ( ;; ) {

sem_wait( &blank_number );

queue[p] = rand() % 1000;

printf("Product %d \n", queue[p]);

p = (p+1) % NUM;

sleep ( rand() % 5);

sem_post( &product_number );

}

}

void *consumer ( void * arg )

{

static int c = 0;

for( ;; ) {

sem_wait( &product_number );

printf("Consume %d\n", queue[c]);

c = (c+1) % NUM;

sleep( rand() % 5 );

sem_post( &blank_number );

}

}

int main(int argc, char *argv[] )

{

pthread_t pid, cid;

sem_init( &blank_number, 0, NUM );

sem_init( &product_number, 0, 0);

pthread_create( &pid, NULL, producer, NULL);

pthread_create( &cid, NULL, consumer, NULL);

pthread_join( pid, NULL );

pthread_join( cid, NULL );

sem_destroy( &blank_number );

sem_destroy( &product_number );

return 0;

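}

Output:

A semaphore variable has type sem_t. sem_init() initializes a semaphore variable: the value argument gives the number of available resources, and a pshared argument of 0 means the semaphore is used to synchronize threads within a single process.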

count.c

#include <stdio.h>

#include <stdlib.h>

#include <pthread.h>

#define NLOOP 5000

int counter;

void *doit( void * );

int main(int argc, char **argv)

{

pthread_t tidA, tidB;

pthread_create( &tidA ,NULL, &doit, NULL );

pthread_create( &tidB ,NULL, &doit, NULL );

pthread_join( tidA, NULL );

pthread_join( tidB, NULL );

return 0;

}

void * doit( void * vptr)

{

int i, val;

for ( i=0; i<NLOOP; i++ ) {

val = counter++;

printf("%x: %d \n", (unsigned int) pthread_self(), val + 1);

counter = val + 1;

}

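return NULL;

}

Output:

- This program creates two threads that each increment the same shared variable without any locking. Each thread adds 1 to counter 5000 times, but because the threads can overwrite each other's updates, the final value can fall short of 10000 and varies from run to run (5000 in the run recorded here).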