Scenarios:

What GlusterFS does: it lets multiple hosts share data (that is, it provides distributed file storage).

When k8s is deployed across multiple nodes:

Scenario 1: every time a Pod is updated or restarted, it may be scheduled onto a different node, yet on each start it needs to read the file written by its previous run. GlusterFS comes in handy here.

Scenario 2: logs generated by Pods need to be retained and stored centrally, and GlusterFS is a good fit for this kind of centralized storage.

These two cases show why Pods need to interact with GlusterFS. Here is the setup and the configuration:

K8S hosts (all running CentOS Linux release 7.6.1810):

| IP | Role | k8s version | Resources |
| --- | --- | --- | --- |
| 192.168.133.128 | master | v1.14.2 | 2 CPU cores, 2 GB RAM |
| 192.168.133.129 | node2 | v1.14.2 | 2 CPU cores, 2 GB RAM |
| 192.168.133.130 | node3 | v1.14.2 | 2 CPU cores, 2 GB RAM |

GlusterFS host:

| IP | Role | GlusterFS version | Resources |
| --- | --- | --- | --- |
| 192.168.133.131 | single-node GlusterFS server | glusterfs 6.6 | 2 CPU cores, 2 GB RAM |

Building the K8S cluster: see https://www.kubernetes.org.cn/5462.html

Installing GlusterFS:

Installing GlusterFS itself is not covered here; this post starts from creating the GlusterFS Endpoints object in K8S.

    [root@master glusterfs]# cat glusterfs-endpoints.json
    apiVersion: v1                # API version
    kind: Endpoints               # object type; copy as-is
    metadata:
      name: glusterfs-volume      # name of the Endpoints object; pick any valid name
    subsets:
    - addresses:
      - ip: 192.168.133.131       # IP of the GlusterFS server
      ports:
      - port: 20                  # required by the Endpoints schema but not used by the GlusterFS plugin; any valid port works
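Kubernetes object names must be lowercase RFC 1123 names, which is why kubectl get ep later lists this object as glusterfs-volume. Also, although the file is named .json, its content is YAML, which kubectl accepts. As a hedged illustration, the Python sketch below validates the name and builds the same Endpoints object as a dict, printing it as real JSON. The helpers is_valid_name and glusterfs_endpoints are hypothetical, not part of any Kubernetes client library.

```python
import json
import re

# Object names such as this Endpoints name must be lowercase RFC 1123
# subdomains: lowercase alphanumerics, '-' and '.', at most 253 characters.
_DNS1123_SUBDOMAIN = re.compile(
    r"[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*"
)

def is_valid_name(name):
    """Hypothetical helper: True if name is a valid lowercase object name."""
    return len(name) <= 253 and _DNS1123_SUBDOMAIN.fullmatch(name) is not None

def glusterfs_endpoints(name, ips, port=20):
    """Hypothetical helper: the same Endpoints object as the YAML above.
    The port is required by the schema; the GlusterFS plugin does not use it."""
    if not is_valid_name(name):
        raise ValueError(f"invalid object name: {name!r}")
    return {
        "apiVersion": "v1",
        "kind": "Endpoints",
        "metadata": {"name": name},
        "subsets": [{
            "addresses": [{"ip": ip} for ip in ips],
            "ports": [{"port": port}],
        }],
    }

if __name__ == "__main__":
    manifest = glusterfs_endpoints("glusterfs-volume", ["192.168.133.131"])
    # Redirect this output into glusterfs-endpoints.json for a genuinely JSON file.
    print(json.dumps(manifest, indent=2))
```

A name such as GlusterFS-Volume would be rejected by is_valid_name, just as the API server rejects uppercase object names.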

#########################################################

    [root@master glusterfs]# cat pv-demo.yaml
    apiVersion: v1
    kind: PersistentVolume          # object type
    metadata:
      name: tong-gluster            # PV name; pick any name
    spec:
      capacity:                     # capacity attribute
        storage: 7G                 # capacity size
      accessModes:                  # access mode, one of three:
      - ReadWriteMany               #   ReadWriteMany (RWX): read-write, mountable by many nodes
                                    #   ReadOnlyMany (ROX): read-only, mountable by many nodes
                                    #   ReadWriteOnce (RWO): read-write, mountable by a single node
      persistentVolumeReclaimPolicy: Recycle   # what happens once the claim is released:
                                    #   Recycle: the data is scrubbed so the PV can be reused
                                    #   Delete: the volume is deleted
                                    #   Retain: the volume is kept for manual reclamation
                                    #   (per the Kubernetes docs, only NFS and HostPath support Recycle)
      glusterfs:
        endpoints: "glusterfs-volume"   # the Endpoints object created above
        path: "project"                 # the GlusterFS *volume name*, not a directory; if it is wrong the Pod cannot mount it (many posts never explain this ^_^)
        readOnly: false                 # mount read-write
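The two GlusterFS fields above are the ones most easily gotten wrong. As a hedged sketch (check_gluster_pv is a hypothetical offline lint, not a kubectl feature), the Python below checks that spec.glusterfs.endpoints names an existing Endpoints object and that spec.glusterfs.path is not empty. Whether "project" really is a GlusterFS volume can only be confirmed on the GlusterFS host, for example with gluster volume list.

```python
def check_gluster_pv(pv, endpoints_names):
    """Hypothetical lint: return a list of problems found in a GlusterFS PV."""
    gluster = pv.get("spec", {}).get("glusterfs")
    if gluster is None:
        return ["spec.glusterfs is missing"]
    problems = []
    # spec.glusterfs.endpoints must name an existing Endpoints object.
    if gluster.get("endpoints") not in endpoints_names:
        problems.append(f"Endpoints {gluster.get('endpoints')!r} not found")
    # spec.glusterfs.path is the GlusterFS volume name (e.g. "project").
    if not gluster.get("path"):
        problems.append("spec.glusterfs.path (GlusterFS volume name) is empty")
    return problems

# The PV from pv-demo.yaml as a Python dict.
pv = {
    "apiVersion": "v1",
    "kind": "PersistentVolume",
    "metadata": {"name": "tong-gluster"},
    "spec": {
        "capacity": {"storage": "7G"},
        "accessModes": ["ReadWriteMany"],
        "persistentVolumeReclaimPolicy": "Recycle",
        "glusterfs": {"endpoints": "glusterfs-volume", "path": "project",
                      "readOnly": False},
    },
}

if __name__ == "__main__":
    print(check_gluster_pv(pv, {"glusterfs-volume"}))  # → []
    print(check_gluster_pv(pv, {"some-other-name"}))   # reports the missing Endpoints
```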

################################################################

    [root@master glusterfs]# cat pvc-demo.yaml
    kind: PersistentVolumeClaim     # object type
    apiVersion: v1
    metadata:
      name: xian-glusterfs          # PVC name; pick any name
    spec:
      accessModes:
      - ReadWriteMany               # access mode: read-write, many nodes
      resources:
        requests:
          storage: 2G               # requested size; usually equal to or smaller than the PV created above.
                                    # The PVC is bound automatically to an existing PV that satisfies the request;
                                    # if none matches exactly, the closest fit is used, and the claim gets that PV's full capacity.
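The "closest match" rule can be pictured with the simplified Python sketch below. It is an assumption-laden model, not the real Kubernetes binder (which also considers storage classes, label selectors and volume modes): among Available PVs that offer every requested access mode and at least the requested size, it picks the smallest. parse_quantity handles only a few common quantity suffixes, and pick_pv is a hypothetical name.

```python
import re

# A simplified subset of Kubernetes quantity suffixes:
# decimal (k, M, G, T) and binary (Ki, Mi, Gi, Ti).
_SUFFIX = {"": 1, "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,
           "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}

def parse_quantity(q):
    """Convert an integer quantity such as '7G' or '2Gi' to bytes."""
    m = re.fullmatch(r"(\d+)([A-Za-z]*)", q)
    if m is None or m.group(2) not in _SUFFIX:
        raise ValueError(f"unsupported quantity: {q!r}")
    return int(m.group(1)) * _SUFFIX[m.group(2)]

def pick_pv(claim, pvs):
    """Simplified binding: among Available PVs that offer every requested access
    mode and at least the requested size, return the smallest (or None)."""
    want = parse_quantity(claim["storage"])
    candidates = [
        pv for pv in pvs
        if pv["phase"] == "Available"
        and set(claim["accessModes"]) <= set(pv["accessModes"])
        and parse_quantity(pv["storage"]) >= want
    ]
    return min(candidates, key=lambda pv: parse_quantity(pv["storage"]), default=None)

# Flattened stand-ins for the PV and PVC in this post (not real API objects).
pvs = [{"name": "tong-gluster", "storage": "7G",
        "accessModes": ["ReadWriteMany"], "phase": "Available"}]
claim = {"storage": "2G", "accessModes": ["ReadWriteMany"]}

if __name__ == "__main__":
    print(pick_pv(claim, pvs)["name"])  # → tong-gluster
```

Because the whole PV is handed to the claim, kubectl get pvc later reports 7G for a 2G request.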

###################################################################

With the groundwork done, create the following Deployment, whose Pods mount the PVC:

    [root@master glusterfs]# cat nginx-deployment.yaml
    apiVersion: apps/v1
    kind: Deployment                # object type
    metadata:
      name: web02-deployment        # Deployment name; pick any name
      labels:
        web02: nginx                # label; pick any key/value
    spec:
      replicas: 2                   # number of Pods to run
      selector:
        matchLabels:
          web02: nginx              # required; must match the Pod template labels below (using one label everywhere keeps things easy to tell apart)
      template:
        metadata:
          labels:
            web02: nginx            # Pod label
        spec:
          containers:
          - name: nginx02           # container name
            image: nginx            # image name
            ports:
            - containerPort: 80     # port the container listens on
            volumeMounts:           # mounts
            - name: www             # must equal a name under volumes below, which is how the volume is referenced
              mountPath: /usr/share/nginx/html   # path inside the container where the volume is mounted
          volumes:                  # volume declarations
          - name: www               # referenced by volumeMounts above
            persistentVolumeClaim:  # use a PersistentVolumeClaim
              claimName: xian-glusterfs   # the PVC created above
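The Deployment relies on three name matches: the selector must match the template labels, each volumeMounts name must match a volumes entry, and claimName must name an existing PVC. The first two are rejected by the API server when they fail; a missing PVC only leaves the Pods Pending. A hedged sketch of an offline check in Python (check_deployment is hypothetical, not a kubectl feature):

```python
def check_deployment(dep, pvc_names):
    """Hypothetical lint: return a list of problems found in a Deployment dict."""
    problems = []
    spec = dep["spec"]
    selector = spec["selector"]["matchLabels"]
    pod_labels = spec["template"]["metadata"]["labels"]
    # Every selector key/value must appear in the Pod template labels.
    if any(pod_labels.get(k) != v for k, v in selector.items()):
        problems.append("selector.matchLabels does not match template labels")
    pod_spec = spec["template"]["spec"]
    volumes = {v["name"]: v for v in pod_spec.get("volumes", [])}
    # Each volumeMounts[].name must equal some volumes[].name.
    for c in pod_spec["containers"]:
        for vm in c.get("volumeMounts", []):
            if vm["name"] not in volumes:
                problems.append(f"container {c['name']!r}: no volume named {vm['name']!r}")
    # A claimName pointing at a missing PVC leaves the Pods Pending.
    for name, vol in volumes.items():
        claim = vol.get("persistentVolumeClaim", {}).get("claimName")
        if claim is not None and claim not in pvc_names:
            problems.append(f"volume {name!r}: PVC {claim!r} not found")
    return problems

# nginx-deployment.yaml as a Python dict.
deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web02-deployment", "labels": {"web02": "nginx"}},
    "spec": {
        "replicas": 2,
        "selector": {"matchLabels": {"web02": "nginx"}},
        "template": {
            "metadata": {"labels": {"web02": "nginx"}},
            "spec": {
                "containers": [{
                    "name": "nginx02",
                    "image": "nginx",
                    "ports": [{"containerPort": 80}],
                    "volumeMounts": [{"name": "www", "mountPath": "/usr/share/nginx/html"}],
                }],
                "volumes": [{"name": "www",
                             "persistentVolumeClaim": {"claimName": "xian-glusterfs"}}],
            },
        },
    },
}

if __name__ == "__main__":
    print(check_deployment(deployment, {"xian-glusterfs"}))  # → []
```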

#################################################################

With the files above in place, create them with kubectl, in this order:

1. kubectl create -f glusterfs-endpoints.json
2. kubectl create -f pv-demo.yaml
3. kubectl create -f pvc-demo.yaml
4. kubectl create -f nginx-deployment.yaml
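The order follows the references between the objects: the PV names the Endpoints object, the PVC binds to a matching PV (a PVC created first would simply stay Pending until one appears), and the Deployment's Pods mount the PVC. A hedged Python sketch that runs the same four commands in order and stops at the first failure; it only prints them unless dry_run is set to False, and the function names are hypothetical.

```python
import subprocess

# Creation order from the list above.
MANIFESTS = [
    "glusterfs-endpoints.json",  # Endpoints: where the GlusterFS server is
    "pv-demo.yaml",              # PV: refers to the Endpoints by name
    "pvc-demo.yaml",             # PVC: binds to a matching PV
    "nginx-deployment.yaml",     # Deployment: its Pods mount the PVC
]

def create_commands(files=MANIFESTS):
    """Build one 'kubectl create -f FILE' command per manifest."""
    return [["kubectl", "create", "-f", f] for f in files]

def create_all(dry_run=True):
    """Print each command; when dry_run is False, also run it."""
    for cmd in create_commands():
        print(" ".join(cmd))
        if not dry_run:
            # check=True raises CalledProcessError on a non-zero exit,
            # so later steps are skipped once one fails.
            subprocess.run(cmd, check=True)

if __name__ == "__main__":
    create_all(dry_run=True)
```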

Once everything is created, check how it is running:

    [root@master glusterfs]# kubectl get ep -o wide
    NAME               ENDPOINTS                                            AGE
    glusterfs-volume   192.168.133.131:20                                   93m
    httpd              10.244.1.43:8080,10.244.2.33:8080,10.244.2.36:8080   27h
    kubernetes         192.168.133.128:6443                                 4d13h
    nginx              10.244.1.41:80,10.244.2.32:80,10.244.2.37:80         2d3h
    web02              10.244.1.48:80,10.244.2.41:80                        82m

glusterfs-volume is the Endpoints object we created; web02 lists the two Pods we created (these endpoints belong to the web02 Service set up later in this post).

    [root@master glusterfs]# kubectl get pods -l web02=nginx
    NAME                                READY   STATUS    RESTARTS   AGE
    web02-deployment-6f4f996589-4gw4z   1/1     Running   0          88m
    web02-deployment-6f4f996589-m28nh   1/1     Running   0          88m
    [root@master glusterfs]# kubectl get pv
    NAME           CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                    STORAGECLASS   REASON   AGE
    tong-gluster   7G         RWX            Recycle          Bound    default/xian-glusterfs                           91m
    [root@master glusterfs]# kubectl get pvc
    NAME             STATUS   VOLUME         CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    xian-glusterfs   Bound    tong-gluster   7G         RWX                           93m
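For scripting the same check, kubectl get pvc xian-glusterfs -o json prints the claim as JSON (kubectl get pvc xian-glusterfs -o jsonpath='{.status.phase}' prints just the phase). A hedged Python sketch that reads that JSON; the sample below keeps only the fields used, filled in from the table above, while real output contains many more.

```python
import json

def claim_status(pvc_json):
    """Summarize phase, bound volume and granted capacity of a PVC from its JSON."""
    pvc = json.loads(pvc_json)
    status = pvc.get("status", {})
    return {
        "phase": status.get("phase"),
        "volume": pvc.get("spec", {}).get("volumeName"),
        "capacity": status.get("capacity", {}).get("storage"),
    }

# Trimmed-down sample matching the kubectl get pvc output above.
sample = json.dumps({
    "kind": "PersistentVolumeClaim",
    "metadata": {"name": "xian-glusterfs"},
    "spec": {"volumeName": "tong-gluster"},
    "status": {"phase": "Bound", "capacity": {"storage": "7G"}},
})

if __name__ == "__main__":
    print(claim_status(sample))
```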

Everything has been created. Next, create a Service so that external hosts can access the Pods:

    [root@master glusterfs]# cat nginx_service.yaml
    apiVersion: v1
    kind: Service                   # object type
    metadata:
      labels:
        web02: nginx                # label
      name: web02                   # Service name
      namespace: default
    spec:
      ports:
      - port: 80                    # Service port inside the cluster; reachable from every node, not from the external network
        targetPort: 80              # port the Pods expose; the backend that actually serves requests
        nodePort: 30099             # port opened on each node, serving clients on the external network
      type: NodePort                # must be NodePort for the nodePort above to take effect
      selector:
        web02: nginx                # associates Pods labeled web02: nginx, the label given to the Pods in the Deployment above
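How port, targetPort, nodePort and selector fit together can be shown with a simplified Python model. It is not kube-proxy's implementation, only an illustration: a client hits nodeIP:nodePort (or clusterIP:port), and the request is forwarded to podIP:targetPort of every Pod whose labels contain all selector pairs. The Pod IPs come from kubectl describe svc web02 output in this post; the third Pod and its app: httpd label are a hypothetical non-matching example. The 30000-32767 range is the default of the kube-apiserver --service-node-port-range flag.

```python
# Default --service-node-port-range of kube-apiserver: 30000-32767.
DEFAULT_NODEPORT_RANGE = range(30000, 32768)

def select_pods(selector, pods):
    """Pods whose labels contain every selector key/value pair."""
    return [p for p in pods
            if all(p["labels"].get(k) == v for k, v in selector.items())]

def backends(spec, pods):
    """Return the podIP:targetPort list a Service spec would route to."""
    port = spec["ports"][0]
    if spec.get("type") == "NodePort" and port["nodePort"] not in DEFAULT_NODEPORT_RANGE:
        raise ValueError(f"nodePort {port['nodePort']} is outside 30000-32767")
    return [f"{p['ip']}:{port['targetPort']}"
            for p in select_pods(spec["selector"], pods)]

# spec mirrors the spec section of nginx_service.yaml.
spec = {"type": "NodePort", "selector": {"web02": "nginx"},
        "ports": [{"port": 80, "targetPort": 80, "nodePort": 30099}]}
pods = [
    {"ip": "10.244.1.48", "labels": {"web02": "nginx"}},
    {"ip": "10.244.2.41", "labels": {"web02": "nginx"}},
    {"ip": "10.244.1.43", "labels": {"app": "httpd"}},  # hypothetical, not selected
]

if __name__ == "__main__":
    print(backends(spec, pods))  # → ['10.244.1.48:80', '10.244.2.41:80']
```

The result matches the Endpoints line that kubectl describe svc web02 reports for this Service.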

##################################################################

Create the Service, which is associated with the two Pods created above:

    kubectl create -f nginx_service.yaml

Use the following commands to inspect the Service:

    [root@master glusterfs]# kubectl get svc
    NAME         TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
    httpd        NodePort    10.1.26.50     <none>        80:30089/TCP   28h
    kubernetes   ClusterIP   10.1.0.1       <none>        443/TCP        4d14h
    nginx        NodePort    10.1.241.95    <none>        88:30088/TCP   2d4h
    web02        NodePort    10.1.168.227   <none>        80:30099/TCP   94m
    [root@master glusterfs]# kubectl describe svc web02
    Name:                     web02
    Namespace:                default
    Labels:                   web02=nginx
    Annotations:              <none>
    Selector:                 web02=nginx
    Type:                     NodePort
    IP:                       10.1.168.227
    Port:                     <unset>  80/TCP
    TargetPort:               80/TCP
    NodePort:                 <unset>  30099/TCP
    Endpoints:                10.244.1.48:80,10.244.2.41:80
    Session Affinity:         None
    External Traffic Policy:  Cluster
    Events:                   <none>

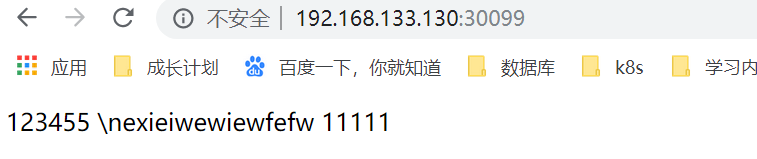

The deployment is complete, so let's test access to the Service. The page in the screenshot is one I edited myself on the GlusterFS side; it would not be served if the volume had not been mounted into the Pods.
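A NodePort Service listens on the same port on every node running kube-proxy, so the page should be reachable at port 30099 on any of the three K8S hosts, and both Pods serve the same file because they share the GlusterFS volume. A hedged Python sketch of that test: it prints the URLs, and fetch(url) (a hypothetical helper built on urllib) can be called from a machine that can reach the nodes.

```python
import urllib.request

# Node IPs from the host table in this post; 30099 is the nodePort of web02.
NODE_IPS = ["192.168.133.128", "192.168.133.129", "192.168.133.130"]
NODE_PORT = 30099

def nodeport_urls(node_ips=NODE_IPS, port=NODE_PORT):
    """One URL per node, since the nodePort is opened on every node."""
    return [f"http://{ip}:{port}/" for ip in node_ips]

def fetch(url, timeout=5):
    """Return (HTTP status, body text); raises on network errors."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.status, resp.read().decode("utf-8", errors="replace")

if __name__ == "__main__":
    # Printing only; call fetch(url) where the nodes are reachable.
    for url in nodeport_urls():
        print(url)
```

Getting the same edited page back from every URL is a quick sign that the Pods are serving content from the shared GlusterFS volume.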