
In-Depth Analysis of Cross-Host Container Networking

With Docker's default configuration, containers on different hosts cannot communicate with each other by IP address.

As a result, the community has produced many solutions to the problem of cross-host container communication.

Flannel

Flannel supports three backend implementations:

- VXLAN

- host-gw

- UDP

Let's start with UDP mode as an example.

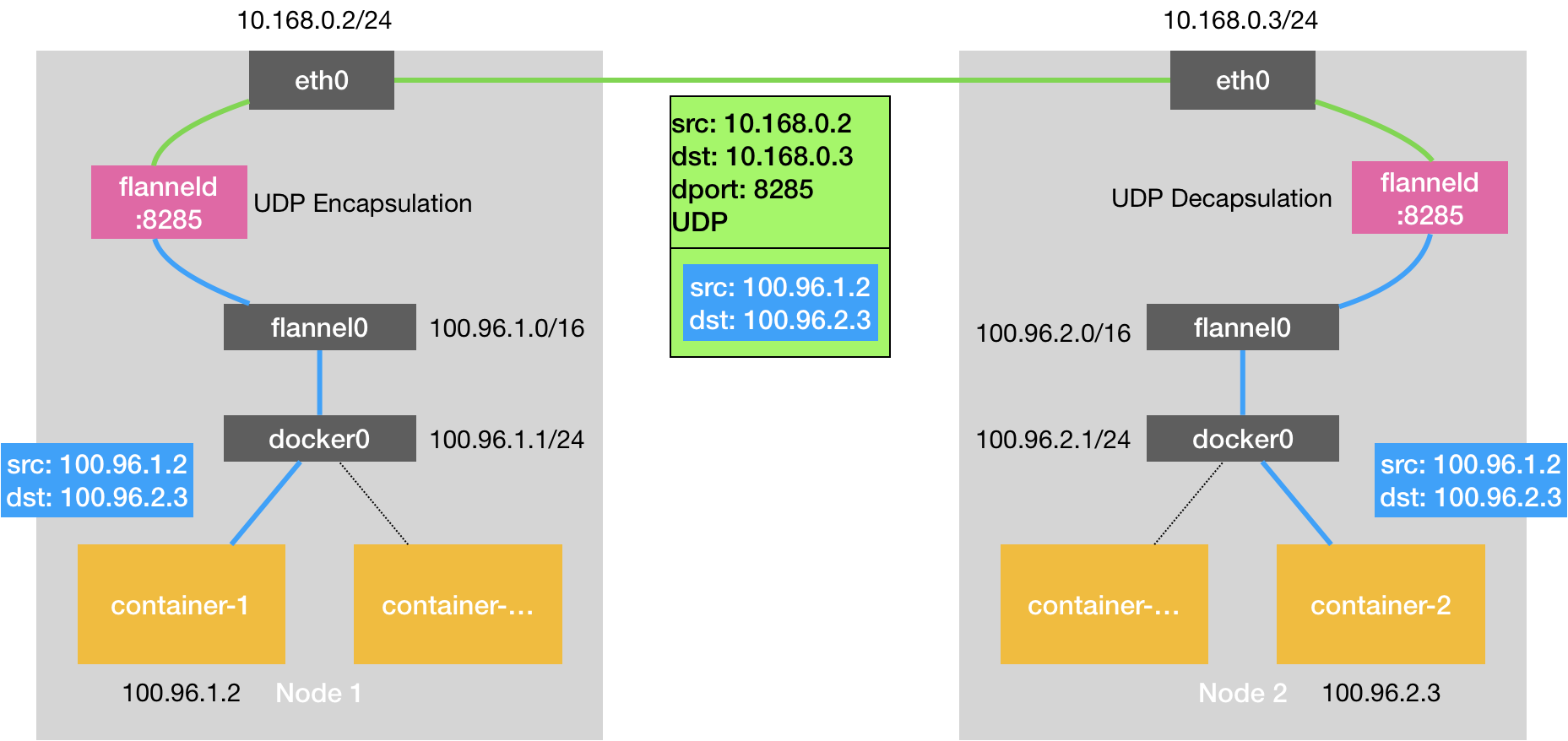

How Flannel UDP mode works

Suppose there are two hosts:

- Node-1: runs container-1, whose IP address is 100.96.1.2; the docker0 bridge on this host has the address 100.96.1.1/24.

- Node-2: runs container-2, whose IP address is 100.96.2.3; the docker0 bridge on this host has the address 100.96.2.1/24.

If container-1 wants to communicate with container-2, the process in container-1 sends an IP packet whose destination address is 100.96.2.3. This packet leaves the container through docker0 and reaches the host, and Node-1 then uses its routing table to decide the next hop for the packet.

The relevant routing rules were added to Node-1 in advance by Flannel:

# On Node-1

$ ip route

default via 10.168.0.1 dev eth0

100.96.0.0/16 dev flannel0 proto kernel scope link src 100.96.1.0

100.96.1.0/24 dev docker0 proto kernel scope link src 100.96.1.1

10.168.0.0/24 dev eth0 proto kernel scope link src 10.168.0.2

According to this routing table, a packet destined for 100.96.2.3 does not match the local 100.96.1.0/24 route but does match 100.96.0.0/16, so it is sent to the local flannel0 interface.
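The routing decision above is a longest-prefix match. A minimal Python sketch, using only the standard `ipaddress` module and the routes copied from the output above (the helper name `lookup_device` is hypothetical), shows why 100.96.2.3 ends up on flannel0 while a local container address stays on docker0:

```python
import ipaddress

# Node-1's routing table from the `ip route` output above: (prefix, device).
# The default route is represented as 0.0.0.0/0.
NODE1_ROUTES = [
    ("0.0.0.0/0", "eth0"),
    ("100.96.0.0/16", "flannel0"),
    ("100.96.1.0/24", "docker0"),
    ("10.168.0.0/24", "eth0"),
]


def lookup_device(routes, dst):
    """Return the output device of the most specific route that matches dst."""
    addr = ipaddress.ip_address(dst)
    best = None
    for prefix, dev in routes:
        net = ipaddress.ip_network(prefix)
        # Keep the matching route with the longest prefix.
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, dev)
    return best[1] if best else None


print(lookup_device(NODE1_ROUTES, "100.96.2.3"))  # flannel0
print(lookup_device(NODE1_ROUTES, "100.96.1.2"))  # docker0
```

The kernel uses far more efficient data structures for this lookup; the linear scan here only illustrates the matching rule.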

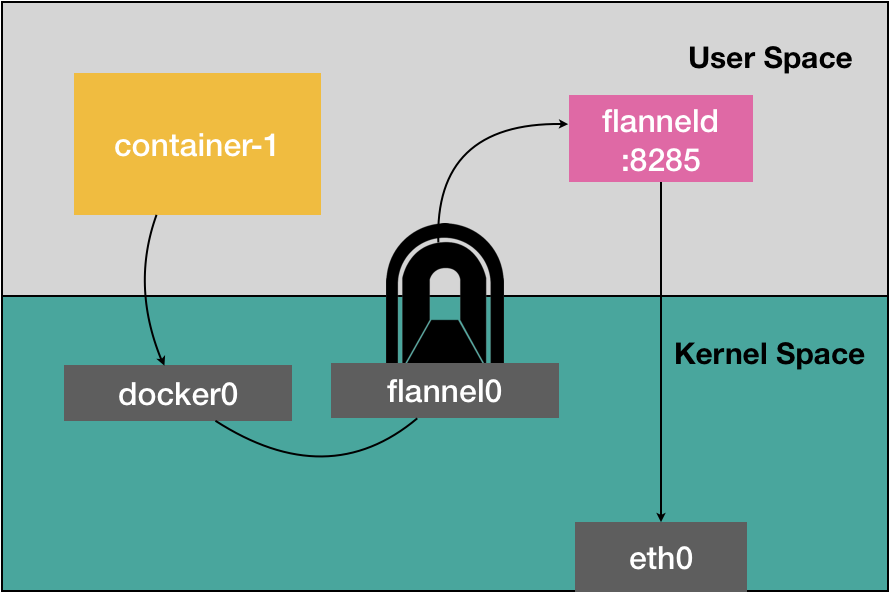

The flannel0 interface is a TUN device (a tunnel device).

In Linux, a TUN device is a virtual network device that works at the network layer. Its job is to pass IP packets between the kernel and a user-space application process, namely the process that created the TUN device, which in our case is the Flannel process on the host.
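For illustration, this is roughly how a user-space process can create a TUN device on Linux and then read IP packets from it. It is a generic Python sketch, not Flannel's actual code (flanneld is written in Go); `tun_ifreq` and `open_tun` are hypothetical helper names, and actually calling `open_tun` requires root privileges:

```python
import fcntl
import os
import struct

# Constants from the Linux header <linux/if_tun.h>.
TUNSETIFF = 0x400454CA
IFF_TUN = 0x0001    # Layer 3 device: reads and writes carry raw IP packets
IFF_NO_PI = 0x1000  # no extra 4-byte packet-information prefix


def tun_ifreq(name):
    """Pack the leading fields of struct ifreq: interface name and flags."""
    return struct.pack("16sH", name.encode(), IFF_TUN | IFF_NO_PI)


def open_tun(name):
    """Create or attach a TUN device; needs CAP_NET_ADMIN on Linux."""
    fd = os.open("/dev/net/tun", os.O_RDWR)
    fcntl.ioctl(fd, TUNSETIFF, tun_ifreq(name))
    # From now on, os.read(fd, 65535) returns one IP packet per call,
    # and os.write(fd, packet) injects an IP packet into the kernel.
    return fd
```

Reading from the returned descriptor is how a packet routed to the device crosses from the kernel into user space.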

Therefore, the IP packet that container-1 sent to 100.96.2.3 is delivered to the Flannel process, which runs on the host under the name flanneld.

Based on the packet's destination IP, flanneld must find the IP address of Node-2 so that it can keep forwarding the packet.
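A packet read from flannel0 is a bare IPv4 packet, so the destination address that flanneld needs sits at a fixed offset (bytes 16 to 19) of the IPv4 header. A small Python sketch with a hand-built header (the helper `ipv4_dst` is hypothetical):

```python
import socket
import struct


def ipv4_dst(packet: bytes) -> str:
    """Return the destination address of a raw IPv4 packet (bytes 16-19)."""
    if packet[0] >> 4 != 4:
        raise ValueError("not an IPv4 packet")
    return socket.inet_ntoa(packet[16:20])


# Hypothetical 20-byte IPv4 header from container-1 to container-2
# (checksum left as 0; only the address fields matter here).
header = struct.pack(
    "!BBHHHBBH4s4s",
    0x45, 0, 20, 0, 0, 64, 17, 0,  # version/IHL, TOS, length, id, frag, TTL, proto, checksum
    socket.inet_aton("100.96.1.2"),  # source: container-1
    socket.inet_aton("100.96.2.3"),  # destination: container-2
)
print(ipv4_dst(header))  # 100.96.2.3
```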

This relies on another very important concept in Flannel: the subnet.

In Flannel, each host is assigned a subnet, and all containers on that host take their addresses from it; the docker0 bridge on the host is also given an address in that subnet. For example, the docker0 subnet on Node-1 is 100.96.1.0/24, and on Node-2 it is 100.96.2.0/24.
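The per-host subnets can be thought of as /24 slices of a larger cluster network. The sketch below assumes a cluster network of 100.96.0.0/16 (suggested by the flannel0 route above) and a simple index-based assignment; real Flannel leases subnets through etcd and does not necessarily hand them out in this order:

```python
import ipaddress

# Assumption for illustration: cluster network 100.96.0.0/16, one /24 per host.
cluster = ipaddress.ip_network("100.96.0.0/16")
subnets = list(cluster.subnets(new_prefix=24))

for node, index in (("Node-1", 1), ("Node-2", 2)):
    subnet = subnets[index]
    bridge_ip = next(subnet.hosts())  # first usable address goes to docker0
    print(node, subnet, f"docker0={bridge_ip}/{subnet.prefixlen}")
# Node-1 100.96.1.0/24 docker0=100.96.1.1/24
# Node-2 100.96.2.0/24 docker0=100.96.2.1/24
```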

Flannel maintains this correspondence. It stores the mapping between every host and its subnet in etcd, which gives flanneld a global view: from a packet's destination IP it can determine which subnet the address belongs to, and then look up the node that owns that subnet.

$ etcdctl ls /coreos.com/network/subnets

/coreos.com/network/subnets/100.96.1.0-24

/coreos.com/network/subnets/100.96.2.0-24

/coreos.com/network/subnets/100.96.3.0-24

The command above lists the subnets recorded in etcd (the "/" of each CIDR is written as "-" in the key). The destination address 100.96.2.3 falls in the subnet 100.96.2.0/24.
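Finding the subnet for a destination address amounts to turning each key back into a CIDR and testing membership. A Python sketch over the keys shown above (helper names are hypothetical; a real client would query etcd rather than use a hard-coded list):

```python
import ipaddress

# Keys as listed by `etcdctl ls` above; the "/" in each CIDR is stored as "-".
SUBNET_KEYS = [
    "/coreos.com/network/subnets/100.96.1.0-24",
    "/coreos.com/network/subnets/100.96.2.0-24",
    "/coreos.com/network/subnets/100.96.3.0-24",
]


def key_to_network(key):
    """Turn '/coreos.com/network/subnets/100.96.2.0-24' into an IPv4Network."""
    cidr = key.rsplit("/", 1)[1].replace("-", "/")
    return ipaddress.ip_network(cidr)


def subnet_key_for(dst, keys):
    """Return the etcd key whose subnet contains dst, or None."""
    addr = ipaddress.ip_address(dst)
    for key in keys:
        if addr in key_to_network(key):
            return key
    return None


print(subnet_key_for("100.96.2.3", SUBNET_KEYS))
# /coreos.com/network/subnets/100.96.2.0-24
```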

$ etcdctl get /coreos.com/network/subnets/100.96.2.0-24

{"PublicIP":"10.168.0.3"}

Looking up this subnet's key in etcd shows that the corresponding node's IP address is 10.168.0.3.
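The value stored under a subnet key is JSON, so resolving the node is a dictionary lookup after decoding. In this Python sketch a plain dict stands in for etcd (hypothetical), and the value contains only the `PublicIP` field shown above:

```python
import json

# Hypothetical in-memory stand-in for the etcd entry shown above.
ETCD = {
    "/coreos.com/network/subnets/100.96.2.0-24": '{"PublicIP":"10.168.0.3"}',
}


def public_ip_for(key, store):
    """Decode the JSON value of a subnet key and return the node's PublicIP."""
    return json.loads(store[key])["PublicIP"]


print(public_ip_for("/coreos.com/network/subnets/100.96.2.0-24", ETCD))
# 10.168.0.3
```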

Having obtained this IP address, flanneld wraps the original IP packet in a UDP packet addressed to 10.168.0.3 and sends it to Node-2. The source address of this UDP packet is, naturally, the IP address of Node-1. Because flanneld is an application-layer process, it must listen on a port to receive the contents of these UDP packets; flanneld listens on port 8285.
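In user space, this encapsulation is simply a UDP send whose payload is the entire original IP packet. A Python sketch of both ends (function names are hypothetical; the step where the receiving flanneld writes the packet back into flannel0 is omitted):

```python
import socket

FLANNEL_UDP_PORT = 8285  # flanneld's listening port in UDP mode, per the text


def send_encapsulated(inner_packet, peer_ip, port=FLANNEL_UDP_PORT):
    """Send one whole inner IP packet as the payload of one UDP datagram.

    The kernel adds the outer IP and UDP headers; the outer source address
    is the local address used to reach peer_ip (Node-1's IP in the text).
    """
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(inner_packet, (peer_ip, port))


def receive_encapsulated(sock, bufsize=65535):
    """Receiving side: one datagram payload is one inner IP packet."""
    payload, _addr = sock.recvfrom(bufsize)
    return payload


if __name__ == "__main__":
    # Loopback demo: port 0 asks the OS for a free port instead of 8285.
    rx = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    rx.bind(("127.0.0.1", 0))
    rx.settimeout(2)
    send_encapsulated(b"inner IP packet bytes", "127.0.0.1", rx.getsockname()[1])
    print(receive_encapsulated(rx))
    rx.close()
```

Every packet handled this way crosses the user/kernel boundary in flanneld, which is exactly the cost discussed in the next section.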

When the UDP packet reaches Node-2, Node-2's network stack decapsulates it layer by layer, and the flanneld process there obtains the IP packet sent by container-1. flanneld then writes that IP packet to the flannel0 interface, which hands it to Node-2's network stack. Node-2's routing table is:

# On Node-2

$ ip route

default via 10.168.0.1 dev eth0

100.96.0.0/16 dev flannel0 proto kernel scope link src 100.96.2.0

100.96.2.0/24 dev docker0 proto kernel scope link src 100.96.2.1

10.168.0.0/24 dev eth0 proto kernel scope link src 10.168.0.3

The IP packet matches the 100.96.2.0/24 rule, so Node-2's network stack sends it out through docker0. It first sends an ARP request on the 100.96.2.0/24 subnet via docker0 to obtain the MAC address of 100.96.2.3, then builds a Layer 2 frame and hands it to the docker0 bridge, which acts as a virtual switch. docker0 forwards the frame to container-2, whose network stack decapsulates it layer by layer and finally delivers the data to the process in container-2.

The basic schematic diagram:

Drawbacks of UDP mode

As we can see, the most critical step in Flannel is carried out by the flanneld process. It takes IP packets directly from the network layer of the Linux kernel's protocol stack, uses the mapping stored in etcd to treat each IP packet as payload and wrap it in a new UDP packet, and then sends that UDP packet to the destination host through the host's network stack. Consider only the sending side, from the process in container-1 until the data leaves eth0: the user data is copied between user space and the kernel three times. First, the process in container-1 sends the packet into the kernel; second, flanneld reads the packet from flannel0 back into user space; third, flanneld sends the UDP packet into the kernel again.

In Linux, switching between user mode and kernel mode, and copying data between them, is relatively expensive. This is the main reason Flannel's UDP mode performs poorly.

We therefore need to reduce the number of switches between user mode and kernel mode and move the core processing logic into the kernel.

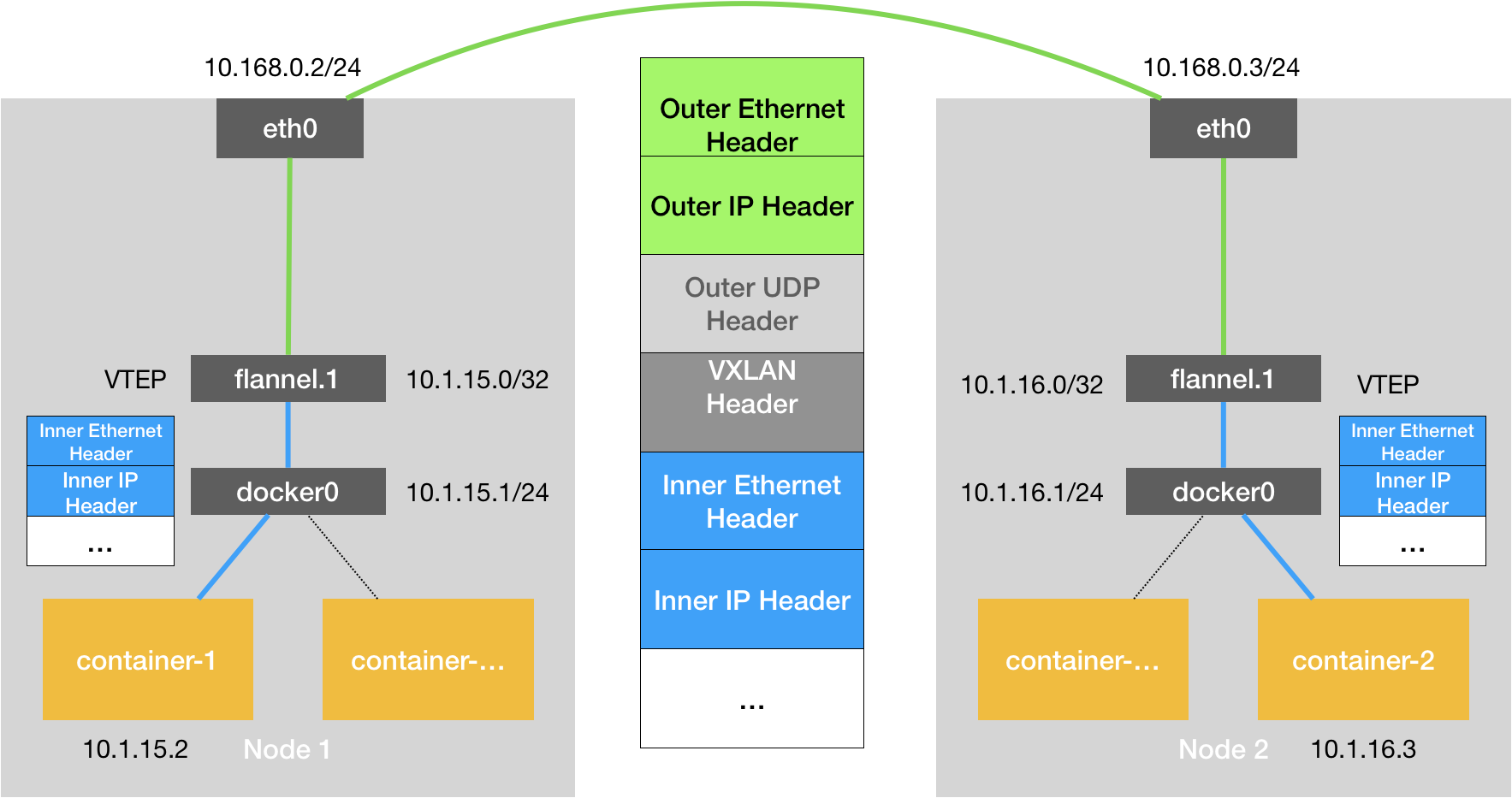

Flannel VXLAN mode

VXLAN (Virtual Extensible LAN) is a network virtualization technology supported natively by the Linux kernel.

The basic idea is to "overlay" a virtual Layer 2 network, maintained by the kernel's VXLAN module, on top of the existing Layer 3 network, so that all devices (network adapters) connected to this Layer 2 network can communicate freely as if they were on the same LAN.

A VTEP (VXLAN Tunnel End Point) device has both an IP address and a MAC address and can be thought of as a virtual network adapter. The VTEP devices on our hosts are all connected to the virtual Layer 2 network described above.

As in UDP mode, the Inner IP datagram sent by the process in the container is encapsulated into a Layer 2 frame and reaches the docker0 bridge, from which it is forwarded on toward the VTEP device flannel.1.

When the Layer 2 frame arrives, Node-1's network stack decapsulates it and obtains the Inner IP datagram. To route that datagram, Flannel relies on a rule prepared in advance: when Node-2 starts and joins the Flannel network, the flanneld process on Node-1 (like the one on every other node in the network) adds a routing rule such as the following:

$ route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

...

10.1.16.0 10.1.16.0 255.255.255.0 UG 0 0 0 flannel.1

This rule says that an IP packet whose destination is 10.1.16.3 (inside 10.1.16.0/24) should be sent through the flannel.1 interface (the source VTEP device) to the gateway 10.1.16.0, which is the IP address of the flannel.1 interface on Node-2 (the destination VTEP device). In addition, when Node-2 starts and joins the Flannel network, an ARP entry like the following is added on Node-1:

# On Node-1

$ ip neigh show dev flannel.1

10.1.16.0 lladdr 5e:f8:4f:00:e3:37 PERMANENT

This tells us that the destination VTEP device has MAC address 5e:f8:4f:00:e3:37 and IP address 10.1.16.0. With this MAC address we can build a Layer 2 frame whose destination MAC address is 5e:f8:4f:00:e3:37 and whose payload is the Inner IP datagram (destination 10.1.16.3).
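The Layer 2 frame described here is an ordinary Ethernet II frame: destination MAC, source MAC, EtherType, then the IP datagram. A Python sketch that assembles it without the trailing checksum (the source MAC and the helper names are made up for illustration):

```python
import struct

ETH_P_IP = 0x0800  # EtherType for IPv4


def mac_bytes(mac):
    """'5e:f8:4f:00:e3:37' -> 6 raw bytes."""
    return bytes.fromhex(mac.replace(":", ""))


def build_inner_frame(dst_mac, src_mac, ip_packet):
    """Ethernet II frame: dst MAC, src MAC, EtherType, then the IP packet."""
    return mac_bytes(dst_mac) + mac_bytes(src_mac) + struct.pack("!H", ETH_P_IP) + ip_packet


# Destination MAC from the ARP entry above; the source MAC is hypothetical.
frame = build_inner_frame("5e:f8:4f:00:e3:37", "aa:bb:cc:dd:ee:01", b"<inner IP datagram>")
print(frame[:6].hex(":"))  # 5e:f8:4f:00:e3:37
```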

At this point, basic networking knowledge applies: the VTEP devices on Node-1 and Node-2 (think of them as network adapters) are both "plugged into" this virtual VXLAN Layer 2 network, so the Layer 2 frame can traverse the link layer and reach the destination VTEP device.

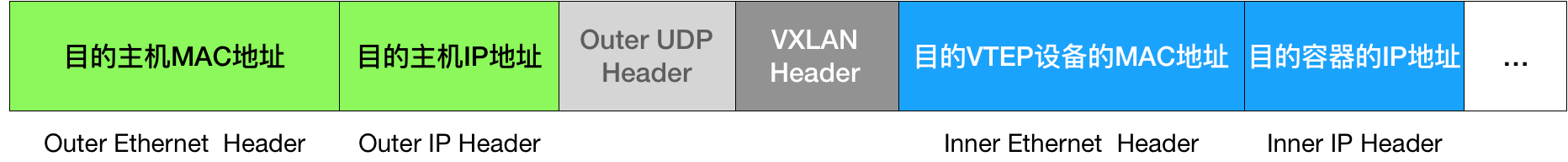

How VXLAN works internally

How this Layer 2 frame actually travels between the hosts over the real network is handled by the kernel's VXLAN module.

None of the IP and MAC addresses used so far mean anything to the host network, because these devices are "virtual".

We call the Layer 2 frame built above the "inner frame". To send it over the hosts' real network, the inner frame must be carried as data inside a UDP packet.

The Linux kernel prepends a special VXLAN header to the inner frame, marking it as a frame to be handled by VXLAN, so that when the destination host's network stack decapsulates the packet it can hand the frame to its own VXLAN module.
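The VXLAN header itself is small: 8 bytes defined by RFC 7348, holding a flags byte and a 24-bit VXLAN Network Identifier (VNI). A Python sketch of building it, assuming VNI 1 (to our knowledge Flannel's default, reflected in the device name flannel.1); the helper names are hypothetical and the kernel does this work internally:

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag: the VNI field is valid (RFC 7348)


def vxlan_header(vni):
    """8-byte VXLAN header: flags, 24 reserved bits, 24-bit VNI, 8 reserved bits."""
    if not 0 <= vni < 1 << 24:
        raise ValueError("VNI must fit in 24 bits")
    # "!B3xI": flags byte, 3 zero bytes, then VNI shifted into the top 24 bits.
    return struct.pack("!B3xI", VXLAN_FLAG_VNI_VALID, vni << 8)


def vxlan_payload(vni, inner_frame):
    """What goes into the outer UDP payload: VXLAN header + inner frame."""
    return vxlan_header(vni) + inner_frame


print(vxlan_header(1).hex())  # 0800000000000100
```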

The Linux kernel then encapsulates the VXLAN header and the inner frame into a UDP packet.

At this point we know the "virtual" MAC address of the destination VXLAN interface, but we still need the real IP address of the destination host.

The flannel process maintains this mapping for us in advance:

# On Node-1, query using the MAC address of the destination VTEP device

$ bridge fdb show dev flannel.1 | grep 5e:f8:4f:00:e3:37

5e:f8:4f:00:e3:37 dev flannel.1 dst 10.168.0.3 self permanent

The forwarding database (FDB) tells us that the destination host's IP address is 10.168.0.3. From here on, the UDP packet travels over the host network like any ordinary packet.
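Conceptually, the FDB is a map from a VTEP MAC address to the real IP address of the host behind it. A Python sketch that parses the `bridge fdb` line shown above into such a map (the helper name is hypothetical; the kernel consults the FDB directly and does not parse text):

```python
def parse_fdb_line(line):
    """Parse one `bridge fdb show` line into (mac, remote VTEP IP or None)."""
    fields = line.split()
    mac = fields[0]
    dst = fields[fields.index("dst") + 1] if "dst" in fields else None
    return mac, dst


FDB_OUTPUT = "5e:f8:4f:00:e3:37 dev flannel.1 dst 10.168.0.3 self permanent"
fdb = dict(parse_fdb_line(line) for line in FDB_OUTPUT.splitlines())
print(fdb["5e:f8:4f:00:e3:37"])  # 10.168.0.3
```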

Final steps on the destination host

When this "outer frame" arrives at the destination host's network adapter over the host network, Node-2's kernel network stack decapsulates it layer by layer until the VXLAN module obtains the VXLAN header. Based on the information in that header, the module passes the remaining inner frame to the VTEP device on Node-2. That device (a virtual network adapter) strips the frame to obtain the Inner IP datagram; Node-2 then resolves, via ARP, the MAC address of the destination container's eth0 and forwards the packet through docker0 to complete the final step.
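The receiving side reverses the encapsulation. A Python sketch of the two stripping steps, assuming VNI 1 and IPv4 inner traffic (helper names and the sample bytes are hypothetical; in reality this happens inside the kernel VXLAN module, not in user space):

```python
import struct

VXLAN_HEADER_LEN = 8
ETH_HEADER_LEN = 14


def vxlan_decap(udp_payload):
    """Strip the VXLAN header; return (vni, inner Ethernet frame)."""
    flags, vni_field = struct.unpack("!B3xI", udp_payload[:VXLAN_HEADER_LEN])
    if not flags & 0x08:
        raise ValueError("VNI-valid flag not set")
    return vni_field >> 8, udp_payload[VXLAN_HEADER_LEN:]


def inner_ip_datagram(frame):
    """Strip the Ethernet II header (IPv4 only) and return the IP datagram."""
    (ethertype,) = struct.unpack("!H", frame[12:ETH_HEADER_LEN])
    if ethertype != 0x0800:
        raise ValueError("not IPv4")
    return frame[ETH_HEADER_LEN:]


# Hand-built example: VNI 1, inner frame to 5e:f8:4f:00:e3:37 (source MAC made up).
payload = (
    bytes.fromhex("0800000000000100")
    + bytes.fromhex("5ef84f00e337" "aabbccddee01" "0800")
    + b"<inner IP datagram>"
)
vni, frame = vxlan_decap(payload)
print(vni, inner_ip_datagram(frame))  # 1 b'<inner IP datagram>'
```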