1. batch norm

Input data batch norm layer [N, C, H, W], the C layer is a calculated mean, variance into C, output data [N, C, H, W].

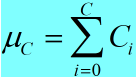

<1> image of the point that the average calculation process is as follows:

(1)

(1)

I.e., the number of channels in the batch of the same index are averaged, the average value is finally calculated C a calculation process and the same variance.

<2> batch norm layer effect:

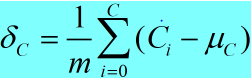

. a Mean:  (2)

(2)

b variance:  (3)

(3)

. c normalized:  (4)

(4)

2. caffe in batch_norm_layer.cpp in LayerSetUp function:

1 template <typename Dtype> 2 void BatchNormLayer<Dtype>::LayerSetUp(const vector<Blob<Dtype>*>& bottom, 3 const vector<Blob<Dtype>*>& top) { 4 BatchNormParameter param = this->layer_param_.batch_norm_param();

//读取deploy中moving_average_fraction参数值 5 moving_average_fraction_ = param.moving_average_fraction();

//改变量在batch_norm_layer.hpp中的定义为bool use_global_stats_ 6 use_global_stats_ = this->phase_ == TEST;

//channel在batch_norm_layer.hpp as defined in channels_ int . 7 if (param.has_use_global_stats()) 8 use_global_stats_ = param.use_global_stats(); 9 if (bottom[0]->num_axes() == 1) 10 channels_ = 1; 11 else 12 channels_ = bottom[0]->shape(1); 13 eps_ = param.eps(); 14 if (this->blobs_.size() > 0) { 15 LOG(INFO) << "Skipping parameter initialization"; 16 } the else {

// the number of blobs at strategically three, wherein:

// blobs_ [0] is the size of channels_, save mean batch input in each channel;

// blobs_ [. 1] size of channels_, each stored input batch the variance of the channel;

// blobs_ [2] a size of 1, the parameter saving moving_average_fraction;

// initializes to the above three blobs_ of 0. the . 17 the this -> blobs_.resize ( . 3 ); 18 is Vector < int > SZ; . 19 sz.push_back (channels_); 20 is the this -> blobs_ [ 0 ] .reset ( new new Blob <to Dtype> (SZ)); 21 is the this -> blobs_ [ . 1 ] .reset ( new new Blob <to Dtype> (SZ)); 22 is sz[0] = 1; 23 this->blobs_[2].reset(new Blob<Dtype>(sz)); 24 for (int i = 0; i < 3; ++i) { 25 caffe_set(this->blobs_[i]->count(), Dtype(0), 26 this->blobs_[i]->mutable_cpu_data()); 27 } 28 } 29 // Mask statistics from optimization by setting local learning rates 30 // for mean, variance, and the bias correction to zero. 31 for (int i = 0; i < this->blobs_.size(); ++i) { 32 if (this->layer_param_.param_size() == i) { 33 ParamSpec* fixed_param_spec = this->layer_param_.add_param(); 34 fixed_param_spec->set_lr_mult(0.f); 35 } else { 36 CHECK_EQ(this->layer_param_.param(i).lr_mult(), 0.f) 37 << "Cannot configure batch normalization statistics as layer " 38 << "parameters."; 39 } 40 } 41 }

3. caffe in batch_norm_layer.cpp in Reshape functions:

1 void BatchNormLayer<Dtype>::Reshape(const vector<Blob<Dtype>*>& bottom, 2 const vector<Blob<Dtype>*>& top) { 3 if (bottom[0]->num_axes() >= 1) 4 CHECK_EQ(bottom[0]->shape(1), channels_); 5 top[0]->ReshapeLike(*bottom[0]);

//batch_norm_layer.hpp对如下变量进行了定义:

//Blob<Dtype> mean_, variance_, temp_, x_norm_;

//blob<Dtype> batch_sum_multiplier_;

//blob<Dtype> sum_by_chans_;

BLOB // <to Dtype> spatial_sum_multiplier_; . 6 Vector < int > SZ; . 7 sz.push_back (channels_);

// Mean and variance blob BLOB size of Channel . 8 mean_.Reshape (SZ); . 9 variance_.Reshape (SZ);

// temp_ blob size and x_norm_ blob, blob data and input the same 10 temp_.ReshapeLike (bottom * [ 0 ]); . 11 x_norm_.ReshapeLike (bottom * [ 0 ]);

// SZ [0] the value N, batch_sum_multiplier_ blob size of N 12 is SZ [ 0 ] = bottom [ 0 ] -> Shape ( 0 ); 13 is batch_sum_multiplier_.Reshape (SZ);

//spatial_dim = N*C*H*W / C*N = H*W 14 int spatial_dim = bottom[0]->count()/(channels_*bottom[0]->shape(0)); 15 if (spatial_sum_multiplier_.num_axes() == 0 || 16 spatial_sum_multiplier_.shape(0) != spatial_dim) { 17 sz[0] = spatial_dim;

//spatial_sum_multiplier_的尺寸为H*W, 并且初始化为1 18 spatial_sum_multiplier_.Reshape(sz); 19 Dtype* multiplier_data = spatial_sum_multiplier_.mutable_cpu_data(); 20 caffe_set (spatial_sum_multiplier_.count (), to Dtype ( . 1 ), multiplier_data); 21 is }

// N * C = numbychans 22 is int numbychans = channels_ bottom * [ 0 ] -> Shape ( 0 ); 23 is IF (num_by_chans_.num_axes () == 0 || 24 num_by_chans_.shape ( 0 !) = numbychans) { 25 SZ [ 0 ] = numbychans;

// size of num_by_chans_ C * N, and initialized. 1 26 is num_by_chans_.Reshape (SZ); 27 caffe_set (batch_sum_multiplier_.count (), to Dtype ( . 1 ), 28 batch_sum_multiplier_.mutable_cpu_data()); 29 } 30 }

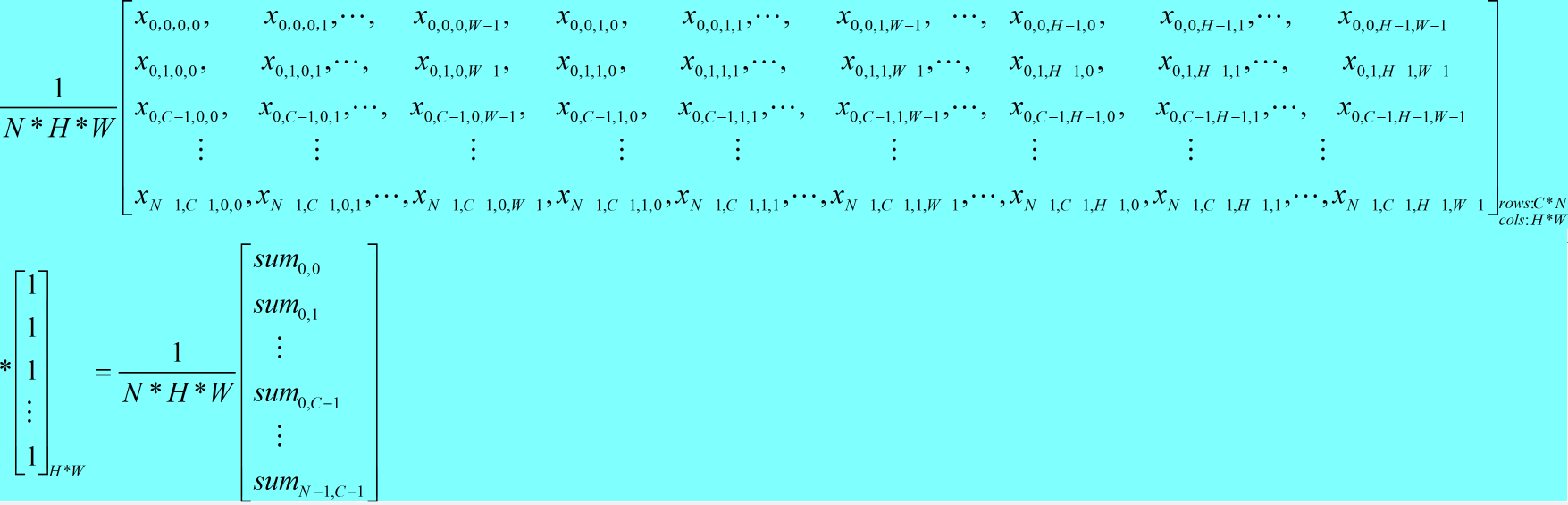

Blob the size of each image point that the variables above:

mean_ and variance_: channel number of elements of the vector

temp_ and x_norm_: the blob and the same size of the input, is N * C * H * W

batch_sum_multiplier_: N number of elements of the vector

spatial_sum_multiplier_: the number of elements of the matrix H * W, and the values for each element 1

num_by_chans_: C * N is the number of elements of a matrix, and each element value of 1

4. caffe in batch_norm_layer.cpp in Forward_cpu functions:

1 void BatchNormLayer<Dtype>::Forward_cpu(const vector<Blob<Dtype>*>& bottom, 2 const vector<Blob<Dtype>*>& top) { 3 const Dtype* bottom_data = bottom[0]->cpu_data(); 4 Dtype* top_data = top[0]->mutable_cpu_data();

//num = N 5 int num = bottom[0]->shape(0);

//spatial_dim = N*C*H*W/N*C = H*W 6 int spatial_dim = bottom[0]->count()/(bottom[0]->shape(0)*channels_); 7 8 if (bottom[0] != top[0]) { 9 caffe_copy(bottom[0]->count(), bottom_data, top_data); 10 } 11 12 if (use_global_stats_) { 13 // use the stored mean/variance estimates.

//在测试模式下,scale_factor=1/this->blobs_[2]->cpu_data()[0] 14 const Dtype scale_factor = this->blobs_[2]->cpu_data()[0] == 0 ? 15 0 : 1 / the this -> blobs_ [ 2 ] -> cpu_data () [ 0 ];

// mean_ BLOB scale_factorh * = this-> blobs_ [0] -> cpu_data ()

// variance_ BLOB = scale_factorh * this_blobs_ [. 1] -> cpu_data ( )

// the variables are defined as blobs_ in the class, so that a layer of batch norm each call, blobs_ [0], blobs_ [ 1], blobs_ [2] is updated 16 caffe_cpu_scale (variance_.count (), scale_factorh, . 17 the this -> blobs_ [ 0 ] -> cpu_data (), mean_.mutable_cpu_data ()); 18 is caffe_cpu_scale (variance_.count (), scale_factorh, . 19 the this -> blobs_ [ . 1 ] -> cpu_data (), variance_.mutable_cpu_data ( )); 20 is } the else{ 21 is // Compute Mean

// calculated in a batch training mode mean 22 is caffe_cpu_gemv <to Dtype> (CblasNoTrans, channels_ * NUM, spatial_dim, 23 is . 1 . / (NUM * spatial_dim), bottom_data, 24 spatial_sum_multiplier_.cpu_data (), 0 ., 25 num_by_chans_.mutable_cpu_data ()); 26 is caffe_cpu_gemv <to Dtype> (CblasTrans, NUM, channels_, . 1 ,. 27 num_by_chans_.cpu_data (), batch_sum_multiplier_.cpu_data (), 0 ,. 28 mean_.mutable_cpu_data ()); 29 } 30 // can be obtained from the above two steps: either the input batch mean a training or test mode

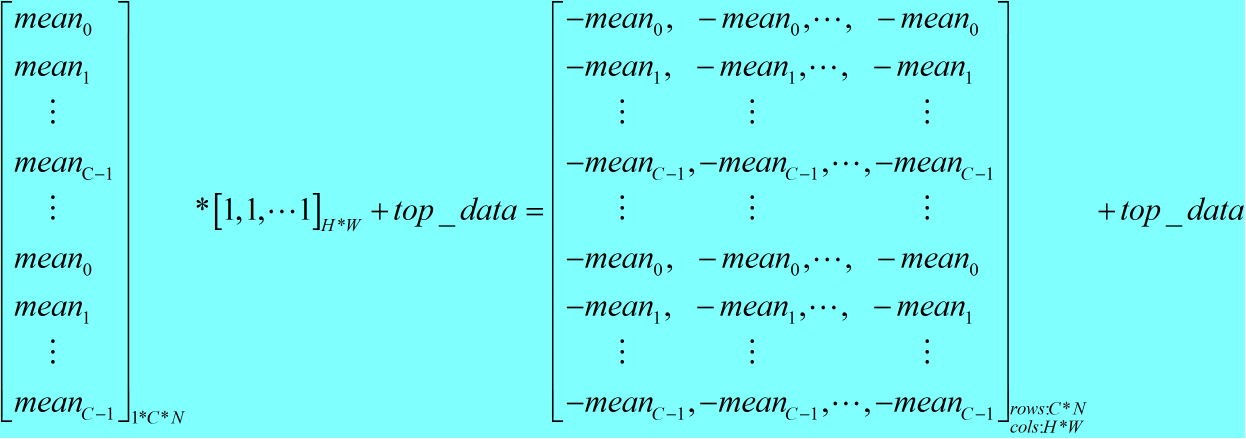

// for each data batch minus the mean of the corresponding channel 31 is // Subtract Mean 32 caffe_cpu_gemm <to Dtype> (CblasNoTrans, CblasNoTrans , NUM, channels_, . 1 , . 1 , 33 is batch_sum_multiplier_.cpu_data (), mean_.cpu_data (), 0 ,. 34 is num_by_chans_.mutable_cpu_data ()); 35 caffe_cpu_gemm <to Dtype> (CblasNoTrans, CblasNoTrans, channels_ * NUM, 36 spatial_dim, . 1 , - . 1 , num_by_chans_.cpu_data (), 37 [ spatial_sum_multiplier_.cpu_data (), . 1 , top_data);. 38 39 if (!use_global_stats_) {

//计算训练模式下的方差 40 // compute variance using var(X) = E((X-EX)^2) 41 caffe_sqr<Dtype>(top[0]->count(), top_data, 42 temp_.mutable_cpu_data()); // (X-EX)^2 43 caffe_cpu_gemv<Dtype>(CblasNoTrans, channels_ * num, spatial_dim, 44 1. / (num * spatial_dim), temp_.cpu_data(), 45 spatial_sum_multiplier_.cpu_data(), 0., 46 num_by_chans_.mutable_cpu_data()); 47 caffe_cpu_gemv <to Dtype> (CblasTrans, NUM, channels_, . 1 ,. 48 num_by_chans_.cpu_data (), batch_sum_multiplier_.cpu_data (), 0 ,. 49 variance_.mutable_cpu_data ()); // E ((X_EX) ^ 2) 50 51 is // Compute and Save Moving average

// in the training phase, can be obtained by the above calculation step: batch the mean and variance of each channel

// blobs_ [2] = + blobs_. 1 [2] * moving_average_fraction_

// first batch when, blobs_ [2] = 0, blobs_ [2] = 1 is calculated after

// the second batch, blobs_ [2] = 1 , blobs_ after calculating [2] = * moving_average_fraction_. 1. 1 + 1.9 = 52 is the this -> blobs_ [ 2 ] -> mutable_cpu_data () [ 0 ] = * moving_average_fraction_; 53 this->blobs_[2]->mutable_cpu_data()[0] += 1;

//blobs_[0] = 1 * mean_ + moving_average_fraction_ * blobs_[0]

//其中mean_是本次batch的均值,blobs_[0]是上次batch的均值 54 caffe_cpu_axpby(mean_.count(), Dtype(1), mean_.cpu_data(), 55 moving_average_fraction_, this->blobs_[0]->mutable_cpu_data());

//m = N*C*H*W/C = N*H*W 56 int m = bottom[0]->count()/channels_;

//bias_correction_factor = m/m-1 57 M = bias_correction_factor DTYPE> . 1 DTYPE (m) / (M-? . 1 ): . 1 ;

// blobs_ [. 1] + = bias_correction_factor moving_average_fraction_ * * variance_ blobs_ [. 1] 58 caffe_cpu_axpby (variance_.count (), bias_correction_factor, 59 variance_ .cpu_data (), moving_average_fraction_, 60 the this -> blobs_ [ . 1 ] -> mutable_cpu_data ()); 61 is } 62 is // previous step to the calculated variance plus a constant eps_, to prevent the variance in the denominator when normalized value appears is 0, while prescribing

63 is // the normalize variance 64 caffe_add_scalar (variance_.count (), eps_, variance_.mutable_cpu_data ()); 65 caffe_sqrt (variance_.count (), variance_.cpu_data (), 66 variance_.mutable_cpu_data ()); 67 68 // replicate variance to the INPUT size

// top_data currently saved is input blobs - the mean value, the following lines of code means for dividing each element corresponds to the variance of 69 caffe_cpu_gemm <to Dtype> (CblasNoTrans, CblasNoTrans, NUM, channels_, . 1 , . 1 , 70 batch_sum_multiplier_.cpu_data (), variance_.cpu_data (), 0 ., 71 is num_by_chans_.mutable_cpu_data () ); 72 caffe_cpu_gemm <to Dtype> (CblasNoTrans, CblasNoTrans, channels_ * NUM, 73 is spatial_dim, . 1 , . 1., num_by_chans_.cpu_data(), 74 spatial_sum_multiplier_.cpu_data(), 0., temp_.mutable_cpu_data()); 75 caffe_div(temp_.count(), top_data, temp_.cpu_data(), top_data); 76 // TODO(cdoersch): The caching is only needed because later in-place layers 77 // might clobber the data. Can we skip this if they won't? 78 caffe_copy(x_norm_.count(), top_data, 79 x_norm_.mutable_cpu_data()); 80 }

caffe_cpu_gemv prototype is:

1 caffe_cpu_gemv<float>(const CBLAS_TRANSPOSE TransA, const int M, const int N, const float alpha, const float *A, const float *x, const float beta, float *y)

Achieve the function of matrix and vector multiplication: Y = alpha * A * x + beta * Y

Wherein the dimensions of the matrix A is M * N, the dimension of the vector x N * 1, Y dimension vector is M * 1.

During the training phase, forward cpu functions perform the following steps:

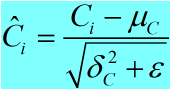

(1) calculate the mean, mean calculation process is as follows, divided into two steps:

<1> of each batch is calculated for each element of the channel and the channel;

1 caffe_cpu_gemv<Dtype>(CblasNoTrans, channels_ * num, spatial_dim, 1. / (num * spatial_dim), bottom_data, spatial_sum_multiplier_.cpu_data(), 0., num_by_chans_.mutable_cpu_data());

Wherein: X N-1, C-1, H-. 1, W is-. 1 meaning represented by: N-1 represents a batch of the N-1 samples, C-1 indicates that the sample corresponding to a first C-1 th channel, H-1 indicates the first passage line H-1, W-1 indicates the first passage W-1 column;

SUM N-1, C-1 meanings represented by: batch of N-1 first the first channel C-1 samples of all the elements and.

<2> in each channel calculated mean batch:

1 caffe_cpu_gemv<Dtype>(CblasTrans, num, channels_, 1., num_by_chans_.cpu_data(), batch_sum_multiplier_.cpu_data(), 0., mean_.mutable_cpu_data());

(2) For each batch of data by subtracting the mean of the corresponding channel;

<1> mean matrix obtained

1 caffe_cpu_gemm<Dtype>(CblasNoTrans, CblasNoTrans, num, channels_, 1, 1, batch_sum_multiplier_.cpu_data(), mean_.cpu_data(), 0., num_by_chans_.mutable_cpu_data());

<2> 每个元素减去对应均值

1 caffe_cpu_gemm<Dtype>(CblasNoTrans, CblasNoTrans, channels_ * num, spatial_dim, 1, -1, num_by_chans_.cpu_data(), spatial_sum_multiplier_.cpu_data(), 1., top_data);

(3) 每个通道的方差计算,计算方式和均值的计算方式相同;

(4) 输入blob除以对应方差,得到归一化后的值。