【Introduction】

In programming, the FLOAT type is used when a value needs a fractional part and more precision than an integer can give, for example 0.111111. Integer types such as INT cannot represent such values, so the fractional part is lost. In the design and development of a large program, precision problems of this kind can end up crashing the entire program.

[Float] general representation

- Shift concept: For a number in any base, moving the radix point scales the value by a power of that base. In binary, moving the point one place to the left halves the value, and moving it one place to the right doubles it (shifting can solve many other problems as well, but that is not the focus here).
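The shift idea can be tried out with a minimal Python sketch. It relies on the standard `math.ldexp(x, i)`, which computes `x * 2**i`; the helper name `shift_binary_point` is hypothetical and used only for illustration.

```python
import math


def shift_binary_point(value: float, places: int) -> float:
    """Move the binary point of `value` by `places` positions.

    Positive `places` moves the point to the right (each step doubles the
    value); negative `places` moves it to the left (each step halves it).
    The helper name is illustrative only.
    """
    return math.ldexp(value, places)  # same as value * 2**places


# 1011 in binary is 11 in decimal.
x = float(0b1011)
left = shift_binary_point(x, -1)   # 101.1 in binary
right = shift_binary_point(x, 1)   # 10110 in binary
print(x, left, right)
```

Here `left` is half of `x` and `right` is twice `x`, matching the rule in the bullet above.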

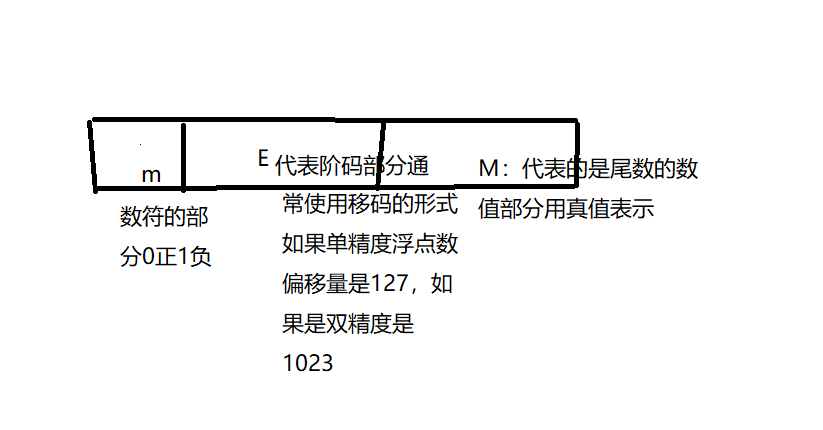

- In general, a floating-point number consists of the several parts shown above.

- However, to make software easier to port, a common standard for floating-point numbers was established: the IEEE754 floating-point standard.
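As a rough illustration of what the standard fixes, the sketch below splits a value into the three fields of the IEEE754 single-precision (32-bit) format: 1 sign bit, 8 exponent bits (stored with a bias of 127), and 23 fraction bits. It assumes Python's `struct` module, whose `f` code with an explicit byte order packs a value as IEEE754 binary32; the helper name `float32_fields` and the sample value 0.15625 are chosen only for illustration.

```python
import struct


def float32_fields(value: float) -> tuple:
    """Return (sign, biased_exponent, fraction) of `value` as IEEE754 binary32.

    The helper name is illustrative only. The value is first rounded to
    single precision by struct.pack, then read back as a 32-bit integer.
    """
    (bits,) = struct.unpack(">I", struct.pack(">f", value))
    sign = bits >> 31                # 1 bit
    exponent = (bits >> 23) & 0xFF   # 8 bits, stored with a bias of 127
    fraction = bits & 0x7FFFFF       # 23 bits, without the implicit leading 1
    return sign, exponent, fraction


# 0.15625 = 1.01 (binary) x 2^-3, so the stored exponent is -3 + 127 = 124.
sign, exponent, fraction = float32_fields(0.15625)
print(sign, exponent, format(fraction, "023b"))
```

Because the layout is defined by the standard rather than by one machine, the same 32 bits describe the same value on any platform that follows IEEE754.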

[IEEE754 standard floating-point representation and conversion]

An example is given below.

[Example] (236.5) -> IEEE754 floating point