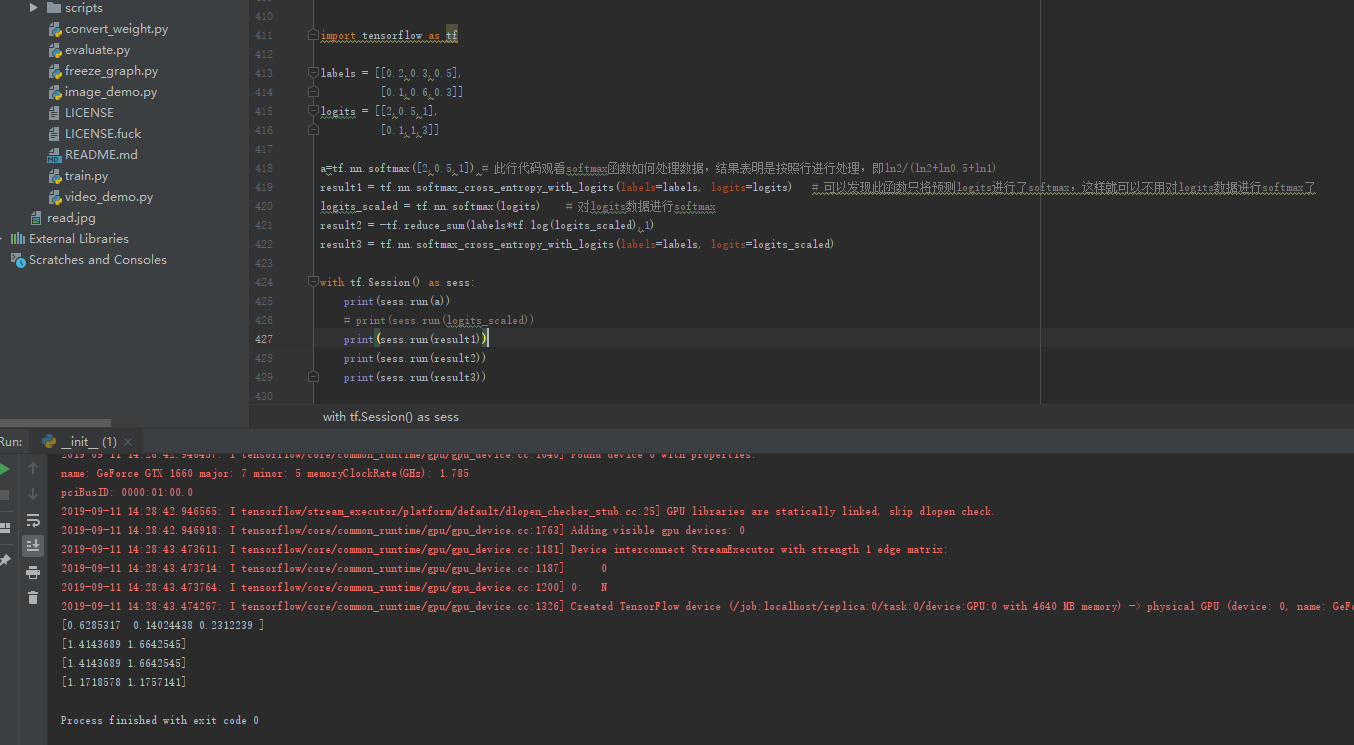

```python
import tensorflow as tf  # TensorFlow 1.x graph mode (uses tf.Session)

labels = [[0.2, 0.3, 0.5],
          [0.1, 0.6, 0.3]]

logits = [[2, 0.5, 1],
          [0.1, 1, 3]]

# Softmax is applied along the last axis, i.e. to each row independently:
# the first output for this row is e^2 / (e^2 + e^0.5 + e^1).
A = tf.nn.softmax([2, 0.5, 1])

# This function applies softmax to the logits internally, so it must be given
# the raw (unscaled) logits, not values that have already been softmaxed.
result1 = tf.nn.softmax_cross_entropy_with_logits(labels=labels, logits=logits)

# Apply softmax to the logits manually...
logits_scaled = tf.nn.softmax(logits)

# ...and compute the cross-entropy by hand: -sum(labels * log(softmax(logits))) per row.
result2 = -tf.reduce_sum(labels * tf.log(logits_scaled), 1)

# Passing already-softmaxed values applies softmax twice and gives a different (wrong) result.
result3 = tf.nn.softmax_cross_entropy_with_logits(labels=labels, logits=logits_scaled)

with tf.Session() as sess:
    print(sess.run(A))
    # print(sess.run(logits_scaled))
    print(sess.run(result1))
    print(sess.run(result2))  # same values as result1
    print(sess.run(result3))  # differs from result1: softmax was applied twice
```
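To see why `result1` and `result2` agree while `result3` does not, the same three computations can be sketched in plain NumPy. This is a minimal illustration of the definition, cross-entropy $= -\sum_i y_i \log \mathrm{softmax}(z)_i$ per row; it is not TensorFlow's implementation, and the helper names `softmax`, `log_softmax` and `softmax_xent` are hypothetical.

```python
import numpy as np

# Same inputs as the TensorFlow example.
labels = np.array([[0.2, 0.3, 0.5],
                   [0.1, 0.6, 0.3]])
logits = np.array([[2.0, 0.5, 1.0],
                   [0.1, 1.0, 3.0]])


def log_softmax(z):
    """Hypothetical helper: log(softmax(z)) along the last axis, computed stably."""
    m = z.max(axis=-1, keepdims=True)
    return z - m - np.log(np.exp(z - m).sum(axis=-1, keepdims=True))


def softmax(z):
    """Hypothetical helper: row-wise softmax, analogous to tf.nn.softmax."""
    return np.exp(log_softmax(z))


def softmax_xent(labels, logits):
    """Hypothetical helper: -sum(labels * log(softmax(logits))), one value per row."""
    return -(labels * log_softmax(logits)).sum(axis=-1)


result1 = softmax_xent(labels, logits)                       # raw logits: intended usage
result2 = -np.sum(labels * np.log(softmax(logits)), axis=1)  # manual softmax, then log
result3 = softmax_xent(labels, softmax(logits))              # softmax applied twice

print(result1)
print(result2)
print(result3)
print(np.allclose(result1, result2))  # result1 and result2 agree
print(np.allclose(result1, result3))  # result3 does not
```

Because `log_softmax` subtracts the row maximum before exponentiating, this form stays finite even for large logits; fusing softmax and cross-entropy into one function that takes raw logits makes that possible.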

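The softmax function computes $\mathrm{softmax}(x)_i = e^{x_i} / \sum_j e^{x_j}$ for each row, which in NumPy is `np.power(np.e, x_i) / Σ_j np.power(np.e, x_j)`. For the first row of `logits`, `[2, 0.5, 1]`, the first component is computed as follows: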

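```python
import numpy as np

# Row: [2, 0.5, 1]
# Denominator: e^2 + e^0.5 + e^1
a = np.power(np.e, 2) + np.power(np.e, 0.5) + np.power(np.e, 1)

# First softmax component e^2 / a; should match the first value printed for A
print(np.power(np.e, 2) / a)
```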
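The hand computation generalizes to the whole `logits` matrix with a vectorized sketch. The function name `softmax` is a hypothetical helper, and subtracting the row maximum is a standard numerical-stability trick that is not part of the original code: it cancels between numerator and denominator, so the result is unchanged, but `np.exp` no longer overflows for large inputs.

```python
import numpy as np


def softmax(x):
    """Hypothetical helper: exp(x_i) / sum_j exp(x_j) along the last axis.

    The row maximum is subtracted first; it cancels out mathematically
    but keeps np.exp from overflowing on large inputs.
    """
    x = np.asarray(x, dtype=np.float64)
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)


logits = [[2, 0.5, 1],
          [0.1, 1, 3]]
probs = softmax(logits)
print(probs)               # one probability distribution per row
print(probs.sum(axis=-1))  # each row sums to 1

# The first entry equals the hand computation e^2 / (e^2 + e^0.5 + e^1).
manual = np.e**2 / (np.e**2 + np.e**0.5 + np.e**1)
print(np.isclose(probs[0, 0], manual))

# Large inputs stay finite thanks to the max subtraction.
big = softmax([1000.0, 1001.0])
print(big)
```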