Spark computing model: RDD

I. Course objectives

Goal 1: Understand the principles of RDDs

Goal 2: Use RDD operators fluently to complete computing tasks

Goal 3: Master the wide and narrow dependencies of RDDs

Goal 4: Master the RDD caching mechanism

Goal 5: Master stage division

Goal 6: Master the Spark task scheduling process

II. Resilient Distributed Datasets (RDD)

2. RDD Overview

2.1 What is an RDD

An RDD (Resilient Distributed Dataset) is the most basic data abstraction in Spark. It represents an immutable, partitioned collection of elements that can be computed in parallel. RDDs have the characteristics of a data flow model: automatic fault tolerance, locality-aware scheduling, and scalability. An RDD lets the user explicitly cache data in memory while running multiple queries, so that later queries can reuse it, which greatly improves query speed.

Dataset: a collection that stores data.

Distributed: the data in an RDD is stored in a distributed manner, so it can be used for distributed computing.

Resilient: the data in an RDD can be stored in memory or on disk.

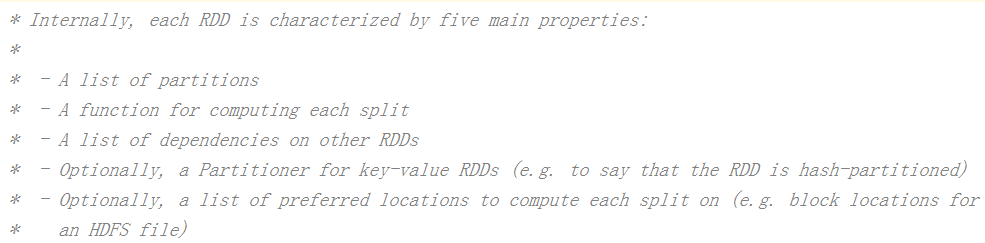

2.2 RDD properties

1) A list of partitions: the partitions are the basic units that make up the dataset.

Each partition of an RDD is processed by one computing task, so the number of partitions determines the granularity of parallel computation. The user can specify the number of partitions when creating an RDD; if it is not specified, a default value is used. (For example, an RDD created by reading a file from HDFS has, by default, as many partitions as the file has blocks.)

2) A function for computing each split: a function that computes each partition.

In Spark, an RDD is computed partition by partition, and every RDD implements a compute function for this purpose.

3) A list of dependencies on other RDDs: an RDD depends on other RDDs, and these dependencies link the RDDs together.

Each transformation of an RDD produces a new RDD, so successive RDDs form a pipeline-like chain of dependencies. When the data of some partitions is lost, Spark can use these dependencies to recompute only the lost partitions instead of recomputing every partition of the RDD.

4) Optionally, a Partitioner for key-value RDDs (e.g. to say that the RDD is hash-partitioned): a Partitioner, i.e. the partitioning function of the RDD (optional).

Spark currently implements two types of partitioning function: the hash-based HashPartitioner and the range-based RangePartitioner. Only a key-value RDD can have a Partitioner (and only one produced by a shuffle); for a non-key-value RDD the value of the Partitioner is None.

5) Optionally, a list of preferred locations to compute each split on (e.g. block locations for an HDFS file): a list of preferred locations for each partition (optional).

For an HDFS file, this list holds the locations of the blocks that make up each partition. Following the principle that "moving computation is better than moving data", Spark tries, when scheduling tasks, to assign each computing task to a node that stores the data block it will process (that is, when assigning tasks Spark prefers the worker nodes that already hold the data).
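The five properties above can be inspected directly on an RDD. The following is a minimal sketch, assuming Spark 2.x or later on the classpath and a local master; the object name `RddPropertiesSketch`, the helper `inspect`, and the sample data are hypothetical and chosen only for illustration.

```scala
import org.apache.spark.{HashPartitioner, Partitioner, SparkConf, SparkContext}

object RddPropertiesSketch {
  // Hypothetical helper: returns (partition count, partitioner before partitionBy,
  // partitioner after partitionBy) and prints the other properties along the way.
  def inspect(sc: SparkContext): (Int, Option[Partitioner], Option[Partitioner]) = {
    // 1) Partitions: request 4 partitions explicitly.
    val nums = sc.parallelize(1 to 8, numSlices = 4)

    // 2) Compute function: map is evaluated partition by partition.
    val pairs = nums.map(n => (n % 2, n))

    // 3) Dependencies: pairs depends on nums; toDebugString prints the lineage.
    println(s"dependencies: ${pairs.dependencies}")
    println(pairs.toDebugString)

    // 4) Partitioner: absent on pairs, present once partitionBy has shuffled the data.
    val hashed = pairs.partitionBy(new HashPartitioner(2))

    // 5) Preferred locations: typically empty for an in-memory collection;
    //    for an HDFS file they would name the hosts that hold each block.
    println(s"preferred locations: ${nums.preferredLocations(nums.partitions(0))}")

    (nums.getNumPartitions, pairs.partitioner, hashed.partitioner)
  }

  def main(args: Array[String]): Unit = {
    // Local mode with 2 threads; app name and master are illustrative choices.
    val sc = new SparkContext(new SparkConf().setAppName("rdd-properties").setMaster("local[2]"))
    try println(inspect(sc)) finally sc.stop()
  }
}
```

In a local run, the partitioner of `pairs` should be `None`, while the partitioner of `hashed` should be a `HashPartitioner`, which matches the point that a Partitioner appears only on key-value RDDs after a shuffle.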

2.3 Why were RDDs introduced?

(1) Traditional MapReduce offers automatic fault tolerance, load balancing, and scalability, but its biggest drawback is its acyclic data flow model, which forces iterative computations to perform a large number of disk I/O operations. The RDD is the abstraction designed to address this drawback.

(2) The RDD is the most important abstraction that Spark provides. It is a special fault-tolerant collection that can be distributed across the nodes of a cluster and operated on in parallel through functional-style operations. The result data of an RDD can be cached for repeated reuse, which avoids recomputation.
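To make the effect of caching concrete, the sketch below counts how often a map function runs when two actions are executed on the same RDD, with and without `cache()`. It assumes Spark 2.x or later in local mode; `RddCacheSketch` and `mapInvocations` are hypothetical names, and the accumulator is used here only as a simple counter.

```scala
import org.apache.spark.{SparkConf, SparkContext}

object RddCacheSketch {
  // Hypothetical helper: runs two actions on one mapped RDD and returns
  // how many times the map function was evaluated in total.
  def mapInvocations(sc: SparkContext, useCache: Boolean): Long = {
    val calls = sc.longAccumulator("map calls")
    val squared = sc.parallelize(1 to 8, 2).map { n => calls.add(1L); n * n }
    if (useCache) squared.cache()
    squared.count()       // first action: computes every element
    squared.reduce(_ + _) // second action: recomputes unless the partitions are cached
    calls.value
  }

  def main(args: Array[String]): Unit = {
    // Local mode with 2 threads; app name and master are illustrative choices.
    val sc = new SparkContext(new SparkConf().setAppName("rdd-cache").setMaster("local[2]"))
    try {
      println(s"without cache: ${mapInvocations(sc, useCache = false)}")
      println(s"with cache:    ${mapInvocations(sc, useCache = true)}")
    } finally sc.stop()
  }
}
```

Without the cache, each action walks the lineage again, so the map function should run twice per element; with the cache, the second action should read the stored partitions instead.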

2.4 The position and role of RDDs in Spark

(1) Why Spark?

Traditional parallel computing models cannot handle iterative computing and interactive computing efficiently. Spark's mission is to solve these two problems, and that is the reason it exists and where its value lies.

(2) How does Spark handle iterative computing?

The main idea is the RDD: all of the data being computed is kept in distributed memory. An iterative computation usually iterates repeatedly over the same dataset, so keeping that data in memory greatly improves I/O performance. This is also the core of Spark's design: in-memory computing.
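As an illustration of iterating over the same in-memory dataset, the sketch below fits the slope `w` of `y = w * x` by gradient descent, scanning one cached RDD in every iteration. The data points, the step size `0.05`, and the names `IterativeSketch` and `fitSlope` are all assumptions made for this example, not something taken from Spark itself.

```scala
import org.apache.spark.{SparkConf, SparkContext}

object IterativeSketch {
  // Hypothetical helper: every iteration reuses the same cached RDD of (x, y) points.
  def fitSlope(sc: SparkContext, iterations: Int): Double = {
    val points = sc
      .parallelize(Seq((1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)))
      .cache() // keep the dataset in memory across iterations
    val n = points.count()
    var w = 0.0
    for (_ <- 1 to iterations) {
      val current = w // copy into a val so the closure captures a fixed value
      // Gradient of the mean squared error with respect to w.
      val gradient = points.map { case (x, y) => 2 * (current * x - y) * x }.reduce(_ + _) / n
      w -= 0.05 * gradient
    }
    w
  }

  def main(args: Array[String]): Unit = {
    // Local mode with 2 threads; app name and master are illustrative choices.
    val sc = new SparkContext(new SparkConf().setAppName("rdd-iterative").setMaster("local[2]"))
    try println(s"fitted slope: ${fitSlope(sc, 20)}") finally sc.stop()
  }
}
```

Because `points` is cached, only the first pass has to build the dataset; later iterations read it from memory rather than going back to the source.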

(3) How does Spark achieve interactive computing?

Spark is written in Scala, so the two integrate tightly and Spark can make full use of the Scala interpreter. As a result, Scala can operate on distributed datasets as easily as it operates on local collection objects.

(4) What is the relationship between Spark and RDDs?

The RDD is a fault-tolerant abstraction for in-memory computing. It is the underlying core of Spark Core, and Spark is an implementation of this abstraction.

3. Creating RDDs

1) From an existing Scala collection.

val rdd1 = sc.parallelize(Array(1,2,3,4,5,6,7,8))

2) From a file in an external storage system, including the local file system and any data source that Hadoop supports, such as HDFS, Cassandra, and HBase.

val rdd2 = sc.textFile("/words.txt")

3) From an existing RDD, by applying a transformation operator to produce a new RDD.

val rdd3 = rdd2.flatMap(_.split(" "))
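The three creation methods can be combined into one standalone program. This is a minimal sketch, assuming Spark 2.x or later in local mode; instead of `/words.txt` it writes a small temporary file so that it can run anywhere, and the names `CreateRddSketch` and `words` are hypothetical.

```scala
import java.nio.charset.StandardCharsets
import java.nio.file.Files
import org.apache.spark.{SparkConf, SparkContext}

object CreateRddSketch {
  // Hypothetical helper: reads a text file (method 2) and derives a new RDD
  // from it with flatMap (method 3), then collects the words to the driver.
  def words(sc: SparkContext, path: String): Array[String] = {
    val lines = sc.textFile(path)
    val split = lines.flatMap(_.split(" "))
    split.collect()
  }

  def main(args: Array[String]): Unit = {
    // Local mode with 2 threads; app name and master are illustrative choices.
    val sc = new SparkContext(new SparkConf().setAppName("create-rdd").setMaster("local[2]"))
    try {
      // Method 1: from an existing Scala collection.
      val rdd1 = sc.parallelize(Array(1, 2, 3, 4, 5, 6, 7, 8))
      println(rdd1.collect().mkString(","))

      // Methods 2 and 3: from a file, then through a transformation.
      val file = Files.createTempFile("words", ".txt")
      Files.write(file, "hello spark\nhello rdd".getBytes(StandardCharsets.UTF_8))
      println(words(sc, file.toUri.toString).mkString(","))
    } finally sc.stop()
  }
}
```

In `spark-shell` the `SparkContext` is already available as `sc`, which is why the one-line examples above can use it without creating one.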