Preface:

Recently I needed to set up load balancing when deploying a project. Interestingly, almost everything an online search turns up is a configuration file similar to the following:

    upstream localhost {
        server 127.0.0.1:8080 weight=1;
        server 127.0.0.1:8081 weight=1;
    }

    server {
        listen 80;
        server_name localhost;

        location / {
            proxy_pass http://localhost;
            index index.html index.htm index.jsp;
        }
    }

So I decided to take a look at the inner workings of Nginx. This post focuses on how Nginx implements reverse proxying and on the parameters it offers for load balancing.

First, forward proxy and reverse proxy

A forward proxy acts on behalf of the client, and the client is aware of it. For example, when you use VPN software to access the Internet, you choose in the software which server to connect through.

A reverse proxy acts on behalf of the server, and the user is unaware of it. The client simply sends its request to a port on the server, and Nginx, which is listening on that port, forwards the request to one of several backend servers. Take the configuration file above: when you enter http://localhost:80/ as the URL (port 80 is the default and can be omitted; it is written out here for clarity), Nginx receives the request on port 80 and looks for the matching location. As the configuration shows, that location forwards the request to one of the backend ports. All of this happens on the server side and is invisible to the user.

Nginx is the tool we most often use as a reverse proxy server.

Second, the basic internal architecture of Nginx

After startup, nginx runs in the background as a daemon, with one master process and multiple worker processes.

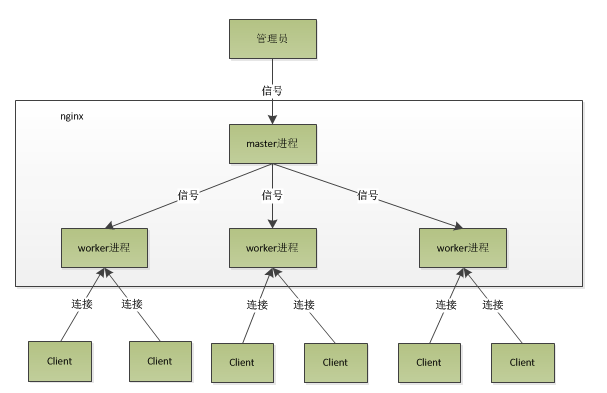

master process: mainly manages the worker processes. It receives signals from outside, sends signals to each worker process, and monitors the workers' running state. When a worker exits abnormally, the master automatically starts a new worker process.

worker process: handles the basic network events. The worker processes are peers: they compete on equal terms for client requests and are independent of one another. A request is processed by exactly one worker, and a worker cannot handle a request that belongs to another process. The number of worker processes is configurable; usually it is set to the number of CPU cores on the machine, or simply with worker_processes auto;

So the basic architecture of Nginx is as follows:

Running ./nginx -s reload reloads nginx (a graceful restart), and ./nginx -s stop stops it. How does this work? Executing the command starts a new nginx process. Once that process has parsed the reload argument, it knows that our goal is to make nginx reload its configuration file, so it sends a signal to the master process. On receiving the signal, the master first reloads the configuration file, then starts new worker processes and signals all the old workers that they can retire gracefully. The new workers start accepting new requests, while each old worker, after receiving the master's signal, stops accepting new requests, finishes every request still pending in its process, and then exits. This is why reloading Nginx with the command above does not interrupt service.
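
The handoff described above can be modelled with a small simulation. This is only a sketch: the `Worker` and `Master` classes and their method names are hypothetical, plain Python objects stand in for processes, and method calls stand in for signals. It is not how nginx is implemented.

```python
class Worker:
    """One simulated worker process, tied to the config it was started with."""

    def __init__(self, config):
        self.config = config
        self.accepting = True   # becomes False once the worker is told to retire
        self.in_flight = 0      # requests currently being processed

    def accept(self):
        if not self.accepting:
            raise RuntimeError("a retiring worker no longer accepts requests")
        self.in_flight += 1

    def finish_request(self):
        self.in_flight -= 1

    def has_exited(self):
        # A retiring worker exits only after its pending requests are done.
        return not self.accepting and self.in_flight == 0


class Master:
    """Simulated master process that owns the workers."""

    def __init__(self, config, worker_count):
        self.workers = [Worker(config) for _ in range(worker_count)]
        self.retiring = []

    def reload(self, new_config):
        """Start workers on the new config, then ask the old ones to retire."""
        old_workers = self.workers
        self.workers = [Worker(new_config) for _ in old_workers]
        for worker in old_workers:
            worker.accepting = False
        self.retiring.extend(old_workers)

    def reap(self):
        """Forget retiring workers that have finished their pending requests."""
        self.retiring = [w for w in self.retiring if not w.has_exited()]
```

At every point in the simulation there is a set of workers willing to accept requests, which is the property that keeps the service uninterrupted.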

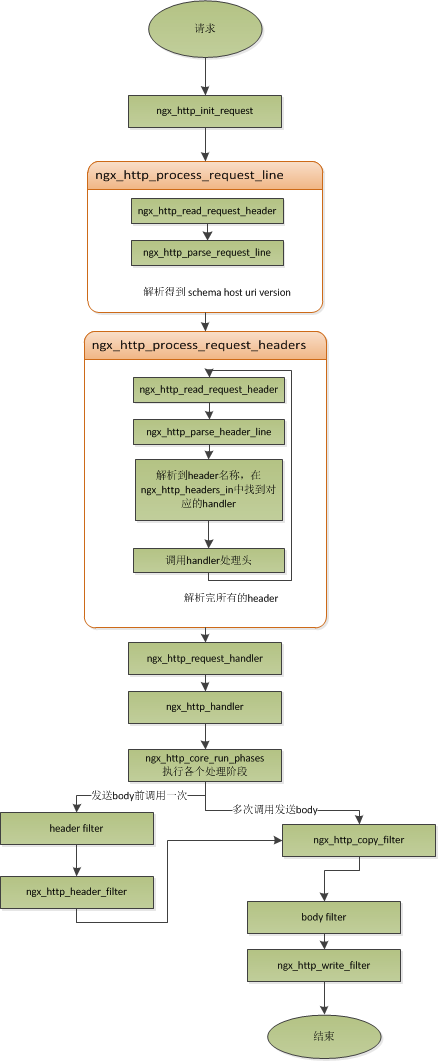

Third, how Nginx handles client requests

First, let's explain the architecture diagram above. Every worker process is forked from the master process. The master first sets up the listening socket and then forks the worker processes. The listenfd of every worker (listenfd is the file descriptor of the listening socket, on which client connections arrive) becomes readable when a new connection arrives. To ensure that only one process handles the connection, all workers compete for accept_mutex before registering the listenfd read event. The process that obtains the mutex registers the read event and calls accept in the read event handler to accept the connection.

The Nginx worker processes are peers, and each has the same chance of processing a request. When Nginx is listening on port 80 and a client connection arrives, any worker may end up handling it; this is the competition to register the listenfd read event described above. Once a worker has accepted the connection, it reads the request, parses it, processes it, generates the response data, returns it to the client, and finally closes the connection. That is the complete life of a request. Note that a request is handled entirely by one worker process, and only within that worker process.
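
The competition for the accept mutex can be sketched as follows. This is a simplified model under loud assumptions: threads stand in for nginx worker processes, a plain list stands in for the listening socket's backlog, and the function name `run_workers` is hypothetical.

```python
import threading


def run_workers(num_workers, connections):
    """Simulate workers competing for an accept mutex.

    Returns a dict mapping each connection to the list of worker ids that
    handled it. The lists should all have exactly one entry.
    """
    backlog = list(connections)          # stand-in for pending connections
    accept_mutex = threading.Lock()
    handled = {}
    handled_lock = threading.Lock()

    def worker(worker_id):
        while True:
            # Only the worker holding the mutex may "accept" a connection.
            with accept_mutex:
                if not backlog:
                    return               # nothing left to accept
                conn = backlog.pop(0)
            # The request is then processed entirely by this one worker,
            # outside the mutex, so other workers can accept meanwhile.
            with handled_lock:
                handled.setdefault(conn, []).append(worker_id)

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(num_workers)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return handled
```

Which worker ends up with which connection varies from run to run, but each connection is always handled by exactly one worker.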

The following two flow charts are a good aid to understanding this.

Fourth, how Nginx handles events and high concurrency

Internally, Nginx processes requests in an asynchronous, non-blocking way, which is what lets it handle thousands of requests at the same time.

Asynchronous: when a network request is issued and we do not depend on its result to carry out the follow-up work, the request is an asynchronous operation; that is, the caller can go on with subsequent operations before it has the result. Non-blocking: when the current process or thread has not yet received the result of a call, its subsequent operations are not held up. As you can see, asynchronous and non-blocking describe different things: the former describes the operation as seen by its caller, the latter the process or thread that makes the call.

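Back to Nginx. When a request comes in, a connection is established first, then data is received, and after that data is sent. At the system level these are read and write events, and when a read or write event is not ready, the operation cannot proceed. Without non-blocking calls you have to make blocking calls: if the event is not ready you can only wait, and continue once it is. A blocking call waits inside the kernel, and the CPU is handed over to someone else. For a single-threaded worker this is clearly unsuitable: the more network events there are, the more everyone is waiting, the CPU sits idle, CPU utilization cannot go up, and high concurrency is out of the question.

With a non-blocking call, if the event is not ready the call returns EAGAIN immediately, telling the caller that the event is not ready yet and to come back later. A little later you check the event again, and so on until it is ready; in the meantime Nginx can handle the read and write events of other callers. But although nothing blocks any more, Nginx has to come back from time to time to check the state of the event. It can handle more requests, but the overhead this brings is not small.

That is why asynchronous, non-blocking event handling exists, which at the system-call level means calls such as select/poll/epoll/kqueue. They provide a mechanism for monitoring many events at once. Taking epoll as an example: when an event is not ready it is put into epoll; when it becomes ready, Nginx does the read or write; and when the read or write returns EAGAIN, the event is added to epoll again. This way, whenever any event is ready Nginx can handle it, and only when no event at all is ready does it wait inside epoll. This achieves what is called concurrent request handling. There is only one thread, though, so only one request can be processed at any given instant; the worker simply keeps switching between requests, and it switches voluntarily, because an asynchronous event is not ready. This switching costs essentially nothing. You can think of it as looping over the events that are ready, which is in fact what happens.

Compared with multithreading, this way of handling events has big advantages: no threads need to be created, each request uses very little memory, there is no context switching, and event handling is very lightweight. However many concurrent connections there are, no resources are wasted needlessly on context switches; more concurrency only uses more memory. Most network servers today work this way, and it is the main reason for nginx's high performance.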
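
The event loop described above can be sketched with Python's standard `selectors` module, which picks epoll on Linux and kqueue on BSD and macOS. This is a minimal illustration, not nginx's implementation: the function names `serve_upper` and `demo` are hypothetical, socket pairs stand in for client connections, and the "request handling" is just upper-casing the received bytes.

```python
import selectors
import socket


def serve_upper(server_socks, expected_messages):
    """Single-threaded event loop: reply to each message in upper case."""
    sel = selectors.DefaultSelector()
    for sock in server_socks:
        sock.setblocking(False)                  # reads never block the loop
        sel.register(sock, selectors.EVENT_READ)

    handled = 0
    while handled < expected_messages and sel.get_map():
        # The loop sleeps here only when no registered socket is ready.
        for key, _mask in sel.select():
            sock = key.fileobj
            try:
                data = sock.recv(4096)
            except BlockingIOError:
                continue                         # EAGAIN: wait for the next event
            if not data:
                sel.unregister(sock)             # peer closed the connection
                continue
            sock.sendall(data.upper())
            handled += 1
    sel.close()
    return handled


def demo():
    """Three 'clients' send one request each; one thread serves them all."""
    pairs = [socket.socketpair() for _ in range(3)]
    clients = [client for client, _ in pairs]
    servers = [server for _, server in pairs]
    for i, client in enumerate(clients):
        client.sendall(f"request {i}".encode())
    serve_upper(servers, expected_messages=len(clients))
    replies = [client.recv(4096) for client in clients]
    for client, server in pairs:
        client.close()
        server.close()
    return replies
```

The single thread never waits on one particular connection; it only waits in `select()` when nothing at all is ready, which is the behaviour the paragraph above attributes to epoll.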

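Fifth, Nginx load-balancing algorithms and parameters

round robin (default): requests are handed to the backend servers in turn. This suits backends whose machines have the same performance. If a server goes down, it is automatically removed from the list of servers.

weight: requests are distributed to the servers according to their weights, which you can think of as proportional distribution: higher-performance servers get more requests and weaker ones fewer.

ip_hash: requests are sent to a backend server according to a hash of the requester's IP address. This guarantees that requests from the same IP are always forwarded to the same server, which solves the session problem.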

    upstream localhost {
        ip_hash;
        server 127.0.0.1:8080;
        server 127.0.0.1:8081;
    }
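
To make the three methods concrete, here is a small sketch of each. It is illustrative only and rests on assumptions: the function names are hypothetical, the weighted version uses a "smooth" weighted round robin chosen for illustration, and the hash in `ip_hash` is CRC32 rather than whatever nginx uses internally. The nginx documentation does describe using the first three octets of an IPv4 address as the hashing key, which the sketch mirrors.

```python
import itertools
import zlib


def round_robin(servers):
    """Yield the servers in turn, forever."""
    return itertools.cycle(servers)


def weighted_round_robin(weights):
    """Yield servers in proportion to their weights (smooth variant).

    `weights` maps a server address to its integer weight.
    """
    current = {server: 0 for server in weights}
    total = sum(weights.values())
    while True:
        # Every server gains its weight; the current leader is chosen
        # and then pushed back by the total weight.
        for server, weight in weights.items():
            current[server] += weight
        chosen = max(current, key=current.get)
        current[chosen] -= total
        yield chosen


def ip_hash(client_ip, servers):
    """Map a client IPv4 address to one fixed server."""
    key = ".".join(client_ip.split(".")[:3])     # first three octets
    return servers[zlib.crc32(key.encode()) % len(servers)]
```

With weights of 2 and 1, the weighted generator picks the heavier server twice in every three requests, and `ip_hash` returns the same server every time it sees the same client address.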

Those are the three most basic algorithms. We can also tune the load balancing ourselves by setting parameters on the servers:

    upstream localhost {
        # ip_hash is left out here: nginx does not allow the backup
        # parameter together with the ip_hash method
        server 127.0.0.1:9090 down;
        server 127.0.0.1:8080 weight=2;
        server 127.0.0.1:6060;
        server 127.0.0.1:7070 backup;
    }

The parameters are as follows:

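| Parameter | Description |
| --- | --- |
| down | The server temporarily takes no part in load balancing |
| weight | Defaults to 1. The larger the weight, the larger the share of the load |
| max_fails | Number of failed requests allowed; defaults to 1. Once the limit is exceeded, the error defined by the proxy_next_upstream directive is returned |
| fail_timeout | How long the server is suspended after max_fails failures |
| backup | Receives requests only when all the non-backup machines are down or busy, so this machine carries the lightest load |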
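
How these parameters interact when a server is chosen can be sketched as below. This is a simplified model, not nginx's code: the `Server` class and the `report_failure`, `available` and `candidates` helpers are hypothetical, time is passed in explicitly as a number of seconds, and failures are counted without the time window nginx applies. The defaults of 1 for `max_fails` and 10 seconds for `fail_timeout` follow the nginx documentation.

```python
from dataclasses import dataclass


@dataclass
class Server:
    addr: str
    weight: int = 1              # used by the balancing method, not by this filter
    max_fails: int = 1
    fail_timeout: float = 10.0   # seconds
    down: bool = False
    backup: bool = False
    fails: int = 0               # failures counted so far
    blocked_until: float = 0.0   # time until which the server is skipped


def report_failure(server, now):
    """Record a failed request; suspend the server once max_fails is reached."""
    server.fails += 1
    if server.fails >= server.max_fails:
        server.blocked_until = now + server.fail_timeout
        server.fails = 0


def available(server, now):
    """A server is usable if it is not marked down and not suspended."""
    return not server.down and now >= server.blocked_until


def candidates(servers, now):
    """Primary servers if any are usable, otherwise the backup servers."""
    primary = [s for s in servers if not s.backup and available(s, now)]
    if primary:
        return primary
    return [s for s in servers if s.backup and available(s, now)]
```

Applied to the four servers in the configuration above, the candidates are initially the two primary servers on ports 8080 and 6060; the backup on port 7070 is used only while both of them are suspended, and the server marked down is never chosen.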
