1. Evaluating classification models

sklearn offers three ways to assess the quality of a predictive model:

1) the `score` method that every estimator provides;

2) the evaluation tools in the cross-validation module;

3) the functions in the metrics module.
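The three approaches can be compared side by side. The following is only a minimal sketch: the iris dataset, `LogisticRegression`, the 70/30 split and `cv=5` are assumptions chosen for illustration and do not come from the original post. In current sklearn versions the cross-validation tools live in `sklearn.model_selection`.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import cross_val_score, train_test_split

# Illustrative data and model (assumptions, not from the original post).
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y
)
clf = LogisticRegression(max_iter=1000).fit(X_train, y_train)

# 1) The estimator's own score method (mean accuracy for classifiers).
score_acc = clf.score(X_test, y_test)

# 2) Cross-validation: one accuracy value per fold.
cv_scores = cross_val_score(LogisticRegression(max_iter=1000), X, y, cv=5)

# 3) A function from the metrics module, applied to explicit predictions.
metric_acc = accuracy_score(y_test, clf.predict(X_test))

print("score method:   %.2f" % score_acc)
print("cross-val mean: %.2f" % cv_scores.mean())
print("accuracy_score: %.2f" % metric_acc)
```

For a classifier, approaches 1) and 3) return the same number here, because `score` reports mean accuracy on the data it is given. The cross-validation mean usually differs, since it averages over several train/test splits.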

The metrics module API is shown below; for the other two approaches, see the References.

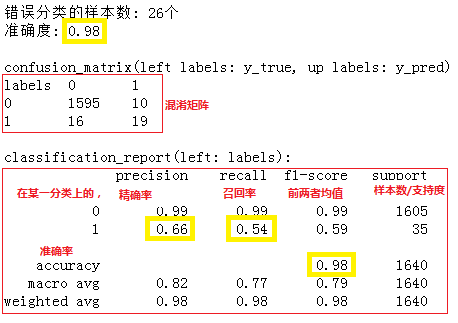

```python
from sklearn.metrics import accuracy_score, classification_report, confusion_matrix

# Predict on the test set (clf, X_test and y_test are assumed to be defined already)
y_predict = clf.predict(X_test)

# ---- Print the number of misclassified samples and the accuracy
print('Number of misclassified samples: %d' % (y_test != y_predict).sum())
print('Accuracy: %.2f' % accuracy_score(y_test, y_predict))
print()

# ---- Print the confusion matrix of the classification results
def my_confusion_matrix(y_true, y_pred):
    labels = sorted(set(y_true))  # sorted so the row/column order is deterministic
    conf_mat = confusion_matrix(y_true, y_pred, labels=labels)
    print("confusion_matrix(left labels: y_true, up labels: y_pred)")
    print("labels", '\t', end='')
    for i in range(len(labels)):
        print(labels[i], '\t', end='')
    print()
    for i in range(len(conf_mat)):
        print(labels[i], '\t', end='')  # row header is the label, not the row index
        for j in range(len(conf_mat[i])):
            print(conf_mat[i][j], '\t', end='')
        print()

my_confusion_matrix(y_test, y_predict)
print()

# ---- Print the classification report
print("classification_report(left: labels):")
print(classification_report(y_test, y_predict))
```
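To see how the confusion matrix relates to the per-class precision and recall columns of the classification report, the values can be recomputed by hand. This is a small sketch; the label vectors below are made up purely for illustration.

```python
import numpy as np
from sklearn.metrics import confusion_matrix, precision_score, recall_score

# Hypothetical labels, chosen only for illustration.
y_true = [0, 0, 0, 1, 1, 1, 2, 2, 2, 2]
y_pred = [0, 0, 1, 1, 1, 1, 2, 2, 0, 0]

labels = [0, 1, 2]
cm = confusion_matrix(y_true, y_pred, labels=labels)

# Rows are true labels, columns are predicted labels.
true_pos = np.diag(cm)
precision = true_pos / cm.sum(axis=0)  # column sums: samples predicted as each class
recall = true_pos / cm.sum(axis=1)     # row sums: samples that truly belong to each class

print(cm)
print("precision:", precision.round(2))
print("recall:   ", recall.round(2))
```

The hand-computed arrays match `precision_score(y_true, y_pred, average=None)` and `recall_score(y_true, y_pred, average=None)`, which are the same per-class quantities that `classification_report` prints.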

References:

Performance metrics in machine learning and model evaluation in sklearn

https://blog.csdn.net/sinat_36972314/article/details/82734370