11

- Sending requests manually
  - yield scrapy.Request(url, callback) / scrapy.FormRequest(url, callback, formdata)
- Depth crawling / whole-site data crawling
- Passing parameters between requests
  - Use case: the data to be scraped is not all on one page (depth crawling)
  - yield scrapy.Request(url, callback, meta={}) / scrapy.FormRequest(url, callback, formdata, meta={})
  - item = response.meta['item']
- start_requests(self)
  - Role: sends a GET request for each element of the start_urls list
- Core components
- PyExecJS: runs JavaScript programs from Python
  - Requires a JS runtime to be installed
    - e.g. the Node.js environment
- JS obfuscation
- JS encryption
- robots (robots.txt)
- UA spoofing
- Proxies
- Cookies
- Dynamic request parameters
- Verification codes (CAPTCHAs)
- Lazy-loaded images
- Dynamically loaded page data

- Downloader middleware
  - Role: intercepts, in bulk, every request and response issued anywhere in the project
  - Why intercept requests?
    - UA spoofing
      - process_request: request.headers['User-Agent'] = xxx
    - Setting a proxy IP
      - process_exception: request.meta['proxy'] = 'http://ip:port'
  - Why intercept responses?
    - To modify the response data / replace the response object
  - Note: the middleware must be enabled manually in the settings file
- NetEase News crawl
  - Requirement: crawl the headlines and article content of five NetEase News sections (domestic, international, military, aviation, drones) and store them in a database table with four fields (title, content, keys, type)
  - Analysis:
    1. The headline data under each section is loaded dynamically
    2. The data on each news detail page is not loaded dynamically
  - Using selenium in scrapy
    - Instantiate a browser object in the constructor of the spider file
    - Override closed(self, reason) in the spider file and close the browser object there
    - In process_response of the downloader middleware, get the browser object and perform the browser automation steps
- Whole-site crawling with CrawlSpider
  - CrawlSpider is another kind of spider; it is a subclass of Spider
  - Create a CrawlSpider-based spider file:
    - scrapy genspider -t crawl spiderName www.xxx.com
- Distributed crawling
  - Concept: set up a cluster of machines and have them jointly crawl the same set of data
  - Role: improves crawling efficiency
  - How is it implemented?
    - scrapy + redis: scrapy combined with the scrapy-redis component
  - Native scrapy cannot be distributed on its own
    - The scheduler cannot be shared by the cluster
    - The pipeline cannot be shared by the cluster
  - What does scrapy-redis do?
    - It gives native scrapy a scheduler and a pipeline that can be shared
  - Why is the component called scrapy-redis?
    - The data crawled by the cluster must be stored in redis
  - Coding process:
    - pip install scrapy-redis
    - Create a spider file (CrawlSpider / Spider)
    - Modify the spider file:
      - Import the spider class provided by scrapy-redis
        - from scrapy_redis.spiders import RedisCrawlSpider
      - Set the spider's parent class to RedisCrawlSpider
      - Delete allowed_domains and start_urls
      - Add a new attribute: redis_key = 'XXX'  # name of the shared scheduler queue
    - Configure the settings file:
      - Specify the pipeline
        - ITEM_PIPELINES = {'scrapy_redis.pipelines.RedisPipeline': 400}
      - Specify the scheduler
        - DUPEFILTER_CLASS = "scrapy_redis.dupefilter.RFPDupeFilter"
        - SCHEDULER = "scrapy_redis.scheduler.Scheduler"
        - SCHEDULER_PERSIST = True
      - Specify the database
        - REDIS_HOST = 'IP address of the redis service'
        - REDIS_PORT = 6379
    - Configure the redis config file redis.windows.conf:
      - Line 56, comment out the bind: #bind 127.0.0.1
      - Turn off protected mode: protected-mode no
    - Start the redis services:
      - redis-server ./redis.windows.conf
      - redis-cli
    - Run the program: go to the directory containing the spider file and run: scrapy runspider xxx.py
    - Push a start URL into the scheduler queue:
      - The name of the scheduler queue is the value of redis_key
      - In redis-cli: lpush <queue name> www.xxx.com
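The two request-side hooks from the downloader-middleware notes above (process_request for UA spoofing, process_exception for switching to a proxy) can be sketched roughly as follows. This is a minimal illustration, not the original project's middleware: the class name RandomUAProxyMiddleware, the USER_AGENTS and PROXIES pools, and the proxy address are all hypothetical. The class does not import scrapy, so a stand-in request object is used to exercise it here.

```python
import random
from types import SimpleNamespace

# Hypothetical pools (assumptions for illustration); replace with real values.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/76.0.3809.87 Safari/537.36",
    "Mozilla/5.0 (X11; Linux x86_64; rv:68.0) Gecko/20100101 Firefox/68.0",
]
PROXIES = ["http://127.0.0.1:8888"]  # placeholder address


class RandomUAProxyMiddleware:
    """Downloader-middleware sketch: UA spoofing plus proxy on failure."""

    def process_request(self, request, spider):
        # Called for every outgoing request: set a spoofed User-Agent.
        request.headers["User-Agent"] = random.choice(USER_AGENTS)
        return None  # None lets the request continue through the chain

    def process_exception(self, request, exception, spider):
        # Called when the download raised: attach a proxy and retry.
        request.meta["proxy"] = random.choice(PROXIES)
        # Mark the retry so the duplicate filter does not drop it.
        request.dont_filter = True
        return request  # returning the request re-schedules it


# Stand-in for a scrapy Request, just enough to exercise the hooks.
fake_request = SimpleNamespace(headers={}, meta={})
middleware = RandomUAProxyMiddleware()
first_result = middleware.process_request(fake_request, spider=None)
retried = middleware.process_exception(fake_request, Exception("timeout"), spider=None)
```

As the notes say, a class like this only takes effect once it is registered under DOWNLOADER_MIDDLEWARES in the settings file.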

Distributed crawling

1. pip install scrapy-redis

2. Create a spider file

3. Modify the spider file

- Import the spider class provided by scrapy-redis

- from scrapy_redis.spiders import RedisCrawlSpider (example only)

- Set the spider's parent class to RedisCrawlSpider

- Delete allowed_domains and start_urls

- Add a new attribute: redis_key = 'XXX'  # name of the shared scheduler queue

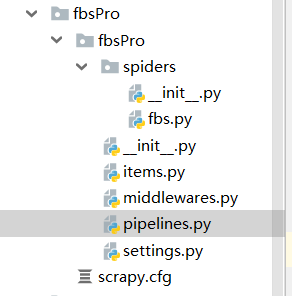

fbs.py

    # -*- coding: utf-8 -*-
    import scrapy
    from scrapy.linkextractors import LinkExtractor
    from scrapy.spiders import CrawlSpider, Rule
    from scrapy_redis.spiders import RedisCrawlSpider
    from fbsPro.items import FbsproItem


    class FbsSpider(RedisCrawlSpider):
        name = 'fbs'
        # allowed_domains = ['www.xxx.com']
        # start_urls = ['http://www.xxx.com/']

        # Name of the shared scheduler queue
        redis_key = 'sunQueue'

        rules = (
            Rule(LinkExtractor(allow=r'type=4&page=\d+'), callback='parse_item', follow=True),
        )

        def parse_item(self, response):
            tr_list = response.xpath('//*[@id="morelist"]/div/table[2]//tr/td/table//tr')
            for tr in tr_list:
                title = tr.xpath('./td[2]/a[2]/text()').extract_first()
                item = FbsproItem()
                item['title'] = title
                yield item

Configuration setting

- Specify the pipeline:

      ITEM_PIPELINES = {
          'scrapy_redis.pipelines.RedisPipeline': 400
      }

- Specify the scheduler:

      DUPEFILTER_CLASS = "scrapy_redis.dupefilter.RFPDupeFilter"
      SCHEDULER = "scrapy_redis.scheduler.Scheduler"
      SCHEDULER_PERSIST = True

- Specify the database:

      REDIS_HOST = 'redis service ip address'

    BOT_NAME = 'fbsPro'

    SPIDER_MODULES = ['fbsPro.spiders']
    NEWSPIDER_MODULE = 'fbsPro.spiders'

    USER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/76.0.3809.87 Safari/537.36'

    # Crawl responsibly by identifying yourself (and your website) on the user-agent
    #USER_AGENT = 'fbsPro (+http://www.yourdomain.com)'

    # Obey robots.txt rules
    ROBOTSTXT_OBEY = False

    # Configure maximum concurrent requests performed by Scrapy (default: 16)
    CONCURRENT_REQUESTS = 2

    # Configure a delay for requests for the same website (default: 0)
    # See https://docs.scrapy.org/en/latest/topics/settings.html#download-delay
    # See also autothrottle settings and docs
    #DOWNLOAD_DELAY = 3
    # The download delay setting will honor only one of:
    #CONCURRENT_REQUESTS_PER_DOMAIN = 16
    #CONCURRENT_REQUESTS_PER_IP = 16

    # Disable cookies (enabled by default)
    #COOKIES_ENABLED = False

    # Disable Telnet Console (enabled by default)
    #TELNETCONSOLE_ENABLED = False

    # Override the default request headers:
    #DEFAULT_REQUEST_HEADERS = {
    #   'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
    #   'Accept-Language': 'en',
    #}

    # Enable or disable spider middlewares
    # See https://docs.scrapy.org/en/latest/topics/spider-middleware.html
    #SPIDER_MIDDLEWARES = {
    #    'fbsPro.middlewares.FbsproSpiderMiddleware': 543,
    #}

    # Enable or disable downloader middlewares
    # See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html
    #DOWNLOADER_MIDDLEWARES = {
    #    'fbsPro.middlewares.FbsproDownloaderMiddleware': 543,
    #}

    # Enable or disable extensions
    # See https://docs.scrapy.org/en/latest/topics/extensions.html
    #EXTENSIONS = {
    #    'scrapy.extensions.telnet.TelnetConsole': None,
    #}

    # Configure item pipelines
    # See https://docs.scrapy.org/en/latest/topics/item-pipeline.html
    #ITEM_PIPELINES = {
    #    'fbsPro.pipelines.FbsproPipeline': 300,
    #}

    # Enable and configure the AutoThrottle extension (disabled by default)
    # See https://docs.scrapy.org/en/latest/topics/autothrottle.html
    #AUTOTHROTTLE_ENABLED = True
    # The initial download delay
    #AUTOTHROTTLE_START_DELAY = 5
    # The maximum download delay to be set in case of high latencies
    #AUTOTHROTTLE_MAX_DELAY = 60
    # The average number of requests Scrapy should be sending in parallel to
    # each remote server
    #AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
    # Enable showing throttling stats for every response received:
    #AUTOTHROTTLE_DEBUG = False

    # Enable and configure HTTP caching (disabled by default)
    # See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
    #HTTPCACHE_ENABLED = True
    #HTTPCACHE_EXPIRATION_SECS = 0
    #HTTPCACHE_DIR = 'httpcache'
    #HTTPCACHE_IGNORE_HTTP_CODES = []
    #HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

    ITEM_PIPELINES = {
        'scrapy_redis.pipelines.RedisPipeline': 400
    }

    # Deduplication filter class: request fingerprints are stored in a Redis set,
    # so request deduplication is persistent
    DUPEFILTER_CLASS = "scrapy_redis.dupefilter.RFPDupeFilter"
    # Use the scheduler provided by the scrapy-redis component
    SCHEDULER = "scrapy_redis.scheduler.Scheduler"
    # Whether the scheduler persists its state. If True, the request queue and the
    # dedup fingerprint set in Redis are kept when the crawl ends; otherwise they
    # are cleared
    SCHEDULER_PERSIST = True

    REDIS_HOST = '192.168.16.53'
    REDIS_PORT = 6379
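The idea behind the dedup filter configured above, a shared set of request fingerprints that every node checks before scheduling a request, can be illustrated with a simplified in-memory sketch. This is not scrapy-redis's actual implementation: the fingerprint function below is a deliberately simple assumption (a SHA-1 over method, URL and body), the names fingerprint and SetDupeFilter are hypothetical, and a Python set stands in for the Redis set.

```python
import hashlib


def fingerprint(method: str, url: str, body: bytes = b"") -> str:
    """Simplified request fingerprint: SHA-1 over method, URL and body."""
    digest = hashlib.sha1()
    digest.update(method.upper().encode("utf-8"))
    digest.update(url.encode("utf-8"))
    digest.update(body)
    return digest.hexdigest()


class SetDupeFilter:
    """In-memory stand-in for a fingerprint set shared through Redis."""

    def __init__(self):
        self.seen = set()

    def request_seen(self, method: str, url: str, body: bytes = b"") -> bool:
        # Return True if an identical request was already recorded;
        # otherwise record its fingerprint and return False.
        fp = fingerprint(method, url, body)
        if fp in self.seen:
            return True
        self.seen.add(fp)
        return False


dupefilter = SetDupeFilter()
first = dupefilter.request_seen("GET", "http://www.xxx.com/?type=4&page=1")
second = dupefilter.request_seen("GET", "http://www.xxx.com/?type=4&page=1")
other = dupefilter.request_seen("GET", "http://www.xxx.com/?type=4&page=2")
```

Because the real set lives in Redis rather than in one process's memory, every machine in the cluster sees the same fingerprints, and with SCHEDULER_PERSIST = True they are kept after the crawl ends.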

- Configure the redis config file redis.windows.conf:

- Line 56, comment out the bind: #bind 127.0.0.1

- Turn off protected mode: protected-mode no

- Start the redis services:

- redis-server ./redis.windows.conf

- redis-cli

- Run the program: go to the directory containing the spider file and run: scrapy runspider xxx.py

- Push a start URL into the scheduler queue:

- The name of the scheduler queue is the value of redis_key

- In redis-cli: lpush <queue name> www.xxx.com
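The last step above, pushing a start URL into the scheduler queue, can also be done from Python instead of redis-cli. The sketch below is only an illustration under assumptions: seed_queue and FakeRedis are hypothetical names, and the fake client merely mimics the redis-py style lpush(name, *values) call so that the example runs without a redis server.

```python
def seed_queue(client, redis_key, urls):
    """Push start URLs onto the list that the spiders read from.

    `client` is any object exposing a redis-py style lpush(name, *values).
    Returns the length of the list after the push (0 if nothing was pushed).
    """
    urls = list(urls)
    if not urls:
        return 0
    return client.lpush(redis_key, *urls)


class FakeRedis:
    """Tiny in-memory stand-in for a redis client (assumption for the demo)."""

    def __init__(self):
        self.lists = {}

    def lpush(self, name, *values):
        items = self.lists.setdefault(name, [])
        for value in values:
            items.insert(0, value)  # LPUSH prepends each value in turn
        return len(items)


client = FakeRedis()
queue_length = seed_queue(client, "sunQueue", ["http://www.xxx.com/"])
```

With a real server, the fake client would be swapped for something like redis.Redis(host='192.168.16.53', port=6379) from the redis package, and the key must match the spider's redis_key ('sunQueue' in fbs.py).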

items.py file

    import scrapy


    class FbsproItem(scrapy.Item):
        # define the fields for your item here like:
        title = scrapy.Field()
        # pass