URL: https://www.bilibili.com/video/av50747658/ (video on Bilibili with Chinese subtitles)

Week 1

I. Introduction

1.1 Welcome

1.2 What is Machine Learning

1.3 Supervised Learning

1.4 Unsupervised Learning

II. Univariate Linear Regression

2.1 Model Representation

2.2 Cost Function

2.3 Cost Function Intuition I

2.4 Cost Function Intuition II

2.5 Gradient Descent

2.6 Gradient Descent Intuition

2.7 Gradient Descent for Linear Regression
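A minimal sketch of batch gradient descent for the one-variable hypothesis h(x) = θ0 + θ1·x, minimizing the squared-error cost J = (1/2m)·Σ(h(x_i) − y_i)². It is written in Python with NumPy rather than the course's Octave; the function name and the toy data are hypothetical. Note that θ0 and θ1 are updated simultaneously from the same error vector.

```python
import numpy as np

def gradient_descent(x, y, alpha=0.1, num_iters=1000):
    """Batch gradient descent for h(x) = theta0 + theta1 * x.

    Minimizes J = 1/(2m) * sum((h(x_i) - y_i)^2) over all m examples.
    """
    m = len(y)
    theta0, theta1 = 0.0, 0.0
    for _ in range(num_iters):
        error = theta0 + theta1 * x - y   # h(x_i) - y_i for every example
        grad0 = error.sum() / m           # dJ/dtheta0
        grad1 = (error * x).sum() / m     # dJ/dtheta1
        # Simultaneous update: both gradients come from the old thetas
        theta0, theta1 = theta0 - alpha * grad0, theta1 - alpha * grad1
    return theta0, theta1

x = np.array([1.0, 2.0, 3.0, 4.0])
y = 2.0 * x + 1.0                         # toy data generated from y = 2x + 1
theta0, theta1 = gradient_descent(x, y, alpha=0.1, num_iters=5000)
```

On this toy data the parameters converge to θ0 ≈ 1 and θ1 ≈ 2; a much larger α can make J diverge instead of decrease.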

2.8 What's Next

III. Linear Algebra Review

3.1 Matrices and Vectors

3.2 Addition and Scalar Multiplication

3.3 Matrix-Vector Multiplication

3.4 Matrix Multiplication

3-5 matrix multiplication feature

(1) does not apply commutative matrix multiplication

(2) matrix multiplication is associative

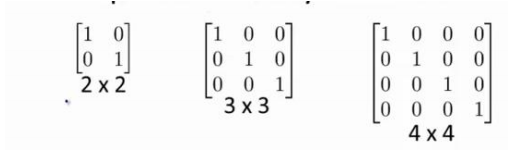

(3) is a diagonal matrix (a11, a22, a33 ...) is equal to the matrix 1
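The three properties can be checked numerically. A small NumPy sketch; the matrices are arbitrary examples chosen for illustration:

```python
import numpy as np

A = np.array([[1, 2], [3, 4]])
B = np.array([[0, 1], [1, 0]])
C = np.array([[2, 0], [0, 3]])
I = np.eye(2)  # 2 x 2 identity matrix: ones on the diagonal, zeros elsewhere

# (1) Not commutative: A @ B and B @ A differ for these matrices
print(np.array_equal(A @ B, B @ A))              # False
# (2) Associative: the grouping does not change the product
print(np.array_equal((A @ B) @ C, A @ (B @ C)))  # True
# (3) Multiplying by I leaves A unchanged on either side
print(np.array_equal(A @ I, A), np.array_equal(I @ A, A))  # True True
```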

3-6 Inverse and Transpose

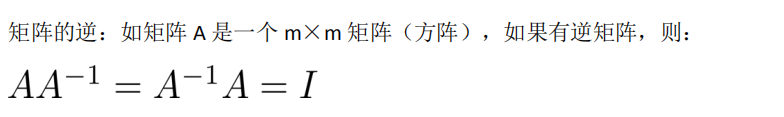

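(1) Inverse matrix

Only square (m × m) matrices can have an inverse, and not every square matrix has one. If A is invertible (non-singular), its inverse A^(-1) satisfies A A^(-1) = A^(-1) A = I,

where I is the identity matrix.

(2) Transpose

The transpose A^T swaps rows and columns: entry aij of A becomes entry aji of A^T.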
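A short NumPy sketch of both operations; the example matrices are arbitrary. `np.linalg.inv` raises `LinAlgError` for a singular matrix, which illustrates that being square is necessary but not sufficient for an inverse to exist.

```python
import numpy as np

A = np.array([[4.0, 7.0], [2.0, 6.0]])    # square, det = 4*6 - 7*2 = 10, so invertible
A_inv = np.linalg.inv(A)
print(np.allclose(A @ A_inv, np.eye(2)))   # True: A A^(-1) = I
print(np.allclose(A_inv @ A, np.eye(2)))   # True: A^(-1) A = I

S = np.array([[1.0, 2.0], [2.0, 4.0]])    # square but singular: det = 0
try:
    np.linalg.inv(S)
    s_invertible = True
except np.linalg.LinAlgError:
    s_invertible = False                   # no inverse exists
print(s_invertible)                        # False

M = np.array([[1, 2, 3], [4, 5, 6]])       # 2 x 3
M_T = M.T                                  # 3 x 2, with M_T[j, i] == M[i, j]
print(M_T)
```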

Week 2

IV. Multivariate Linear Regression

4-1 Multiple Features

When a prediction depends on several features, we use multivariate (multiple) linear regression. In vector form, the hypothesis is:

h_θ(x) = θ^T x = θ_0 x_0 + θ_1 x_1 + ... + θ_n x_n, where x_0 = 1
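A vectorized version of this hypothesis, sketched in Python/NumPy under the assumption that each row of X is one training example without the x_0 column; the function name and the numbers are illustrative.

```python
import numpy as np

def hypothesis(theta, X):
    """h_theta(x) = theta^T x for every row of X.

    X is m x n (one example per row, without x0); a column of ones is
    prepended so that theta[0] acts as the intercept term.
    """
    m = X.shape[0]
    X_b = np.hstack([np.ones((m, 1)), X])   # add x0 = 1 to every example
    return X_b @ theta                       # shape (m,)

theta = np.array([1.0, 2.0, 3.0])            # theta0, theta1, theta2
X = np.array([[1.0, 1.0], [2.0, 0.5]])       # two examples, two features
print(hypothesis(theta, X))                  # values 6.0 and 6.5
```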

4-2 Gradient Descent for Multiple Variables

4-3 Gradient Descent in Practice I: Feature Scaling
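Feature scaling is usually done by mean normalization: subtract each feature's mean and divide by its spread, so that all features have similar ranges and gradient descent converges faster. This sketch (Python/NumPy, hypothetical function name and toy data) divides by the standard deviation; dividing by the range max − min is the other common choice.

```python
import numpy as np

def feature_normalize(X):
    """Mean normalization: (x - mean) / std, computed per feature column."""
    mu = X.mean(axis=0)
    sigma = X.std(axis=0)
    return (X - mu) / sigma, mu, sigma

# Toy data: house size and number of bedrooms, on very different scales
X = np.array([[2104.0, 3.0], [1416.0, 2.0], [852.0, 2.0]])
X_norm, mu, sigma = feature_normalize(X)
```

New examples must be scaled with the same mu and sigma before prediction. A feature with zero variance would cause a division by zero and needs to be dropped or handled separately.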

4-4 Gradient Descent in Practice II: Learning Rate

4-5 Features and Polynomial Regression

4-6 Normal Equation
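The normal equation solves for θ in one step, θ = (X^T X)^(-1) X^T y, with no learning rate and no iterations. A NumPy sketch, assuming X already contains the x_0 = 1 column (function name and data are hypothetical). It uses the pseudo-inverse `np.linalg.pinv`, which still returns a solution when X^T X is non-invertible.

```python
import numpy as np

def normal_equation(X, y):
    """theta = (X^T X)^(-1) X^T y, computed with the pseudo-inverse.

    pinv also handles a non-invertible X^T X (redundant features,
    or more features than training examples).
    """
    return np.linalg.pinv(X.T @ X) @ X.T @ y

X = np.array([[1.0, 1.0], [1.0, 2.0], [1.0, 3.0]])   # columns: x0 = 1, x1
y = np.array([3.0, 5.0, 7.0])                         # toy data: y = 1 + 2 * x1
theta = normal_equation(X, y)
```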

4-7 Normal Equation and Non-invertibility (Optional)

V. Octave Tutorial

5-1 Basic Operations

In Octave, ~= is the logical "not equal to" operator (for example, 1 ~= 2 evaluates to 1, i.e. true).

5-2 Moving Data Around

5-3 Computing on Data

5-4 Plotting Data

5-5 Control Statements: for, while, and if

5-6 Vectorization (did not fully understand this one)

Week 3

VI. Logistic Regression

6-1 Classification

Logistic regression is a classification algorithm: it applies when the output y takes discrete values.

Binary classification: y takes one of two classes, 0 or 1.
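For binary classification the hypothesis is h_θ(x) = g(θ^T x), with the sigmoid g(z) = 1/(1 + e^(−z)), and the usual rule predicts y = 1 when h_θ(x) ≥ 0.5. A NumPy sketch; the function names and the parameter vector are illustrative.

```python
import numpy as np

def sigmoid(z):
    """g(z) = 1 / (1 + e^(-z)), mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def predict(theta, X):
    """Predict y = 1 when g(theta^T x) >= 0.5, otherwise y = 0.

    X is assumed to already include the x0 = 1 column.
    """
    return (sigmoid(X @ theta) >= 0.5).astype(int)

theta = np.array([-3.0, 1.0, 1.0])       # decision boundary: x1 + x2 = 3
X = np.array([[1.0, 1.0, 1.0],           # x1 + x2 = 2 -> predicted 0
              [1.0, 2.0, 2.0]])          # x1 + x2 = 4 -> predicted 1
print(predict(theta, X))                 # [0 1]
```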

6-2 Hypothesis Representation

6-3 Decision Boundary

6-4 Cost Function

6-5 Simplified Cost Function and Gradient Descent

6-6 Advanced Optimization

What this section asks you to implement: a function that returns the value of the cost function and its gradient. Given such a function, the advanced optimization algorithms can be applied to logistic regression, and even to linear regression; the only code you need to write is the code that computes these two quantities.
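A sketch of such a function for (unregularized) logistic regression, in Python/NumPy rather than Octave; the name `cost_function` and the toy data are hypothetical. It returns the pair (J, gradient) from a single call.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def cost_function(theta, X, y):
    """Logistic regression cost J(theta) and its gradient.

    X: m x (n+1) with a leading column of ones; y: vector of 0/1 labels.
    Returns (J, grad), the pair an advanced optimizer consumes.
    """
    m = len(y)
    h = sigmoid(X @ theta)
    J = -(y @ np.log(h) + (1 - y) @ np.log(1 - h)) / m
    grad = X.T @ (h - y) / m
    return J, grad

# Toy, non-separable data: one feature plus the x0 = 1 column
X = np.array([[1.0, 0.5], [1.0, 1.0], [1.0, 1.5],
              [1.0, 3.0], [1.0, 3.5], [1.0, 4.0]])
y = np.array([0.0, 0.0, 1.0, 0.0, 1.0, 1.0])
J0, grad0 = cost_function(np.zeros(2), X, y)   # with theta = 0, J0 = log(2)
```

In Python, a function with this (cost, gradient) return shape can be passed to `scipy.optimize.minimize(cost_function, initial_theta, args=(X, y), jac=True, method="BFGS")`, where `jac=True` tells SciPy that the objective also returns the gradient. This plays the role that `fminunc` plays in the course's Octave exercises.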

6-7 Multiclass Classification: One-vs-All

Here y can take more than two discrete class values.
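One-vs-all turns a K-class problem into K binary problems. Classifier k is trained to separate class k from all other classes, and a new example is assigned to the class whose classifier outputs the highest h_θ(x). A NumPy sketch using plain gradient descent for each classifier; the function names, α, iteration count and toy data are assumptions.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_one_vs_all(X, y, num_classes, alpha=0.5, num_iters=3000):
    """Fit one logistic regression classifier per class (class k vs. the rest).

    X includes the x0 = 1 column; y holds integer labels 0..num_classes-1.
    """
    m, n = X.shape
    all_theta = np.zeros((num_classes, n))
    for k in range(num_classes):
        y_k = (y == k).astype(float)   # 1 for class k, 0 for every other class
        theta = np.zeros(n)
        for _ in range(num_iters):
            theta -= alpha * X.T @ (sigmoid(X @ theta) - y_k) / m
        all_theta[k] = theta
    return all_theta

def predict_one_vs_all(all_theta, X):
    """Pick the class whose classifier gives the highest probability."""
    return np.argmax(sigmoid(X @ all_theta.T), axis=1)

X_raw = np.array([[0.0, 0.0], [0.5, 0.5],    # class 0
                  [5.0, 0.0], [5.5, 0.5],    # class 1
                  [0.0, 5.0], [0.5, 5.5]])   # class 2
X = np.hstack([np.ones((len(X_raw), 1)), X_raw])   # add x0 = 1
y = np.array([0, 0, 1, 1, 2, 2])
all_theta = train_one_vs_all(X, y, num_classes=3)
predictions = predict_one_vs_all(all_theta, X)
```

On this well-separated toy data the predictions should reproduce the training labels.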

VII. Regularization

7-1 The Problem of Overfitting

What is overfitting?

Regularization

7-2 Cost Function

7-3 Regularized Linear Regression

7-4 Regularized Logistic Regression
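A sketch of the regularized logistic regression cost and gradient in Python/NumPy. The parameter is named `lam` because `lambda` is a Python keyword; the other names and the toy data are hypothetical. By convention θ0 is not penalized. Regularized linear regression adds the same penalty term to its squared-error cost.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def cost_function_reg(theta, X, y, lam):
    """Regularized logistic regression cost and gradient.

    Adds lam/(2m) * sum(theta_j^2) for j >= 1 to the cost; theta[0]
    (the intercept) is excluded from both the penalty and its gradient term.
    """
    m = len(y)
    h = sigmoid(X @ theta)
    J = -(y @ np.log(h) + (1 - y) @ np.log(1 - h)) / m
    J += lam / (2 * m) * np.sum(theta[1:] ** 2)
    grad = X.T @ (h - y) / m
    grad[1:] += lam / m * theta[1:]
    return J, grad

X = np.array([[1.0, 0.5, 1.0], [1.0, 1.5, -0.5],
              [1.0, 3.0, 0.2], [1.0, 4.0, 1.5]])   # first column is x0 = 1
y = np.array([0.0, 1.0, 0.0, 1.0])
theta = np.array([0.2, -0.3, 0.4])
J, grad = cost_function_reg(theta, X, y, lam=1.0)
```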

---------------------------------------

Week 4

VIII. Neural Networks: Representation

8-1 Non-linear Hypotheses

8-2 Neurons and the Brain

8-3 Model Representation I

8-4 Model Representation II

8-5 Examples and Intuitions I

8-6 Examples and Intuitions II

8-7 Multiclass Classification

9-1 Cost Function

9-2 Backpropagation Algorithm

9-3 Backpropagation Intuition

9-4 Implementation Note: Unrolling Parameters

9-5 Gradient Checking

9-6 Random Initialization

9-7 Putting It Together

9-8 Autonomous Driving

10-1 Deciding What to Try Next

10-2 Evaluating a Hypothesis

10-3 Model Selection and Training/Validation/Test Sets

A common split: 60% training set, 20% cross-validation set, 20% test set.
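A sketch of that split in Python/NumPy. It shuffles first so that each subset is a random sample; the function name, seed and toy data are illustrative.

```python
import numpy as np

def split_60_20_20(X, y, seed=0):
    """Shuffle, then split into 60% training, 20% cross-validation, 20% test."""
    m = len(y)
    idx = np.random.default_rng(seed).permutation(m)
    n_train = m * 60 // 100
    n_cv = m * 20 // 100
    # Any rounding remainder ends up in the test set
    train, cv, test = np.split(idx, [n_train, n_train + n_cv])
    return (X[train], y[train]), (X[cv], y[cv]), (X[test], y[test])

X = np.arange(20).reshape(10, 2)   # 10 toy examples, 2 features each
y = np.arange(10)
(X_tr, y_tr), (X_cv, y_cv), (X_te, y_te) = split_60_20_20(X, y)
print(len(y_tr), len(y_cv), len(y_te))   # 6 2 2
```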

10-4 Diagnosing Bias vs. Variance

10-5 Regularization and Bias/Variance

10-6 Learning Curves

10-7 Deciding What to Do Next (Revisited)

11-1 Prioritizing What to Work On

11-2 Error Analysis

11-3 Error Metrics for Skewed Classes

11-4 Trading Off Precision and Recall

11-5 Data for Machine Learning

12-1 Optimization Objective

12-2 Large Margin Intuition

12-3 Mathematics Behind Large Margin Classification

12-4 Kernels I

12-5 Kernels II

12-6 Using an SVM

13-1 Unsupervised Learning

Clustering

13-2 K-Means Algorithm
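K-means alternates two steps: assign every example to its nearest centroid, then move each centroid to the mean of the examples assigned to it. A NumPy sketch with random initialization from K distinct training examples. The function name, iteration count, seed, and the choice to leave an empty cluster's centroid in place are assumptions; removing or reinitializing an empty cluster are common alternatives.

```python
import numpy as np

def k_means(X, K, num_iters=10, seed=0):
    """Plain K-means: repeat cluster assignment and centroid update.

    Centroids start at K distinct, randomly chosen training examples.
    Returns the cluster index of each example and the final centroids.
    """
    rng = np.random.default_rng(seed)
    centroids = X[rng.choice(len(X), size=K, replace=False)].astype(float)
    for _ in range(num_iters):
        # Cluster assignment: index of the closest centroid for every example
        dists = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        c = dists.argmin(axis=1)
        # Move centroids: mean of the examples assigned to each cluster
        for k in range(K):
            if np.any(c == k):   # keep the old centroid if the cluster is empty
                centroids[k] = X[c == k].mean(axis=0)
    return c, centroids

X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0],
              [10.0, 10.0], [10.0, 11.0], [11.0, 10.0]])
c, centroids = k_means(X, K=2)
```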

13-3 Optimization Objective

13-4 Random Initialization

13-5 Choosing the Number of Clusters

14-1 Motivation I: Data Compression

14-2 Motivation II: Visualization

14-3 Principal Component Analysis Problem Formulation I

14-4 Principal Component Analysis Problem Formulation II

14-5 Choosing the Number of Principal Components

14-6 Reconstruction from Compressed Representation

14-7 Advice for Applying PCA

15-1 Problem Motivation

15-2 Gaussian Distribution (Normal Distribution)

15-3 Algorithm

15-4 Developing and Evaluating an Anomaly Detection System

15-5 Anomaly Detection vs. Supervised Learning

When there are very few positive (anomalous) examples and a large number of negative (normal) examples, use anomaly detection: the algorithm can learn enough from the many negative examples to model what normal data looks like.

Conversely, when there are plenty of positive examples as well as negative ones, use supervised learning.
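The anomaly detection algorithm (15-3) fits a Gaussian to each feature using the normal (negative) examples, then flags x as anomalous when p(x) = Π_j N(x_j; μ_j, σ_j²) falls below a threshold ε. A NumPy sketch that treats features as independent (unlike the multivariate Gaussian of 15-7); the function names and data are hypothetical, and ε is an assumed value that in practice would be chosen on a cross-validation set.

```python
import numpy as np

def fit_gaussian(X):
    """Estimate mu_j and sigma_j^2 for each feature from normal examples."""
    return X.mean(axis=0), X.var(axis=0)

def p(x, mu, var):
    """p(x) = product over features of N(x_j; mu_j, sigma_j^2)."""
    dens = np.exp(-((x - mu) ** 2) / (2 * var)) / np.sqrt(2 * np.pi * var)
    return dens.prod(axis=-1)

def is_anomaly(x, mu, var, epsilon):
    """Flag x as anomalous when p(x) < epsilon."""
    return p(x, mu, var) < epsilon

X_train = np.array([[1.0, 1.0], [1.2, 0.9], [0.8, 1.1],
                    [1.1, 1.0], [0.9, 1.0]])   # normal examples only
mu, var = fit_gaussian(X_train)
epsilon = 1e-3                                 # hypothetical threshold
```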

15-6 Choosing What Features to Use

15-7 Multivariate Gaussian Distribution

15-8 Anomaly Detection Using the Multivariate Gaussian Distribution

16-1 Problem Formulation

Recommender systems

16-2 Content-Based Recommendations

16-3 Collaborative Filtering

16-4 Collaborative Filtering Algorithm

16-5 Vectorization: Low-Rank Matrix Factorization

16-6 Implementation Detail: Mean Normalization

17-1 Learning with Large Datasets

17-2 Stochastic Gradient Descent

17-3 Mini-Batch Gradient Descent

17-4 Stochastic Gradient Descent Convergence

17-5 Online Learning

17-6 Map-Reduce and Data Parallelism

18-1 Problem Description and the OCR Pipeline

Image recognition

18-2 Sliding Windows

Use a sliding window to run a pedestrian detector over the image and find the pedestrians in it.
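A sketch of the idea in Python/NumPy: slide a fixed-size window across the image with a given step and run a classifier on each patch. The `classifier` argument is a hypothetical stand-in for a trained pedestrian/non-pedestrian model. The course also repeats the scan with larger windows resized to the classifier's input size, which this single-scale sketch omits.

```python
import numpy as np

def sliding_windows(image, win_h, win_w, step):
    """Yield (top, left, patch) for every win_h x win_w window, `step` pixels apart."""
    H, W = image.shape[:2]
    for top in range(0, H - win_h + 1, step):
        for left in range(0, W - win_w + 1, step):
            yield top, left, image[top:top + win_h, left:left + win_w]

def detect(image, classifier, win_h, win_w, step):
    """Return the top-left corners of all windows the classifier accepts."""
    return [(top, left)
            for top, left, patch in sliding_windows(image, win_h, win_w, step)
            if classifier(patch)]

def is_bright(patch):
    """Hypothetical toy 'detector': fires on mostly bright patches."""
    return patch.mean() > 0.5

image = np.zeros((6, 8))
image[2:4, 4:6] = 1.0                     # one bright 2 x 2 "object"
hits = detect(image, is_bright, 2, 2, 2)  # [(2, 4)]
```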

18-3 Getting Lots of Data and Artificial Data

18-4 Ceiling Analysis: What Part of the Pipeline to Work on Next

19-1 Summary and Thank You