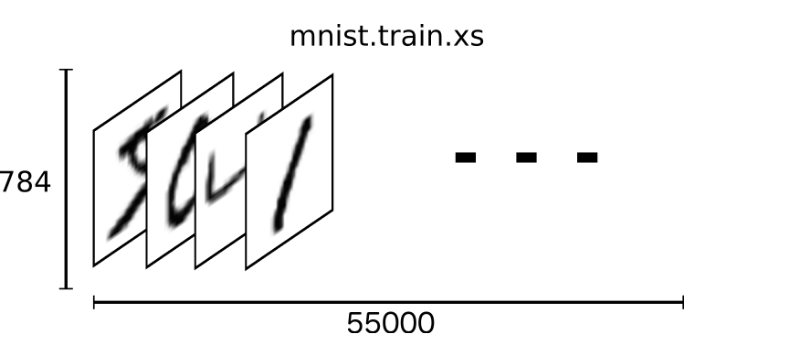

The MNIST dataset can be downloaded from the official website: http://yann.lecun.com/exdb/mnist/. It contains 60,000 training images and 10,000 test images. When it is loaded with TensorFlow's input_data helper, the training images are further split into a training set of 55,000 rows (mnist.train) and a validation set of 5,000 rows (mnist.validation), alongside the 10,000-row test set (mnist.test). Each MNIST example has two parts: an image of a handwritten digit and its corresponding label. We call the images "xs" and the labels "ys". Both the training set and the test set contain xs and ys. For example, the training images are mnist.train.images and the training labels are mnist.train.labels.
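
If you download the raw files from the site above instead of using TensorFlow's helper, they are stored in the IDX format: a big-endian header (two zero bytes, a type code of 0x08 for unsigned bytes, and the number of dimensions, which gives the magic numbers 2051 for image files and 2049 for label files), followed by one 32-bit size per dimension and then the raw bytes. The sketch below is a hypothetical helper, parse_idx, written under the assumption that the .gz files have already been decompressed; it is exercised on a tiny synthetic buffer rather than the real data.

```python
import struct


def parse_idx(data):
    """Decode an uncompressed IDX buffer of unsigned bytes.

    Returns (shape, values), where values is a flat list of ints.
    """
    # Header: two zero bytes, a type code, and the number of dimensions
    zero, dtype, ndim = struct.unpack(">HBB", data[:4])
    if zero != 0 or dtype != 0x08:
        raise ValueError("expected an IDX file of unsigned bytes")
    # One big-endian unsigned 32-bit size per dimension
    shape = struct.unpack(">" + "I" * ndim, data[4:4 + 4 * ndim])
    count = 1
    for size in shape:
        count *= size
    offset = 4 + 4 * ndim
    values = list(data[offset:offset + count])
    if len(values) != count:
        raise ValueError("file is truncated")
    return shape, values


# A synthetic "label file": magic number 2049, then 3 labels: 5, 0, 4
labels_bytes = struct.pack(">II", 2049, 3) + bytes([5, 0, 4])
shape, labels = parse_idx(labels_bytes)
print(shape, labels)  # (3,) [5, 0, 4]
```

For a real image file the returned shape would be (number of images, 28, 28).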

Each image is a grayscale picture of 28x28 pixels. We flatten this 2-D array into a vector of length 28x28 = 784. Thus, in the MNIST training set loaded this way, mnist.train.images is a tensor of shape [55000, 784] (the full official training set has 60,000 images).
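
Flattening simply reads the 28 rows of an image one after another. A minimal pure-Python sketch follows; the function name flatten is illustrative only, since TensorFlow's helper already returns the images in flattened form.

```python
def flatten(image):
    """Concatenate the rows of a 2-D image (list of lists) into one vector."""
    return [pixel for row in image for pixel in row]


# A 28x28 image whose pixel at (row r, column c) holds the value r * 28 + c
image = [[r * 28 + c for c in range(28)] for r in range(28)]
vector = flatten(image)
print(len(vector))                        # 784
print(vector[28 * 5 + 3] == image[5][3])  # True: pixel (5, 3) lands at index 143
```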

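Each MNIST image has a corresponding label, a number from 0 to 9 indicating which digit is drawn in the image. The labels use one-hot encoding: label n becomes a 10-dimensional vector that is 1 at index n and 0 everywhere else, so the label 3 becomes [0, 0, 0, 1, 0, 0, 0, 0, 0, 0].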
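
The encoding and its inverse can be sketched in plain Python. Passing one_hot=True to read_data_sets makes TensorFlow's helper produce these vectors for you, so the function names here are only illustrative. Note that decoding is just "index of the largest entry", the same argmax idea used later for evaluation.

```python
def one_hot(label, num_classes=10):
    """Return a list with 1.0 at index `label` and 0.0 elsewhere."""
    if not 0 <= label < num_classes:
        raise ValueError("label out of range")
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec


def from_one_hot(vec):
    """Recover the digit as the index of the largest entry."""
    return max(range(len(vec)), key=lambda i: vec[i])


print(one_hot(3))  # [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
```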

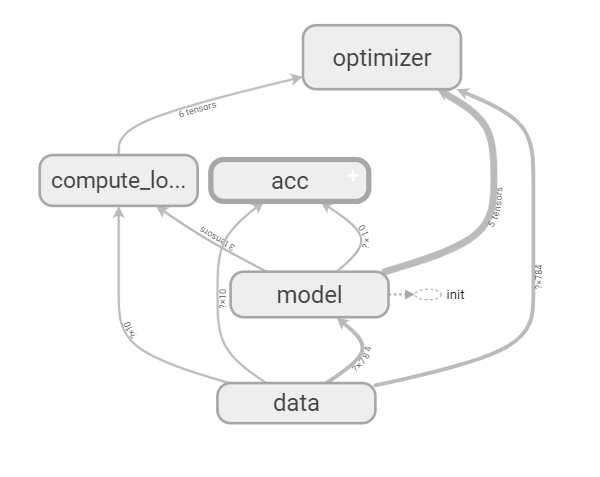

Single-layer (fully connected) handwritten digit recognition

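Step 1: Define the data placeholders: features of shape [None, 784] and targets of shape [None, 10]. None leaves the batch size open, so any number of samples can be fed at once.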

    with tf.variable_scope("data"):
        # Flattened 28x28 images, one row per sample
        x = tf.placeholder(tf.float32, [None, 784])
        # One-hot labels for the digits 0 to 9
        y_true = tf.placeholder(tf.float32, [None, 10])

Step 2: Build the model. Randomly initialize the weights w with shape [784, 10] and the bias b with shape [10], then compute y_predict = tf.matmul(x, w) + b.

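    with tf.variable_scope("model"):
        # Weights drawn from a standard normal distribution, bias initialized to 0
        w = tf.Variable(tf.random_normal([784, 10], mean=0.0, stddev=1.0))
        b = tf.Variable(tf.constant(0.0, shape=[10]))
        # Logits (unnormalized class scores), shape [None, 10]
        y_predict = tf.matmul(x, w) + b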
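
To see what tf.matmul(x, w) + b computes, here is the same affine map in plain Python, with small made-up sizes (2 samples, 3 features, 2 classes) standing in for [None, 784] x [784, 10] + [10]. The bias vector is added to every row, which TensorFlow does by broadcasting. The function name linear is illustrative only.

```python
def linear(x, w, b):
    """Compute x @ w + b for x: [n, d], w: [d, k], b: [k], giving [n, k]."""
    d, k = len(w), len(b)
    out = []
    for row in x:
        assert len(row) == d, "feature size must match the rows of w"
        # Each output entry is a dot product with one column of w, plus bias
        out.append([sum(row[i] * w[i][j] for i in range(d)) + b[j]
                    for j in range(k)])
    return out


x = [[1.0, 2.0, 3.0],
     [0.0, 1.0, 0.0]]   # 2 samples, 3 features
w = [[1.0, 0.0],
     [0.0, 1.0],
     [1.0, 1.0]]        # 3 features -> 2 classes
b = [0.5, 0.0]
print(linear(x, w, b))  # [[4.5, 5.0], [0.5, 1.0]]
```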

Step 3: Compute the loss, averaged over the samples in the batch.

    with tf.variable_scope("compute_loss"):
        # Softmax cross-entropy for each sample, then the mean over the batch
        loss = tf.reduce_mean(
            tf.nn.softmax_cross_entropy_with_logits(labels=y_true, logits=y_predict))
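
As a rough sketch of what this loss computes (not TensorFlow's actual implementation), the per-sample softmax cross-entropy is the negative sum over classes of label times log-softmax of the logits, and tf.reduce_mean then averages it over the batch. Subtracting the largest logit before exponentiating (the log-sum-exp trick) avoids overflow without changing the result. The function names are illustrative.

```python
import math


def softmax_cross_entropy(labels, logits):
    """Per-sample loss: -sum_j labels[j] * log(softmax(logits)[j])."""
    m = max(logits)
    # Stable log-sum-exp, so log(softmax(z)_j) = z_j - log_sum_exp
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return -sum(y * (z - log_sum_exp) for y, z in zip(labels, logits))


def mean_loss(labels_batch, logits_batch):
    """Average the per-sample losses, as tf.reduce_mean does."""
    losses = [softmax_cross_entropy(y, z)
              for y, z in zip(labels_batch, logits_batch)]
    return sum(losses) / len(losses)


# Two samples, 3 classes; equal logits give a loss of ln(3) whatever the label
labels = [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
logits = [[0.0, 0.0, 0.0], [1.0, 1.0, 1.0]]
print(round(mean_loss(labels, logits), 4))  # 1.0986
```

A confident, correct prediction drives the loss toward 0, while a uniform guess over 10 digits would cost ln(10), about 2.30.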

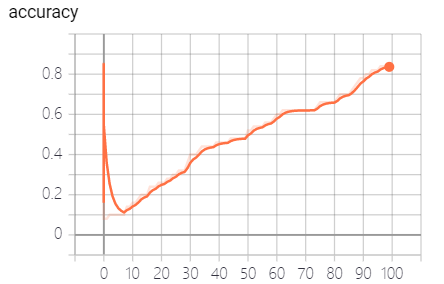

Step 4: Optimize with gradient descent (learning rate 0.1) for 2,000 steps, then compute the accuracy.

    with tf.variable_scope("optimizer"):
        # Plain gradient descent with learning rate 0.1, minimizing the mean loss
        train_op = tf.train.GradientDescentOptimizer(0.1).minimize(loss)
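
Each run of train_op applies w <- w - 0.1 * d(loss)/dw, and likewise for b. For softmax cross-entropy the gradient with respect to the logits is softmax(z) - y, so one update for this model can be written out by hand. The sketch below does so in plain Python on a made-up toy batch with 2 features and 2 classes (not MNIST); TensorFlow derives these gradients automatically, and the helper names are hypothetical.

```python
import math


def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def loss_and_grads(x, y, w, b):
    """Mean softmax cross-entropy and its gradients for logits = x @ w + b."""
    n, d, k = len(x), len(w), len(b)
    loss = 0.0
    gw = [[0.0] * k for _ in range(d)]
    gb = [0.0] * k
    for xi, yi in zip(x, y):
        z = [sum(xi[i] * w[i][j] for i in range(d)) + b[j] for j in range(k)]
        p = softmax(z)
        loss -= sum(t * math.log(q) for t, q in zip(yi, p) if t) / n
        # d(loss)/d(logits) = (softmax - one_hot) / n
        dz = [(q - t) / n for q, t in zip(p, yi)]
        for j in range(k):
            gb[j] += dz[j]
            for i in range(d):
                gw[i][j] += xi[i] * dz[j]
    return loss, gw, gb


def gradient_descent_step(x, y, w, b, learning_rate=0.1):
    """One update: parameter <- parameter - learning_rate * gradient."""
    loss, gw, gb = loss_and_grads(x, y, w, b)
    new_w = [[w[i][j] - learning_rate * gw[i][j] for j in range(len(b))]
             for i in range(len(w))]
    new_b = [b[j] - learning_rate * gb[j] for j in range(len(b))]
    return loss, new_w, new_b


# Toy data: class 0 when the first feature is large, class 1 otherwise
x = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [0.2, 0.8]]
y = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]
w = [[0.0, 0.0], [0.0, 0.0]]
b = [0.0, 0.0]
losses = []
for step in range(200):
    loss, w, b = gradient_descent_step(x, y, w, b)
    losses.append(loss)
print(losses[0] > losses[-1])  # True: the loss decreases
```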

Step 5: Evaluate the model using argmax() and reduce_mean.

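    with tf.variable_scope("acc"):
        # True where the predicted class (index of the largest logit) matches the label
        eq = tf.equal(tf.argmax(y_true, 1), tf.argmax(y_predict, 1))
        # Fraction of correct predictions
        accuracy = tf.reduce_mean(tf.cast(eq, tf.float32))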
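
The evaluation compares, row by row, the index of the largest entry (argmax) of the label and of the prediction, casts the resulting booleans to floats, and averages them. The same logic in plain Python, on made-up numbers:

```python
def argmax(row):
    """Index of the largest value; the first such index on ties."""
    return max(range(len(row)), key=lambda i: row[i])


def accuracy(y_true, y_predict):
    """Fraction of rows where argmax(label) == argmax(prediction)."""
    eq = [argmax(t) == argmax(p) for t, p in zip(y_true, y_predict)]
    # Cast booleans to floats and take the mean, like tf.cast + tf.reduce_mean
    return sum(float(e) for e in eq) / len(eq)


y_true = [[0, 1, 0], [1, 0, 0], [0, 0, 1], [0, 1, 0]]
y_predict = [[0.1, 2.0, -1.0],   # predicts 1, correct
             [0.3, 0.2, 0.1],    # predicts 0, correct
             [1.5, 0.0, 0.4],    # predicts 0, wrong
             [-0.2, 0.9, 0.5]]   # predicts 1, correct
print(accuracy(y_true, y_predict))  # 0.75
```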

Loading the MNIST dataset

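    import tensorflow as tf
    # Use the MNIST reader that ships with TensorFlow 1.x
    # (tensorflow.examples.tutorials is not included in TensorFlow 2)
    from tensorflow.examples.tutorials.mnist import input_data

    def full_connected():
        # Load the MNIST dataset with one-hot encoded labels
        mnist = input_data.read_data_sets("data/mnist/input_data", one_hot=True)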
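
The training loop itself is not shown in the post. In TensorFlow 1.x it would typically draw mini-batches with mnist.train.next_batch(batch_size) and feed them through sess.run(train_op, feed_dict={x: xs, y_true: ys}). The accuracies in the output are all multiples of 0.02, which would be consistent with measuring accuracy on batches of 50 samples, but the batch size is not stated, so treat that as a guess. As a rough stand-in for what next_batch provides, here is a hypothetical generator that reshuffles the data every epoch and yields fixed-size batches; it is a simplification, not TensorFlow's implementation.

```python
import random


def batches(images, labels, batch_size, seed=0):
    """Yield (xs, ys) mini-batches forever, reshuffling at each epoch.

    A simplified, hypothetical stand-in for mnist.train.next_batch;
    leftover samples that do not fill a whole batch are skipped.
    """
    if not 0 < batch_size <= len(images):
        raise ValueError("batch_size must be between 1 and the dataset size")
    rng = random.Random(seed)
    indices = list(range(len(images)))
    while True:
        rng.shuffle(indices)
        for start in range(0, len(indices) - batch_size + 1, batch_size):
            chosen = indices[start:start + batch_size]
            # Images and labels are picked with the same indices, so pairs stay aligned
            yield [images[i] for i in chosen], [labels[i] for i in chosen]


# Toy data: 10 "images" with matching labels
images = [[float(i)] for i in range(10)]
labels = [i % 3 for i in range(10)]
gen = batches(images, labels, batch_size=4)
xs, ys = next(gen)
print(len(xs), len(ys))  # 4 4
```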

Output:

    accuracy: 0.08
    accuracy: 0.08
    accuracy: 0.1
    accuracy: 0.1
    accuracy: 0.1
    accuracy: 0.1
    accuracy: 0.1
    accuracy: 0.1
    accuracy: 0.14
    accuracy: 0.14
    accuracy: 0.16
    accuracy: 0.16
    accuracy: 0.18
    accuracy: 0.2
    accuracy: 0.2
    accuracy: 0.2
    accuracy: 0.24
    accuracy: 0.24
    accuracy: 0.24
    accuracy: 0.26
    accuracy: 0.26
    accuracy: 0.26
    accuracy: 0.28
    accuracy: 0.28
    accuracy: 0.3
    accuracy: 0.3
    accuracy: 0.32
    accuracy: 0.32
    accuracy: 0.32
    accuracy: 0.36
    accuracy: 0.4
    accuracy: 0.4
    accuracy: 0.4
    accuracy: 0.42
    accuracy: 0.44
    accuracy: 0.44
    accuracy: 0.44
    accuracy: 0.44
    accuracy: 0.44
    accuracy: 0.46
    accuracy: 0.46
    accuracy: 0.46
    accuracy: 0.46
    accuracy: 0.46
    accuracy: 0.48
    accuracy: 0.48
    accuracy: 0.48
    accuracy: 0.48
    accuracy: 0.48
    accuracy: 0.48
    accuracy: 0.52
    accuracy: 0.52
    accuracy: 0.54
    accuracy: 0.54
    accuracy: 0.54
    accuracy: 0.54
    accuracy: 0.56
    accuracy: 0.56
    accuracy: 0.56
    accuracy: 0.58
    accuracy: 0.6
    accuracy: 0.6
    accuracy: 0.62
    accuracy: 0.62
    accuracy: 0.62
    accuracy: 0.62
    accuracy: 0.62
    accuracy: 0.62
    accuracy: 0.62
    accuracy: 0.62
    accuracy: 0.62
    accuracy: 0.62
    accuracy: 0.62
    accuracy: 0.62
    accuracy: 0.64
    accuracy: 0.66
    accuracy: 0.66
    accuracy: 0.66
    accuracy: 0.66
    accuracy: 0.66
    accuracy: 0.66
    accuracy: 0.68
    accuracy: 0.7
    accuracy: 0.7
    accuracy: 0.7
    accuracy: 0.7
    accuracy: 0.72
    accuracy: 0.74
    accuracy: 0.76
    accuracy: 0.78
    accuracy: 0.78
    accuracy: 0.8
    accuracy: 0.8
    accuracy: 0.82
    accuracy: 0.82
    accuracy: 0.82
    accuracy: 0.84
    accuracy: 0.84
    accuracy: 0.84
    accuracy: 0.84

    Process finished with exit code 0

If it is unclear why the following function is used as the loss function:

tf.nn.softmax_cross_entropy_with_logits
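
For a single sample with logits z_1 to z_10 (a row of y_predict) and a one-hot label y (a row of y_true), this function first turns the logits into probabilities with softmax and then takes the cross-entropy with the label; step 3 averages the result over the N samples in the batch. Writing c for the true class, the cross-entropy collapses to the negative log-probability of that class, and its gradient with respect to the logits is simply p_j - y_j, which is what makes it convenient for gradient descent:

$$
p_j = \frac{e^{z_j}}{\sum_{k=1}^{10} e^{z_k}}, \qquad
H(y, p) = -\sum_{j=1}^{10} y_j \log p_j, \qquad
\text{loss} = \frac{1}{N} \sum_{n=1}^{N} H\big(y^{(n)}, p^{(n)}\big)
$$

$$
H(y, p) = -\log p_c = -z_c + \log \sum_{k=1}^{10} e^{z_k}, \qquad
\frac{\partial H}{\partial z_j} = -y_j + \frac{e^{z_j}}{\sum_{k=1}^{10} e^{z_k}} = p_j - y_j
$$

The loss is therefore small when the model assigns high probability to the correct digit and grows without bound as that probability approaches 0.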