The previous section explained how to restrict a user's access privileges in the dashboard. This section walks through a concrete case: how to create a user with read-only privileges.

Although you can create all kinds of flexible user permissions to suit your situation, a real production environment often needs only two users: one that owns all rights to the cluster, as created above, and an ordinary user with read-only privileges. The read-only permissions are assigned to developers so that they can clearly see the running status of their projects.

Before starting this section, think about how you could achieve this with what we have covered so far. You may have some ideas, but actually implementing them is not that simple and may take several rounds of modification and testing. In fact, Kubernetes ships with a default ClusterRole called `view`, which is effectively a read-only role. Let's take a look at it:

    [centos@k8s-master ~]$ kubectl describe clusterrole view
    Name:         view
    Labels:       kubernetes.io/bootstrapping=rbac-defaults
                  rbac.authorization.k8s.io/aggregate-to-edit=true
    Annotations:  rbac.authorization.kubernetes.io/autoupdate: true
    PolicyRule:
      Resources                                Non-Resource URLs  Resource Names  Verbs
      ---------                                -----------------  --------------  -----
      bindings                                 []                 []              [get list watch]
      configmaps                               []                 []              [get list watch]
      endpoints                                []                 []              [get list watch]
      events                                   []                 []              [get list watch]
      limitranges                              []                 []              [get list watch]
      namespaces/status                        []                 []              [get list watch]
      namespaces                               []                 []              [get list watch]
      persistentvolumeclaims                   []                 []              [get list watch]
      pods/log                                 []                 []              [get list watch]
      pods/status                              []                 []              [get list watch]
      pods                                     []                 []              [get list watch]
      replicationcontrollers/scale             []                 []              [get list watch]
      replicationcontrollers/status            []                 []              [get list watch]
      replicationcontrollers                   []                 []              [get list watch]
      resourcequotas/status                    []                 []              [get list watch]
      resourcequotas                           []                 []              [get list watch]
      serviceaccounts                          []                 []              [get list watch]
      services                                 []                 []              [get list watch]
      controllerrevisions.apps                 []                 []              [get list watch]
      daemonsets.apps                          []                 []              [get list watch]
      deployments.apps/scale                   []                 []              [get list watch]
      deployments.apps                         []                 []              [get list watch]
      replicasets.apps/scale                   []                 []              [get list watch]
      replicasets.apps                         []                 []              [get list watch]
      statefulsets.apps/scale                  []                 []              [get list watch]
      statefulsets.apps                        []                 []              [get list watch]
      horizontalpodautoscalers.autoscaling     []                 []              [get list watch]
      cronjobs.batch                           []                 []              [get list watch]
      jobs.batch                               []                 []              [get list watch]
      daemonsets.extensions                    []                 []              [get list watch]
      deployments.extensions/scale             []                 []              [get list watch]
      deployments.extensions                   []                 []              [get list watch]
      ingresses.extensions                     []                 []              [get list watch]
      networkpolicies.extensions               []                 []              [get list watch]
      replicasets.extensions/scale             []                 []              [get list watch]
      replicasets.extensions                   []                 []              [get list watch]
      replicationcontrollers.extensions/scale  []                 []              [get list watch]
      networkpolicies.networking.k8s.io        []                 []              [get list watch]
      poddisruptionbudgets.policy              []                 []              [get list watch]
    [centos@k8s-master ~]$

As you can see, every permission it holds is `get`, `list`, or `watch`; it has no write permissions at all. So, just as we bound the first user to `cluster-admin`, we can create a new user and bind it to the default `view` role.

    kubectl create sa dashboard-readonly -n kube-system
    kubectl create clusterrolebinding dashboard-readonly --clusterrole=view --serviceaccount=kube-system:dashboard-readonly

The commands above create a user called `dashboard-readonly` and bind it to the `view` role. To see the secret of the `dashboard-readonly` user, run `kubectl describe secret -n kube-system dashboard-readonly-token-<random string>` (list all secrets with `kubectl get secret -n kube-system` to find the exact name). The secret contains a token; copy it into the dashboard login screen to log in.
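The two `kubectl create` commands can also be written declaratively. The manifest below is a minimal sketch of the same ServiceAccount and ClusterRoleBinding, which is convenient if you keep cluster configuration under version control. The third object goes beyond the original text and is an assumption about your cluster: on Kubernetes 1.24 and later, token Secrets are no longer generated automatically for ServiceAccounts, so a Secret of type `kubernetes.io/service-account-token` has to be created explicitly if you want a long-lived login token. The Secret name `dashboard-readonly-token` is hypothetical.

```yaml
# Equivalent to: kubectl create sa dashboard-readonly -n kube-system
apiVersion: v1
kind: ServiceAccount
metadata:
  name: dashboard-readonly
  namespace: kube-system
---
# Equivalent to: kubectl create clusterrolebinding dashboard-readonly
#   --clusterrole=view --serviceaccount=kube-system:dashboard-readonly
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: dashboard-readonly
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: view
subjects:
- kind: ServiceAccount
  name: dashboard-readonly
  namespace: kube-system
---
# Assumption: only needed on clusters that do not auto-generate token Secrets.
# The control plane fills in the token once the Secret exists.
apiVersion: v1
kind: Secret
metadata:
  name: dashboard-readonly-token   # hypothetical name
  namespace: kube-system
  annotations:
    kubernetes.io/service-account.name: dashboard-readonly
type: kubernetes.io/service-account-token
```

Saved to a file (for example `dashboard-readonly.yaml`, a name chosen here for illustration), it can be applied with `kubectl apply -f dashboard-readonly.yaml`.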

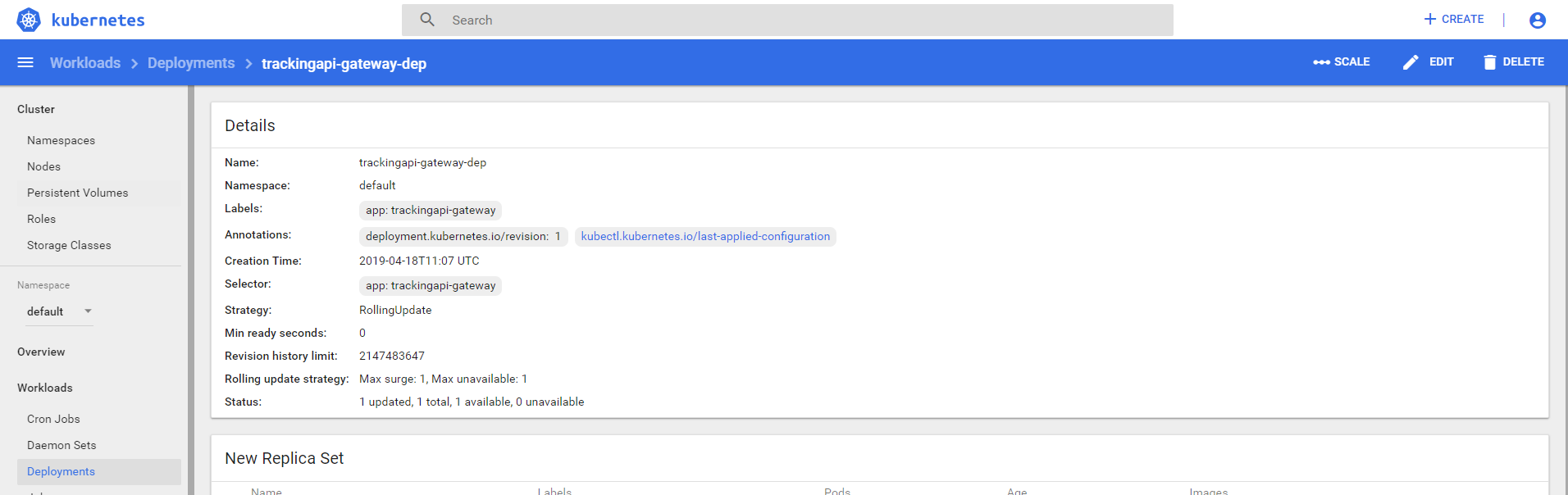

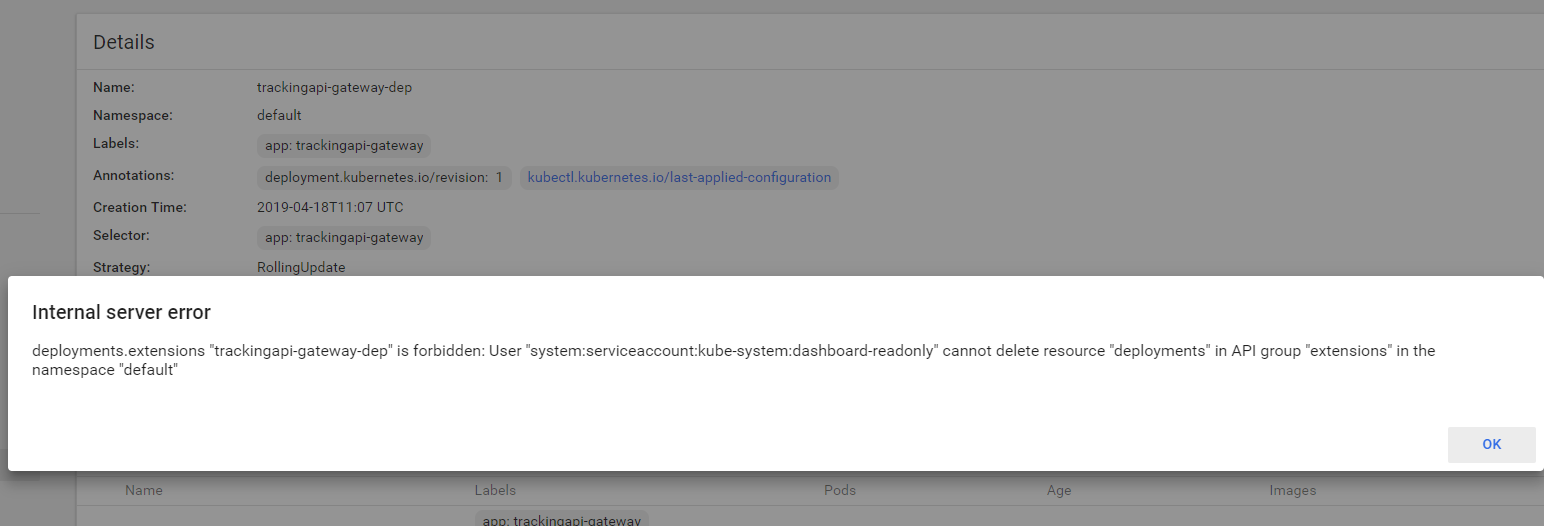

Open any deployment and you will see that the scale, edit, and delete actions still appear in the upper-left corner. Don't worry: if you try to edit or scale, the operation fails even though no message is shown, and if you click delete, an error message appears, as shown below, indicating that the `dashboard-readonly` user does not have permission to delete.

Manually create a user with read-only privileges in the true sense

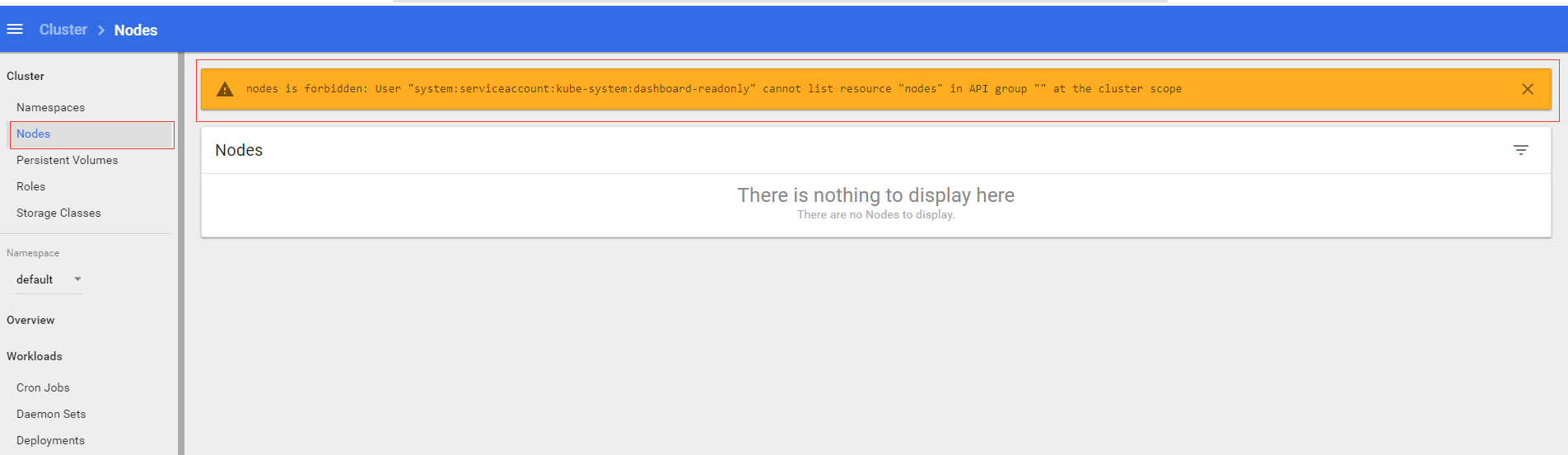

Earlier we created a read-only user by binding it to the `view` role, but you will find that it is not a read-only user in the full sense: it lacks permissions on certain cluster-level resources, such as Nodes and Persistent Volumes. For example, if you click the Nodes label on the left, a warning message appears.
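The gap can also be checked against the API server directly, without going through the dashboard. The sketch below uses a `SubjectAccessReview` object, which asks the cluster whether a given user may perform a given action. The user string follows the standard `system:serviceaccount:<namespace>:<name>` form for the `dashboard-readonly` ServiceAccount created earlier; the `default` namespace in the second review is an assumption made for illustration.

```yaml
# Can dashboard-readonly list nodes (a cluster-level resource)?
apiVersion: authorization.k8s.io/v1
kind: SubjectAccessReview
spec:
  user: system:serviceaccount:kube-system:dashboard-readonly
  resourceAttributes:
    group: ""          # core API group
    resource: nodes
    verb: list
---
# Can dashboard-readonly delete deployments in a namespace?
apiVersion: authorization.k8s.io/v1
kind: SubjectAccessReview
spec:
  user: system:serviceaccount:kube-system:dashboard-readonly
  resourceAttributes:
    group: apps
    resource: deployments
    verb: delete
    namespace: default   # assumed namespace, adjust to your own
```

Submitting the file as an administrator with `kubectl create -f <file> -o yaml` returns each review with a `status.allowed` field. With only the `view` role bound, both should come back as `false`.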

Now let's manually create a user that also has read-only access to cluster-level resources.

First, let's create a ServiceAccount named `dashboard-real-readonly`:

    kubectl create sa dashboard-real-readonly -n kube-system

Next, create a ClusterRole called `dashboard-viewonly`:

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: dashboard-viewonly
    rules:
    - apiGroups:
      - ""
      resources:
      - configmaps
      - endpoints
      - persistentvolumeclaims
      - pods
      - replicationcontrollers
      - replicationcontrollers/scale
      - serviceaccounts
      - services
      - nodes
      - persistentvolumes
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - ""
      resources:
      - bindings
      - events
      - limitranges
      - namespaces/status
      - pods/log
      - pods/status
      - replicationcontrollers/status
      - resourcequotas
      - resourcequotas/status
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - ""
      resources:
      - namespaces
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - apps
      resources:
      - daemonsets
      - deployments
      - deployments/scale
      - replicasets
      - replicasets/scale
      - statefulsets
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - autoscaling
      resources:
      - horizontalpodautoscalers
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - batch
      resources:
      - cronjobs
      - jobs
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - extensions
      resources:
      - daemonsets
      - deployments
      - deployments/scale
      - ingresses
      - networkpolicies
      - replicasets
      - replicasets/scale
      - replicationcontrollers/scale
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - policy
      resources:
      - poddisruptionbudgets
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - networking.k8s.io
      resources:
      - networkpolicies
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - storage.k8s.io
      resources:
      - storageclasses
      - volumeattachments
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - rbac.authorization.k8s.io
      resources:
      - clusterrolebindings
      - clusterroles
      - roles
      - rolebindings
      verbs:
      - get
      - list
      - watch

Then bind it to the `dashboard-real-readonly` ServiceAccount:

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: kubernetes-dashboard
      labels:
        k8s-app: kubernetes-dashboard
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: dashboard-viewonly
    subjects:
    - kind: ServiceAccount
      name: dashboard-real-readonly
      namespace: kube-system

After that, retrieve the user's token and log in. We have covered this several times, including in the earlier sections of this chapter, so refer to those; we will not repeat it here.
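Because RBAC permissions are additive, the long ClusterRole above is not the only way to reach this result. As a sketch of an alternative (not the approach used in this section), the same ServiceAccount could be bound to the built-in `view` role and, in addition, to a small ClusterRole that grants read access only to the resources that `dashboard-viewonly` lists beyond those shown for `view`. The names `dashboard-cluster-readonly`, `dashboard-real-readonly-view`, and `dashboard-real-readonly-cluster` are hypothetical.

```yaml
# Read-only access to the cluster-level resources the built-in view role omits.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: dashboard-cluster-readonly   # hypothetical name
rules:
- apiGroups: [""]
  resources: ["nodes", "persistentvolumes"]
  verbs: ["get", "list", "watch"]
- apiGroups: ["storage.k8s.io"]
  resources: ["storageclasses", "volumeattachments"]
  verbs: ["get", "list", "watch"]
- apiGroups: ["rbac.authorization.k8s.io"]
  resources: ["clusterrolebindings", "clusterroles", "roles", "rolebindings"]
  verbs: ["get", "list", "watch"]
---
# Binding 1: everything the built-in view role already grants.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: dashboard-real-readonly-view   # hypothetical name
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: view
subjects:
- kind: ServiceAccount
  name: dashboard-real-readonly
  namespace: kube-system
---
# Binding 2: the extra cluster-level read permissions defined above.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: dashboard-real-readonly-cluster   # hypothetical name
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: dashboard-cluster-readonly
subjects:
- kind: ServiceAccount
  name: dashboard-real-readonly
  namespace: kube-system
```

The trade-off is that `view` carries the `autoupdate` annotation shown earlier, so what it grants may change between Kubernetes versions, whereas a hand-written role such as `dashboard-viewonly` stays exactly as you wrote it.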