Reposted from http://coolshell.cn/articles/7459.html

Two days ago I published a post on the rsync algorithm, and afterwards I wanted to look at data compression algorithms, starting with a classic one: Huffman coding. I believe most of us have heard of David Huffman and his compression algorithm, the Huffman Code. It compresses data using character frequencies, a priority queue, and a binary tree; that binary tree is also called a Huffman tree, a kind of weighted tree. Having graduated a long time ago, I had forgotten this algorithm, and when I searched online, the Chinese community did not seem to have an article that explains it clearly, especially the construction of the tree. Then I happened to see an overseas article, "A Simple Example of Huffman Code on a String", whose example is easy to understand and quite good, so I am reposting it here. Note that I have not translated the article in full.

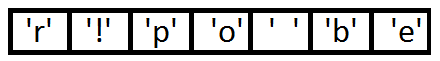

Let's go straight to an example. Suppose we need to compress the following string:

“beep boop beer!”

First, we count how many times each character appears, which gives us the table below:

| Character | Frequency |
| --- | --- |
| 'b' | 3 |
| 'e' | 4 |
| 'p' | 2 |
| ' ' | 2 |
| 'o' | 2 |
| 'r' | 1 |
| '!' | 1 |
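
The counting step can be sketched in a few lines of Python. This is only an illustration, not the original author's code (which is C99); the variable names `text` and `frequencies` are ours.

```python
from collections import Counter

text = "beep boop beer!"

# Counter maps each character to the number of times it occurs in the string.
frequencies = Counter(text)

# Counter keeps characters in order of first appearance.
for char, count in frequencies.items():
    print(repr(char), count)
```

The printed pairs should agree with the frequency table above, and the counts add up to the 15 characters of the string.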

Then we put these entries into a priority queue, using the number of occurrences as the priority. Here the priority queue is simply an array sorted by priority; when two priorities are equal, the entries are ordered by their order of appearance. This is the resulting priority queue:

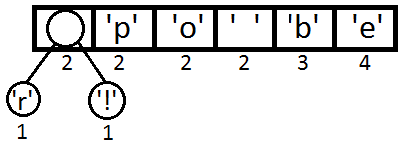
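
As a rough sketch, the queue can be modelled as a sorted list. We assume here that "order of appearance" means the position of a character's first occurrence in the string; the order of tied entries in the figure may differ from what this sketch produces.

```python
from collections import Counter

text = "beep boop beer!"
frequencies = Counter(text)

# Sort by count first; break ties by where the character first appears.
# The tie-break rule is our reading of the text, not a confirmed detail.
queue = sorted(
    frequencies.items(),
    key=lambda item: (item[1], text.index(item[0])),
)

print(queue)
```

The least frequent characters ('r' and '!') end up at the front of the list, which is where the algorithm picks its next two elements from.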

Then comes the algorithm itself: turning this priority queue into a binary tree. We always take the first two elements from the queue and build a small binary tree from them (the first element becomes the left node, the second becomes the right node). We then add the priorities of the two elements together and put the new node back into the priority queue (note again that the priority here is the number of times a character appears). After the first step we get the following:

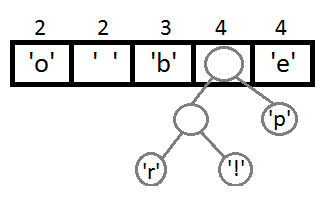

Similarly, we take out the first two elements again, form a node with priority 2 + 2 = 4, and put it back into the priority queue:

We continue with the algorithm (as we can see, the tree is built from the bottom up):

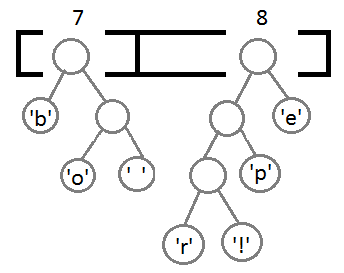

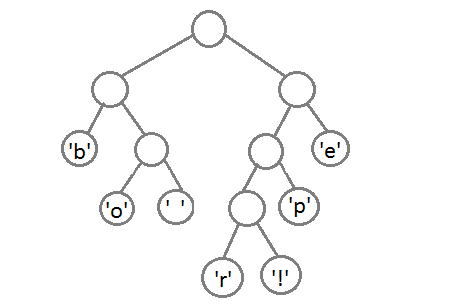

Eventually we get the following binary tree:

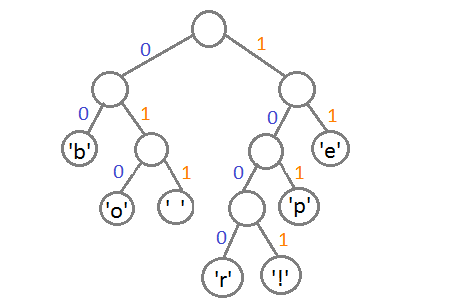
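
The merging loop can be sketched with Python's `heapq` module. This is a minimal sketch and not the article's C99 source: `build_tree` is a name we made up, the tree is stored as nested tuples, and the input is assumed to be non-empty. A heap breaks ties differently from the sorted array in the figures, so the tree shape may differ from the pictures, although the total encoded length comes out the same.

```python
import heapq
from collections import Counter
from itertools import count


def build_tree(text):
    """Return (total_weight, tree); a leaf is a character, an internal node is (left, right)."""
    tie = count()  # increasing counter, so equal weights never compare the nodes themselves
    heap = [(freq, next(tie), char) for char, freq in Counter(text).items()]
    heapq.heapify(heap)
    while len(heap) > 1:
        # Take the two lowest-priority nodes: first becomes left, second becomes right.
        w1, _, left = heapq.heappop(heap)
        w2, _, right = heapq.heappop(heap)
        # Put the merged node back with the sum of the two priorities.
        heapq.heappush(heap, (w1 + w2, next(tie), (left, right)))
    weight, _, tree = heap[0]
    return weight, tree


weight, tree = build_tree("beep boop beer!")
print(weight)  # 15, the length of the input string
```

The root weight equals the number of characters in the input, because every merge adds the two child weights together.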

At this point, we label every left branch of the tree 0 and every right branch 1, so that walking down the tree gives us the code of each character. For example, the code for 'b' is 00, the code for 'p' is 101, and the code for 'r' is 1000. We can see that the more frequent a character is, the higher it sits in the tree and the shorter its code; the less frequent it is, the lower it sits and the longer its code.

In the end we get the following code table:

| Character | Code |
| --- | --- |
| 'b' | 00 |
| 'e' | 11 |
| 'p' | 101 |
| ' ' | 011 |
| 'o' | 010 |
| 'r' | 1000 |
| '!' | 1001 |
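
To illustrate the traversal, here is a small Python sketch. The nested tuple `tree` is our own reconstruction of the final tree, worked backwards from the code table above (the figure itself is not machine-readable), and `assign_codes` is a hypothetical helper name.

```python
# Leaves are characters; internal nodes are (left, right) pairs.
# This shape is reconstructed from the code table, so treat it as an assumption.
tree = (("b", ("o", " ")), ((("r", "!"), "p"), "e"))


def assign_codes(node, prefix="", table=None):
    """Walk the tree, appending 0 for a left branch and 1 for a right branch."""
    if table is None:
        table = {}
    if isinstance(node, str):
        table[node] = prefix  # reached a leaf: the path so far is its code
    else:
        left, right = node
        assign_codes(left, prefix + "0", table)
        assign_codes(right, prefix + "1", table)
    return table


codes = assign_codes(tree)
for char, bits in codes.items():
    print(repr(char), bits)
```

With this tree, the walk gives 00 for 'b', 101 for 'p' and 1000 for 'r', the same codes as in the table.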

Note that encoding works bit by bit, and so does decoding. For example, the bit string "1011110111" decodes to "pepe" (101 = 'p', 11 = 'e', 101 = 'p', 11 = 'e'). So we need to build our Huffman encoding and decoding dictionaries from the binary tree.
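
Bit-by-bit decoding can be sketched as follows. The `codes` dictionary is copied from the code table above; `decode_table` and `decode` are names we chose for this illustration, and the bits are kept as a string of '0' and '1' characters for readability rather than as packed bytes.

```python
codes = {"b": "00", "e": "11", "p": "101", " ": "011",
         "o": "010", "r": "1000", "!": "1001"}

# Invert the encoding dictionary to get the decoding dictionary.
decode_table = {bits: char for char, bits in codes.items()}


def decode(bitstring):
    """Read one bit at a time and emit a character as soon as a code matches."""
    out = []
    current = ""
    for bit in bitstring:
        current += bit
        if current in decode_table:
            out.append(decode_table[current])
            current = ""
    if current:
        raise ValueError("trailing bits do not form a complete code")
    return "".join(out)


print(decode("1011110111"))  # pepe
```

Emitting a character as soon as the collected bits match a code only works because no code is a prefix of another one.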

One thing to note here is that the Huffman codes of the individual characters never conflict: no code is a prefix of another code. Otherwise we would be in big trouble, because the encoded bit stream contains no delimiters between codes.
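
The prefix property is easy to check by brute force. The sketch below uses the code table from this article; `is_prefix_free` is a hypothetical helper name, and the pairwise comparison is chosen for clarity rather than speed.

```python
from itertools import permutations

codes = {"b": "00", "e": "11", "p": "101", " ": "011",
         "o": "010", "r": "1000", "!": "1001"}


def is_prefix_free(table):
    """Return True if no code in the table is a prefix of a different entry's code."""
    return not any(
        longer.startswith(shorter)
        for shorter, longer in permutations(table.values(), 2)
    )


print(is_prefix_free(codes))                  # True
print(is_prefix_free({"a": "0", "b": "01"}))  # False: "0" is a prefix of "01"
```

In the second table a decoder that sees 0 cannot tell whether it has read 'a' or the first bit of 'b', which is exactly the conflict described above.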

So, for our original string "beep boop beer!":

Its plain binary representation is: 0110 0010 0110 0101 0110 0101 0111 0000 0010 0000 0110 0010 0110 1111 0110 1111 0111 0000 0010 0000 0110 0010 0110 0101 0110 0101 0111 0010 0010 0001

Its Huffman encoding is: 0011 1110 1011 0001 0010 1010 1100 1111 1000 1001

From this example we can see that the compression ratio is quite substantial: 120 bits are reduced to 40 bits.
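
The two bit strings can be reproduced with a short Python sketch. It assumes 8-bit ASCII for the plain representation and uses the code table from this article; the names `plain` and `encoded` are ours, and the size of the code table itself is not counted.

```python
codes = {"b": "00", "e": "11", "p": "101", " ": "011",
         "o": "010", "r": "1000", "!": "1001"}

text = "beep boop beer!"

# Plain representation: every character as 8 bits.
plain = "".join(format(ord(ch), "08b") for ch in text)

# Huffman representation: every character replaced by its code from the table.
encoded = "".join(codes[ch] for ch in text)

print(len(plain), len(encoded))  # 120 40
print(encoded)
```

A real compressor would also have to store or transmit the code table (or the tree) so that the receiver can decode the bits.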

The author provides source code that you can look at (C99 standard): Download the Source Files

Reproduced in: https://www.cnblogs.com/ericsun/p/3333910.html